Section 5: Distributions of Functions of Random Variables


As the name of this section suggests, we will now spend some time learning how to find the probability distribution of functions of random variables. For example, we might know the probability density function of \(X\), but want to know instead the probability density function of \(u(X)=X^2\). We'll learn several different techniques for finding the distribution of functions of random variables, including the distribution function technique, the change-of-variable technique and the moment-generating function technique.

The more important functions of random variables that we'll explore will be those involving random variables that are independent and identically distributed. For example, if \(X_1\) is the weight of a randomly selected individual from the population of males, \(X_2\) is the weight of another randomly selected individual from the population of males, ..., and \(X_n\) is the weight of yet another randomly selected individual from the population of males, then we might be interested in learning how the random function:

\(\bar{X}=\dfrac{X_1+X_2+\cdots+X_n}{n}\)

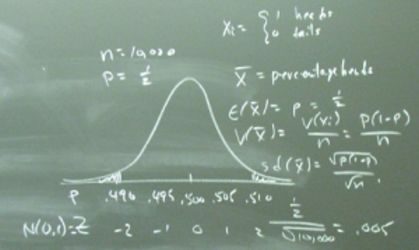

is distributed. We'll first learn how \(\bar{X}\) is distributed assuming that the \(X_i\)'s are normally distributed. Then, we'll strip away the assumption of normality, and use a classic theorem, called the Central Limit Theorem, to show that, for large \(n\), the function:

\(\dfrac{\sqrt{n}(\bar{X}-\mu)}{\sigma}\)

approximately follows the standard normal distribution, where \(\mu\) and \(\sigma\) are the common mean and standard deviation of the \(X_i\)'s. Finally, we'll use the Central Limit Theorem to justify approximating discrete distributions, such as the binomial distribution and the Poisson distribution, with the normal distribution.

Lesson 22: Functions of One Random Variable

Overview

We'll begin our exploration of the distributions of functions of random variables by focusing on simple functions of one random variable. For example, if \(X\) is a continuous random variable and we take a function of \(X\), say:

\(Y=u(X)\)

then \(Y\) is also a random variable that has its own probability distribution; for the transformations in this lesson, \(Y\) is again continuous. We'll learn how to find the probability density function of \(Y\) using two different techniques, namely the distribution function technique and the change-of-variable technique. At first, we'll focus only on one-to-one functions. Then, once we have that mastered, we'll learn how to modify the change-of-variable technique to find the probability density function of a random variable that is derived from a two-to-one function. Finally, we'll learn how the inverse of a cumulative distribution function can help us simulate random numbers that follow a particular probability distribution.

Objectives

- To learn how to use the distribution function technique to find the probability distribution of \(Y=u(X)\), a one-to-one transformation of a random variable \(X\).

- To learn how to use the change-of-variable technique to find the probability distribution of \(Y=u(X)\), a one-to-one transformation of a random variable \(X\).

- To learn how to use the change-of-variable technique to find the probability distribution of \(Y=u(X)\), a two-to-one transformation of a random variable \(X\).

- To learn how to use a cumulative distribution function to simulate random numbers that follow a particular probability distribution.

- To understand all of the proofs in the lesson.

- To be able to apply the methods learned in the lesson to new problems.

22.1 - Distribution Function Technique

You might not have been aware of it at the time, but we have already used the distribution function technique at least twice in this course to find the probability density function of a function of a random variable. For example, we used the distribution function technique to show that:

\(Z=\dfrac{X-\mu}{\sigma}\)

follows a standard normal distribution when \(X\) is normally distributed with mean \(\mu\) and standard deviation \(\sigma\). And, we used the distribution function technique to show that, when \(Z\) follows the standard normal distribution:

\(Z^2\)

follows the chi-square distribution with 1 degree of freedom. In summary, we used the distribution function technique to find the p.d.f. of the random function \(Y=u(X)\) by:

- First, finding the cumulative distribution function:

  \(F_Y(y)=P(Y\leq y)\)

- Then, differentiating the cumulative distribution function \(F_Y(y)\) to get the probability density function \(f_Y(y)\). That is:

  \(f_Y(y)=F'_Y(y)\)
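The two steps can also be checked numerically. The following Python sketch (standard library only) applies them to the first example mentioned above, \(Z=(X-\mu)/\sigma\): step 1 writes \(F_Z(z)=P(X\leq \mu+\sigma z)=F_X(\mu+\sigma z)\) using the normal c.d.f. expressed through `math.erf`, and step 2 approximates the derivative with a central difference. The parameter values `MU = 100` and `SIGMA = 15`, the step size `h`, and the helper names are illustrative assumptions, not part of the lesson.

```python
import math

MU, SIGMA = 100.0, 15.0  # illustrative parameter values (assumed, not from the lesson)

def cdf_x(x):
    """C.d.f. of X ~ N(MU, SIGMA^2), written with the error function."""
    return 0.5 * (1 + math.erf((x - MU) / (SIGMA * math.sqrt(2))))

def cdf_z(z):
    """Step 1: F_Z(z) = P((X - MU)/SIGMA <= z) = P(X <= MU + SIGMA*z) = F_X(MU + SIGMA*z)."""
    return cdf_x(MU + SIGMA * z)

def pdf_from_cdf(cdf, t, h=1e-5):
    """Step 2: approximate the p.d.f. as the derivative of the c.d.f. (central difference)."""
    return (cdf(t + h) - cdf(t - h)) / (2 * h)

def std_normal_pdf(z):
    """Standard normal p.d.f., used only for comparison."""
    return math.exp(-z * z / 2) / math.sqrt(2 * math.pi)

for z in (-1.5, 0.0, 2.0):
    print(z, pdf_from_cdf(cdf_z, z), std_normal_pdf(z))
```

The two printed columns should agree to several decimal places; the central difference is used only as the simplest numerical stand-in for \(F'_Z\).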

Now that we've officially stated the distribution function technique, let's take a look at a few more examples.

Example 22-1

Let \(X\) be a continuous random variable with the following probability density function:

\(f(x)=3x^2\)

for \(0<x<1\). What is the probability density function of \(Y=X^2\)?

Solution

If you look at the graph of the function \(Y=X^2\) over \(0<x<1\), you might note that (1) the function is an increasing function of \(X\), and (2) \(0<y<1\). That noted, let's now use the distribution function technique to find the p.d.f. of \(Y\). First, we find the cumulative distribution function of \(Y\):

\(F_Y(y)=P(Y\leq y)=P(X^2\leq y)=P(X\leq \sqrt{y})=F_X(\sqrt{y})=\int_0^{\sqrt{y}} 3t^2\,dt=\left[t^3\right]_0^{\sqrt{y}}=y^{3/2}\)

Having shown that the cumulative distribution function of \(Y\) is:

\(F_Y(y)=y^{3/2}\)

for \(0<y<1\), we now just need to differentiate \(F(y)\) to get the probability density function \(f(y)\). Doing so, we get:

\(f_Y(y)=F'_Y(y)=\dfrac{3}{2} y^{1/2}\)

for \(0<y<1\). Our calculation is complete! We have successfully used the distribution function technique to find the p.d.f. of \(Y\), when \(Y\) was an increasing function of \(X\). (By the way, you might find it reassuring to verify that \(f_Y(y)\) does indeed integrate to 1 over the support of \(Y\); here, \(\int_0^1 \frac{3}{2}y^{1/2}\,dy=\left[y^{3/2}\right]_0^1=1\). In general, that's not a bad thing to check.)
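As a numerical version of that sanity check (a standard-library Python sketch; the helper names are hypothetical), the code below rebuilds \(F_Y(y)=F_X(\sqrt{y})\) with \(F_X(x)=x^3\), compares its central-difference derivative with \(f_Y(y)=\tfrac{3}{2}y^{1/2}\), and approximates \(\int_0^1 f_Y(y)\,dy\) with a midpoint rule.

```python
import math

def cdf_x(x):
    """F_X(x) = x^3 on 0 < x < 1, from f(x) = 3x^2 (clamped outside the support)."""
    return min(max(x, 0.0), 1.0) ** 3

def cdf_y(y):
    """F_Y(y) = P(X^2 <= y) = P(X <= sqrt(y)) = F_X(sqrt(y)) for 0 < y < 1."""
    return cdf_x(math.sqrt(y))

def pdf_y(y):
    """Result of Example 22-1: f_Y(y) = (3/2) y^(1/2) on 0 < y < 1."""
    return 1.5 * math.sqrt(y)

# Check 1: the central-difference derivative of F_Y agrees with f_Y.
h = 1e-6
for y in (0.1, 0.5, 0.9):
    print(y, (cdf_y(y + h) - cdf_y(y - h)) / (2 * h), pdf_y(y))

# Check 2: f_Y integrates to (approximately) 1 over 0 < y < 1 (midpoint rule).
n = 100_000
total = sum(pdf_y((k + 0.5) / n) for k in range(n)) / n
print(total)
```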

One thing you might note in the last example is that great care was used to subscript the cumulative distribution functions and probability density functions with either an \(X\) or a \(Y\) to indicate to which random variable the functions belonged. For example, in finding the cumulative distribution function of \(Y\), we started with the cumulative distribution function of \(Y\), and ended up with a cumulative distribution function of \(X\)! If we didn't use the subscripts, we would have had a good chance of throwing up our hands and botching the calculation. In short, using subscripts is a good habit to follow!

Example 22-2

Let \(X\) be a continuous random variable with the following probability density function:

\(f(x)=3(1-x)^2\)

for \(0<x<1\). What is the probability density function of \(Y=(1-X)^3\)?

Solution

If you look at the graph of the function:

\(Y=(1-X)^3\)

over \(0<x<1\), you might note that the function is a decreasing function of \(X\), and \(0<y<1\). That noted, let's now use the distribution function technique to find the p.d.f. of \(Y\). First, we find the cumulative distribution function of \(Y\):

\(F_Y(y)=P(Y\leq y)=P((1-X)^3\leq y)=P(1-X\leq y^{1/3})=P(X\geq 1-y^{1/3})=\int_{1-y^{1/3}}^1 3(1-x)^2\,dx=\left[-(1-x)^3\right]_{1-y^{1/3}}^1=y\)

Having shown that the cumulative distribution function of \(Y\) is:

\(F_Y(y)=y\)

for \(0<y<1\), we now just need to differentiate \(F(y)\) to get the probability density function \(f(y)\). Doing so, we get:

\(f_Y(y)=F'_Y(y)=1\)

for \(0<y<1\). That is, \(Y\) is a \(U(0,1)\) random variable. (Again, you might find it reassuring to verify that \(f(y)\) does indeed integrate to 1 over the support of \(y\).)
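A similar check for this example, again as a hypothetical standard-library sketch: with \(F_X(x)=1-(1-x)^3\) (the integral of \(3(1-t)^2\) from 0 to \(x\)), the decreasing transformation gives \(F_Y(y)=1-F_X(1-y^{1/3})\), which should reproduce the \(U(0,1)\) c.d.f. \(F_Y(y)=y\) up to floating-point rounding.

```python
def cdf_x(x):
    """F_X(x) = 1 - (1 - x)^3 for 0 < x < 1, from f(x) = 3(1 - x)^2."""
    return 1.0 - (1.0 - x) ** 3

def cdf_y(y):
    """F_Y(y) = P((1 - X)^3 <= y) = P(X >= 1 - y^(1/3)) = 1 - F_X(1 - y^(1/3)), 0 < y < 1."""
    return 1.0 - cdf_x(1.0 - y ** (1.0 / 3.0))

for y in (0.1, 0.25, 0.5, 0.75, 0.9):
    print(y, cdf_y(y))  # second column should equal y up to rounding, i.e. Y ~ U(0, 1)
```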

22.2 - Change-of-Variable Technique

On the last page, we used the distribution function technique in two different examples. In the first example, the transformation of \(X\) involved an increasing function, while in the second example, the transformation of \(X\) involved a decreasing function. On this page, we'll generalize what we did there first for an increasing function and then for a decreasing function. The generalizations lead to what is called the change-of-variable technique.

Generalization for an Increasing Function

Let \(X\) be a continuous random variable with a generic p.d.f. \(f(x)\) defined over the support \(c_1<x<c_2\). And, let \(Y=u(X)\) be a continuous, increasing function of \(X\) with inverse function \(X=v(Y)\). Here's a picture of what the continuous, increasing function might look like:

The blue curve, of course, represents the continuous and increasing function \(Y=u(X)\). If you put an \(x\)-value, such as \(c_1\) or \(c_2\), into the function \(Y=u(X)\), you get a \(y\)-value, such as \(u(c_1)\) or \(u(c_2)\). And, because the function is continuous and strictly increasing, it has an inverse function \(X=v(Y)\): if you put a \(y\)-value into the function \(X=v(Y)\), you get an \(x\)-value, such as \(v(y)\).

Okay, now that we have described the scenario, let's derive the distribution function of \(Y\). It is:

\(F_Y(y)=P(Y\leq y)=P(u(X)\leq y)=P(X\leq v(y))=\int_{c_1}^{v(y)} f(x)dx\)

for \(d_1=u(c_1)<y<u(c_2)=d_2\). The first equality holds by the definition of the cumulative distribution function of \(Y\). The second equality holds because \(Y=u(X)\). The third equality holds because, as shown in red on the following graph, for the portion of the function for which \(u(X)\le y\), it is also true that \(X\le v(y)\):

And, the last equality holds from the definition of probability for a continuous random variable \(X\). Now, we just have to take the derivative of \(F_Y(y)\), the cumulative distribution function of \(Y\), to get \(f_Y(y)\), the probability density function of \(Y\). The Fundamental Theorem of Calculus, in conjunction with the Chain Rule, tells us that the derivative is:

\(f_Y(y)=F'_Y(y)=f_X(v(y))\cdot v'(y)\)

for \(d_1=u(c_1)<y<u(c_2)=d_2\).

Generalization for a Decreasing Function

Let \(X\) be a continuous random variable with a generic p.d.f. \(f(x)\) defined over the support \(c_1<x<c_2\). And, let \(Y=u(X)\) be a continuous, decreasing function of \(X\) with inverse function \(X=v(Y)\). Here's a picture of what the continuous, decreasing function might look like:

The blue curve, of course, represents the continuous and decreasing function \(Y=u(X)\). Again, if you put an \(x\)-value, such as \(c_1\) or \(c_2\), into the function \(Y=u(X)\), you get a \(y\)-value, such as \(u(c_1)\) or \(u(c_2)\). And, because the function is continuous and strictly decreasing, it has an inverse function \(X=v(Y)\): if you put a \(y\)-value into the function \(X=v(Y)\), you get an \(x\)-value, such as \(v(y)\).

That said, the distribution function of \(Y\) is then:

\(F_Y(y)=P(Y\leq y)=P(u(X)\leq y)=P(X\geq v(y))=1-P(X\leq v(y))=1-\int_{c_1}^{v(y)} f(x)dx\)

for \(d_2=u(c_2)<y<u(c_1)=d_1\). The first equality holds by the definition of the cumulative distribution function of \(Y\). The second equality holds because \(Y=u(X)\). The third equality holds because, as shown in red on the following graph, for the portion of the function for which \(u(X)\le y\), it is also true that \(X\ge v(y)\):

The fourth equality holds from the rule of complementary events. And, the last equality holds from the definition of probability for a continuous random variable \(X\). Now, we just have to take the derivative of \(F_Y(y)\), the cumulative distribution function of \(Y\), to get \(f_Y(y)\), the probability density function of \(Y\). Again, the Fundamental Theorem of Calculus, in conjunction with the Chain Rule, tells us that the derivative is:

\(f_Y(y)=F'_Y(y)=-f_X(v(y))\cdot v'(y)\)

for \(d_2=u(c_2)<y<u(c_1)=d_1\). You might be alarmed that the p.d.f. \(f_Y(y)\) appears to be negative. However, \(v'(y)\) is negative, because \(X=v(Y)\) is a decreasing function of \(Y\). The two negatives therefore cancel, making \(f_Y(y)\) positive.

Phew! We have now derived what is called the change-of-variable technique, first for an increasing function and then for a decreasing function. Because continuous, strictly increasing functions and continuous, strictly decreasing functions are one-to-one, both are invertible. Let's, once and for all, write the change-of-variable technique for a generic invertible function.

Definition. Let \(X\) be a continuous random variable with generic probability density function \(f(x)\) defined over the support \(c_1<x<c_2\). And, let \(Y=u(X)\) be a continuous, invertible (and hence strictly monotonic) function of \(X\) with differentiable inverse function \(X=v(Y)\). Then, using the change-of-variable technique, the probability density function of \(Y\) is:

\(f_Y(y)=f_X(v(y))\times |v'(y)|\)

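defined over the support between \(u(c_1)\) and \(u(c_2)\), that is, \(\min\{u(c_1),u(c_2)\}<y<\max\{u(c_1),u(c_2)\}\). (For a decreasing function, \(u(c_2)<u(c_1)\).)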
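The definition translates directly into a small generic function. In this hypothetical Python sketch, `change_of_variable_pdf` takes the p.d.f. of \(X\) and the inverse function \(v\), approximates \(v'(y)\) with a central difference (an assumption made so that no symbolic algebra library is needed), and returns \(f_X(v(y))\,|v'(y)|\). It is applied to the increasing and decreasing transformations from Examples 22-1 and 22-2.

```python
import math

def change_of_variable_pdf(f_x, v, y, h=1e-6):
    """Return f_Y(y) = f_X(v(y)) * |v'(y)|, approximating v'(y) by a central difference.

    f_x is the p.d.f. of X and v is the inverse of the one-to-one transformation u.
    The caller must pass a y strictly inside the support of Y (farther than h from
    its endpoints), since no support check is done here.
    """
    v_prime = (v(y + h) - v(y - h)) / (2 * h)
    return f_x(v(y)) * abs(v_prime)

# Example 22-1: f(x) = 3x^2 on (0, 1) and Y = X^2, so v(y) = sqrt(y) (increasing).
def f1(x):
    return 3 * x ** 2

# Example 22-2: f(x) = 3(1 - x)^2 on (0, 1) and Y = (1 - X)^3, so v(y) = 1 - y^(1/3) (decreasing).
def f2(x):
    return 3 * (1 - x) ** 2

def v_example2(y):
    return 1 - y ** (1 / 3)

print(change_of_variable_pdf(f1, math.sqrt, 0.49))   # approximately (3/2) * sqrt(0.49) = 1.05
print(change_of_variable_pdf(f2, v_example2, 0.3))   # approximately 1, the U(0, 1) density
```

Taking the absolute value of \(v'(y)\) is what lets the same function handle both the increasing and the decreasing case.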

Having summarized the change-of-variable technique, once and for all, let's revisit our two examples.

Example 22-1 Continued

Let's return to our example in which \(X\) is a continuous random variable with the following probability density function:

\(f(x)=3x^2\)

for \(0<x<1\). Use the change-of-variable technique to find the probability density function of \(Y=X^2\).

Solution

Note that the function:

\(Y=X^2\)

defined over the interval \(0<x<1\) is an invertible function. The inverse function is:

\(x=v(y)=\sqrt{y}=y^{1/2}\)

for \(0<y<1\). (That range is because, when \(x=0, y=0\); and when \(x=1, y=1\)). Now, taking the derivative of \(v(y)\), we get:

\(v'(y)=\dfrac{1}{2} y^{-1/2}\)

Therefore, the change-of-variable technique:

\(f_Y(y)=f_X(v(y))\times |v'(y)|\)

tells us that the probability density function of \(Y\) is:

\(f_Y(y)=3[y^{1/2}]^2\cdot \dfrac{1}{2} y^{-1/2}\)

And, simplifying we get that the probability density function of \(Y\) is:

\(f_Y(y)=\dfrac{3}{2} y^{1/2}\)

for \(0<y<1\). We shouldn't be surprised by this result, as it is the same result that we obtained using the distribution function technique.

Example 22-2 Continued

Let's return to our example in which \(X\) is a continuous random variable with the following probability density function:

\(f(x)=3(1-x)^2\)

for \(0<x<1\). Use the change-of-variable technique to find the probability density function of \(Y=(1-X)^3\).

Solution

Note that the function:

\(Y=(1-X)^3\)

defined over the interval \(0<x<1\) is an invertible function. The inverse function is:

\(x=v(y)=1-y^{1/3}\)

for \(0<y<1\). (That range is because, when \(x=0, y=1\); and when \(x=1, y=0\)). Now, taking the derivative of \(v(y)\), we get:

\(v'(y)=-\dfrac{1}{3} y^{-2/3}\)

Therefore, the change-of-variable technique:

\(f_Y(y)=f_X(v(y))\times |v'(y)|\)

tells us that the probability density function of \(Y\) is:

\(f_Y(y)=3[1-(1-y^{1/3})]^2\cdot |-\dfrac{1}{3} y^{-2/3}|=3y^{2/3}\cdot \dfrac{1}{3} y^{-2/3} \)

And, simplifying, we get that the probability density function of \(Y\) is:

\(f_Y(y)=1\)

for \(0<y<1\). Again, we shouldn't be surprised by this result, as it is the same result that we obtained using the distribution function technique.

22.3 - Two-to-One Functions

You might have noticed that all of the examples we have looked at so far involved monotonic functions that, because of their one-to-one nature, could be inverted. The question naturally arises, then, as to how we modify the change-of-variable technique when the transformation is not monotonic, and therefore not one-to-one. That's what we'll explore on this page! We'll start with an example in which the transformation is two-to-one. We'll use the distribution function technique to find the p.d.f. of the transformed random variable. In so doing, we'll take note of how the change-of-variable technique must be modified to handle the two-to-one portion of the transformation. After summarizing the necessary modification to the change-of-variable technique, we'll take a look at another example using the change-of-variable technique.

Example 22-3

Suppose \(X\) is a continuous random variable with probability density function:

\(f(x)=\dfrac{x^2}{3}\)

for \(-1<x<2\). What is the p.d.f. of \(Y=X^2\)?

Solution

First, note that the transformation:

\(Y=X^2\)

is not one-to-one over the interval \(-1<x<2\):

For example, in the interval \(-1<x<1\), if we take the inverse of \(Y=X^2\), we get:

\(X_1=-\sqrt{Y}=v_1(Y)\)

for \(-1<x<0\), and:

\(X_2=+\sqrt{Y}=v_2(Y)\)

for \(0<x<1\).

As the graph suggests, the transformation is two-to-one when \(0<y<1\), and one-to-one when \(1<y<4\). So, let's use the distribution function technique separately over each of these ranges. First, consider when \(0<y<1\). In that case:

\(F_Y(y)=P(Y\leq y)=P(X^2 \leq y)=P(-\sqrt{y}\leq X \leq \sqrt{y})=F_X(\sqrt{y})-F_X(-\sqrt{y})\)

The first equality holds by the definition of the cumulative distribution function. The second equality holds because the transformation of interest is \(Y=X^2\). The third equality holds, because when \(X^2\le y\), the random variable \(X\) is between the positive and negative square roots of \(y\). And, the last equality holds again by the definition of the cumulative distribution function. Now, taking the derivative of the cumulative distribution function \(F(y)\), we get (from the Fundamental Theorem of Calculus and the Chain Rule) the probability density function \(f(y)\):

\(f_Y(y)=F'_Y(y)=f_X(\sqrt{y})\cdot \dfrac{1}{2} y^{-1/2} + f_X(-\sqrt{y})\cdot \dfrac{1}{2} y^{-1/2}\)

Using what we know about the probability density function of \(X\):

\(f(x)=\dfrac{x^2}{3}\)

we get:

\(f_Y(y)=\dfrac{(\sqrt{y})^2}{3} \cdot \dfrac{1}{2} y^{-1/2}+\dfrac{(-\sqrt{y})^2}{3} \cdot \dfrac{1}{2} y^{-1/2}\)

And, simplifying, we get:

\(f_Y(y)=\dfrac{1}{6}y^{1/2}+\dfrac{1}{6}y^{1/2}=\dfrac{\sqrt{y}}{3}\)

for \(0<y<1\). Note that, in the case of a two-to-one transformation, we need to sum two terms, each of which arises from one of the one-to-one pieces of the transformation.

So, we've found the p.d.f. of \(Y\) when \(0<y<1\). Now, we have to find the p.d.f. of \(Y\) when \(1<y<4\). In that case:

\(F_Y(y)=P(Y\leq y)=P(X^2 \leq y)=P(X\leq \sqrt{y})=F_X(\sqrt{y})\)

The first equality holds by the definition of the cumulative distribution function. The second equality holds because \(Y=X^2\). The third equality holds because, when \(1<y<4\), we have \(-\sqrt{y}<-1<X\), so the event \(X^2\le y\) reduces to \(X \le \sqrt{y}\). And, the last equality holds again by the definition of the cumulative distribution function. Now, taking the derivative of the cumulative distribution function \(F_Y(y)\), we get (from the Fundamental Theorem of Calculus and the Chain Rule) the probability density function \(f_Y(y)\):

\(f_Y(y)=F'_Y(y)=f_X(\sqrt{y})\cdot \dfrac{1}{2} y^{-1/2}\)

Again, using what we know about the probability density function of \(X\), and simplifying, we get:

\(f_Y(y)=\dfrac{(\sqrt{y})^2}{3} \cdot \dfrac{1}{2} y^{-1/2}=\dfrac{\sqrt{y}}{6}\)

for \(1<y<4\).
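To double-check Example 22-3, the following hypothetical Python sketch uses \(F_X(x)=(x^3+1)/9\) for \(-1<x<2\) (the integral of \(t^2/3\) from \(-1\) to \(x\)). Clamping the argument of \(F_X\) to the support lets one formula, \(F_Y(y)=F_X(\sqrt{y})-F_X(-\sqrt{y})\), cover both ranges of \(y\), since \(F_X(-\sqrt{y})=0\) once \(y>1\). The code compares numerical derivatives with the piecewise p.d.f. and checks that the p.d.f. integrates to about 1 over \(0<y<4\).

```python
import math

def cdf_x(x):
    """F_X(x) = (x^3 + 1) / 9, the integral of t^2/3 from -1 to x; x is clamped to [-1, 2]."""
    x = min(max(x, -1.0), 2.0)
    return (x ** 3 + 1) / 9

def cdf_y(y):
    """F_Y(y) = P(-sqrt(y) <= X <= sqrt(y)) = F_X(sqrt(y)) - F_X(-sqrt(y)) for 0 < y < 4."""
    r = math.sqrt(y)
    return cdf_x(r) - cdf_x(-r)  # F_X(-sqrt(y)) = 0 once y > 1, because of the clamp

def pdf_y(y):
    """Piecewise p.d.f. found in Example 22-3."""
    if 0 < y < 1:
        return math.sqrt(y) / 3  # two-to-one portion
    if 1 < y < 4:
        return math.sqrt(y) / 6  # one-to-one portion
    return 0.0

h = 1e-6
for y in (0.25, 0.81, 2.0, 3.5):
    print(y, (cdf_y(y + h) - cdf_y(y - h)) / (2 * h), pdf_y(y))

n = 400_000
total = sum(pdf_y(4 * (k + 0.5) / n) for k in range(n)) * 4 / n  # midpoint rule on (0, 4)
print(total)  # close to 1
```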

Now that we've seen how the distribution function technique works when we have a two-to-one function, we should now be able to summarize the necessary modifications to the change-of-variable technique.

Generalization

Let \(X\) be a continuous random variable with probability density function \(f(x)\) for \(c_1<x<c_2\).

Let \(Y=u(X)\) be a continuous two-to-one function of \(X\), which can be “broken up” into two one-to-one invertible functions with:

\(X_1=v_1(Y)\) and \(X_2=v_2(Y)\)

- Then, the probability density function for the two-to-one portion of \(Y\) is:

  \(f_Y(y)=f_X(v_1(y))\cdot |v'_1(y)|+f_X(v_2(y))\cdot |v'_2(y)|\)

  for the “appropriate support” for \(y\). That is, you have to add the one-to-one portions together.

- And, the probability density function for the one-to-one portion of \(Y\) is, as always:

  \(f_Y(y)=f_X(v_2(y))\cdot |v'_2(y)|\)

  for the “appropriate support” for \(y\), where \(v_2\) here denotes whichever inverse function applies on that portion (as in Example 22-3).
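The summation rule can be written as one function that adds up the contributions of whichever inverse branches apply at a given \(y\). In this Python sketch, each branch is a hypothetical `(v, v_prime, in_support)` triple with exact derivatives; that encoding is an illustrative choice, not a standard API. Evaluating it with the two branches of Example 22-3 reproduces \(\sqrt{y}/3\) on \(0<y<1\) and \(\sqrt{y}/6\) on \(1<y<4\).

```python
import math

def pdf_from_branches(f_x, branches, y):
    """Return the sum of f_X(v_i(y)) * |v_i'(y)| over the branches that apply at y.

    Each branch is a (v, v_prime, in_support) triple: an inverse function, its
    derivative, and a predicate saying whether that branch covers y.
    """
    return sum(f_x(v(y)) * abs(dv(y)) for v, dv, applies in branches if applies(y))

# Example 22-3: f(x) = x^2 / 3 on -1 < x < 2, and Y = X^2.
def f_x(x):
    return x ** 2 / 3 if -1 < x < 2 else 0.0

branches = [
    # v_1(y) = -sqrt(y) maps onto -1 < x < 0, so it only covers 0 < y < 1.
    (lambda y: -math.sqrt(y), lambda y: -0.5 / math.sqrt(y), lambda y: 0 < y < 1),
    # v_2(y) = +sqrt(y) maps onto 0 < x < 2, so it covers 0 < y < 4.
    (lambda y: math.sqrt(y), lambda y: 0.5 / math.sqrt(y), lambda y: 0 < y < 4),
]

print(pdf_from_branches(f_x, branches, 0.25))  # two branches: sqrt(0.25)/3, about 0.1667
print(pdf_from_branches(f_x, branches, 2.25))  # one branch: sqrt(2.25)/6 = 0.25
```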

Example 22-4

Suppose \(X\) is a continuous random variable that follows the standard normal distribution with, of course, \(-\infty<x<\infty\). Use the change-of-variable technique to show that the p.d.f. of \(Y=X^2\) is the chi-square distribution with 1 degree of freedom.

Solution

The transformation \(Y=X^2\) is two-to-one over the entire support \(-\infty<x<\infty\):

That is, when \(-\infty<x<0\), we have:

\(X_1=-\sqrt{Y}=v_1(Y)\)

and when \(0<x<\infty\), we have:

\(X_2=+\sqrt{Y}=v_2(Y)\)

Then, the change-of-variable technique tells us that, over the two-to-one portion of the transformation, that is, when \(0<y<\infty\):

\(f_Y(y)=f_X(\sqrt{y})\cdot \left |\dfrac{1}{2} y^{-1/2}\right|+f_X(-\sqrt{y})\cdot \left|-\dfrac{1}{2} y^{-1/2}\right|\)

Recalling the p.d.f. of the standard normal distribution:

\(f_X(x)=\dfrac{1}{\sqrt{2\pi}} \text{exp}\left[-\dfrac{x^2}{2}\right]\)

the p.d.f. of \(Y\) is then:

\(f_Y(y)=\dfrac{1}{\sqrt{2\pi}} \text{exp}\left[-\dfrac{(\sqrt{y})^2}{2}\right]\cdot \left|\dfrac{1}{2} y^{-1/2}\right|+\dfrac{1}{\sqrt{2\pi}} \text{exp}\left[-\dfrac{(-\sqrt{y})^2}{2}\right]\cdot \left|-\dfrac{1}{2} y^{-1/2}\right|\)

Adding the terms together, and simplifying a bit, we get:

\(f_Y(y)=2 \dfrac{1}{\sqrt{2\pi}} \text{exp}\left[-\dfrac{y}{2}\right]\cdot \dfrac{1}{2} y^{-1/2}\)

Crossing out the 2s, recalling that \(\Gamma(1/2)=\sqrt{\pi}\), and rewriting things just a bit, we should be able to recognize that, with \(0<y<\infty\), the probability density function of \(Y\):

\(f_Y(y)=\dfrac{1}{\Gamma(1/2) 2^{1/2}} e^{-y/2} y^{-1/2}\)

is indeed the p.d.f. of a chi-square random variable with 1 degree of freedom!
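A quick numerical cross-check of this result, as a standard-library Python sketch with hypothetical helper names: the two-branch change-of-variable formula for \(Y=X^2\) is compared with the chi-square p.d.f. \(y^{r/2-1}e^{-y/2}/(\Gamma(r/2)2^{r/2})\) at \(r=1\), with \(\Gamma\) taken from `math.gamma`.

```python
import math

def std_normal_pdf(x):
    """Standard normal p.d.f."""
    return math.exp(-x * x / 2) / math.sqrt(2 * math.pi)

def pdf_y_two_branches(y):
    """f_Y(y) = f_X(sqrt(y))|v_2'(y)| + f_X(-sqrt(y))|v_1'(y)| for Y = X^2, X ~ N(0, 1), y > 0."""
    abs_dv = 0.5 / math.sqrt(y)  # |v_1'(y)| = |v_2'(y)| = (1/2) y^(-1/2)
    return std_normal_pdf(math.sqrt(y)) * abs_dv + std_normal_pdf(-math.sqrt(y)) * abs_dv

def chi_square_pdf(y, r):
    """Chi-square p.d.f. with r degrees of freedom, y > 0."""
    return y ** (r / 2 - 1) * math.exp(-y / 2) / (math.gamma(r / 2) * 2 ** (r / 2))

for y in (0.1, 1.0, 4.0):
    print(y, pdf_y_two_branches(y), chi_square_pdf(y, 1))
```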

22.4 - Simulating Observations

Now that we've learned the mechanics of the distribution function and change-of-variable techniques for finding the p.d.f. of a transformation of a random variable, we'll turn our attention for a few minutes to an application of the distribution function technique. In doing so, we'll learn one way that statistical software, such as Minitab or SAS, can generate (or "simulate") random numbers that follow a particular probability distribution. More specifically, we'll explore how one could simulate, say, 1000 random numbers from an exponential distribution with mean \(\theta=5\).

The Idea

If we take a look at the cumulative distribution function of an exponential random variable with a mean of \(\theta=5\):

the idea might just jump out at us. You might notice that the cumulative distribution function \(F(x)\) is a number (a cumulative probability, in fact!) between 0 and 1. So, one strategy we might use to generate 1000 numbers following an exponential distribution with a mean of 5 is:

- Generate a \(Y\sim U(0,1)\) random number. That is, generate a number between 0 and 1 such that each number between 0 and 1 is equally likely.

- Then, use the inverse of \(Y=F(x)\) to get a random number \(X=F^{-1}(y)\) whose distribution function is \(F(x)\). This is, in fact, illustrated on the graph. If \(F(x)=0.8\), for example, then the inverse \(X\) is about 8.

- Repeat steps 1 and 2 one thousand times.

By looking at the graph, you should see that, under this strategy, the shape of the distribution function dictates the probability distribution of the resulting \(X\) values. In this case, the curve climbs from 0 to about 0.8 over \(0<x<8\), so about 80% of the uniform draws will be mapped to \(X\) values less than 8. That's what the probability density function of an exponential random variable with a mean of 5 suggests should happen:

We can even do the calculation, of course, to illustrate this point. If \(X\) is an exponential random variable with a mean of 5, then:

\(P(X<8)=1-P(X>8)=1-e^{-8/5}\approx 0.80\)

A theorem (naturally!) formalizes our idea of how to simulate random numbers following a particular probability distribution.

Theorem. Let \(Y\sim U(0,1)\). Let \(F(x)\) have the properties of a distribution function of the continuous type with \(F(a)=0\) and \(F(b)=1\). Suppose that \(F(x)\) is strictly increasing on the support \(a<x<b\), where \(a\) and \(b\) could be \(-\infty\) and \(\infty\), respectively. Then, the random variable \(X\) defined by:

\(X=F^{-1}(Y)\)

is a continuous random variable with cumulative distribution function \(F(x)\).

Proof.

In order to prove the theorem, we need to show that the cumulative distribution function of \(X\) is \(F(x)\). That is, we need to show:

\(P(X\leq x)=F(x)\)

It turns out that the proof is a one-liner! Here it is:

\(P(X\leq x)=P(F^{-1}(Y)\leq x)=P(Y \leq F(x))=F(x)\)

We've shown exactly what we set out to prove, namely that:

\(P(X\leq x)=F(x)\)

Well, okay, maybe some explanation is needed! The first equality in the one-line proof holds, because:

\(X=F^{-1}(Y)\)

Then, the second equality holds because \(F\) is strictly increasing on the support, as illustrated by the red portion of this graph:

That is, when:

\(F^{-1}(Y)\leq x\)

is true, so is

\(Y \leq F(x)\)

Finally, the last equality holds because it is assumed that \(Y\) is a uniform(0, 1) random variable, and therefore the probability that \(Y\) is less than or equal to some \(y\) with \(0\le y\le 1\) is, in fact, \(y\) itself:

\(P(Y\leq y)=F_Y(y)=\int_0^y dt=y\)

That means that the probability that \(Y\) is less than or equal to some \(F(x)\) is, in fact, \(F(x)\) itself:

\(P(Y \leq F(x))=F(x)\)

Our one-line proof is complete!
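The three-step strategy and the theorem can be sketched in Python as follows. This illustrates the inverse-c.d.f. idea, assuming Python's `random` module as the source of \(U(0,1)\) numbers; it is not a claim about how Minitab, SAS, or Python's own `random.expovariate` are implemented. The seed `414` is arbitrary, and the printed checks compare the sample mean with \(\theta=5\) and the proportion below 8 with \(P(X<8)\approx 0.80\).

```python
import math
import random

def exponential_inverse_cdf(y, theta=5.0):
    """Solve y = F(x) = 1 - exp(-x/theta) for x: x = -theta * log(1 - y)."""
    return -theta * math.log(1.0 - y)

def simulate_exponential(n, theta=5.0, seed=None):
    """Draw Y ~ U(0, 1), set X = F^{-1}(Y), and repeat n times."""
    rng = random.Random(seed)
    # rng.random() lies in [0, 1), so 1 - y lies in (0, 1] and the log is always defined.
    return [exponential_inverse_cdf(rng.random(), theta) for _ in range(n)]

xs = simulate_exponential(1000, seed=414)
print(sum(xs) / len(xs))                 # sample mean, expected to be near theta = 5
print(sum(x < 8 for x in xs) / len(xs))  # proportion below 8, expected near 0.80
```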

Example 22-5

A student randomly draws the following three uniform(0, 1) numbers:

| First draw | Second draw | Third draw |
|---|---|---|
| 0.2 | 0.5 | 0.9 |

Use the three uniform(0,1) numbers to generate three random numbers that follow an exponential distribution with mean \(\theta=5\).

Solution

The cumulative distribution function of an exponential random variable with a mean of 5 is:

\(y=F(x)=1-e^{-x/5}\)

for \(0\le x<\infty\). We need to invert the cumulative distribution function, that is, solve for \(x\), in order to be able to determine the exponential(5) random numbers. Manipulating the above equation a bit, we get:

\(1-y=e^{-x/5}\)

Then, taking the natural log of both sides, we get:

\(\text{log}(1-y)=-\dfrac{x}{5}\)

And, multiplying both sides by −5, we get:

\(x=-5\text{log}(1-y)\)

for \(0<y<1\). Now, it's just a matter of inserting the student's three random U(0,1) numbers into the above equation to get our three exponential(5) random numbers:

- If \(y=0.2\), we get \(x=1.1\)

- If \(y=0.5\), we get \(x=3.5\)

- If \(y=0.9\), we get \(x=11.5\)

We would simply continue the same process (generating \(y\), a random U(0,1) number, inserting \(y\) into the above equation, and solving for \(x\)) 997 more times if we wanted to generate 1000 exponential(5) random numbers. Of course, we wouldn't really do it by hand, but rather let statistical software do it for us. At least we now understand how uniform random numbers can be turned into random numbers with a given distribution function!
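The same arithmetic in Python, as a minimal sketch (`math.log` is the natural logarithm, matching \(\text{log}\) above):

```python
import math

def exp5_from_uniform(y):
    """x = -5 log(1 - y), the inverse of F(x) = 1 - exp(-x/5) (natural log)."""
    return -5 * math.log(1 - y)

for y in (0.2, 0.5, 0.9):
    print(y, round(exp5_from_uniform(y), 1))  # prints 1.1, 3.5 and 11.5 for the three draws
```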

Lesson 23: Transformations of Two Random Variables

Introduction

In this lesson, we consider the situation where we have two random variables and we are interested in the joint distribution of two new random variables that are a transformation of the original ones. Such a transformation is called a bivariate transformation. We use a generalization of the change-of-variable technique that we learned in Lesson 22, and we provide examples of random variables whose density functions can be derived through a bivariate transformation.

Objectives

- To learn how to use the change-of-variable technique to find the probability distribution of \(Y_1 = u_1(X_1, X_2), Y_2 = u_2(X_1, X_2)\), a one-to-one transformation of the two random variables \(X_1\) and \(X_2\).

23.1 - Change-of-Variables Technique

Recall that, for the univariate (one random variable) situation: given \(X\) with pdf \(f(x)\) and the transformation \(Y=u(X)\) with the single-valued inverse \(X=v(Y)\), the pdf of \(Y\) is given by

\(\begin{align*} g(y) = |v^\prime(y)| f\left[ v(y) \right]. \end{align*}\)

Now, suppose \((X_1, X_2)\) has joint density \(f(x_1, x_2)\) and support \(S_X\).

Let \((Y_1, Y_2)\) be some function of \((X_1, X_2)\) defined by \(Y_1 = u_1(X_1, X_2)\) and \(Y_2 = u_2(X_1, X_2)\) with the single-valued inverse given by \(X_1 = v_1(Y_1, Y_2)\) and \(X_2 = v_2(Y_1, Y_2)\). Let \(S_Y\) be the support of \((Y_1, Y_2)\).

We usually find \(S_Y\) by considering the image of \(S_X\) under the transformation \((u_1, u_2)\). That is, a point \((y_1, y_2)\) belongs to \(S_Y\) exactly when the point \((x_1, x_2)\) given by

\(\begin{align*} x_1 = v_1(y_1, y_2), \hspace{1cm} x_2 = v_2(y_1, y_2) \end{align*}\)

belongs to \(S_X\).

The joint pdf of \(Y_1\) and \(Y_2\) is

\(\begin{align*} g(y_1, y_2) = |J| f\left[ v_1(y_1, y_2), v_2(y_1, y_2) \right] \end{align*}\)

In the above expression, \(|J|\) refers to the absolute value of the Jacobian, \(J\). The Jacobian, \(J\), is given by

\(\begin{align*} \left| \begin{array}{cc} \frac{\partial v_1(y_1, y_2)}{\partial y_1} & \frac{\partial v_1(y_1, y_2)}{\partial y_2} \\ \frac{\partial v_2(y_1, y_2)}{\partial y_1} & \frac{\partial v_2(y_1, y_2)}{\partial y_2} \end{array} \right| \end{align*}\)

i.e. it is the determinant of the matrix

\(\begin{align*} \left( \begin{array}{cc} \frac{\partial v_1(y_1, y_2)}{\partial y_1} & \frac{\partial v_1(y_1, y_2)}{\partial y_2} \\ \frac{\partial v_2(y_1, y_2)}{\partial y_1} & \frac{\partial v_2(y_1, y_2)}{\partial y_2} \end{array} \right) \end{align*}\)
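For readers who want to check a Jacobian numerically, here is a hypothetical Python sketch that approximates the four partial derivatives of the inverse transformation with central differences and returns the determinant, along with the resulting joint pdf \(|J|\,f(v_1, v_2)\). It is tried on the inverse transformation of Example 23-1 below, where \(J=\tfrac{1}{2}\), and on the inverse \(x_1=y_1y_2,\ x_2=y_2-y_1y_2\) used for the beta distribution in Section 23.2, where \(J=y_2\).

```python
import math

def jacobian_det(v1, v2, y1, y2, h=1e-6):
    """Approximate J = det [[dv1/dy1, dv1/dy2], [dv2/dy1, dv2/dy2]] with central differences."""
    d11 = (v1(y1 + h, y2) - v1(y1 - h, y2)) / (2 * h)
    d12 = (v1(y1, y2 + h) - v1(y1, y2 - h)) / (2 * h)
    d21 = (v2(y1 + h, y2) - v2(y1 - h, y2)) / (2 * h)
    d22 = (v2(y1, y2 + h) - v2(y1, y2 - h)) / (2 * h)
    return d11 * d22 - d12 * d21

def transformed_joint_pdf(f, v1, v2, y1, y2):
    """g(y1, y2) = |J| * f(v1(y1, y2), v2(y1, y2)); support checks are left to f."""
    return abs(jacobian_det(v1, v2, y1, y2)) * f(v1(y1, y2), v2(y1, y2))

# Inverse transformation of Example 23-1: x1 = (y1 + y2)/2, x2 = (y2 - y1)/2.
def v1_ex(y1, y2):
    return (y1 + y2) / 2

def v2_ex(y1, y2):
    return (y2 - y1) / 2

def f_ex(x1, x2):
    """Joint p.d.f. of two independent exponential(1) variables."""
    return math.exp(-x1 - x2) if x1 > 0 and x2 > 0 else 0.0

print(jacobian_det(v1_ex, v2_ex, 0.5, 2.0))                 # approximately 0.5
print(transformed_joint_pdf(f_ex, v1_ex, v2_ex, 0.5, 2.0))  # approximately e^{-2}/2

# Inverse used for the beta distribution: x1 = y1*y2, x2 = y2 - y1*y2, so J = y2.
print(jacobian_det(lambda a, b: a * b, lambda a, b: b - a * b, 0.3, 2.0))  # approximately 2.0
```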

Example 23-1

Suppose \(X_1\) and \(X_2\) are independent exponential random variables with parameter \(\lambda = 1\) so that

\(\begin{align*} &f_{X_1}(x_1) = e^{-x_1} \hspace{1.5 cm} 0< x_1 < \infty \\&f_{X_2}(x_2) = e^{-x_2} \hspace{1.5 cm} 0< x_2 < \infty \end{align*}\)

The joint pdf is given by

\(\begin{align*} f(x_1, x_2) = f_{X_1}(x_1)f_{X_2}(x_2) = e^{-x_1-x_2} \hspace{1.5 cm} 0< x_1 < \infty, 0< x_2 < \infty \end{align*}\)

Consider the transformation: \(Y_1 = X_1-X_2, Y_2 = X_1+X_2\). We wish to find the joint distribution of \(Y_1\) and \(Y_2\).

We have

\(\begin{align*} x_1 = \frac{y_1+y_2}{2}, x_2=\frac{y_2-y_1}{2} \end{align*}\)

OR

\(\begin{align*} v_1(y_1, y_2) = \frac{y_1+y_2}{2}, v_2(y_1, y_2)=\frac{y_2-y_1}{2} \end{align*}\)

The Jacobian, \(J\), is

\(\begin{align*} \left| \begin{array}{cc} \frac{\partial \left( \frac{y_1+y_2}{2} \right) }{\partial y_1} & \frac{\partial \left( \frac{y_1+y_2}{2} \right)}{\partial y_2} \\ \frac{\partial \left( \frac{y_2-y_1}{2} \right)}{\partial y_1} & \frac{\partial \left( \frac{y_2-y_1}{2} \right)}{\partial y_2} \end{array} \right| \end{align*}\)

\(\begin{align*} =\left| \begin{array}{cc} \frac{1}{2} & \frac{1}{2} \\ -\frac{1}{2} & \frac{1}{2} \end{array} \right| = \frac{1}{2} \end{align*}\)

So,

\(\begin{align*} g(y_1, y_2) & = e^{-v_1(y_1, y_2) - v_2(y_1, y_2) }|\frac{1}{2}| \\ & = e^{- \left[\frac{y_1+y_2}{2}\right] - \left[\frac{y_2-y_1}{2}\right] }|\frac{1}{2}| \\ & = \frac{e^{-y_2}}{2} \end{align*}\)

Now, we determine the support of \((Y_1, Y_2)\). Since \(0< x_1 < \infty, 0< x_2 < \infty\), we have \(0< \frac{y_1+y_2}{2} < \infty, 0< \frac{y_2-y_1}{2} < \infty\) or \(0< y_1+y_2 < \infty, 0< y_2-y_1 < \infty\). This may be rewritten as \(-y_2< y_1 < y_2, 0< y_2 < \infty\).

Using the joint pdf, we may find the marginal pdf of \(Y_2\) as

\(\begin{align*} g(y_2) & = \int_{-\infty}^{\infty} g(y_1, y_2)\, dy_1 \\& = \int_{-y_2}^{y_2}\frac{1}{2}e^{-y_2}\, dy_1 \\& = \frac{1}{2} e^{-y_2} \Big[ y_1 \Big]_{y_1=-y_2}^{y_1=y_2} \\& = \frac{1}{2} e^{-y_2} (y_2 + y_2) \\& = y_2 e^{-y_2}, \hspace{1cm} 0< y_2 < \infty \end{align*}\)

Similarly, we may find the marginal pdf of \(Y_1\) as

\(\begin{align*} g(y_1)=\begin{cases} \int_{-y_1}^{\infty} \frac{1}{2}e^{-y_2} dy_2 = \frac{1}{2} e^{y_1} & -\infty < y_1 < 0 \\ \int_{y_1}^{\infty} \frac{1}{2}e^{-y_2} dy_2 = \frac{1}{2} e^{-y_1} & 0 < y_1 < \infty \\ \end{cases} \end{align*}\)

Equivalently,

\(\begin{align*} g(y_1) = \frac{1}{2} e^{-|y_1|}, \hspace{1cm} -\infty < y_1 < \infty \end{align*}\)

This pdf is known as the double exponential or Laplace pdf.
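A Monte Carlo sketch can make these two marginals concrete. Assuming Python's `random.expovariate` for the exponential(1) draws (the seed and sample size are arbitrary), the code forms \(Y_1=X_1-X_2\) and \(Y_2=X_1+X_2\) and compares a few sample summaries with values implied by the derived pdfs: \(P(Y_1\le -1)=\tfrac{1}{2}e^{-1}\), \(E|Y_1|=1\), and \(E[Y_2]=\int_0^\infty y_2^2e^{-y_2}\,dy_2=2\).

```python
import math
import random

rng = random.Random(2023)  # arbitrary seed for reproducibility
n = 100_000
y1_values, y2_values = [], []
for _ in range(n):
    x1 = rng.expovariate(1.0)  # X1 ~ exponential with lambda = 1
    x2 = rng.expovariate(1.0)  # X2 ~ exponential with lambda = 1, independent of X1
    y1_values.append(x1 - x2)
    y2_values.append(x1 + x2)

p_le_minus1 = sum(v <= -1 for v in y1_values) / n
mean_abs_y1 = sum(abs(v) for v in y1_values) / n
mean_y2 = sum(y2_values) / n
print(p_le_minus1, 0.5 * math.exp(-1))  # Laplace: P(Y1 <= -1) = (1/2) e^{-1}
print(mean_abs_y1)                      # Laplace: E|Y1| = 1
print(mean_y2)                          # g(y2) = y2 e^{-y2}: E[Y2] = 2
```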

23.2 - Beta Distribution

Let \(X_1\) and \(X_2\) be independent, where \(X_1\) has a gamma distribution with parameters \(\alpha\) and \(\theta\), and \(X_2\) has a gamma distribution with parameters \(\beta\) and \(\theta\). Therefore, the joint pdf of \(X_1\) and \(X_2\) is given by

\(\begin{align*} f(x_1, x_2) = \frac{1}{\Gamma(\alpha) \Gamma(\beta)\theta^{\alpha + \beta}} x_1^{\alpha-1}x_2^{\beta-1}\text{ exp }\left( -\frac{x_1 + x_2}{\theta} \right), 0 <x_1 <\infty, 0 <x_2 <\infty. \end{align*}\)

We make the following transformation:

\(\begin{align*} Y_1 = \frac{X_1}{X_1+X_2}, Y_2 = X_1+X_2 \end{align*}\)

The inverse transformation is given by

\(\begin{align*} &X_1=Y_1Y_2, \\& X_2=Y_2-Y_1Y_2 \end{align*}\)

The Jacobian is

\(\begin{align*} \left| \begin{array}{cc} y_2 & y_1 \\ -y_2 & 1-y_1 \end{array} \right| = y_2(1-y_1) + y_1y_2 = y_2 \end{align*}\)

The joint pdf \(g(y_1, y_2)\) is

\(\begin{align*} g(y_1, y_2) = |y_2| \frac{1}{\Gamma(\alpha) \Gamma(\beta)\theta^{\alpha + \beta}} (y_1y_2)^{\alpha - 1}(y_2 - y_1y_2)^{\beta - 1}e^{-y_2/\theta} \end{align*}\)

with support \(0<y_1<1, 0<y_2<\infty\).

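Integrating out \(y_2\), and noting that the remaining integral equals \(\Gamma(\alpha+\beta)\) because \(y_2^{\alpha+\beta-1}e^{-y_2/\theta}/[\Gamma(\alpha+\beta)\theta^{\alpha+\beta}]\) is a gamma pdf, we find that the marginal pdf of \(Y_1\) is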

\(\begin{align*} g(y_1) & = \frac{y_1^{\alpha - 1}(1 - y_1)^{\beta - 1}}{\Gamma(\alpha) \Gamma(\beta) } \int_0^{\infty} \frac{y_2^{\alpha + \beta -1}}{\theta^{\alpha + \beta}} e^{-y_2/\theta}\, dy_2 \\& = \frac{ \Gamma(\alpha + \beta) }{\Gamma(\alpha) \Gamma(\beta) } y_1^{\alpha - 1}(1 - y_1)^{\beta - 1}, \hspace{1cm} 0<y_1<1. \end{align*}\)

\(Y_1\) is said to have a beta pdf with parameters \(\alpha\) and \(\beta\).
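As a sketch (not a proof), the following Python code simulates \(Y_1=X_1/(X_1+X_2)\) with the standard library's `random.gammavariate(alpha, beta)`, whose second argument is the scale. The values \(\alpha=2\), \(\beta=3\), \(\theta=5\) and the helper name `simulate_beta_via_gammas` are illustrative assumptions. The sample mean should be close to \(\alpha/(\alpha+\beta)=0.4\), the mean of a beta distribution, and the common scale \(\theta\) drops out, just as it does in the derivation.

```python
import random


def simulate_beta_via_gammas(alpha, beta, theta=1.0, n_draws=200_000, seed=2):
    """Return draws of Y1 = X1 / (X1 + X2), X1 ~ gamma(alpha, theta), X2 ~ gamma(beta, theta)."""
    rng = random.Random(seed)
    draws = []
    for _ in range(n_draws):
        x1 = rng.gammavariate(alpha, theta)  # shape alpha, scale theta
        x2 = rng.gammavariate(beta, theta)   # shape beta, same scale theta
        draws.append(x1 / (x1 + x2))
    return draws


alpha, beta = 2.0, 3.0
y1 = simulate_beta_via_gammas(alpha, beta, theta=5.0)
sample_mean = sum(y1) / len(y1)
beta_mean = alpha / (alpha + beta)  # mean of a beta(alpha, beta) random variable
print(f"sample mean {sample_mean:.4f} vs beta mean {beta_mean}")
```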

23.3 - F Distribution

We describe a very useful distribution in Statistics known as the F distribution.

Let \(U\) and \(V\) be independent chi-square variables with \(r_1\) and \(r_2\) degrees of freedom, respectively. The joint pdf is

\(\begin{align*} g(u, v) = \frac{ u^{r_1/2-1}e^{-u/2}\, v^{r_2/2-1}e^{-v/2} }{ \Gamma (r_1/2)\, 2^{r_1/2}\, \Gamma (r_2/2)\, 2^{r_2/2} }, \hspace{1cm} 0<u<\infty, 0<v<\infty \end{align*}\)

Define the random variable \(W = \frac{U/r_1}{V/r_2}\).

This time we use the distribution function technique described in Lesson 22:

\(\begin{align*} F(w) & = P(W \leq w) = P \left( \frac{U/r_1}{V/r_2} \leq w \right) = P\left(U \leq \frac{r_1}{r_2} wV\right) \\ & = \int_0^\infty \int_0^{(r_1/r_2)wv} g(u, v)\, du\, dv \end{align*}\)

\(\begin{align*} F(w) =\frac{1}{ \Gamma (r_1/2) \Gamma (r_2/2) } \int_0^\infty \left[ \int_0^{(r_1/r_2)wv} \frac{ u^{r_1/2-1}e^{-u/2}}{2^{(r_1+r_2)/2}}\, du \right] v^{r_2/2-1}e^{-v/2}\, dv \end{align*}\)

By differentiating the cdf, it can be shown that \(f(w) = F^\prime(w)\) is given by

\(\begin{align*} f(w) = \frac{ \left( r_1/r_2 \right)^{r_1/2} \Gamma \left[ \left(r_1+r_2\right)/2 \right] w^{r_1/2-1} }{\Gamma(r_1/2)\Gamma(r_2/2) \left[1+(r_1w/r_2)\right]^{(r_1+r_2)/2}}, \hspace{1cm} w>0 \end{align*}\)
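To check the formula numerically, here is a Python sketch that transcribes \(f(w)\) and integrates it with a simple midpoint rule. The function names `f_pdf` and `midpoint_integral`, the degrees of freedom \(r_1=4, r_2=8\), and the truncation point \(w=200\) are our own choices; for these degrees of freedom the density decays like \(w^{-5}\), so the neglected tail is tiny and the result should be very close to 1.

```python
import math


def f_pdf(w, r1, r2):
    """F(r1, r2) density, transcribed from the formula above (valid for w > 0)."""
    const = (r1 / r2) ** (r1 / 2) * math.gamma((r1 + r2) / 2) / (
        math.gamma(r1 / 2) * math.gamma(r2 / 2)
    )
    return const * w ** (r1 / 2 - 1) / (1 + r1 * w / r2) ** ((r1 + r2) / 2)


def midpoint_integral(func, a, b, n_steps=100_000):
    """Approximate the integral of func over [a, b] with the midpoint rule."""
    h = (b - a) / n_steps
    return h * sum(func(a + (k + 0.5) * h) for k in range(n_steps))


# For r1 = 4, r2 = 8 the density decays like w**(-5), so truncating at w = 200
# discards only a negligible tail.
total = midpoint_integral(lambda w: f_pdf(w, 4, 8), 0.0, 200.0)
print(f"integral of the F(4, 8) pdf over (0, 200): {total:.6f}")
```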

A random variable with the pdf \(f(w)\) is said to have an F distribution with \(r_1\) and \(r_2\) degrees of freedom. We write this as \(F(r_1, r_2)\). Table VII in Appendix B of the textbook can be used to find probabilities for a random variable with the \(F(r_1, r_2)\) distribution.

It contains the F-values for the cumulative probabilities \(0.95, 0.975\), and \(0.99\) (or, equivalently, the upper-tail probabilities \(\alpha = 0.05, 0.025, 0.01\)) of various \(F(r_1, r_2)\) distributions.

When using this table, it is helpful to note that if a random variable (say, \(W\)) has the \(F(r_1, r_2)\) distribution, then its inverse \(\dfrac{1}{W}\) has the \(F(r_2, r_1)\) distribution.
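The reciprocal property can be spot-checked with the change-of-variable technique: if \(W\) has pdf \(f_W\), then \(1/W\) has pdf \(f_W(1/x)/x^2\) for \(x>0\). The sketch below (using our own helper `f_pdf`, a transcription of the \(F\) pdf above, and the illustrative choice \(r_1=4, r_2=8\)) compares that expression with the \(F(r_2, r_1)\) pdf at a few points. Agreement at a handful of points illustrates, but of course does not prove, the property.

```python
import math


def f_pdf(w, r1, r2):
    """F(r1, r2) density from the formula above (valid for w > 0)."""
    const = (r1 / r2) ** (r1 / 2) * math.gamma((r1 + r2) / 2) / (
        math.gamma(r1 / 2) * math.gamma(r2 / 2)
    )
    return const * w ** (r1 / 2 - 1) / (1 + r1 * w / r2) ** ((r1 + r2) / 2)


r1, r2 = 4, 8
checks = []
for x in (0.25, 0.5, 1.0, 2.0, 3.5):
    # pdf of 1/W at x: f_W(1/x) times |d(1/x)/dx| = 1/x**2
    pdf_of_reciprocal = f_pdf(1 / x, r1, r2) / x**2
    checks.append((x, pdf_of_reciprocal, f_pdf(x, r2, r1)))

for x, lhs, rhs in checks:
    print(f"x = {x}: pdf of 1/W = {lhs:.6f}, F({r2}, {r1}) pdf = {rhs:.6f}")
```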

Illustration

The shape of the F distribution is determined by the degrees of freedom \(r_1\) and \(r_2\). The histogram below shows how an F random variable is generated using 1000 observations each from two chi-square random variables (\(U\) and \(V\)) with degrees of freedom 4 and 8 respectively and forming the ratio \(\dfrac{U/4}{V/8}\).

The lower plot (below histogram) illustrates how the shape of an F distribution changes with the degrees of freedom \(r_1\) and \(r_2\).
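The construction behind the histogram can be sketched in Python as follows. It relies on the fact that a chi-square random variable with \(r\) degrees of freedom is a gamma random variable with \(\alpha=r/2\) and \(\theta=2\), and it prints a crude text histogram in place of the plot. The helper name `simulate_f`, the seed, and the bin width are illustrative assumptions. With many more draws, the sample mean should settle near \(r_2/(r_2-2)=4/3\), the mean of an \(F(4, 8)\) random variable.

```python
import random


def simulate_f(r1, r2, n_draws=1000, seed=3):
    """Return n_draws values of (U/r1)/(V/r2) for independent chi-square U (r1 df), V (r2 df).

    A chi-square random variable with r degrees of freedom is gamma with
    shape r/2 and scale 2, which is what random.gammavariate draws here.
    """
    rng = random.Random(seed)
    draws = []
    for _ in range(n_draws):
        u = rng.gammavariate(r1 / 2, 2.0)
        v = rng.gammavariate(r2 / 2, 2.0)
        draws.append((u / r1) / (v / r2))
    return draws


w = simulate_f(4, 8)  # 1000 draws with r1 = 4, r2 = 8, as in the illustration

# Crude text histogram: bins of width 0.5 on [0, 4), larger values pooled in the last bin.
bins = [0] * 9
for value in w:
    bins[min(int(value / 0.5), 8)] += 1
for k, count in enumerate(bins[:-1]):
    print(f"[{k * 0.5:.1f}, {(k + 1) * 0.5:.1f})  {'*' * (count // 10)}")
print(f"[4.0, inf)   {'*' * (bins[-1] // 10)}")
print("sample mean:", round(sum(w) / len(w), 3))
```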

Lesson 24: Several Independent Random Variables

Introduction

In the previous lessons, we explored functions of random variables. We'll do the same in this lesson, too, except here we'll add the requirement that the random variables be independent, and in some cases, identically distributed. Suppose, for example, that we were interested in determining the average weight of the thousands of pumpkins grown on a pumpkin farm. Since we couldn't possibly weigh all of the pumpkins on the farm, we'd want to weigh just a small random sample of pumpkins. If we let:

- \(X_1\) denote the weight of the first pumpkin sampled

- \(X_2\) denote the weight of the second pumpkin sampled

- ...

- \(X_n\) denote the weight of the \(n^{th}\) pumpkin sampled

then we could imagine calculating the average weight of the sampled pumpkins as:

\(\bar{X}=\dfrac{X_1+X_2+\cdots+X_n}{n}\)

Now, because the pumpkins were randomly sampled, we wouldn't expect the weight of one pumpkin, say \(X_1\), to affect the weight of another pumpkin, say \(X_2\). Therefore, \(X_1, X_2, \ldots, X_n\) can be assumed to be independent random variables. And, since \(\bar{X}\), as defined above, is a function of those independent random variables, it too must be a random variable with a certain probability distribution, a certain mean and a certain variance. Our work in this lesson will all be directed towards the end goal of being able to calculate the mean and variance of the random variable \(\bar{X}\). We'll learn a number of things along the way, of course, including a formal definition of a random sample, the expectation of a product of independent variables, and the mean and variance of a linear combination of independent random variables.

Objectives

- To get the big picture for the remainder of the course.

- To learn a formal definition of a random sample.

- To learn what i.i.d. means.

- To learn how to find the expectation of a function of \(n\) independent random variables.

- To learn how to find the expectation of a product of functions of \(n\) independent random variables.

- To learn how to find the mean and variance of a linear combination of random variables.

- To learn that the expected value of the sample mean is \(\mu\).

- To learn that the variance of the sample mean is \(\frac{\sigma^2}{n}\).

- To understand all of the proofs presented in the lesson.

- To be able to apply the methods learned in this lesson to new problems.

24.1 - Some Motivation

Consider the population of 8 million college students. Suppose we are interested in determining \(\mu\), the unknown mean distance (in miles) from the students' schools to their hometowns. We can't possibly determine the distance for each of the 8 million students in order to calculate the population mean \(\mu\) and the population variance \(\sigma^2\). We could, however, take a random sample of, say, 100 college students and determine:

\(X_i\) = the distance (in miles) from the school of student \(i\) to his or her hometown, for \(i=1, 2, \ldots, 100\)

and use the resulting data to learn about the population of college students. How could we obtain that random sample, though? Would it be okay to stand outside a major classroom building on the Penn State campus, such as the Willard Building, and ask random students how far they are from their hometowns? Probably not! The average distance for Penn State students probably differs greatly from that of college students attending a school in a major city, such as, say, the University of California, Los Angeles (UCLA). We need to use a method that ensures that the sample is representative of all college students in the population, not just a subset of the students. Any method that ensures that our sample is truly random will suffice. The following definition formalizes what makes a sample truly random.

Definition. The random variables \(X_i\) constitute a random sample of size \(n\) if and only if:

- the \(X_i\) are independent, and

- the \(X_i\) are identically distributed, that is, each \(X_i\) comes from the same distribution \(f(x)\) with mean \(\mu\) and variance \(\sigma^2\).

We say that the \(X_i\) are "i.i.d." (The first i. stands for independent, and the i.d. stands for identically distributed.)

Now, once we've obtained our (truly) random sample, we'll probably want to use the resulting data to calculate the sample mean:

\(\bar{X}=\dfrac{\sum_{i=1}^n X_i}{n}=\dfrac{X_1+X_2+\cdots+X_{100}}{100}\)

and sample variance:

\(S^2=\dfrac{\sum_{i=1}^n (X_i-\bar{X})^2}{n-1}=\dfrac{(X_1-\bar{X})^2+\cdots+(X_{100}-\bar{X})^2}{99}\)

In Stat 415, we'll learn that the sample mean \(\bar{X}\) is the "best" estimate of the population mean \(\mu\) and the sample variance \(S^2\) is the "best" estimate of the population variance \(\sigma^2\). (We'll also learn in what sense the estimates are "best.") Now, before we can use the sample mean and sample variance to draw conclusions about the possible values of the unknown population mean \(\mu\) and unknown population variance \(\sigma^2\), we need to know how \(\bar{X}\) and \(S^2\) behave. That is, we need to know:

- the probability distribution of \(\bar{X}\) and \(S^2\)

- the theoretical mean of \(\bar{X}\) and \(S^2\)

- the theoretical variance of \(\bar{X}\) and \(S^2\)

Now, note that \(\bar{X}\) and \(S^2\) are functions of independent random variables. That's why we are working in a lesson right now called Several Independent Random Variables. In this lesson, we'll learn about the mean and variance of the random variable \(\bar{X}\). Then, in the lesson called Random Functions Associated with Normal Distributions, we'll add the assumption that the \(X_i\) are measurements from a normal distribution with mean \(\mu\) and variance \(\sigma^2\) to see what we can learn about the probability distribution of \(\bar{X}\) and \(S^2\). In the lesson called The Central Limit Theorem, we'll learn that the result for \(\bar{X}\) still holds approximately even if our measurements aren't from a normal distribution, provided we have a large enough sample. Along the way, we'll pick up a new tool for our toolbox, namely The Moment-Generating Function Technique. And in the final lesson for the Section (and Course!), we'll see another application of the Central Limit Theorem, namely using the normal distribution to approximate discrete distributions, such as the binomial and Poisson distributions. With our motivation presented, and our curiosity now piqued, let's jump right in and get going!

24.2 - Expectations of Functions of Independent Random Variables

One of the primary goals of this lesson is to determine the theoretical mean and variance of the sample mean:

\(\bar{X}=\dfrac{X_1+X_2+\cdots+X_n}{n}\)

Now, assume the \(X_i\) are independent, as they should be if they come from a random sample. Then, finding the theoretical mean of the sample mean involves taking the expectation of a sum of independent random variables:

\(E(\bar{X})=\dfrac{1}{n} E(X_1+X_2+\cdots+X_n)\)

That's why we'll spend some time on this page learning how to take expectations of functions of independent random variables! A simple example illustrates that we already have a number of techniques sitting in our toolbox ready to help us find the expectation of a sum of independent random variables.

Example 24-1

Suppose we toss a penny three times. Let \(X_1\) denote the number of heads that we get in the three tosses. And, suppose we toss a second penny two times. Let \(X_2\) denote the number of heads we get in those two tosses. If we let:

\(Y=X_1+X_2\)

then \(Y\) denotes the number of heads in five tosses. Note that the random variables \(X_1\) and \(X_2\) are independent and therefore \(Y\) is the sum of independent random variables. Furthermore, we know that:

- \(X_1\) is a binomial random variable with \(n=3\) and \(p=\frac{1}{2}\)

- \(X_2\) is a binomial random variable with \(n=2\) and \(p=\frac{1}{2}\)

- \(Y\) is a binomial random variable with \(n=5\) and \(p=\frac{1}{2}\)

What is the mean of \(Y\), the sum of two independent random variables? And, what is the variance of \(Y\)?

Solution

We can calculate the mean and variance of \(Y\) in three different ways.

- By recognizing that \(Y\) is a binomial random variable with \(n=5\) and \(p=\frac{1}{2}\), we can use what we know about the mean and variance of a binomial random variable, namely that the mean of \(Y\) is:

\(E(Y)=np=5(\frac{1}{2})=\frac{5}{2}\)

and the variance of \(Y\) is:

\(Var(Y)=np(1-p)=5(\frac{1}{2})(\frac{1}{2})=\frac{5}{4}\)

Since sums of independent random variables are not always going to be binomial, this approach won't always work, of course. It would be good to have alternative methods in hand!

- We could use the linear operator property of expectation. Before doing so, it would be helpful to note that the mean of \(X_1\) is:

\(E(X_1)=np=3(\frac{1}{2})=\frac{3}{2}\)

and the mean of \(X_2\) is:

\(E(X_2)=np=2(\frac{1}{2})=1\)

Now, using the property, we get that the mean of \(Y\) is (thankfully) again \(\frac{5}{2}\):

\(E(Y)=E(X_1+X_2)=E(X_1)+E(X_2)=\dfrac{3}{2}+1=\dfrac{5}{2}\)

Recall that the second equality comes from the linear operator property of expectation. Now, using the linear operator property of expectation to find the variance of \(Y\) takes a bit more work. First, we should note that the variance of \(X_1\) is:

\(Var(X_1)=np(1-p)=3(\frac{1}{2})(\frac{1}{2})=\frac{3}{4}\)

and the variance of \(X_2\) is:

\(Var(X_2)=np(1-p)=2(\frac{1}{2})(\frac{1}{2})=\frac{1}{2}\)

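Now, we can (thankfully) show again that the variance of \(Y\) is \(\frac{5}{4}\):

\(\begin{align*} Var(Y) & = E\left[\left(X_1+X_2-\tfrac{5}{2}\right)^2\right] = E\left[\left(\left(X_1-\tfrac{3}{2}\right)+\left(X_2-1\right)\right)^2\right] \\ & = E\left[\left(X_1-\tfrac{3}{2}\right)^2\right]+2E\left[\left(X_1-\tfrac{3}{2}\right)\left(X_2-1\right)\right]+E\left[\left(X_2-1\right)^2\right] \\ & = \tfrac{3}{4}+2(0)+\tfrac{1}{2}=\tfrac{5}{4} \end{align*}\)

The cross term is 0 because \(X_1\) and \(X_2\) are independent, so their covariance is 0.

Okay, as if two methods aren't enough, we still have one more method we could use.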

- We could use the independence of the two random variables \(X_1\) and \(X_2\), in conjunction with the definition of the expected value of \(Y\) as we know it. First, using the binomial formula, note that we can present the probability mass function of \(X_1\) in tabular form as:

\(\begin{array}{c|cccc} x_1 & 0 & 1 & 2 & 3 \\ \hline f(x_1) & \frac{1}{8} & \frac{3}{8} & \frac{3}{8} & \frac{1}{8} \end{array}\)

And, we can present the probability mass function of \(X_2\) in tabular form as well:

\(\begin{array}{c|ccc} x_2 & 0 & 1 & 2 \\ \hline f(x_2) & \frac{1}{4} & \frac{2}{4} & \frac{1}{4} \end{array}\)

Now, recall that if \(X_1\) and \(X_2\) are independent random variables, then:

\(f(x_1,x_2)=f(x_1)\cdot f(x_2)\)

We can use this result to help determine \(g(y)\), the probability mass function of \(Y\). First note that, since \(Y\) is the sum of \(X_1\) and \(X_2\), the support of \(Y\) is \(\{0, 1, 2, 3, 4, 5\}\). Now, by brute force, we get:

\(g(0)=P(Y=0)=P(X_1=0,X_2=0)=f(0,0)=f_{X_1}(0) \cdot f_{X_2}(0)=\dfrac{1}{8} \cdot \dfrac{1}{4}=\dfrac{1}{32}\)

The second equality comes from the fact that the only way that \(Y\) can equal 0 is if \(X_1=0\) and \(X_2=0\), and the fourth equality comes from the independence of \(X_1\) and \(X_2\). We can make a similar calculation to find the probability that \(Y=1\):

\(g(1)=P(X_1=0,X_2=1)+P(X_1=1,X_2=0)=f_{X_1}(0) \cdot f_{X_2}(1)+f_{X_1}(1) \cdot f_{X_2}(0)=\dfrac{1}{8} \cdot \dfrac{2}{4}+\dfrac{3}{8} \cdot \dfrac{1}{4}=\dfrac{5}{32}\)

The first equality comes from the fact that there are two (mutually exclusive) ways that \(Y\) can equal 1, namely if \(X_1=0\) and \(X_2=1\) or if \(X_1=1\) and \(X_2=0\). The second equality comes from the independence of \(X_1\) and \(X_2\). We can make similar calculations to find \(g(2), g(3), g(4)\), and \(g(5)\). Once we've done that, we can present the p.m.f. of \(Y\) in tabular form as:

\(\begin{array}{c|cccccc} y = x_1 + x_2 & 0 & 1 & 2 & 3 & 4 & 5 \\ \hline g(y) & \frac{1}{32} & \frac{5}{32} & \frac{10}{32} & \frac{10}{32} & \frac{5}{32} & \frac{1}{32} \end{array}\)

Then, it is a straightforward calculation to use the definition of the expected value of a discrete random variable to determine that (again!) the expected value of \(Y\) is \(\frac{5}{2}\):

\(E(Y)=0(\frac{1}{32})+1(\frac{5}{32})+2(\frac{10}{32})+\cdots+5(\frac{1}{32})=\frac{80}{32}=\frac{5}{2}\)

The variance of \(Y\) can be calculated similarly. (Do you want to calculate it one more time?!)
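The brute-force method can also be mechanized. This Python sketch (the helper names `binomial_pmf` and `pmf_of_sum` are our own) builds \(g(y)\) by adding \(f(x_1)f(x_2)\) over all pairs with \(x_1+x_2=y\), exactly as in the calculations of \(g(0)\) and \(g(1)\), and then applies the definitions of the mean and variance using exact fractions. The variance again comes out as \(\frac{5}{4}\).

```python
from fractions import Fraction
from math import comb


def binomial_pmf(n, p):
    """Exact pmf of a binomial(n, p) random variable, as a list indexed by x = 0..n."""
    return [comb(n, x) * p**x * (1 - p) ** (n - x) for x in range(n + 1)]


def pmf_of_sum(f1, f2):
    """pmf of Y = X1 + X2 for independent X1, X2, using f(x1, x2) = f1(x1) * f2(x2)."""
    g = [Fraction(0)] * (len(f1) + len(f2) - 1)
    for x1, p1 in enumerate(f1):
        for x2, p2 in enumerate(f2):
            g[x1 + x2] += p1 * p2
    return g


half = Fraction(1, 2)
g = pmf_of_sum(binomial_pmf(3, half), binomial_pmf(2, half))
mean_y = sum(y * gy for y, gy in enumerate(g))
var_y = sum((y - mean_y) ** 2 * gy for y, gy in enumerate(g))
print("g(y):", [str(gy) for gy in g])
print("E(Y) =", mean_y, " Var(Y) =", var_y)
```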

The following summarizes the method we've used here in calculating the expected value of \(Y\):

\(\begin{align*} E(Y)=E(X_1+X_2) &= \sum\limits_{x_1 \in S_1}\sum\limits_{x_2 \in S_2} (x_1+x_2)f(x_1,x_2)\\ &= \sum\limits_{x_1 \in S_1}\sum\limits_{x_2 \in S_2} (x_1+x_2)f(x_1) f(x_2)\\ &= \sum\limits_{y \in S} yg(y) \end{align*}\)

The first equality comes, of course, from the definition of \(Y\). The second equality comes from the definition of the expectation of a function of discrete random variables. The third equality comes from the independence of the random variables \(X_1\) and \(X_2\). And, the fourth equality comes from the definition of the expected value of \(Y\), as well as the fact that \(g(y)\) can be determined by summing the appropriate joint probabilities of \(X_1\) and \(X_2\).

The following theorem formally states the third method we used in determining the expected value of \(Y\), the function of two independent random variables. We state the theorem without proof. (If you're interested, you can find a proof of it in Hogg, McKean and Craig, 2005.)

Let \(X_1, X_2, \ldots, X_n\) be \(n\) independent random variables that, by their independence, have the joint probability mass function:

\(f_1(x_1)f_2(x_2)\cdots f_n(x_n)\)

Let the random variable \(Y=u(X_1,X_2, \ldots, X_n)\) have the probability mass function \(g(y)\). Then, in the discrete case:

\(E(Y)=\sum\limits_y yg(y)=\sum\limits_{x_1}\sum\limits_{x_2}\cdots\sum\limits_{x_n}u(x_1,x_2,\ldots,x_n) f_1(x_1)f_2(x_2)\cdots f_n(x_n)\)

provided that these summations exist. For continuous random variables, integrals replace the summations.

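In the special case that we are looking for the expectation of the product of functions of \(n\) independent random variables, the following theorem will help us out. If \(X_1, X_2, \ldots, X_n\) are independent random variables and the expectations \(E[u_i(X_i)]\), \(i=1, 2, \ldots, n\), exist, then:

\(E[u_1(X_1)u_2(X_2)\cdots u_n(X_n)]=E[u_1(X_1)]E[u_2(X_2)]\cdots E[u_n(X_n)]\)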

That is, the expectation of the product is the product of the expectations.

Proof

For the sake of concreteness, let's assume that the random variables are discrete. Then, the definition of expectation gives us:

\(E[u_1(X_1)u_2(X_2)\cdots u_n(X_n)]=\sum\limits_{x_1}\sum\limits_{x_2}\cdots \sum\limits_{x_n} u_1(x_1)u_2(x_2)\cdots u_n(x_n) f_1(x_1)f_2(x_2)\cdots f_n(x_n)\)

Then, since each factor \(u_i(x_i)f_i(x_i)\) depends only on its own summation index \(x_i\), it can be pulled through the summation signs for the other indices, so the multiple sum factors into a product of single sums:

\(E[u_1(X_1)u_2(X_2)\cdots u_n(X_n)]=\sum\limits_{x_1}u_1(x_1)f_1(x_1) \sum\limits_{x_2}u_2(x_2)f_2(x_2)\cdots \sum\limits_{x_n}u_n(x_n)f_n(x_n)\)

Then, by the definition, in the discrete case, of the expected value of \(u_i(X_i)\), our expectation reduces to:

\(E[u_1(X_1)u_2(X_2)\cdots u_n(X_n)]=E[u_1(X_1)]E[u_2(X_2)]\cdots E[u_n(X_n)]\)

Our proof is complete. If our random variables are instead continuous, the proof would be similar. We would just need to make the obvious change of replacing the summation signs with integrals.

Let's return to our example in which we toss a penny three times, and let \(X_1\) denote the number of heads that we get in the three tosses. And, again toss a second penny two times, and let \(X_2\) denote the number of heads we get in those two tosses. In our previous work, we learned that:

- \(E(X_1)=\frac{3}{2}\) and \(\text{Var}(X_1)=\frac{3}{4}\)

- \(E(X_2)=1\) and \(\text{Var}(X_2)=\frac{1}{2}\)

What is the expected value of \(X_1^2X_2\)?

Solution

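We'll use the fact that the expectation of the product is the product of the expectations, along with \(E(X_1^2)=Var(X_1)+[E(X_1)]^2\):

\(E(X_1^2X_2)=E(X_1^2)E(X_2)=\left(\dfrac{3}{4}+\dfrac{9}{4}\right)(1)=3\)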
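An exact brute-force check of this expectation, as a Python sketch: it uses the probability mass functions of \(X_1\) and \(X_2\) tabulated earlier in this lesson and Python's `fractions.Fraction` for exact arithmetic, computing the left side from the joint pmf \(f_1(x_1)f_2(x_2)\) and the right side as \(E(X_1^2)E(X_2)\). Both come out to 3.

```python
from fractions import Fraction
from itertools import product

# pmfs from the tables earlier in the lesson: X1 ~ binomial(3, 1/2), X2 ~ binomial(2, 1/2).
f1 = {0: Fraction(1, 8), 1: Fraction(3, 8), 2: Fraction(3, 8), 3: Fraction(1, 8)}
f2 = {0: Fraction(1, 4), 1: Fraction(2, 4), 2: Fraction(1, 4)}

# Left side: sum of x1^2 * x2 * f1(x1) * f2(x2) over the joint support.
lhs = sum(x1**2 * x2 * f1[x1] * f2[x2] for x1, x2 in product(f1, f2))

# Right side: product of the separate expectations E(X1^2) and E(X2).
e_x1_sq = sum(x1**2 * p for x1, p in f1.items())
e_x2 = sum(x2 * p for x2, p in f2.items())
rhs = e_x1_sq * e_x2
print("E(X1^2 X2) =", lhs, " E(X1^2) E(X2) =", rhs)
```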

24.3 - Mean and Variance of Linear Combinations

We are still working towards finding the theoretical mean and variance of the sample mean:

\(\bar{X}=\dfrac{X_1+X_2+\cdots+X_n}{n}\)

If we re-write the formula for the sample mean just a bit:

\(\bar{X}=\dfrac{1}{n} X_1+\dfrac{1}{n} X_2+\cdots+\dfrac{1}{n} X_n\)

we can see more clearly that the sample mean is a linear combination of the random variables \(X_1, X_2, \ldots, X_n\). That explains the title and subject of this page: here, we'll add a few more tools to our toolbox, namely how to determine the mean and variance of a linear combination of random variables \(X_1, X_2, \ldots, X_n\). Before presenting and proving the major theorem on this page, let's revisit, by way of example, why we would expect the sample mean and sample variance to have a theoretical mean and variance.

Example 24-2

A statistics instructor conducted a survey in her class. The instructor was interested in learning how many siblings, on average, the students at Penn State University have. She took a random sample of \(n=4\) students and asked each student how many siblings he/she has. The resulting data were: 0, 2, 1, 1. In an attempt to summarize the data she collected, the instructor calculated the sample mean and sample variance, getting:

\(\bar{X}=\dfrac{4}{4}=1\) and \(S^2=\dfrac{(0-1)^2+(2-1)^2+(1-1)^2+(1-1)^2}{3}=\dfrac{2}{3}\)

The instructor realized though, that if she had asked a different sample of \(n=4\) students how many siblings they have, she'd probably get different results. So, she took a different random sample of \(n=4\) students. The resulting data were: 4, 1, 2, 1. Calculating the sample mean and variance once again, she determined:

\(\bar{X}=\dfrac{8}{4}=2\) and \(S^2=\dfrac{(4-2)^2+(1-2)^2+(2-2)^2+(1-2)^2}{3}=\dfrac{6}{3}=2\)

Hmmm, the instructor thought that was quite a different result from the first sample, so she decided to take yet another sample of \(n=4\) students. Doing so, the resulting data were: 5, 3, 2, 2. Calculating the sample mean and variance yet again, she determined:

\(\bar{X}=\dfrac{12}{4}=3\) and \(S^2=\dfrac{(5-3)^2+(3-3)^2+(2-3)^2+(2-3)^2}{3}=\dfrac{6}{3}=2\)
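Here is a small Python sketch reproducing the instructor's three calculations with the standard `statistics` module. Passing `Fraction` values keeps the arithmetic exact, and `statistics.variance` uses the \(n-1\) divisor, matching \(S^2\).

```python
from fractions import Fraction
from statistics import mean, variance

# The three samples of n = 4 sibling counts reported above.
samples = [[0, 2, 1, 1], [4, 1, 2, 1], [5, 3, 2, 2]]

summaries = []
for data in samples:
    exact = [Fraction(x) for x in data]  # exact arithmetic avoids float rounding
    summaries.append((mean(exact), variance(exact)))  # variance() divides by n - 1

for data, (x_bar, s_sq) in zip(samples, summaries):
    print(data, "x_bar =", x_bar, " S^2 =", s_sq)
```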

That's enough of this! I think you can probably see where we are going with this example. It is very clear that the values of the sample mean \(\bar{X}\) and the sample variance \(S^2\) depend on the selected random sample. That is, \(\bar{X}\) and \(S^2\) are random variables in their own right. Therefore, they themselves should each have a particular:

- probability distribution (called a "sampling distribution"),

- mean, and

- variance.

We are still in the hunt for all three of these items. The next theorem will help move us closer towards finding the mean and variance of the sample mean \(\bar{X}\).

Suppose \(X_1, X_2, \ldots, X_n\) are \(n\) independent random variables with means \(\mu_1,\mu_2,\cdots,\mu_n\) and variances \(\sigma^2_1,\sigma^2_2,\cdots,\sigma^2_n\).

Then, the mean and variance of the linear combination \(Y=\sum\limits_{i=1}^n a_i X_i\), where \(a_1,a_2, \ldots, a_n\) are real constants, are:

\(\mu_Y=\sum\limits_{i=1}^n a_i \mu_i\)

and:

\(\sigma^2_Y=\sum\limits_{i=1}^n a_i^2 \sigma^2_i\)

respectively.

Proof

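Let's start with the proof for the mean first. By the linear operator property of expectation:

\(\mu_Y=E(Y)=E\left(\sum\limits_{i=1}^n a_i X_i\right)=\sum\limits_{i=1}^n a_i E(X_i)=\sum\limits_{i=1}^n a_i \mu_i\)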

Now for the proof for the variance. Starting with the definition of the variance of \(Y\), we have:

\(\sigma^2_Y=Var(Y)=E[(Y-\mu_Y)^2]\)

Now, substituting what we know about \(Y\) and the mean of \(Y\), we have:

\(\sigma^2_Y=E\left[\left(\sum\limits_{i=1}^n a_i X_i-\sum\limits_{i=1}^n a_i \mu_i\right)^2\right]\)

Because the summation signs have the same index (\(i=1\) to \(n\)), we can replace the two summation signs with one summation sign:

\(\sigma^2_Y=E\left[\left(\sum\limits_{i=1}^n( a_i X_i-a_i \mu_i)\right)^2\right]\)

And, we can factor out the constants \(a_i\):

\(\sigma^2_Y=E\left[\left(\sum\limits_{i=1}^n a_i (X_i-\mu_i)\right)^2\right]\)

Now, let's rewrite the squared term as the product of two terms. In doing so, use an index of \(i\) on the first summation sign, and an index of \(j\) on the second summation sign:

\(\sigma^2_Y=E\left[\left(\sum\limits_{i=1}^n a_i (X_i-\mu_i)\right) \left(\sum\limits_{j=1}^n a_j (X_j-\mu_j)\right) \right]\)

Now, let's pull the summation signs together:

\(\sigma^2_Y=E\left[\sum\limits_{i=1}^n \sum\limits_{j=1}^n a_i a_j (X_i-\mu_i) (X_j-\mu_j) \right]\)

Then, by the linear operator property of expectation, we can distribute the expectation:

\(\sigma^2_Y=\sum\limits_{i=1}^n \sum\limits_{j=1}^n a_i a_j E\left[(X_i-\mu_i) (X_j-\mu_j) \right]\)

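Now, let's rewrite the variance of \(Y\) by splitting the double sum into the terms with \(i=j\) and the terms with \(i\ne j\). When \(i=j\), the expectation term is the variance of \(X_i\), and when \(i\ne j\), the expectation term is the covariance between \(X_i\) and \(X_j\), which, by the assumed independence, is 0:

\(\sigma^2_Y=\sum\limits_{i=1}^n a_i^2 E\left[(X_i-\mu_i)^2\right]+\sum\limits_{i\ne j} a_i a_j E\left[(X_i-\mu_i)(X_j-\mu_j)\right]=\sum\limits_{i=1}^n a_i^2 E\left[(X_i-\mu_i)^2\right]+0\)

Writing out the remaining sum, we get: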

\(\sigma^2_Y=a_1^2 E\left[(X_1-\mu_1)^2\right]+a_2^2 E\left[(X_2-\mu_2)^2\right]+\cdots+a_n^2 E\left[(X_n-\mu_n)^2\right]\)

And, simplifying yet more using variance notation:

\(\sigma^2_Y=a_1^2 \sigma^2_1+a_2^2 \sigma^2_2+\cdots+a_n^2 \sigma^2_n\)

Finally, we have:

\(\sigma^2_Y=\sum\limits_{i=1}^n a_i^2 \sigma^2_i\)

as was to be proved.

Example 24-3

Let \(X_1\) and \(X_2\) be independent random variables. Suppose the mean and variance of \(X_1\) are 2 and 4, respectively. Suppose, the mean and variance of \(X_2\) are 3 and 5 respectively. What is the mean and variance of \(X_1+X_2\)?

Solution

The mean of the sum is:

\(E(X_1+X_2)=E(X_1)+E(X_2)=2+3=5\)

and the variance of the sum is:

\(Var(X_1+X_2)=(1)^2Var(X_1)+(1)^2Var(X_2)=4+5=9\)

What is the mean and variance of \(X_1-X_2\)?

Solution

The mean of the difference is:

\(E(X_1-X_2)=E(X_1)-E(X_2)=2-3=-1\)

and the variance of the difference is:

\(Var(X_1-X_2)=Var(X_1+(-1)X_2)=(1)^2Var(X_1)+(-1)^2Var(X_2)=4+5=9\)

That is, the variance of the difference in the two random variables is the same as the variance of the sum of the two random variables.

What is the mean and variance of \(3X_1+4X_2\)?

Solution

The mean of the linear combination is:

\(E(3X_1+4X_2)=3E(X_1)+4E(X_2)=3(2)+4(3)=18\)

and the variance of the linear combination is:

\(Var(3X_1+4X_2)=(3)^2Var(X_1)+(4)^2Var(X_2)=9(4)+16(5)=116\)
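The theorem turns Example 24-3 into simple bookkeeping. In this Python sketch, the helper name `linear_combination_moments` is our own; it just applies \(\mu_Y=\sum a_i\mu_i\) and \(\sigma^2_Y=\sum a_i^2\sigma^2_i\), so, like the theorem, it is only valid for independent \(X_i\).

```python
def linear_combination_moments(coefficients, means, variances):
    """Mean and variance of Y = sum(a_i * X_i) for independent X_i.

    Implements mu_Y = sum(a_i * mu_i) and sigma_Y^2 = sum(a_i^2 * sigma_i^2);
    the variance formula relies on independence (all covariances zero).
    """
    mu_y = sum(a * mu for a, mu in zip(coefficients, means))
    var_y = sum(a**2 * var for a, var in zip(coefficients, variances))
    return mu_y, var_y


means, variances = [2, 3], [4, 5]  # Example 24-3
results = {
    "X1 + X2": linear_combination_moments([1, 1], means, variances),
    "X1 - X2": linear_combination_moments([1, -1], means, variances),
    "3X1 + 4X2": linear_combination_moments([3, 4], means, variances),
}
for name, (mu_y, var_y) in results.items():
    print(f"{name}: mean {mu_y}, variance {var_y}")
```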

24.4 - Mean and Variance of Sample Mean

We'll finally accomplish what we set out to do in this lesson, namely to determine the theoretical mean and variance of the random variable \(\bar{X}\). In doing so, we'll discover the major implications of the theorem that we learned on the previous page.

Let \(X_1,X_2,\ldots, X_n\) be a random sample of size \(n\) from a distribution (population) with mean \(\mu\) and variance \(\sigma^2\). What is the mean, that is, the expected value, of the sample mean \(\bar{X}\)?

Solution

Starting with the definition of the sample mean, we have:

\(E(\bar{X})=E\left(\dfrac{X_1+X_2+\cdots+X_n}{n}\right)\)

Then, using the linear operator property of expectation, we get:

\(E(\bar{X})=\dfrac{1}{n} [E(X_1)+E(X_2)+\cdots+E(X_n)]\)

Now, the \(X_i\) are identically distributed, which means they have the same mean \(\mu\). Therefore, replacing \(E(X_i)\) with the alternative notation \(\mu\), we get:

\(E(\bar{X})=\dfrac{1}{n}[\mu+\mu+\cdots+\mu]\)

Now, because there are \(n\) \(\mu\)'s in the above formula, we can rewrite the expected value as:

\(E(\bar{X})=\dfrac{1}{n}[n \mu]=\mu \)

We have shown that the mean (or expected value, if you prefer) of the sample mean \(\bar{X}\) is \(\mu\). That is, we have shown that the mean of \(\bar{X}\) is the same as the mean of the individual \(X_i\).

Let \(X_1,X_2,\ldots, X_n\) be a random sample of size \(n\) from a distribution (population) with mean \(\mu\) and variance \(\sigma^2\). What is the variance of \(\bar{X}\)?

Solution

Starting with the definition of the sample mean, we have:

\(Var(\bar{X})=Var\left(\dfrac{X_1+X_2+\cdots+X_n}{n}\right)\)

Rewriting the term on the right so that it is clear that we have a linear combination of \(X_i\)'s, we get:

\(Var(\bar{X})=Var\left(\dfrac{1}{n}X_1+\dfrac{1}{n}X_2+\cdots+\dfrac{1}{n}X_n\right)\)

Then, applying the theorem on the last page, we get:

\(Var(\bar{X})=\dfrac{1}{n^2}Var(X_1)+\dfrac{1}{n^2}Var(X_2)+\cdots+\dfrac{1}{n^2}Var(X_n)\)

Now, the \(X_i\) are identically distributed, which means they have the same variance \(\sigma^2\). Therefore, replacing \(\text{Var}(X_i)\) with the alternative notation \(\sigma^2\), we get:

\(Var(\bar{X})=\dfrac{1}{n^2}[\sigma^2+\sigma^2+\cdots+\sigma^2]\)

Now, because there are \(n\) \(\sigma^2\)'s in the above formula, we can rewrite the variance as:

\(Var(\bar{X})=\dfrac{1}{n^2}[n\sigma^2]=\dfrac{\sigma^2}{n}\)

Our result indicates that as the sample size \(n\) increases, the variance of the sample mean decreases. That suggests that on the previous page, if the instructor had taken larger samples of students, she would have seen less variability in the sample means that she was obtaining. This is a good thing, but of course, in general, the costs of research studies no doubt increase as the sample size \(n\) increases. There is always a trade-off!
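To see \(Var(\bar{X})=\sigma^2/n\) numerically, the following Python sketch draws many random samples from a uniform(0, 1) population (an illustrative assumption, for which \(\sigma^2=1/12\)) and compares the empirical variance of the resulting sample means with \(\sigma^2/n\) for a few sample sizes. The helper name, seed, and number of repetitions are our own choices.

```python
import random
from statistics import fmean, pvariance


def simulated_variance_of_mean(n, n_reps=50_000, seed=4):
    """Empirical variance of X-bar over n_reps samples of size n from uniform(0, 1)."""
    rng = random.Random(seed)
    sample_means = [fmean(rng.random() for _ in range(n)) for _ in range(n_reps)]
    return pvariance(sample_means)


sigma_sq = 1 / 12  # variance of a uniform(0, 1) random variable
for n in (1, 4, 16):
    print(f"n = {n:2d}: simulated Var(X-bar) = {simulated_variance_of_mean(n):.5f}, "
          f"sigma^2/n = {sigma_sq / n:.5f}")
```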

24.5 - More Examples

On this page, we'll just take a look at a few examples that use the material and methods we learned about in this lesson.

Example 24-4

If \(X_1,X_2,\ldots, X_n\) are a random sample from a population with mean \(\mu\) and variance \(\sigma^2\), then what is:

\(E[(X_i-\mu)(X_j-\mu)]\)

for \(i\ne j\), where \(i, j = 1, 2, \ldots, n\)?

Solution

The fact that \(X_1,X_2,\ldots, X_n\) constitute a random sample tells us that (1) \(X_i\) is independent of \(X_j\), for all \(i\ne j\), and (2) the \(X_i\) are identically distributed, so that \(E(X_i)=E(X_j)=\mu\). Now, we know from our previous work that if \(X_i\) is independent of \(X_j\), for \(i\ne j\), then the covariance between \(X_i\) and \(X_j\) is 0. That is:

\(E[(X_i-\mu)(X_j-\mu)]=Cov(X_i,X_j)=0\)

Example 24-5

Let \(X_1, X_2, X_3\) be a random sample of size \(n=3\) from a distribution with the geometric probability mass function:

\(f(x)=\left(\dfrac{3}{4}\right) \left(\dfrac{1}{4}\right)^{x-1}\)

for \(x=1, 2, 3, \ldots\). What is \(P(\max X_i\le 2)\)?

Solution

The only way that the maximum of the \(X_i\) will be less than or equal to 2 is if all of the \(X_i\) are less than or equal to 2. That is:

\(P(\max X_i\leq 2)=P(X_1\leq 2,X_2\leq 2,X_3\leq 2)\)

Now, because \(X_1,X_2,X_3\) are a random sample, we know that (1) \(X_i\) is independent of \(X_j\), for all \(i\ne j\), and (2) the \(X_i\) are identically distributed. Therefore:

\(P(\max X_i\leq 2)=P(X_1\leq 2)P(X_2\leq 2)P(X_3\leq 2)=[P(X_1\leq 2)]^3\)

The first equality comes from the independence of the \(X_i\), and the second equality comes from the fact that the \(X_i\) are identically distributed. Now, the probability that \(X_1\) is less than or equal to 2 is:

\(P(X_1\leq 2)=P(X_1=1)+P(X_1=2)=\left(\dfrac{3}{4}\right) \left(\dfrac{1}{4}\right)^{1-1}+\left(\dfrac{3}{4}\right) \left(\dfrac{1}{4}\right)^{2-1}=\dfrac{3}{4}+\dfrac{3}{16}=\dfrac{15}{16}\)

Therefore, the probability that the maximum of the \(X_i\) is less than or equal to 2 is:

\(P(\max X_i\leq 2)=[P(X_1\leq 2)]^3=\left(\dfrac{15}{16}\right)^3=\dfrac{3375}{4096}\approx 0.824\)
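The arithmetic can be confirmed exactly, and cross-checked by simulation, with this Python sketch. The helper names `geometric_pmf` and `draw_geometric` are our own; `draw_geometric` counts independent trials, each a success with probability \(\frac{3}{4}\), up to and including the first success, which has the pmf \(f(x)\) of Example 24-5.

```python
import random
from fractions import Fraction


def geometric_pmf(x, p=Fraction(3, 4)):
    """f(x) = p * (1 - p)**(x - 1), x = 1, 2, 3, ...; p = 3/4 gives the pmf of Example 24-5."""
    return p * (1 - p) ** (x - 1)


# Exact answer: independence and identical distributions give [P(X_1 <= 2)]^3.
p_le_2 = geometric_pmf(1) + geometric_pmf(2)
p_max_le_2 = p_le_2**3
print(p_le_2, p_max_le_2, round(float(p_max_le_2), 3))


def draw_geometric(rng, p=0.75):
    """Number of independent trials up to and including the first success."""
    trials = 1
    while rng.random() >= p:  # a failure occurs with probability 1 - p
        trials += 1
    return trials


# Monte Carlo cross-check with 100,000 simulated random samples of size n = 3.
rng = random.Random(5)
n_reps = 100_000
hits = sum(max(draw_geometric(rng) for _ in range(3)) <= 2 for _ in range(n_reps))
print("simulated:", hits / n_reps)
```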

Lesson 25: The Moment-Generating Function Technique

Overview

In the previous lesson, we learned that the expected value of the sample mean \(\bar{X}\) is the population mean \(\mu\). We also learned that the variance of the sample mean \(\bar{X}\) is \(\dfrac{\sigma^2}{n}\), that is, the population variance divided by the sample size \(n\). We have not yet determined the probability distribution of the sample mean when, say, the random sample comes from a normal distribution with mean \(\mu\) and variance \(\sigma^2\). We are going to tackle that in the next lesson! Before we do that, though, we are going to want to put a few more tools into our toolbox. We have already learned a few techniques for finding the probability distribution of a function of random variables, namely the distribution function technique and the change-of-variable technique. In this lesson, we'll learn yet another technique called the moment-generating function technique. We'll use the technique in this lesson to learn, among other things, the distribution of sums of chi-square random variables. Then, in the next lesson, we'll use the technique to find (finally) the probability distribution of the sample mean when the random sample comes from a normal distribution with mean \(\mu\) and variance \(\sigma^2\).

Objectives

- To refresh our memory of the uniqueness property of moment-generating functions.

- To learn how to calculate the moment-generating function of a linear combination of \(n\) independent random variables.

- To learn how to calculate the moment-generating function of a linear combination of \(n\) independent and identically distributed random variables.

- To learn the additive property of independent chi-square random variables.

- To use the moment-generating function technique to prove the additive property of independent chi-square random variables.

- To understand the steps involved in each of the proofs in the lesson.

- To be able to apply the methods learned in the lesson to new problems.

25.1 - Uniqueness Property of M.G.F.s

Recall that the moment-generating function:

\(M_X(t)=E(e^{tX})\)

uniquely defines the distribution of a random variable, provided the expectation is finite for all \(t\) in some open interval containing 0. That is, if you can show that the moment-generating function of \(\bar{X}\) is the same as some known moment-generating function, then \(\bar{X}\) follows the same distribution. So, one strategy for finding the distribution of a function of random variables is:

- To find the moment-generating function of the function of random variables

- To compare the calculated moment-generating function to known moment-generating functions

- If the calculated moment-generating function is the same as some known moment-generating function of \(X\), then the function of the random variables follows the same probability distribution as \(X\)

Example 25-1

In the previous lesson, we looked at an example that involved tossing a penny three times and letting \(X_1\) denote the number of heads that we get in the three tosses. In the same example, we suggested tossing a second penny two times and letting \(X_2\) denote the number of heads we get in those two tosses. We let:

\(Y=X_1+X_2\)

denote the number of heads in five tosses. What is the probability distribution of \(Y\)?

Solution

We know that:

- \(X_1\) is a binomial random variable with \(n=3\) and \(p=\frac{1}{2}\)

- \(X_2\) is a binomial random variable with \(n=2\) and \(p=\frac{1}{2}\)

Therefore, based on what we know of the moment-generating function of a binomial random variable, the moment-generating function of \(X_1\) is:

\(M_{X_1}(t)=\left(\dfrac{1}{2}+\dfrac{1}{2} e^t\right)^3\)

And, similarly, the moment-generating function of \(X_2\) is:

\(M_{X_2}(t)=\left(\dfrac{1}{2}+\dfrac{1}{2} e^t\right)^2\)

Now, because \(X_1\) and \(X_2\) are independent random variables, the random variable \(Y\) is the sum of independent random variables. Therefore, the moment-generating function of \(Y\) is:

\(M_Y(t)=E(e^{tY})=E(e^{t(X_1+X_2)})=E(e^{tX_1} \cdot e^{tX_2} )=E(e^{tX_1}) \cdot E(e^{tX_2} )\)

The first equality comes from the definition of the moment-generating function of the random variable \(Y\). The second equality comes from the definition of \(Y\). The third equality comes from the properties of exponents. And, the fourth equality comes from the expectation of the product of functions of independent random variables. Now, substituting in the known moment-generating functions of \(X_1\) and \(X_2\), we get:

\(M_Y(t)=\left(\dfrac{1}{2}+\dfrac{1}{2} e^t\right)^3 \cdot \left(\dfrac{1}{2}+\dfrac{1}{2} e^t\right)^2=\left(\dfrac{1}{2}+\dfrac{1}{2} e^t\right)^5\)

That is, \(Y\) has the same moment-generating function as a binomial random variable with \(n=5\) and \(p=\frac{1}{2}\). Therefore, by the uniqueness property of moment-generating functions, \(Y\) must be a binomial random variable with \(n=5\) and \(p=\frac{1}{2}\). (Of course, we already knew that!)
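The moment-generating function argument can be cross-checked directly. The minimal Python sketch below (our own illustration, not part of the lesson) convolves the binomial(3, 1/2) and binomial(2, 1/2) probability mass functions, which is the brute-force way to get the distribution of a sum of independent discrete random variables, and confirms that the result is the binomial(5, 1/2) pmf. It also evaluates \(E(e^{tY})\) numerically at a few values of \(t\) and compares it with \(\left(\frac{1}{2}+\frac{1}{2}e^t\right)^5\). The helper names `binom_pmf`, `convolve`, and `mgf_from_pmf` are hypothetical.

```python
import math
from fractions import Fraction

def binom_pmf(n, p):
    # List of P(X = k) for k = 0, 1, ..., n
    return [math.comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n + 1)]

def convolve(f, g):
    # pmf of X1 + X2 for independent X1 ~ f and X2 ~ g, both supported on 0, 1, 2, ...
    h = [Fraction(0)] * (len(f) + len(g) - 1)
    for i, a in enumerate(f):
        for j, b in enumerate(g):
            h[i + j] += a * b
    return h

def mgf_from_pmf(pmf, t):
    # M(t) = E(e^{tY}) = sum over y of e^{ty} P(Y = y)
    return sum(math.exp(t * y) * float(prob) for y, prob in enumerate(pmf))

half = Fraction(1, 2)
pmf_y = convolve(binom_pmf(3, half), binom_pmf(2, half))

print(pmf_y == binom_pmf(5, half))  # True
for t in (-1.0, 0.0, 0.5, 1.0):
    closed_form = (0.5 + 0.5 * math.exp(t)) ** 5
    print(t, math.isclose(mgf_from_pmf(pmf_y, t), closed_form))  # True each time
```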

It seems that we could generalize the way in which we calculated, in the above example, the moment-generating function of \(Y\), the sum of two independent random variables. Indeed, we can, as we'll see on the next page!

25.2 - M.G.F.s of Linear Combinations

Theorem

If \(X_1, X_2, \ldots, X_n\) are \(n\) independent random variables with respective moment-generating functions \(M_{X_i}(t)=E(e^{tX_i})\) for \(i=1, 2, \ldots, n\), then the moment-generating function of the linear combination:

\(Y=\sum\limits_{i=1}^n a_i X_i\)

is:

\(M_Y(t)=\prod\limits_{i=1}^n M_{X_i}(a_it)\)

Proof

The proof is very similar to the calculation we made in the example on the previous page. That is:

\begin{align} M_Y(t) &= E[e^{tY}]\\ &= E[e^{t(a_1X_1+a_2X_2+\ldots+a_nX_n)}]\\ &= E[e^{a_1tX_1}]E[e^{a_2tX_2}]\ldots E[e^{a_ntX_n}]\\ &= M_{X_1}(a_1t)M_{X_2}(a_2t)\ldots M_{X_n}(a_nt)\\ &= \prod\limits_{i=1}^n M_{X_i}(a_it)\\ \end{align}

The first equality comes from the definition of the moment-generating function of the random variable \(Y\). The second equality comes from the given definition of \(Y\). The third equality comes from the properties of exponents, as well as from the expectation of the product of functions of independent random variables. The fourth equality comes from the definition of the moment-generating function of the random variables \(X_i\), for \(i=1, 2, \ldots, n\). And, the fifth equality comes from using product notation to write the product of the moment-generating functions.
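To see the theorem in action on a case where everything can be enumerated, here is a minimal Python sketch. It assumes, purely for illustration, three independent Bernoulli random variables with different success probabilities \(p_i\) and arbitrary coefficients \(a_i\) (the values in `ps` and `coeffs` are ours, not the lesson's). It computes \(E(e^{tY})\) directly from the joint pmf and compares it with \(\prod_{i} M_{X_i}(a_it)\).

```python
import math
from itertools import product

# Illustrative choice (not from the text): independent Bernoulli(p_i) variables
ps = [0.2, 0.5, 0.9]        # success probabilities p_i
coeffs = [1.0, -2.0, 0.5]   # coefficients a_i in Y = sum of a_i X_i

def bernoulli_mgf(p, t):
    # M_X(t) = E(e^{tX}) = (1 - p) + p e^t
    return (1 - p) + p * math.exp(t)

def mgf_by_enumeration(t):
    # E(e^{tY}) computed from the joint pmf, which factors because the X_i are independent
    total = 0.0
    for xs in product((0, 1), repeat=len(ps)):
        prob = math.prod(p if x == 1 else 1 - p for p, x in zip(ps, xs))
        y = sum(a * x for a, x in zip(coeffs, xs))
        total += prob * math.exp(t * y)
    return total

def mgf_by_theorem(t):
    # Product over i of M_{X_i}(a_i t)
    return math.prod(bernoulli_mgf(p, a * t) for p, a in zip(ps, coeffs))

for t in (-1.0, 0.0, 0.3, 2.0):
    print(t, math.isclose(mgf_by_enumeration(t), mgf_by_theorem(t)))
```

Note that a negative coefficient (here \(a_2=-2\)) simply evaluates that variable's moment-generating function at a negative argument.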

While the theorem is useful in its own right, the following corollary is perhaps even more useful when dealing not just with independent random variables, but also random variables that are identically distributed — two characteristics that we get, of course, when we take a random sample.

Corollary

If \(X_1, X_2, \ldots, X_n\) are observations of a random sample from a population (distribution) with moment-generating function \(M(t)\), then:

- The moment-generating function of the linear combination \(Y=\sum\limits_{i=1}^n X_i\) is \(M_Y(t)=\prod\limits_{i=1}^n M(t)=[M(t)]^n\).

- The moment-generating function of the sample mean \(\bar{X}=\sum\limits_{i=1}^n \left(\dfrac{1}{n}\right) X_i\) is \(M_{\bar{X}}(t)=\prod\limits_{i=1}^n M\left(\dfrac{t}{n}\right)=\left[M\left(\dfrac{t}{n}\right)\right]^n\).

Proof

- Use the preceding theorem with \(a_i=1\) for \(i=1, 2, \ldots, n\).

- Use the preceding theorem with \(a_i=\frac{1}{n}\) for \(i=1, 2, \ldots, n\).
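Both parts of the corollary can be verified by brute force for a small random sample. The Python sketch below assumes, only as an illustration, that the population is a single roll of a fair six-sided die and that \(n=3\); it averages \(e^{t\cdot\text{scale}\cdot(X_1+X_2+X_3)}\) over all \(6^3=216\) equally likely outcomes and compares the result with \([M(t)]^n\) (scale 1, the sum) and \([M(t/n)]^n\) (scale \(1/n\), the sample mean). The names `die_mgf` and `enumerated_mgf` are hypothetical.

```python
import math
from itertools import product

FACES = range(1, 7)  # illustrative population: one roll of a fair six-sided die
n = 3

def die_mgf(t):
    # M(t) = (1/6) * sum_{k=1}^{6} e^{kt}
    return sum(math.exp(k * t) for k in FACES) / 6

def enumerated_mgf(t, scale):
    # E(exp(t * scale * (X_1 + ... + X_n))) over all 6**n equally likely outcomes
    outcomes = list(product(FACES, repeat=n))
    return sum(math.exp(t * scale * sum(xs)) for xs in outcomes) / len(outcomes)

for t in (-0.5, 0.1, 0.4):
    sum_ok = math.isclose(enumerated_mgf(t, 1), die_mgf(t) ** n)            # M_Y(t) = [M(t)]^n
    mean_ok = math.isclose(enumerated_mgf(t, 1 / n), die_mgf(t / n) ** n)   # M_Xbar(t) = [M(t/n)]^n
    print(t, sum_ok, mean_ok)
```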

Example 25-2

Let \(X_1, X_2\), and \(X_3\) denote a random sample of size 3 from a gamma distribution with \(\alpha=7\) and \(\theta=5\). Let \(Y\) be the sum of the three random variables:

\(Y=X_1+X_2+X_3\)

What is the distribution of \(Y\)?

Solution

The moment-generating function of a gamma random variable \(X\) with \(\alpha=7\) and \(\theta=5\) is:

\(M_X(t)=\dfrac{1}{(1-5t)^7}\)

for \(t<\frac{1}{5}\). Therefore, the corollary tells us that the moment-generating function of \(Y\) is:

\(M_Y(t)=[M_{X_1}(t)]^3=\left(\dfrac{1}{(1-5t)^7}\right)^3=\dfrac{1}{(1-5t)^{21}}\)

for \(t<\frac{1}{5}\), which is the moment-generating function of a gamma random variable with \(\alpha=21\) and \(\theta=5\). Therefore, \(Y\) must follow a gamma distribution with \(\alpha=21\) and \(\theta=5\).
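A simulation gives a feel for this result. The Python sketch below (an illustration under stated assumptions, not the lesson's method) draws many values of \(Y=X_1+X_2+X_3\) using the standard library's `random.gammavariate(alpha, beta)`, whose second argument is a scale parameter playing the role of \(\theta\). It compares the sample mean and variance with \(\alpha\theta=105\) and \(\alpha\theta^2=525\), and compares the empirical \(P(Y\leq 105)\) with the gamma(21, 5) CDF. Because \(\alpha=21\) is an integer, that CDF has the closed form \(1-\sum_{k=0}^{\alpha-1} e^{-y/\theta}(y/\theta)^k/k!\); the helper `erlang_cdf` and the seed are our own choices.

```python
import math
import random

random.seed(414)              # arbitrary seed, for reproducibility only
ALPHA, THETA, N_SIMS = 7, 5, 50_000

# random.gammavariate(alpha, beta) treats beta as a scale parameter, like theta here
ys = [sum(random.gammavariate(ALPHA, THETA) for _ in range(3)) for _ in range(N_SIMS)]

mean_y = sum(ys) / N_SIMS
var_y = sum((y - mean_y) ** 2 for y in ys) / (N_SIMS - 1)

def erlang_cdf(y, alpha, theta):
    # P(Y <= y) for a gamma(alpha, theta) variable when alpha is a positive integer
    x = y / theta
    return 1 - sum(math.exp(-x) * x**k / math.factorial(k) for k in range(alpha))

empirical = sum(y <= 105 for y in ys) / N_SIMS
# gamma(21, 5) predicts mean 21*5 = 105 and variance 21*5**2 = 525
print(round(mean_y, 1), round(var_y, 1), round(empirical, 3), round(erlang_cdf(105, 21, 5), 3))
```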

What is the distribution of the sample mean \(\bar{X}\)?

Solution

Again, the moment-generating function of a gamma random variable \(X\) with \(\alpha=7\) and \(\theta=5\) is:

\(M_X(t)=\dfrac{1}{(1-5t)^7}\)

for \(t<\frac{1}{5}\). Therefore, the corollary tells us that the moment-generating function of \(\bar{X}\) is:

\(M_{\bar{X}}(t)=\left[M_{X_1}\left(\dfrac{t}{3}\right)\right]^3=\left(\dfrac{1}{(1-5(t/3))^7}\right)^3=\dfrac{1}{(1-(5/3)t)^{21}}\)

for \(t<\frac{3}{5}\), which is the moment-generating function of a gamma random variable with \(\alpha=21\) and \(\theta=\frac{5}{3}\). Therefore, \(\bar{X}\) must follow a gamma distribution with \(\alpha=21\) and \(\theta=\frac{5}{3}\).
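The algebra above can also be spot-checked numerically. This minimal Python sketch (the function name `gamma_mgf` is our own) evaluates \(\left[M_{X_1}(t/3)\right]^3\) and the gamma(21, 5/3) moment-generating function at several values of \(t<\frac{3}{5}\) and confirms that they agree. It also refuses to evaluate the gamma moment-generating function outside its domain \(t<1/\theta\), which is why the sample mean's domain widens from \(t<\frac{1}{5}\) to \(t<\frac{3}{5}\).

```python
import math

def gamma_mgf(t, alpha, theta):
    # M(t) = 1 / (1 - theta t)^alpha, defined only for t < 1/theta
    if t >= 1 / theta:
        raise ValueError("MGF undefined for t >= 1/theta")
    return (1 - theta * t) ** (-alpha)

n = 3
for t in (-1.0, 0.0, 0.2, 0.5):               # all satisfy t < 3/5
    lhs = gamma_mgf(t / n, 7, 5) ** n         # [M_{X_1}(t/3)]^3
    rhs = gamma_mgf(t, 21, 5 / 3)             # gamma(21, 5/3) MGF
    print(t, math.isclose(lhs, rhs))
```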

25.3 - Sums of Chi-Square Random Variables

We'll now turn our attention towards applying the theorem and corollary of the previous page to the case in which we have a function involving a sum of independent chi-square random variables. The following theorem is often referred to as the "additive property of independent chi-squares."

Theorem

Let \(X_1, X_2, \ldots, X_n\) denote \(n\) independent random variables that follow these chi-square distributions:

- \(X_1 \sim \chi^2(r_1)\)

- \(X_2 \sim \chi^2(r_2)\)

- \(\vdots\)

- \(X_n \sim \chi^2(r_n)\)

Then, the sum of the random variables:

\(Y=X_1+X_2+\cdots+X_n\)

follows a chi-square distribution with \(r_1+r_2+\ldots+r_n\) degrees of freedom. That is:

\(Y\sim \chi^2(r_1+r_2+\cdots+r_n)\)

Proof

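Recall that the moment-generating function of a chi-square random variable with \(r\) degrees of freedom is \(M(t)=(1-2t)^{-r/2}\) for \(t<\frac{1}{2}\). Because the \(X_i\) are independent, the theorem on the previous page, with \(a_i=1\) for \(i=1, 2, \ldots, n\), tells us that the moment-generating function of \(Y\) is:

\(M_Y(t)=\prod\limits_{i=1}^n M_{X_i}(t)=(1-2t)^{-r_1/2}(1-2t)^{-r_2/2}\cdots(1-2t)^{-r_n/2}=(1-2t)^{-(r_1+r_2+\cdots+r_n)/2}\)

for \(t<\frac{1}{2}\). We have shown that \(M_Y(t)\) is the moment-generating function of a chi-square random variable with \(r_1+r_2+\cdots+r_n\) degrees of freedom. Therefore, by the uniqueness property of moment-generating functions:

\(Y\sim \chi^2(r_1+r_2+\cdots+r_n)\)

as was to be shown.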
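A short simulation makes the additive property concrete. The Python sketch below assumes illustrative degrees of freedom \(r_1=2\), \(r_2=3\), \(r_3=5\) (our choice) and generates each chi-square variable through the standard fact that \(\chi^2(r)\) is the gamma distribution with \(\alpha=r/2\) and \(\theta=2\). The sum should then behave like \(\chi^2(10)\): mean 10, variance 20. Because 10 is even, the \(\chi^2(10)\) CDF has the closed form \(1-e^{-y/2}\sum_{j<5}(y/2)^j/j!\), which the hypothetical helper `chi_square_cdf_even` uses for a distribution-level comparison.

```python
import math
import random

random.seed(2024)              # arbitrary seed
DFS = [2, 3, 5]                # illustrative degrees of freedom r_1, r_2, r_3
N_SIMS = 50_000

def chi_square_draw(r):
    # chi-square(r) is the gamma distribution with alpha = r/2 and theta = 2
    return random.gammavariate(r / 2, 2)

ys = [sum(chi_square_draw(r) for r in DFS) for _ in range(N_SIMS)]

total_df = sum(DFS)                                          # 10
mean_y = sum(ys) / N_SIMS                                    # should be near 10
var_y = sum((y - mean_y) ** 2 for y in ys) / (N_SIMS - 1)    # should be near 2 * 10 = 20

def chi_square_cdf_even(y, r):
    # For even r = 2k: P(Y <= y) = 1 - e^{-y/2} * sum_{j=0}^{k-1} (y/2)^j / j!
    half = y / 2
    return 1 - math.exp(-half) * sum(half**j / math.factorial(j) for j in range(r // 2))

empirical = sum(y <= 10 for y in ys) / N_SIMS
print(round(mean_y, 2), round(var_y, 2), round(empirical, 3), round(chi_square_cdf_even(10, total_df), 3))
```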

Theorem

Let \(Z_1, Z_2, \ldots, Z_n\) have standard normal distributions, \(N(0,1)\). If these random variables are independent, then:

\(W=Z^2_1+Z^2_2+\cdots+Z^2_n\)

follows a \(\chi^2(n)\) distribution.

Proof

Recall that if \(Z_i\sim N(0,1)\), then \(Z_i^2\sim \chi^2(1)\) for \(i=1, 2, \ldots, n\). Then, by the additive property of independent chi-squares:

\(W=Z^2_1+Z^2_2+\cdots+Z^2_n \sim \chi^2(1+1+\cdots+1)=\chi^2(n)\)

That is, \(W\sim \chi^2(n)\), as was to be proved.
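Here is a minimal Python simulation of this theorem for \(n=4\) (an illustrative choice). It sums four squared standard normal draws many times and compares the empirical CDF of \(W\) at a few points with the \(\chi^2(4)\) CDF, which for 4 degrees of freedom has the closed form \(1-e^{-w/2}\left(1+\frac{w}{2}\right)\). The seed and the helper name `chi_square_4_cdf` are our own.

```python
import math
import random

random.seed(7)                 # arbitrary seed
n, N_SIMS = 4, 100_000

# Each draw of W is Z_1^2 + ... + Z_n^2 with independent standard normal Z_i
ws = [sum(random.gauss(0.0, 1.0) ** 2 for _ in range(n)) for _ in range(N_SIMS)]

def chi_square_4_cdf(w):
    # Closed-form chi-square CDF for 4 degrees of freedom: 1 - e^{-w/2} (1 + w/2)
    return 1 - math.exp(-w / 2) * (1 + w / 2)

for w in (1.0, 4.0, 9.0):
    empirical = sum(x <= w for x in ws) / N_SIMS
    print(w, round(empirical, 3), round(chi_square_4_cdf(w), 3))
```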

Corollary

If \(X_1, X_2, \ldots, X_n\) are independent normal random variables with possibly different means and variances, that is:

\(X_i \sim N(\mu_i,\sigma^2_i)\)

for \(i=1, 2, \ldots, n\), then:

\(W=\sum\limits_{i=1}^n \dfrac{(X_i-\mu_i)^2}{\sigma^2_i} \sim \chi^2(n)\)

Proof

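Recall that:

\(Z_i=\dfrac{(X_i-\mu_i)}{\sigma_i} \sim N(0,1)\)

for \(i=1, 2, \ldots, n\). Moreover, the \(Z_i\) are independent, because each \(Z_i\) is a function of \(X_i\) alone and the \(X_i\) are independent. Therefore, by the previous theorem: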

\(W=\sum\limits_{i=1}^n Z^2_i=\sum\limits_{i=1}^n \dfrac{(X_i-\mu_i)^2}{\sigma^2_i} \sim \chi^2(n)\)

as was to be proved.
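The standardization step is what removes the dependence on the individual means and variances. To illustrate, the Python sketch below assumes two normal variables with very different parameters, \(X_1\sim N(10, 4)\) and \(X_2\sim N(-3, 0.25)\) (our own choice), and simulates \(W\). Since \(\chi^2(2)\) is the exponential distribution with mean 2, the empirical CDF of \(W\) should track \(1-e^{-w/2}\). Note that Python's `random.gauss(mu, sigma)` takes a standard deviation, not a variance.

```python
import math
import random

random.seed(11)                       # arbitrary seed
# Illustrative parameters (not from the text): X_1 ~ N(10, 4), X_2 ~ N(-3, 0.25)
params = [(10.0, 2.0), (-3.0, 0.5)]   # (mu_i, sigma_i), where sigma_i is a standard deviation
N_SIMS = 100_000

def draw_w():
    # W = sum over i of ((X_i - mu_i) / sigma_i)^2
    return sum(((random.gauss(mu, sigma) - mu) / sigma) ** 2 for mu, sigma in params)

ws = [draw_w() for _ in range(N_SIMS)]

# chi-square(2) is exponential with mean 2, so P(W <= w) = 1 - e^{-w/2}
for w in (0.5, 2.0, 6.0):
    empirical = sum(x <= w for x in ws) / N_SIMS
    print(w, round(empirical, 3), round(1 - math.exp(-w / 2), 3))
```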

Lesson 26: Random Functions Associated with Normal Distributions

Overview

In the previous lessons, we've been working our way up towards fully defining the probability distribution of the sample mean \(\bar{X}\) and the sample variance \(S^2\). We have determined the expected value and variance of the sample mean. Now, in this lesson, we (finally) determine the probability distribution of the sample mean and sample variance when a random sample \(X_1, X_2, \ldots, X_n\) is taken from a normal population (distribution). We'll also learn about a new probability distribution called the (Student's) t distribution.

Objectives

- To learn the probability distribution of a linear combination of independent normal random variables \(X_1, X_2, \ldots, X_n\).

- To learn how to find the probability that a linear combination of independent normal random variables \(X_1, X_2, \ldots, X_n\) takes on a certain interval of values.

- To learn the sampling distribution of the sample mean when \(X_1, X_2, \ldots, X_n\) are a random sample from a normal population with mean \(\mu\) and variance \(\sigma^2\).

- To use simulation to get a feel for the shape of a probability distribution.

- To learn the sampling distribution of the sample variance when \(X_1, X_2, \ldots, X_n\) are a random sample from a normal population with mean \(\mu\) and variance \(\sigma^2\).

- To learn the formal definition of a \(T\) random variable.

- To learn the characteristics of Student's \(t\) distribution.

- To learn how to read a \(t\)-table to find \(t\)-values and probabilities associated with \(t\)-values.

- To understand each of the steps in the proofs in the lesson.

- To be able to apply the methods learned in this lesson to new problems.

26.1 - Sums of Independent Normal Random Variables

One of our goals for this lesson is to find the probability distribution of the sample mean when a random sample is taken from a population whose measurements are normally distributed. Before we get to that punch line, though, we'll first work on the probability distribution of a linear combination of independent normal random variables \(X_1, X_2, \ldots, X_n\). On the next page, we'll tackle the sample mean!

Theorem

If \(X_1, X_2, \ldots, X_n\) are mutually independent normal random variables with means \(\mu_1, \mu_2, \ldots, \mu_n\) and variances \(\sigma^2_1,\sigma^2_2,\cdots,\sigma^2_n\), then the linear combination:

\(Y=\sum\limits_{i=1}^n c_iX_i\)

follows the normal distribution:

\(N\left(\sum\limits_{i=1}^n c_i \mu_i,\sum\limits_{i=1}^n c^2_i \sigma^2_i\right)\)
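Before the proof, a simulation can give a feel for the statement. The Python sketch below assumes illustrative means, standard deviations, and coefficients (all our own choices, not from the text), computes the mean \(\sum c_i\mu_i=16\) and variance \(\sum c_i^2\sigma_i^2=15.25\) that the theorem predicts, and compares empirical probabilities for simulated values of \(Y\) with the corresponding normal CDF values, computed from `math.erf`. The helper name `std_normal_cdf` is hypothetical.

```python
import math
import random

random.seed(26)             # arbitrary seed
# Illustrative choices (not from the text)
mus = [1.0, -2.0, 4.0]      # means mu_i
sigmas = [1.0, 3.0, 0.5]    # standard deviations sigma_i
cs = [2.0, -1.0, 3.0]       # coefficients c_i
N_SIMS = 100_000

# Parameters predicted by the theorem
mean_y = sum(c * m for c, m in zip(cs, mus))           # sum of c_i mu_i
var_y = sum(c**2 * s**2 for c, s in zip(cs, sigmas))   # sum of c_i^2 sigma_i^2

def std_normal_cdf(z):
    # Phi(z) written in terms of the error function
    return 0.5 * (1 + math.erf(z / math.sqrt(2)))

ys = [sum(c * random.gauss(m, s) for c, m, s in zip(cs, mus, sigmas)) for _ in range(N_SIMS)]

for y in (mean_y - math.sqrt(var_y), mean_y, mean_y + 2 * math.sqrt(var_y)):
    empirical = sum(v <= y for v in ys) / N_SIMS
    predicted = std_normal_cdf((y - mean_y) / math.sqrt(var_y))
    print(round(y, 3), round(empirical, 3), round(predicted, 3))
```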

Proof

We'll use the moment-generating function technique to find the distribution of \(Y\). In the previous lesson, we learned that the moment-generating function of a linear combination of independent random variables \(X_1, X_2, \ldots, X_n\) is:

\(M_Y(t)=\prod\limits_{i=1}^n M_{X_i}(c_it)\)