Section 3: Nonparametric Methods


In the previous sections, all of the methods we derived were based on making some sort of underlying assumptions about the data − for example, "the data are normally distributed," or the "population variances are equal." How do we go about using our data to answer our scientific questions if the assumptions on which our methods are based don't hold? That's what we'll tackle in this section. Specifically, we will:

- learn how to use the chi-square goodness of fit test to test whether random categorical variables follow a particular probability distribution

- learn how to use the chi-square test for testing whether two or more multinomial distributions are equal

- learn how to use the chi-square test to test whether two (or more) random categorical variables are independent

- define, determine, and use order statistics to draw conclusions about a median, as well as other percentiles

- learn how to use the Wilcoxon test to conduct a hypothesis test for the median of a population

- learn how to use a run test to test the equality of two distribution functions

- learn how to use a run test to test for the randomness of a sequence of events

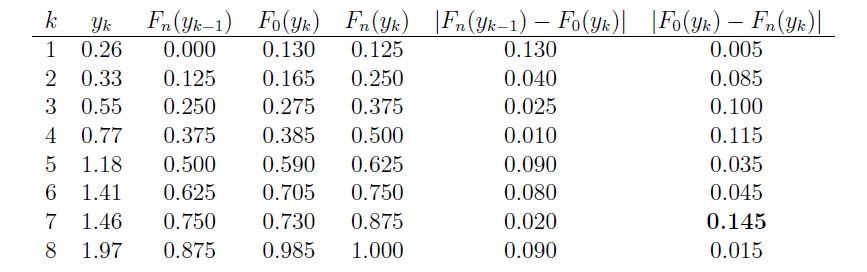

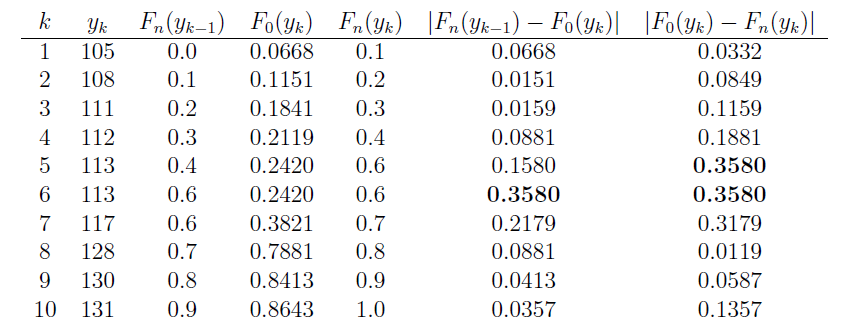

- learn how to use the Kolmogorov-Smirnov goodness-of-fit test to test how well an empirical distribution function fits a hypothesized distribution function

- learn how to use resampling methods to find approximate distributions of statistics that are used to make statistical inference

Lesson 16: Chi-Square Goodness-of-Fit Tests

Suppose the Penn State student population is 60% female and 40% male. Then, if a sample of 100 students yields 53 females and 47 males, can we conclude that the sample is (random and) representative of the population? That is, how "good" do the data "fit" the probability model? As the title of the lesson suggests, that's the kind of question that we will answer in this lesson.

16.1 - The General Approach

Example 16-1

As is often the case, we'll motivate the methods of this lesson by way of example. Specifically, we'll return to the question posed in the introduction to this lesson.

Suppose the Penn State student population is 60% female and 40% male. Then, if a sample of 100 students yields 53 females and 47 males, can we conclude that the sample is (random and) representative of the population? That is, how "good" do the data "fit" the assumed probability model of 60% female and 40% male?

Answer

Testing whether there is a "good fit" between the observed data and the assumed probability model amounts to testing:

\(H_0 : p_F =0.60\)

\(H_A : p_F \ne 0.60\)

Now, letting \(Y_1\) denote the number of females selected, we know that \(Y_1\) follows a binomial distribution with n trials and probability of success \(p_1\). That is:

\(Y_1 \sim b(n,p_1)\)

Therefore, the expected value and variance of \(Y_1\) are, respectively:

\(E(Y_1)=np_1\) and \(Var (Y_1) =np_1(1-p_1)\)

And, letting \(Y_2\) denote the number of males selected, we know that \(Y_2 = n - Y_1\) follows a binomial distribution with n trials and probability of success \(p_2\). That is:

\(Y_2 = n-Y_1 \sim b(n,p_2)=b(n,1-p_1)\)

Therefore, the expected value and variance of \(Y_2\) are, respectively:

\(E(Y_2)=n(1-p_1)=np_2\) and \(Var(Y_2)=n(1-p_1)(1-(1-p_1))=np_1(1-p_1)=np_2(1-p_2)\)

Now, for large samples (\(np_1 \ge 5\) and \(n(1 - p_1) \ge 5\)), the Central Limit Theorem yields the normal approximation to the binomial distribution. That is:

\(Z=\dfrac{(Y_1-np_1)}{\sqrt{np_1(1-p_1)}}\)

follows, at least approximately, the standard normal N(0,1) distribution. Therefore, upon squaring Z, we get that:

\(Z^2=Q_1=\dfrac{(Y_1-np_1)^2}{np_1(1-p_1)}\)

follows an approximate chi-square distribution with one degree of freedom. Now, we could stop there. But, that's not typically what is done. Instead, we can rewrite \(Q_1\) a bit. Let's start by multiplying \(Q_1\) by 1 in a special way, that is, by multiplying it by \(\left( \left( 1 - p_1 \right) + p_1 \right)\):

\(Q_1=\dfrac{(Y_1-np_1)^2}{np_1(1-p_1)} \times ((1-p_1)+p_1)\)

Then, distributing the "1" across the numerator, we get:

\(Q_1=\dfrac{(Y_1-np_1)^2(1-p_1)}{np_1(1-p_1)}+\dfrac{(Y_1-np_1)^2p_1}{np_1(1-p_1)}\)

which simplifies to:

\(Q_1=\dfrac{(Y_1-np_1)^2}{np_1}+\dfrac{(Y_1-np_1)^2}{n(1-p_1)}\)

Now, taking advantage of the fact that \(Y_1 = n - Y_2\) and \(p_1 = 1 - p_2\), we get:

\(Q_1=\dfrac{(Y_1-np_1)^2}{np_1}+\dfrac{(n-Y_2-n(1-p_2))^2}{np_2}\)

which simplifies to:

\(Q_1=\dfrac{(Y_1-np_1)^2}{np_1}+\dfrac{(-(Y_2-np_2))^2}{np_2}\)

Just one more thing to simplify before we're done:

\(Q_1=\dfrac{(Y_1-np_1)^2}{np_1}+\dfrac{(Y_2-np_2)^2}{np_2}\)

In summary, we have rewritten \(Q_1\) as:

\( Q_1=\sum_{i=1}^{2}\dfrac{(Y_i-np_i)^2}{np_i}=\sum_{i=1}^{2}\dfrac{(\text{OBSERVED}-\text{EXPECTED})^2}{\text{EXPECTED}} \)

We'll use this form of \(Q_1\), and the fact that \(Q_1\) follows an approximate chi-square distribution with one degree of freedom, to conduct the desired hypothesis test.

Before we return to solving the problem posed by our example, a couple of points are worthy of emphasis.

- First, \(Q_1\) has only one degree of freedom, since there is only one independent count, namely \(Y_1\). Once \(Y_1\) is known, the value of \(Y_2 = n - Y_1\) immediately follows.

- Note that the derived approach requires the Central Limit Theorem to kick in. The general rule of thumb is that the expected number of successes must be at least 5 (that is, \(np_1 \ge 5\)) and the expected number of failures must be at least 5 (that is, \(n(1-p_1) \ge 5\)).

- The statistic \(Q_1\) will be large if the observed counts are very different from the expected counts. Therefore, we must reject the null hypothesis \(H_0\) if \(Q_1\) is large. How large is large? Large is determined by the values of a chi-square random variable with one degree of freedom, which can be obtained either from a statistical software package, such as Minitab or SAS, or from a standard chi-square table, such as the one in the back of our textbook.

- The statistic \(Q_1\) is called the chi-square goodness-of-fit statistic.
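As a quick numerical check of the algebra above, here is a minimal Python sketch that evaluates \(Q_1\) in both of its forms, the squared Z-statistic and the sum of (observed − expected)²/expected terms, using the counts from the example (n = 100, \(Y_1 = 53\), \(p_1 = 0.60\)). The function names `q1_z_form` and `q1_sum_form` are illustrative choices, not something from the course materials.

```python
def q1_z_form(y1, n, p1):
    """Q_1 written as Z^2 = (Y_1 - n p_1)^2 / (n p_1 (1 - p_1))."""
    return (y1 - n * p1) ** 2 / (n * p1 * (1 - p1))


def q1_sum_form(y1, n, p1):
    """Q_1 written as the sum over both categories of (obs - exp)^2 / exp."""
    y2, p2 = n - y1, 1 - p1
    return (y1 - n * p1) ** 2 / (n * p1) + (y2 - n * p2) ** 2 / (n * p2)


# Counts from the example: 53 females in a sample of 100, with p_1 = 0.60.
z_form = q1_z_form(53, 100, 0.60)
sum_form = q1_sum_form(53, 100, 0.60)
print(z_form, sum_form)  # the two forms agree up to floating-point rounding
```

Any other valid choice of n, \(Y_1\), and \(p_1\) should give matching values as well, since the two expressions are algebraically identical.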

Example 16-2

Suppose the Penn State student population is 60% female and 40% male. Then, if a sample of 100 students yields 53 females and 47 males, can we conclude that the sample is (random and) representative of the population? Use the chi-square goodness-of-fit statistic to test the hypotheses:

\(H_0 : p_F =0.60\)

\(H_A : p_F \ne 0.60\)

using a significance level of \(\alpha = 0.05\).

Answer

The value of the test statistic \(Q_1\) is:

\(Q_1=\dfrac{(53-60)^2}{60}+\dfrac{(47-40)^2}{40}=2.04\)

We should reject the null hypothesis if the observed number of counts is very different from the expected number of counts, that is if \(Q_1\) is large. Because \(Q_1\) follows a chi-square distribution with one degree of freedom, we should reject the null hypothesis, at the 0.05 level, if:

\(Q_1 \ge \chi_{1, 0.05}^{2}=3.84\)

Because:

\(Q_1=2.04 < 3.84\)

we do not reject the null hypothesis. There is not enough evidence at the 0.05 level to conclude that the data don't fit the assumed probability model.

As an aside, it is interesting to note the relationship between using the chi-square goodness of fit statistic \(Q_1\) and the Z-statistic we've previously used for testing a null hypothesis about a proportion. In this case:

\( Z=\dfrac{0.53-0.60}{\sqrt{\frac{(0.60)(0.40)}{100}}}=-1.428 \)

and, if we square it, we get the same value as we did for \(Q_1\):

\(Q_1=Z^2 =(-1.428)^2=2.04\)

as we should expect. The Z-test for a proportion tells us that we should reject if:

\(|Z| \ge 1.96 \)

Well, again, if we square it, we should see that that's equivalent to rejecting if:

\(Q_1 \ge (1.96)^2 =3.84\)

And not surprisingly, the P-values obtained from the two approaches are identical. The P-value for the chi-square goodness-of-fit test is:

\(P=P(\chi_{(1)}^{2} > 2.04)=0.1532\)

while the P-value for the Z-test is:

\(P=2 \times P(Z>1.428)=2(0.0766)=0.1532\)

Identical, as we should expect!
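The equivalence of the two tests can also be checked numerically. The sketch below uses only Python's standard library and relies on two standard identities: the two-sided normal tail \(2P(Z > |z|)\) equals `erfc(|z|/sqrt(2))`, and the upper tail of a chi-square distribution with one degree of freedom, \(P(\chi^2_{(1)} > q)\), equals `erfc(sqrt(q/2))`. The variable names are illustrative.

```python
import math

n, y1, p0 = 100, 53, 0.60  # sample size, observed females, hypothesized proportion

# Z-statistic for one proportion and the goodness-of-fit statistic Q_1.
z = (y1 / n - p0) / math.sqrt(p0 * (1 - p0) / n)
q1 = (y1 - n * p0) ** 2 / (n * p0) + ((n - y1) - n * (1 - p0)) ** 2 / (n * (1 - p0))

# Two-sided normal tail: 2 * P(Z > |z|) = erfc(|z| / sqrt(2)).
p_value_z = math.erfc(abs(z) / math.sqrt(2))
# Chi-square tail with one degree of freedom: P(chi2_1 > q) = erfc(sqrt(q / 2)).
p_value_chi2 = math.erfc(math.sqrt(q1 / 2))

print(round(z, 3), round(q1, 2))
print(round(p_value_z, 3), round(p_value_chi2, 3))
```

Both P-values come out at about 0.153. The small difference from the 0.1532 quoted above most likely arises because the hand calculation rounds the test statistic before looking up the tail probability.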

16.2 - Extension to K Categories

The work on the previous page is all well and good if your probability model involves just two categories, which as we have seen, reduces to conducting a test for one proportion. What happens if our probability model involves three or more categories? It takes some theoretical work beyond the scope of this course to show it, but the chi-square statistic that we derived on the previous page can be extended to accommodate any number k of categories.

The Extension

Suppose an experiment can result in any of k mutually exclusive and exhaustive outcomes, say \(A_1, A_2, \dots, A_k\). If the experiment is repeated n independent times, and we let \(p_i = P(A_i)\) and \(Y_i\) = the number of times the experiment results in \(A_i, i = 1, \dots, k\), then we can summarize the number of observed outcomes and the number of expected outcomes for each of the k categories in a table as follows:

| Categories | 1 | 2 | . . . | \(k - 1\) | \(k\) |

|---|---|---|---|---|---|

| Observed | \(Y_1\) | \(Y_2\) | . . . | \(Y_{k - 1}\) | \(n - Y_1 - Y_2 - . . . - Y_{k - 1}\) |

| Expected | \(np_1\) | \(np_2\) | . . . | \(np_{k - 1}\) | \(np_k\) |

Karl Pearson showed that the chi-square statistic \(Q_{k-1}\) defined as:

\[Q_{k-1}=\sum_{i=1}^{k}\frac{(Y_i - np_i)^2}{np_i} \]

follows approximately a chi-square distribution with k−1 degrees of freedom. Let's try it out on an example.

Example 16-3

A particular brand of candy-coated chocolate comes in five different colors that we shall denote as:

- \(A_1 = \text{brown}\)

- \(A_2 = \text{yellow}\)

- \(A_3 = \text{orange}\)

- \(A_4 = \text{green}\)

- \(A_5 = \text{coffee}\)

Let \(p_i\) equal the probability that the color of a piece of candy selected at random belongs to \(A_i\), for \(i = 1, 2, 3, 4, 5\). Test the following null and alternative hypotheses:

\(H_0 : p_{Br}=0.4,p_{Y}=0.2,p_{O}=0.2,p_{G}=0.1,p_{C}=0.1 \)

\(H_A : p_{i} \text{ not specified in null (many possible alternatives) } \)

using a random sample of n = 580 pieces of candy whose colors yielded the respective frequencies 224, 119, 130, 48, and 59. (This example comes from Exercise 8.1-2 in the Hogg and Tanis (8th edition) textbook.)

Answer

We can summarize the observed \((y_i)\) and expected \((np_i)\) counts in a table as follows:

| Categories | Brown | Yellow | Orange | Green | Coffee | Total |

|---|---|---|---|---|---|---|

| Observed \(y_i\) | 224 | 119 | 130 | 48 | 59 | 580 |

| Assumed \(H_0 (p_i)\) | 0.4 | 0.2 | 0.2 | 0.1 | 0.1 | 1.0 |

| Expected \(np_i\) | 232 | 116 | 116 | 58 | 58 | 580 |

where, for example, the expected number of brown candies is:

\(np_1 = 580(0.40) = 232\)

and the expected number of green candies is:

\(np_4 = 580(0.10) = 58\)

Once we have the observed and expected number of counts, the calculation of the chi-square statistic is straightforward. It is:

\(Q_4=\dfrac{(224-232)^2}{232}+\dfrac{(119-116)^2}{116}+\dfrac{(130-116)^2}{116}+\dfrac{(48-58)^2}{58}+\dfrac{(59-58)^2}{58} \)

Simplifying, we get:

\(Q_4=\dfrac{64}{232}+\dfrac{9}{116}+\dfrac{196}{116}+\dfrac{100}{58}+\dfrac{1}{58}=3.784 \)

Because there are k = 5 categories, we have to compare our chi-square statistic \(Q_4\) to a chi-square distribution with k−1 = 5−1 = 4 degrees of freedom:

\(\text{Reject }H_0 \text{ if } Q_4\ge \chi_{4,0.05}^{2}=9.488\)

Because \(Q_4 = 3.784 < 9.488\), we fail to reject the null hypothesis. There is insufficient evidence at the 0.05 level to conclude that the distribution of the color of the candies differs from that specified in the null hypothesis.
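The candy calculation is easy to reproduce in code. The following is a minimal sketch in plain Python. The critical value 9.488 is simply copied from the text rather than computed. The P-value line uses the closed form \(P(\chi^2_{(4)} > q) = e^{-q/2}(1 + q/2)\), which holds specifically for four degrees of freedom. Variable names are illustrative.

```python
import math

observed = [224, 119, 130, 48, 59]      # brown, yellow, orange, green, coffee
null_probs = [0.4, 0.2, 0.2, 0.1, 0.1]  # probabilities specified by H_0
n = sum(observed)

# Expected counts n * p_i and the chi-square statistic.
expected = [n * p for p in null_probs]
q = sum((o - e) ** 2 / e for o, e in zip(observed, expected))
df = len(observed) - 1

critical_value = 9.488  # upper 0.05 point of chi-square(4), taken from the text
reject = q >= critical_value

# Closed-form upper tail for 4 degrees of freedom only.
p_value = math.exp(-q / 2) * (1 + q / 2)

print(round(q, 3), df, reject, round(p_value, 3))
```

The statistic comes out at about 3.784 with a P-value of roughly 0.44, so the conclusion matches the one above: we fail to reject the null hypothesis.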

By the way, this might be a good time to think about the practical meaning of the term "degrees of freedom." Recalling the example on the last page, we had two categories (male and female) and one degree of freedom. If we are sampling n = 100 people and 53 of them are female, then we absolutely must have 100−53 = 47 males. If we had instead 62 females, then we absolutely must have 100−62 = 38 males. That is, the number of females is the one number that is "free" to be any number, but once it is determined, then the number of males immediately follows. It is in this sense that we have "one degree of freedom."

With the example on this page, we have five categories of candies (brown, yellow, orange, green, coffee) and four degrees of freedom. If we are sampling n = 580 candies, and 224 are brown, 119 are yellow, 130 are orange, and 48 are green, then we absolutely must have 580−(224+119+130+48) = 59 coffee-colored candies. In this case, we have four numbers that are "free" to be any number, but once they are determined, then the number of coffee-colored candies immediately follows. It is in this sense that we have "four degrees of freedom."

16.3 - Unspecified Probabilities

For the two examples that we've thus far considered, the probabilities were pre-specified. For the first example, we were interested in seeing if the data fit a probability model in which there was a 0.60 probability that a randomly selected Penn State student was female. In the second example, we were interested in seeing if the data fit a probability model in which the probabilities of selecting a brown, yellow, orange, green, and coffee-colored candy were 0.4, 0.2, 0.2, 0.1, and 0.1, respectively. That is, we were interested in testing specific probabilities:

\(H_0 : p_{Br}=0.40,p_{Y}=0.20,p_{O}=0.20,p_{G}=0.10,p_{C}=0.10 \)

Someone might also be interested in testing whether a data set follows a specific probability distribution, such as:

\(H_0 : X \sim b(n, 1/2)\)

What if the probabilities aren't pre-specified though? That is, suppose someone is interested in testing whether a random variable is binomial, but with an unspecified probability of success:

\(H_0 : X \sim b(n, p)\)

Can we still use the chi-square goodness-of-fit statistic? The short answer is yes... with just a minor modification.

Example 16-4

Let X denote the number of heads when four dimes are tossed at random. One hundred repetitions of this experiment resulted in 0, 1, 2, 3, and 4 heads being observed on 8, 17, 41, 30, and 4 trials, respectively. Under the assumption that the four dimes are independent, and the probability of getting a head on each coin is p, the random variable X is b(4, p). In light of the observed data, is b(4, p) a reasonable model for the distribution of X?

Answer

In order to use the chi-square statistic to test the data, we need to be able to determine the observed and expected number of trials in which we'd get 0, 1, 2, 3, and 4 heads. The observed part is easy... we know those:

| X | 0 | 1 | 2 | 3 | 4 |

|---|---|---|---|---|---|

| Observed | 8 | 17 | 41 | 30 | 4 |

| Expected |

It's the expected numbers that are a problem. If the probability p of getting a head were specified, we'd be able to calculate the expected numbers. Suppose, for example, that p = 1/2. Then, the probability of getting zero heads in four dimes is:

\(P(X=0)=\binom{4}{0}\left(\dfrac{1}{2}\right)^0\left(\dfrac{1}{2}\right)^4=0.0625 \)

and therefore the expected number of trials resulting in 0 heads is 100 × 0.0625 = 6.25. We could make similar calculations for the case of 1, 2, 3, and 4 heads, and we would be well on our way to using the chi-square statistic:

\(Q_4=\sum_{i=0}^{4}\dfrac{(Obs_i-Exp_i)^2}{Exp_i} \)

and comparing it to a chi-square distribution with 5−1 = 4 degrees of freedom. But, we don't know p, as it is unspecified! What do you think the logical thing would be to do in this case? Sure... we'd probably want to estimate p. But then that raises the question... what should we use as an estimate of p?

One way of estimating p would be to minimize the chi-square statistic \(Q_4\) with respect to p, yielding an estimator \(\tilde{p}\). This \(\tilde{p}\) estimator is called, perhaps not surprisingly, a minimum chi-square estimator of p. If \(\tilde{p}\) is used in calculating the expected numbers that appear in \(Q_4\), it can be shown (not easily, and therefore we won't!) that \(Q_4\) still has an approximate chi-square distribution but with only 4−1 = 3 degrees of freedom. The number of degrees of freedom of the approximating chi-square distribution is reduced by one because we have to estimate one parameter in order to calculate the chi-square statistic. In general, the number of degrees of freedom of the approximating chi-square distribution is reduced by d, the number of parameters estimated. If we estimate two parameters, we reduce the degrees of freedom by two. And so on.

This all seems simple enough. There's just one problem... it is usually very difficult to find minimum chi-square estimators. So what to do? Well, most statisticians just use some other reasonable method of estimating the unspecified parameters, such as maximum likelihood estimation. The good news is that the chi-square statistic testing method still works well. (It should be noted, however, that the approach does provide a slightly larger probability of rejecting the null hypothesis than would the approach based purely on the minimized chi-square.)
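To get a feel for how the two estimation approaches compare on the dimes data, here is a rough sketch that finds an approximate minimum chi-square estimate by brute-force grid search and compares it with the sample proportion of heads (the maximum likelihood estimate for a binomial p). The grid search is only an illustrative device, not the method used in the course, and the names `chi_square_stat`, `p_tilde`, and `p_hat` are hypothetical.

```python
from math import comb

observed = [8, 17, 41, 30, 4]  # trials with 0, 1, 2, 3, 4 heads
n_trials = sum(observed)       # 100 repetitions of the experiment
m = 4                          # dimes tossed per repetition


def chi_square_stat(p):
    """Goodness-of-fit statistic when b(4, p) is fitted to the observed counts."""
    total = 0.0
    for x, obs in enumerate(observed):
        expected = n_trials * comb(m, x) * p**x * (1 - p) ** (m - x)
        total += (obs - expected) ** 2 / expected
    return total


# Crude grid search over p in (0, 1); a numerical optimizer would be more precise.
grid = [i / 1000 for i in range(1, 1000)]
p_tilde = min(grid, key=chi_square_stat)

# Maximum likelihood estimate: total heads divided by total tosses.
p_hat = sum(x * obs for x, obs in enumerate(observed)) / (m * n_trials)

print(p_tilde, round(chi_square_stat(p_tilde), 3))
print(p_hat, round(chi_square_stat(p_hat), 3))
```

By construction, the statistic evaluated at `p_tilde` is no larger than at any other grid point, which illustrates why using the maximum likelihood estimate instead gives a slightly larger probability of rejecting the null hypothesis.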

Let's summarize

Chi-square method when parameters are unspecified. If you are interested in testing whether a data set fits a probability model with d parameters left unspecified:

- Estimate the d parameters using the maximum likelihood method (or another reasonable method).

- Calculate the chi-square statistic \(Q_{k-1}\) using the obtained estimates.

- Compare the chi-square statistic to a chi-square distribution with (k−1)−d degrees of freedom.

Example 16-5

Let X denote the number of heads when four dimes are tossed at random. One hundred repetitions of this experiment resulted in 0, 1, 2, 3, and 4 heads being observed on 8, 17, 41, 30, and 4 trials, respectively. Under the assumption that the four dimes are independent, and the probability of getting a head on each coin is p, the random variable X is b(4, p). In light of the observed data, is b(4, p) a reasonable model for the distribution of X?

Answer

Given that four dimes are tossed 100 times, we have 400 coin tosses resulting in 205 heads for an estimated probability of success of 0.5125:

\(\hat{p}=\dfrac{0(8)+1(17)+2(41)+3(30)+4(4)}{400}=\dfrac{205}{400}=0.5125 \)

Using 0.5125 as the estimate of p, we can use the binomial p.m.f. (or Minitab!) to calculate the probability that X = 0, 1, ..., 4:

| x | P ( X = x ) |

|---|---|

| 0 | 0.056480 |

| 1 | 0.237508 |

| 2 | 0.374531 |

| 3 | 0.262492 |

| 4 | 0.068988 |

and then, using the probabilities, the expected number of trials resulting in 0, 1, 2, 3, and 4 heads:

| X | 0 | 1 | 2 | 3 | 4 |

|---|---|---|---|---|---|

| Observed | 8 | 17 | 41 | 30 | 4 |

| \(P(X = i)\) | 0.0565 | 0.2375 | 0.3745 | 0.2625 | 0.0690 |

| Expected | 5.65 | 23.75 | 37.45 | 26.25 | 6.90 |

Calculating the chi-square statistic, we get:

\(Q_4=\dfrac{(8-5.65)^2}{5.65}+\dfrac{(17-23.75)^2}{23.75}+ ... + \dfrac{(4-6.90)^2}{6.90} =4.99\)

We estimated the d = 1 parameter in calculating the chi-square statistic. Therefore, we compare the statistic to a chi-square distribution with (5−1)−1 = 3 degrees of freedom. Doing so:

\(Q_4= 4.99 < \chi_{3,0.05}^{2}=7.815\)

we fail to reject the null hypothesis. There is insufficient evidence at the 0.05 level to conclude that the data don't fit a binomial probability model.
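The three-step recipe (estimate, compute the statistic, reduce the degrees of freedom) can be sketched for this example as follows. This is plain Python with illustrative variable names. The critical value 7.815 is copied from the text rather than computed.

```python
from math import comb

observed = [8, 17, 41, 30, 4]  # trials with 0, 1, 2, 3, 4 heads
n_trials = sum(observed)
m = 4                          # dimes tossed per repetition

# Step 1: estimate p by maximum likelihood (total heads / total tosses).
p_hat = sum(x * obs for x, obs in enumerate(observed)) / (m * n_trials)

# Step 2: expected counts under b(4, p_hat) and the chi-square statistic.
probs = [comb(m, x) * p_hat**x * (1 - p_hat) ** (m - x) for x in range(m + 1)]
expected = [n_trials * pr for pr in probs]
q = sum((o - e) ** 2 / e for o, e in zip(observed, expected))

# Step 3: degrees of freedom are (k - 1) - d, with d = 1 estimated parameter.
d = 1
df = (len(observed) - 1) - d

critical_value = 7.815  # upper 0.05 point of chi-square(3), taken from the text
reject = q >= critical_value

print(p_hat, [round(e, 2) for e in expected], round(q, 2), df, reject)
```

Because the code keeps full precision in the expected counts, the statistic may differ from the hand calculation in the third decimal place, but it still rounds to 4.99.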

Let's take a look at another example.

Example 16-6

Let X equal the number of alpha particles emitted from barium-133 in 0.1 seconds and counted by a Geiger counter. One hundred observations of X produced these data.

It is claimed that X follows a Poisson distribution. Use a chi-square goodness-of-fit statistic to test whether this is true.

Answer

Note that very few observations resulted in 0, 1, or 2 alpha particles being emitted in 0.1 second. And, very few observations resulted in 10, 11, or 12 alpha particles being emitted in 0.1 second. Therefore, let's "collapse" the data at the two ends, yielding us nine "not-so-sparse" categories:

| Category | \(X\) | Obs'd |

|---|---|---|

| 1 | 0,1,2* | 5 |

| 2 | 3 | 13 |

| 3 | 4 | 19 |

| 4 | 5 | 16 |

| 5 | 6 | 15 |

| 6 | 7 | 9 |

| 7 | 8 | 12 |

| 8 | 9 | 7 |

| 9 | 10,11,12* | 4 |

| Total | | \(n = 100\) |

Because \(\lambda\), the mean of X, is not specified, we can estimate it with its maximum likelihood estimator, namely, the sample mean. Using the data, we get:

\(\bar{x}=\dfrac{1(1)+2(4)+3(13)+ ... + 12(1)}{100}=\dfrac{559}{100}=5.59 \approx 5.6\)

We can now estimate the probability that an observation will fall into each of the categories. The probability of falling into category 1, for example, is:

\(P(\{1\})=P(X=0)+P(X=1)+P(X=2) =\dfrac{e^{-5.6}5.6^0}{0!}+\dfrac{e^{-5.6}5.6^1}{1!}+\dfrac{e^{-5.6}5.6^2}{2!}=0.0824 \)

Here's what our table looks like now, after adding a column containing the estimated probabilities:

| Category | \(X\) | Obs'd | \(p_i = \left(e^{-5.6}5.6^x\right) / x!\) |

|---|---|---|---|

| 1 | 0,1,2* | 5 | 0.0824 |

| 2 | 3 | 13 | 0.1082 |

| 3 | 4 | 19 | 0.1515 |

| 4 | 5 | 16 | 0.1697 |

| 5 | 6 | 15 | 0.1584 |

| 6 | 7 | 9 | 0.1267 |

| 7 | 8 | 12 | 0.0887 |

| 8 | 9 | 7 | 0.0552 |

| 9 | 10,11,12* | 4 | 0.0539 |

| Total | | \(n = 100\) | |

Now, we just have to add a column containing the expected number falling into each category. The expected number falling into category 1, for example, is 0.0824 × 100 = 8.24. Doing a similar calculation for each of the categories, we can add our column of expected numbers:

| Category | \(X\) | Obs'd | \(p_i = \left(e^{-5.6}5.6^x\right) / x!\) | Exp'd |

|---|---|---|---|---|

| 1 | 0,1,2* | 5 | 0.0824 | 8.24 |

| 2 | 3 | 13 | 0.1082 | 10.82 |

| 3 | 4 | 19 | 0.1515 | 15.15 |

| 4 | 5 | 16 | 0.1697 | 16.97 |

| 5 | 6 | 15 | 0.1584 | 15.84 |

| 6 | 7 | 9 | 0.1267 | 12.67 |

| 7 | 8 | 12 | 0.0887 | 8.87 |

| 8 | 9 | 7 | 0.0552 | 5.52 |

| 9 | 10,11,12* | 4 | 0.0539 | 5.39 |

| Total | | \(n = 100\) | | 99.47 |

Now, we can use the observed numbers and the expected numbers to calculate our chi-square test statistic. Doing so, we get:

\(Q_{9-1}=\dfrac{(5-8.24)^2}{8.24}+\dfrac{(13-10.82)^2}{10.82}+ ... +\dfrac{(4-5.39)^2}{5.39}=5.7157 \)

Because we estimated d = 1 parameter, we need to compare our chi-square statistic to a chi-square distribution with (9−1)−1 = 7 degrees of freedom. That is, our critical region is defined as:

\(\text{Reject } H_0 \text{ if } Q_8 \ge \chi_{8-1, 0.05}^{2}=\chi_{7, 0.05}^{2}=14.07 \)

Because our test statistic doesn't fall in the rejection region, that is:

\(Q_8=5.7157 < \chi_{7, 0.05}^{2}=14.07\)

we fail to reject the null hypothesis. There is insufficient evidence at the 0.05 level to conclude that the data don't fit a Poisson probability model.
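The same bookkeeping for the alpha particle data can be sketched in Python. The code follows the text in two respects that are worth flagging as choices rather than requirements: it uses the rounded estimate 5.6 for the mean, and it sums only P(X = 10), P(X = 11), and P(X = 12) for the last category, which is why the expected counts add up to slightly less than 100. Names such as `poisson_pmf` and `categories` are illustrative.

```python
import math

lam = 5.6  # rounded sample mean, used as the estimate of the Poisson mean

# Collapsed categories (values of X) and their observed counts.
categories = [(0, 1, 2), (3,), (4,), (5,), (6,), (7,), (8,), (9,), (10, 11, 12)]
observed = [5, 13, 19, 16, 15, 9, 12, 7, 4]
n = sum(observed)


def poisson_pmf(x, mean):
    """P(X = x) for a Poisson random variable with the given mean."""
    return math.exp(-mean) * mean**x / math.factorial(x)


# Category probabilities, expected counts, and the chi-square statistic.
probs = [sum(poisson_pmf(x, lam) for x in cat) for cat in categories]
expected = [n * p for p in probs]
q = sum((o - e) ** 2 / e for o, e in zip(observed, expected))

# One estimated parameter, so df = (9 - 1) - 1 = 7.
df = (len(categories) - 1) - 1
critical_value = 14.07  # upper 0.05 point of chi-square(7), taken from the text
reject = q >= critical_value

print([round(e, 2) for e in expected], round(q, 4), df, reject)
```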

16.4 - Continuous Random Variables

What if we are interested in using a chi-square goodness-of-fit test to see if our data follow some continuous distribution? That is, what if we want to test:

\( H_0 : F(w) =F_0(w)\)

where \(F_0 (w)\) is some known, specified distribution. Clearly, in this situation, it is no longer obvious what constitutes each of the categories. Perhaps we could all agree that the logical thing to do would be to divide up the interval of possible values into k "buckets" or "categories," called \(A_1, A_2, \dots, A_k\), say, into which the observed data can fall. Letting \(Y_i\) denote the number of times the observed value of W belongs to bucket \(A_i, i = 1, 2, \dots, k\), the random variables \(Y_1, Y_2, \dots, Y_k\) follow a multinomial distribution with parameters \(n, p_1, p_2, \dots, p_{k-1}\). The hypothesis that we actually test is a modification of the null hypothesis above, namely:

\(H_{0}^{'} : p_i = p_{i0}, i=1, 2, \dots , k \)

The hypothesis is rejected if the observed value of the chi-square statistic:

\(Q_{k-1} =\sum_{i=1}^{k}\frac{(Obs_i - Exp_i)^2}{Exp_i}\)

is at least as great as \(\chi_{\alpha}^{2}(k-1)\). If the hypothesis \(H_{0}^{'} : p_i = p_{i0}, i=1, 2, \dots , k\) is not rejected, then we do not reject the original hypothesis \(H_0 : F(w) =F_0(w)\) .

Let's make this proposed procedure more concrete by taking a look at an example.

Example 16-7

The IQs of one hundred randomly selected people were determined using the Stanford-Binet Intelligence Quotient Test. The resulting data were, in sorted order, as follows:

| 54 | 66 | 74 | 74 | 75 | 78 | 79 | 80 | 81 | 82 |

|---|---|---|---|---|---|---|---|---|---|

| 82 | 82 | 83 | 84 | 87 | 88 | 88 | 88 | 88 | 89 |

| 89 | 89 | 89 | 89 | 90 | 90 | 90 | 91 | 92 | 93 |

| 93 | 93 | 94 | 96 | 96 | 97 | 97 | 98 | 98 | 99 |

| 99 | 99 | 99 | 99 | 100 | 100 | 100 | 102 | 102 | 102 |

| 102 | 102 | 103 | 103 | 104 | 104 | 104 | 105 | 105 | 105 |

| 105 | 106 | 106 | 106 | 107 | 107 | 108 | 108 | 108 | 109 |

| 109 | 109 | 110 | 111 | 111 | 111 | 111 | 112 | 112 | 112 |

| 114 | 114 | 115 | 115 | 115 | 116 | 118 | 118 | 120 | 121 |

| 121 | 122 | 123 | 125 | 126 | 127 | 127 | 131 | 132 | 139 |

Test the null hypothesis that the data come from a normal distribution with a mean of 100 and a standard deviation of 16.

Answer

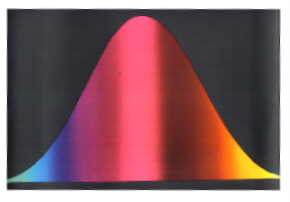

Hmm. So, where do we start? Well, we first have to define some categories. Let's divide up the interval of possible IQs into \(k = 10\) sets of equal probability \(\dfrac{1}{k} = \dfrac{1}{10}\). Perhaps this is best seen pictorially:

So, what's going on in this picture? Well, first the normal density is divided up into 10 intervals of equal probability (0.10). Well, okay, so the picture is not drawn very well to scale. At any rate, we then find the IQs that correspond to the \(k = 10\) cumulative probabilities of 0.1, 0.2, 0.3, etc. This is done in two steps:

- Step 1

first by finding the Z-scores associated with the cumulative probabilities 0.1, 0.2, 0.3, etc.

- Step 2

then by converting each Z-score into an X-value. It is those X-values (IQs) that will make up the "right-hand side" of each bucket:

| Category | Class |
|---|---|
| 1 | (\(-\infty\), 79.5) |
| 2 | (79.5, 86.5) |
| 3 | (86.5, 91.6) |
| 4 | (91.6, 95.9) |
| 5 | (95.9, 100.0) |
| 6 | (100.0, 104.1) |
| 7 | (104.1, 108.4) |
| 8 | (108.4, 113.5) |
| 9 | (113.5, 120.5) |
| 10 | (120.5, \(\infty\)) |

Now, it's just a matter of counting the number of observations that fall into each bucket to get the observed (Obs'd) column, and calculating the expected number (0.10 × 100 = 10) to get the expected (Exp'd) column:

| Category | Class | Obs'd | Exp'd | Contribution to \(Q\) |

|---|---|---|---|---|

| 1 | (\(-\infty\),79.5) | 7 | 10 | \(\left(7-10\right)^2 / 10 = 0.9\) |

| 2 | (79.5, 86.5) | 7 | 10 | \(\left(7-10\right)^2 / 10 = 0.9\) |

| 3 | (86.5, 91.6) | 14 | 10 | \(\left(14-10\right)^2 / 10 = 1.6\) |

| 4 | (91.6, 95.9) | 5 | 10 | \(\left(5-10\right)^2 / 10 = 2.5\) |

| 5 | (95.9, 100.0) | 14 | 10 | \(\left(14-10\right)^2 / 10 = 1.6\) |

| 6 | (100.0, 104.1) | 10 | 10 | \(\left(10-10\right)^2 / 10 = 0.0\) |

| 7 | (104.1, 108.4) | 12 | 10 | \(\left(12-10\right)^2 / 10 = 0.4\) |

| 8 | (108.4, 113.5) | 11 | 10 | \(\left(11-10\right)^2 / 10 = 0.1\) |

| 9 | (113.5, 120.5) | 9 | 10 | \(\left(9-10\right)^2 / 10 = 0.1\) |

| 10 | (120.5, \(\infty\)) | 11 | 10 | \(\left(11-10\right)^2 / 10 = 0.1\) |

| Total | | \(n = 100\) | \(n = 100\) | \(Q_9 = 8.2\) |

As illustrated in the table, using the observed and expected numbers, we see that the chi-square statistic is 8.2. We reject if the following is true:

\(Q_9 =8.2 \ge \chi_{10-1, 0.05}^{2} =\chi_{9, 0.05}^{2}=16.92\)

It isn't! We do not reject the null hypothesis at the 0.05 level. There is insufficient evidence to conclude that the data do not follow a normal distribution with a mean of 100 and a standard deviation of 16.
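The bucket construction and counting for the IQ example can be reproduced with Python's standard library. This is a sketch under two assumptions: `statistics.NormalDist.inv_cdf` is used to find the cut points (the course itself uses Minitab), and each bucket is treated as closed on the right, so that an IQ of exactly 100 lands in bucket 5, which matches the counts in the table. The critical value 16.92 is copied from the text.

```python
from bisect import bisect_left
from statistics import NormalDist

iq = [
    54, 66, 74, 74, 75, 78, 79, 80, 81, 82,
    82, 82, 83, 84, 87, 88, 88, 88, 88, 89,
    89, 89, 89, 89, 90, 90, 90, 91, 92, 93,
    93, 93, 94, 96, 96, 97, 97, 98, 98, 99,
    99, 99, 99, 99, 100, 100, 100, 102, 102, 102,
    102, 102, 103, 103, 104, 104, 104, 105, 105, 105,
    105, 106, 106, 106, 107, 107, 108, 108, 108, 109,
    109, 109, 110, 111, 111, 111, 111, 112, 112, 112,
    114, 114, 115, 115, 115, 116, 118, 118, 120, 121,
    121, 122, 123, 125, 126, 127, 127, 131, 132, 139,
]

k = 10                                # number of equal-probability buckets
model = NormalDist(mu=100, sigma=16)  # hypothesized distribution under H_0

# Right-hand endpoints of buckets 1..9: the 0.1, 0.2, ..., 0.9 quantiles.
cuts = [model.inv_cdf(i / k) for i in range(1, k)]

# Count observations per bucket; bisect_left treats each bucket as (a, b].
observed = [0] * k
for x in iq:
    observed[bisect_left(cuts, x)] += 1

expected = len(iq) / k  # 100 * 0.10 = 10 per bucket
q = sum((o - expected) ** 2 / expected for o in observed)

critical_value = 16.92  # upper 0.05 point of chi-square(9), taken from the text
reject = q >= critical_value

print([round(c, 1) for c in cuts])
print(observed, round(q, 1), reject)
```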

16.5 - Using Minitab to Lighten the Workload

Example 16-8

This is how I used Minitab to help with the calculations of the alpha particle example on the Unspecified Probabilities page in this lesson.

- Use Minitab's Calc >> Probability distribution >> Poisson command to determine the Poisson(5.6) probabilities:

Poisson with mean = 5.6

| x | P(X = x) |
|---|---|
| 0 | 0.003698 |
| 1 | 0.020708 |
| 2 | 0.057983 |
| 3 | 0.108234 |
| 4 | 0.151528 |
| 5 | 0.169711 |
| 6 | 0.158397 |
| 7 | 0.126717 |
| 8 | 0.088702 |
| 9 | 0.055193 |
| 10 | 0.030908 |
| 11 | 0.015735 |
| 12 | 0.007343 |

- Enter the observed counts into one column and copy the probabilities (collapsing some categories, if necessary) into another column. Use Minitab's Calc >> Calculator command to generate the remaining necessary columns:

| Row | Obsd | p_i | Expd | Chisq |
|---|---|---|---|---|
| 1 | 5 | 0.082389 | 8.2389 | 1.27329 |
| 2 | 13 | 0.108234 | 10.8234 | 0.43772 |
| 3 | 19 | 0.151528 | 15.1528 | 0.97678 |
| 4 | 16 | 0.169711 | 16.9711 | 0.05557 |
| 5 | 15 | 0.158397 | 15.8397 | 0.04451 |
| 6 | 9 | 0.126717 | 12.6717 | 1.06390 |
| 7 | 12 | 0.088702 | 8.8702 | 1.10433 |
| 8 | 7 | 0.055193 | 5.5193 | 0.39724 |
| 9 | 4 | 0.053986 | 5.3986 | 0.36233 |

- Sum up the "Chisq" column to obtain the chi-square statistic Q.

Example 16-9

This is how I used Minitab to help with the calculations of the IQ example on the Continuous Random Variables page in this lesson.

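- The sorted data (the Sample column):

| 54 | 66 | 74 | 74 | 75 | 78 | 79 | 80 | 81 | 82 |
|---|---|---|---|---|---|---|---|---|---|
| 82 | 82 | 83 | 84 | 87 | 88 | 88 | 88 | 88 | 89 |
| 89 | 89 | 89 | 89 | 90 | 90 | 90 | 91 | 92 | 93 |
| 93 | 93 | 94 | 96 | 96 | 97 | 97 | 98 | 98 | 99 |
| 99 | 99 | 99 | 99 | 100 | 100 | 100 | 102 | 102 | 102 |
| 102 | 102 | 103 | 103 | 104 | 104 | 104 | 105 | 105 | 105 |
| 105 | 106 | 106 | 106 | 107 | 107 | 108 | 108 | 108 | 109 |
| 109 | 109 | 110 | 111 | 111 | 111 | 111 | 112 | 112 | 112 |
| 114 | 114 | 115 | 115 | 115 | 116 | 118 | 118 | 120 | 121 |
| 121 | 122 | 123 | 125 | 126 | 127 | 127 | 131 | 132 | 139 |

- The working table:

| Row | CProb | Z | Sample | Obsd | Expd | Chisq |
|---|---|---|---|---|---|---|
| 1 | 0.1 | -1.28155 | 79.495 | 7 | 10 | 0.9 |
| 2 | 0.2 | -0.84162 | 86.534 | 7 | 10 | 0.9 |
| 3 | 0.3 | -0.52440 | 91.610 | 14 | 10 | 1.6 |
| 4 | 0.4 | -0.25355 | 95.946 | 5 | 10 | 2.5 |
| 5 | 0.5 | 0.00000 | 100.000 | 14 | 10 | 1.6 |
| 6 | 0.6 | 0.25355 | 104.054 | 10 | 10 | 0.0 |
| 7 | 0.7 | 0.52440 | 108.390 | 12 | 10 | 0.4 |
| 8 | 0.8 | 0.84162 | 113.466 | 11 | 10 | 0.1 |
| 9 | 0.9 | 1.28155 | 120.505 | 9 | 10 | 0.1 |
| 10 | | | | 11 | 10 | 0.1 |

- The chi-square statistic: summing the Chisq column gives \(Q_9 = 8.2\).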

Lesson 17: Contingency Tables

Overview

In this lesson, we'll investigate two more applications of the chi-square test. We'll first look at a method for testing whether two or more multinomial distributions are equal. This method is often referred to as the test for homogeneity. (Homogenized milk... get it?)

We'll then look at a method for testing whether two or more categorical variables are independent. This test is often referred to as the test for independence. It allows us to test for independence of, say, an individual's political affiliation and his/her preference for a particular presidential candidate.

Objectives

- To learn how to conduct a test for homogeneity.

- To learn how to conduct a test for independence.

- To understand the proofs in the lesson.

- To be able to apply the methods learned in the lesson to new situations.

17.1 - Test For Homogeneity

As suggested in the introduction to this lesson, the test for homogeneity is a method, based on the chi-square statistic, for testing whether two or more multinomial distributions are equal. Let's start by trying to get a feel for how our data might "look" if we have two equal multinomial distributions.

Example 17-1

A university admissions officer was concerned that males and females were accepted at different rates into the four different schools (business, engineering, liberal arts, and science) at her university. She collected the following data on the acceptance of 1200 males and 800 females who applied to the university:

| #(Acceptances) | Business | Engineer | Lib Arts | Science | (FIXED) Total |

|---|---|---|---|---|---|

| Male | 300 (25%) | 240 (20%) | 300 (25%) | 360 (30%) | 1200 |

| Female | 200 (25%) | 160 (20%) | 200 (25%) | 240 (30%) | 800 |

| Total | 500 (25%) | 400 (20%) | 500 (25%) | 600 (30%) | 2000 |

Are males and females distributed equally among the various schools?

Answer

Let's start by focusing on the business school. We can see that, of the 1200 males who applied to the university, 300 (or 25%) were accepted into the business school. Of the 800 females who applied to the university, 200 (or 25%) were accepted into the business school. So, the business school looks to be in good shape, as an equal percentage of males and females, namely 25%, were accepted into it.

Now, for the engineering school. We can see that, of the 1200 males who applied to the university, 240 (or 20%) were accepted into the engineering school. Of the 800 females who applied to the university, 160 (or 20%) were accepted into the engineering school. So, the engineering school also looks to be in good shape, as an equal percentage of males and females, namely 20%, were accepted into it.

We probably don't have to drag this out any further. If we look at each column in the table, we see that the proportion of males accepted into each school is the same as the proportion of females accepted into each school... which therefore happens to equal the proportion of students accepted into each school, regardless of gender. Therefore, we can conclude that males and females are distributed equally among the four schools.

Example 17-2

A university admissions officer was concerned that males and females were accepted at different rates into the four different schools (business, engineering, liberal arts, and science) at her university. She collected the following data on the acceptance of 1200 males and 800 females who applied to the university:

| #(Acceptances) | Business | Engineer | Lib Arts | Science | (FIXED) Total |

|---|---|---|---|---|---|

| Male | 240 (20%) | 480 (40%) | 120 (10%) | 360 (30%) | 1200 |

| Female | 240 (30%) | 80 (10%) | 320 (40%) | 160 (20%) | 800 |

| Total | 480 (24%) | 560 (28%) | 440 (22%) | 520 (26%) | 2000 |

Are males and females distributed equally among the various schools?

Answer

Let's again start by focusing on the business school. In this case, of the 1200 males who applied to the university, 240 (or 20%) were accepted into the business school. And, of the 800 females who applied to the university, 240 (or 30%) were accepted into the business school. So, the business school appears to have different rates of acceptance for males and females, 20% compared to 30%.

Now, for the engineering school. We can see that, of the 1200 males who applied to the university, 480 (or 40%) were accepted into the engineering school. Of the 800 females who applied to the university, only 80 (or 10%) were accepted into the engineering school. So, the engineering school also appears to have different rates of acceptance for males and females, 40% compared to 10%.

Again, there's no need to drag this out any further. If we look at each column in the table, we see that the proportion of males accepted into each school is different from the proportion of females accepted into each school... and therefore the proportion of students accepted into each school, regardless of gender, is different from the proportion of males and females accepted into each school. Therefore, we can conclude that males and females are not distributed equally among the four schools.

In the context of the two examples above, it quickly becomes apparent that if we wanted to formally test the hypothesis that males and females are distributed equally among the four schools, we'd want to test the hypotheses:

\(H_0 : p_{MB} =p_{FB} \text{ and } p_{ME} =p_{FE} \text{ and } p_{ML} =p_{FL} \text{ and } p_{MS} =p_{FS}\)

\(H_A : p_{MB} \ne p_{FB} \text{ or } p_{ME} \ne p_{FE} \text{ or } p_{ML} \ne p_{FL} \text{ or } p_{MS} \ne p_{FS}\)

where:

- \(p_{Mj}\) is the proportion of males accepted into school j = B, E, L, or S

- \(p_{Fj}\) is the proportion of females accepted into school j = B, E, L, or S

In conducting such a hypothesis test, we're comparing the proportions of two multinomial distributions. Before we can develop the method for conducting such a hypothesis test, that is, for comparing the proportions of two multinomial distributions, we first need to define some notation.

Notation

We'll use what I think most statisticians would consider standard notation, namely that:

- The letter i will index the h row categories, and

- The letter j will index the k column categories

(The text reverses the use of the i index and the j index.) That said, let's use the framework of the previous examples to introduce the notation we'll use. That is, rewrite the tables above using the following generic notation:

| #(Acc) | Bus \(\left(j = 1 \right)\) | Eng \(\left(j = 2 \right)\) | L Arts \(\left(j = 3 \right)\) | Sci \(\left(j = 4 \right)\) | (FIXED) Total |

|---|---|---|---|---|---|

| M \(\left(i = 1 \right)\) | \(y_{11} \left(\hat{p}_{11} \right)\) | \(y_{12} \left(\hat{p}_{12} \right)\) | \(y_{13} \left(\hat{p}_{13} \right)\) | \(y_{14} \left(\hat{p}_{14} \right)\) | \(n_{1}=\sum\limits_{j=1}^{k} y_{1 j}\) |

| F \(\left(i = 2 \right)\) | \(y_{21} \left(\hat{p}_{21} \right)\) | \(y_{22} \left(\hat{p}_{22} \right)\) | \(y_{23} \left(\hat{p}_{23} \right)\) | \(y_{24} \left(\hat{p}_{24} \right)\) | \(n_{2}=\sum\limits_{j=1}^{k} y_{2 j}\) |

| Total | \(y_{11} + y_{21} \left(\hat{p}_1 \right)\) | \(y_{12} + y_{22} \left(\hat{p}_2 \right)\) | \(y_{13} + y_{23} \left(\hat{p}_3 \right)\) | \(y_{14} + y_{24} \left(\hat{p}_4 \right)\) | \(n_1 + n_2\) |

with:

- \(y_{ij}\) denoting the number falling into the \(j^{th}\) category of the \(i^{th}\) sample

- \(\hat{p}_{ij}=y_{ij}/n_i\) denoting the proportion in the \(i^{th}\) sample falling into the \(j^{th}\) category

- \(n_i=\sum_{j=1}^{k}y_{ij}\) denoting the total number in the \(i^{th}\) sample

- \(\hat{p}_{j}=(y_{1j}+y_{2j})/(n_1+n_2)\) denoting the (overall) proportion falling into the \(j^{th}\) category
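To make the notation concrete, here is a small Python sketch that computes each quantity for the counts of Example 17-2. The variable names (`y`, `n`, `p_hat`, `p_pooled`) are illustrative choices, not part of the lesson.

```python
# Observed counts y[i][j] from Example 17-2: rows are the samples (males, females),
# columns are the schools (business, engineering, liberal arts, science)
y = [
    [240, 480, 120, 360],
    [240, 80, 320, 160],
]

# n_i: total number in the i-th sample (row totals)
n = [sum(row) for row in y]

# p_hat_ij = y_ij / n_i: proportion of the i-th sample falling into the j-th category
p_hat = [[y_ij / n_i for y_ij in row] for row, n_i in zip(y, n)]

# p_hat_j = (y_1j + y_2j) / (n_1 + n_2): pooled proportion in the j-th category
col_totals = [sum(col) for col in zip(*y)]
p_pooled = [c / sum(n) for c in col_totals]

print(n)         # sample sizes
print(p_hat)     # per-sample proportions
print(p_pooled)  # pooled proportions
```

The per-sample and pooled proportions should agree with the percentages shown in the Example 17-2 table.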

With the notation defined as such, we are now ready to formulate the chi-square test statistic for testing the equality of two multinomial distributions.

The Chi-Square Test Statistic

Theorem

The chi-square test statistic for testing the equality of two multinomial distributions:

\(Q=\sum_{i=1}^{2}\sum_{j=1}^{k}\frac{(y_{ij}- n_i\hat{p}_j)^2}{n_i\hat{p}_j}\)

follows an approximate chi-square distribution with k−1 degrees of freedom. Reject the null hypothesis of equal proportions if Q is large, that is, if:

\(Q \ge \chi_{\alpha, k-1}^{2}\)

Proof

For the sake of concreteness, let's again use the framework of our example above to derive the chi-square test statistic. For one of the samples, say for the males, we know that:

\(\sum_{j=1}^{k}\frac{(\text{observed }-\text{ expected})^2}{\text{expected}}=\sum_{j=1}^{k}\frac{(y_{1j}- n_1p_{1j})^2}{n_1p_{1j}} \)

follows an approximate chi-square distribution with k−1 degrees of freedom. For the other sample, that is, for the females, we know that:

\(\sum_{j=1}^{k}\frac{(\text{observed }-\text{ expected})^2}{\text{expected}}=\sum_{j=1}^{k}\frac{(y_{2j}- n_2p_{2j})^2}{n_2p_{2j}} \)

follows an approximate chi-square distribution with k−1 degrees of freedom. Therefore, by the independence of two samples, we can "add up the chi-squares," that is:

\(\sum_{i=1}^{2}\sum_{j=1}^{k}\frac{(y_{ij}- n_ip_{ij})^2}{n_ip_{ij}}\)

follows an approximate chi-square distribution with k−1+ k−1 = 2(k−1) degrees of freedom.

Oops.... but we have a problem! The \(p_{ij}\)'s are unknown to us. Of course, we know by now that the solution is to estimate the \(p_{ij}\)'s. Now just how to do that? Well, if the null hypothesis is true, the proportions are equal, that is, if:

\(p_{11}=p_{21}, p_{12}=p_{22}, ... , p_{1k}=p_{2k} \)

we would be best served by using all of the data across the sample categories. That is, the best estimate for the \(j^{th}\) category is the pooled estimate:

\(\hat{p}_j=\frac{y_{1j}+y_{2j}}{n_1+n_2}\)

We also know by now that because we are estimating some parameters, we have to adjust the degrees of freedom. The pooled estimates \(\hat{p}_j\) estimate the true unknown proportions \(p_{1j} = p_{2j} = p_j\). Now, if we know the first k−1 estimates, that is, if we know:

\(\hat{p}_1, \hat{p}_2, ... , \hat{p}_{k-1}\)

then the \(k^{th}\) one, that is \(\hat{p}_k\), is determined because:

\(\sum_{j=1}^{k}\hat{p}_j=1\)

That is:

\(\hat{p}_k=1-(\hat{p}_1+\hat{p}_2+ ... + \hat{p}_{k-1})\)

So, we are estimating k−1 parameters, and therefore we have to subtract k−1 from the degrees of freedom. Doing so, we get that:

\(Q=\sum_{i=1}^{2}\sum_{j=1}^{k}\frac{(y_{ij}- n_i\hat{p}_j)^2}{n_i\hat{p}_j}\)

follows an approximate chi-square distribution with 2(k−1) − (k−1) = k − 1 degrees of freedom. As was to be proved!

Note

Our only example on this page has involved \(h = 2\) samples. If there are more than two samples, that is, if \(h > 2\), then the definition of the chi-square statistic is appropriately modified. That is:

\(Q=\sum_{i=1}^{h}\sum_{j=1}^{k}\frac{(y_{ij}- n_i\hat{p}_j)^2}{n_i\hat{p}_j}\)

follows an approximate chi-square distribution with \(h(k−1) − (k−1) = (h−1)(k − 1)\) degrees of freedom.
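The general statistic translates almost directly into code. The following is a minimal Python sketch, assuming the counts are given as a list of \(h\) rows with \(k\) columns each and that no column total is zero; the function name `homogeneity_statistic` is a hypothetical helper, not something defined in the lesson.

```python
def homogeneity_statistic(y):
    """Return (Q, degrees of freedom) for an h x k table of counts y[i][j].

    Assumes every column total is positive, so no expected count is zero.
    """
    h, k = len(y), len(y[0])
    n = [sum(row) for row in y]                        # sample sizes n_i
    total = sum(n)
    p_pooled = [sum(col) / total for col in zip(*y)]   # pooled estimates p_hat_j
    q = sum(
        (y[i][j] - n[i] * p_pooled[j]) ** 2 / (n[i] * p_pooled[j])
        for i in range(h)
        for j in range(k)
    )
    return q, (h - 1) * (k - 1)


example_17_1 = [[300, 240, 300, 360], [200, 160, 200, 240]]
example_17_2 = [[240, 480, 120, 360], [240, 80, 320, 160]]

q1, df1 = homogeneity_statistic(example_17_1)
q2, df2 = homogeneity_statistic(example_17_2)
print(round(q1, 6), df1)
print(round(q2, 2), df2)
```

For Example 17-1, where the male and female proportions are identical, \(Q\) is zero up to floating-point rounding. For Example 17-2 it is very large, which matches the informal conclusions drawn from the two tables.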

Let's take a look at another example.

Example 17-3

The head of a surgery department at a university medical center was concerned that surgical residents in training applied unnecessary blood transfusions at a different rate than the more experienced attending physicians. Therefore, he ordered a study of the 49 Attending Physicians and 71 Residents in Training with privileges at the hospital. For each of the 120 surgeons, the number of blood transfusions prescribed unnecessarily in a one-year period was recorded. Based on the number recorded, a surgeon was identified as either prescribing unnecessary blood transfusions Frequently, Occasionally, Rarely, or Never. Here's a summary table (or "contingency table") of the resulting data:

| Physician | Frequent | Occasionally | Rarely | Never | Total |

|---|---|---|---|---|---|

| Attending | 2 (4.1%) | 3 (6.1%) | 31 (63.3%) | 13 (26.5%) | 49 |

| Resident | 15 (21.1%) | 28 (39.4%) | 23 (32.4%) | 5 (7.0%) | 71 |

| Total | 17 | 31 | 54 | 18 | 120 |

Are attending physicians and residents in training distributed equally among the various unnecessary blood transfusion categories?

Answer

We are interested in testing the null hypothesis:

\(H_0 : p_{RF} =p_{AF} \text{ and } p_{RO} =p_{AO} \text{ and } p_{RR} =p_{AR} \text{ and } p_{RN} =p_{AN}\)

against the alternative hypothesis:

\(H_A : p_{RF} \ne p_{AF} \text{ or } p_{RO} \ne p_{AO} \text{ or } p_{RR} \ne p_{AR} \text{ or } p_{RN} \ne p_{AN}\)

The observed data were given to us in the table above. So, the next thing we need to do is find the expected counts for each cell of the table:

| Physician | Frequent | Occasionally | Rarely | Never | Total |

|---|---|---|---|---|---|

| Attending | | | | | 49 |
| Resident | | | | | 71 |
| Total | 17 | 31 | 54 | 18 | 120 |

It is in the calculation of the expected values that you can readily see why we have (2−1)(4−1) = 3 degrees of freedom in this case. That's because we only have to calculate three of the cells directly.

| Physician | Frequent | Occasionally | Rarely | Never | Total |

|---|---|---|---|---|---|

| Attending | 6.942 | 12.658 | 22.05 | | 49 |
| Resident | | | | | 71 |
| Total | 17 | 31 | 54 | 18 | 120 |

Once we do that, the remaining five cells can be calculated by way of subtraction:

| Physician | Frequent | Occasionally | Rarely | Never | Total |

|---|---|---|---|---|---|

| Attending | 6.942 | 12.658 | 22.05 | 7.35 | 49 |

| Resident | 10.058 | 18.342 | 31.95 | 10.65 | 71 |

| Total | 17 | 31 | 54 | 18 | 120 |

Now that we have the observed and expected counts, calculating the chi-square statistic is a straightforward exercise:

\(Q=\frac{(2-6.942)^2}{6.942}+ ... +\frac{(5-10.65)^2}{10.65} =31.88 \)

The chi-square test tells us to reject the null hypothesis, at the 0.05 level, if \(Q\) exceeds the upper 0.05 critical value of a chi-square distribution with 3 degrees of freedom, that is, if \(Q > 7.815\). Because \(Q = 31.88 > 7.815\), we reject the null hypothesis. There is sufficient evidence at the 0.05 level to conclude that the distribution of unnecessary transfusions differs between attending physicians and residents.
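The calculation in this example can be retraced with a short Python sketch. It mirrors the degrees-of-freedom argument above by computing only three expected counts directly and filling in the other five by subtraction from the margins. The variable names are illustrative, and the critical value 7.815 is simply the one quoted in the text.

```python
observed = [
    [2, 3, 31, 13],    # attending physicians
    [15, 28, 23, 5],   # residents in training
]
row_totals = [sum(row) for row in observed]          # 49, 71
col_totals = [sum(col) for col in zip(*observed)]    # 17, 31, 54, 18
n = sum(row_totals)                                  # 120

# Compute only (2-1)(4-1) = 3 expected counts directly ...
attending = [row_totals[0] * col_totals[j] / n for j in range(3)]
# ... and obtain the remaining five by subtraction from the margins.
attending.append(row_totals[0] - sum(attending))
resident = [col_totals[j] - attending[j] for j in range(4)]
expected = [attending, resident]

q = sum(
    (o - e) ** 2 / e
    for obs_row, exp_row in zip(observed, expected)
    for o, e in zip(obs_row, exp_row)
)
critical_value = 7.815  # upper 0.05 point of chi-square with 3 df, as quoted in the text

print([[round(e, 3) for e in row] for row in expected])
print(round(q, 2), q > critical_value)
```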

Using Minitab

If you...

- Enter the data (in the inside of the frequency table only) into the columns of the worksheet

- Select Stat >> Tables >> Chi-square test

then you'll get typical chi-square test output that looks something like this:

| | Freq | Occ | Rare | Never | Total |
|---|---|---|---|---|---|
| 1 | 2 (6.94) | 3 (12.66) | 31 (22.05) | 13 (7.35) | 49 |
| 2 | 15 (10.06) | 28 (18.34) | 23 (31.95) | 5 (10.65) | 71 |
| Total | 17 | 31 | 54 | 18 | 120 |

(In each cell, the observed count is followed by the expected count in parentheses.)

Chi-Sq = 3.518 + 7.369 + 3.633 + 4.343 + 2.428 + 5.086 + 2.507 + 2.997 = 31.881

DF = 3, P-Value = 0.000

17.2 - Test for Independence

One of the primary things that distinguishes the test for independence, which we'll be studying on this page, from the test for homogeneity is the way in which the data are collected. So, let's start by addressing the sampling schemes for each of the two situations.

The Sampling Schemes

For the sake of concreteness, suppose we're interested in comparing the proportions of high school freshmen and high school seniors falling into various driving categories — perhaps, those who don't drive at all, those who drive unsafely, and those who drive safely. We randomly select 100 freshmen and 100 seniors and then observe into which of the three driving categories each student falls:

| Samples (OBSERVED driving habits) | Category \(j = 1\) | \(j = 2\) | \(\cdots\) | \(j = k\) | Total |
|---|---|---|---|---|---|
| Freshmen \(i = 1\) | | | | | \(n_1 = 100\) |
| Seniors \(i = 2\) | | | | | \(n_2 = 100\) |
| Total | | | | | |

In this case, we are interested in conducting a test of homogeneity for testing the null hypothesis:

\(H_0 : p_{F1}=p_{S1} \text{ and }p_{F2}=p_{S2} \text{ and } \cdots \text{ and } p_{Fk}=p_{Sk}\)

against the alternative hypothesis:

\(H_A : p_{F1}\ne p_{S1} \text{ or }p_{F2}\ne p_{S2} \text{ or } \cdots \text{ or } p_{Fk}\ne p_{Sk}\).

For this example, the sampling scheme involves:

- Taking two random (and therefore independent) samples with \(n_1\) and \(n_2\) fixed in advance,

- Observing into which of the k categories the freshmen fall, and

- Observing into which of the k categories the seniors fall.

Now, let's consider a different example to illustrate an alternative sampling scheme. Suppose 395 people are randomly selected, and are "cross-classified" into one of eight cells, depending on which age category they fall into and whether or not they support legalizing marijuana:

| Variable A (Marijuana Support), OBSERVED | Variable B (Age): (18-24) \(B_1\) | (25-34) \(B_2\) | (35-49) \(B_3\) | (50-64) \(B_4\) | Total |
|---|---|---|---|---|---|
| (YES) \(A_1\) | 60 | 54 | 46 | 41 | 201 |
| (NO) \(A_2\) | 40 | 44 | 53 | 57 | 194 |
| Total | 100 | 98 | 99 | 98 | \(n = 395\) |

In this case, we are interested in conducting a test of independence for testing the null hypothesis:

\(H_0 \colon\) Variable A is independent of variable B, that is, \(P(A_i \cap B_j)=P(A_i) \times P(B_j)\) for all i and j.

against the alternative hypothesis \(H_A \colon\) Variable A is not independent of variable B.

For this example, the sampling scheme involves:

- Taking one random sample of size n, with n fixed in advance, and

- Then "cross-classifying" each subject into one and only one of the mutually exclusive and exhaustive \(A_i \cap B_j \) cells.

Note that, in this case, both the row totals and column totals are random... it is only the total number n sampled that is fixed in advance. It is this sampling scheme and the resulting test for independence that will be the focus of our attention on this page. Now, let's jump right to the punch line.

The Punch Line

The same chi-square test works! It doesn't matter how the sampling was done. But, it's traditional to still think of the two tests, the one for homogeneity and the one for independence, in different lights.

Just as we did before, let's start with clearly defining the notation we will use.

Notation

Suppose we have k (column) levels of Variable B indexed by the letter j, and h (row) levels of Variable A indexed by the letter i. Then, we can summarize the data and probability model in tabular format, as follows:

| Variable A (rows), Variable B (columns) | \(B_1 \left(j = 1\right)\) | \(B_2 \left(j = 2\right)\) | \(B_3 \left(j = 3\right)\) | \(B_4 \left(j = 4\right)\) | Total |
|---|---|---|---|---|---|
| \(A_1 \left(i = 1\right)\) | \(Y_{11} \left(p_{11}\right)\) | \(Y_{12} \left(p_{12}\right)\) | \(Y_{13} \left(p_{13}\right)\) | \(Y_{14} \left(p_{14}\right)\) | \(\left(p_{1.}\right)\) |
| \(A_2 \left(i = 2\right)\) | \(Y_{21} \left(p_{21}\right)\) | \(Y_{22} \left(p_{22}\right)\) | \(Y_{23} \left(p_{23}\right)\) | \(Y_{24} \left(p_{24}\right)\) | \(\left(p_{2.}\right)\) |
| Total | \(\left(p_{.1}\right)\) | \(\left(p_{.2}\right)\) | \(\left(p_{.3}\right)\) | \(\left(p_{.4}\right)\) | \(n\) |

where:

- \(Y_{ij}\) denotes the frequency of event \(A_i \cap B_j \)

- The probability that a randomly selected observation falls into the cell defined by \(A_i \cap B_j \) is \(p_{ij}=P(A_i \cap B_j)\) and is estimated by \(Y_{ij}/n\)

- The probability that a randomly selected observation falls into a row defined by \(A_i\) is \(p_{i.}=P(A_i)=\sum_{j=1}^{k}p_{ij}\) ("dot notation")

- The probability that a randomly selected observation falls into a column defined by \(B_j\) is \(p_{.j}=P(B_j)=\sum_{i=1}^{h}p_{ij}\) ("dot notation")
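As an illustration of the dot notation, the Python sketch below computes the natural estimates of the cell, row, and column probabilities for the marijuana-support table above, replacing each \(p_{ij}\) with \(Y_{ij}/n\). The names `p_cell`, `p_row`, and `p_col` are illustrative only.

```python
# Observed counts Y_ij: rows A_1 (yes), A_2 (no); columns B_1..B_4 (age groups)
y = [
    [60, 54, 46, 41],
    [40, 44, 53, 57],
]
n = sum(sum(row) for row in y)                      # total sample size

p_cell = [[y_ij / n for y_ij in row] for row in y]  # estimates of p_ij
p_row = [sum(row) / n for row in y]                 # estimates of p_i. = P(A_i)
p_col = [sum(col) / n for col in zip(*y)]           # estimates of p_.j = P(B_j)

# Dot notation: summing the cell estimates over j recovers the row estimate
assert all(abs(sum(p_cell[i]) - p_row[i]) < 1e-12 for i in range(len(y)))

print(n)
print([round(p, 3) for p in p_row])
print([round(p, 3) for p in p_col])
```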

With the notation defined as such, we are now ready to formulate the chi-square test statistic for testing the independence of two categorical variables.

The Chi-Square Test Statistic

Theorem

The chi-square test statistic:

\(Q=\sum_{j=1}^{k}\sum_{i=1}^{h}\frac{(y_{ij}-\frac{y_{i.}y_{.j}}{n})^2}{\frac{y_{i.}y_{.j}}{n}} \)

for testing the independence of two categorical variables, one with h levels and the other with k levels, follows an approximate chi-square distribution with (h−1)(k−1) degrees of freedom.

Proof

We should be getting to be pros at deriving these chi-square tests. We'll do the proof in four steps.

- Step 1

We can think of the \(h \times k\) cells as arising from a multinomial distribution with \(h \times k\) categories. Then, in that case, as long as n is large, we know that:

\(Q_{kh-1}=\sum_{j=1}^{k}\sum_{i=1}^{h}\frac{(\text{observed }-\text{ expected})^2}{\text{ expected}} =\sum_{j=1}^{k}\sum_{i=1}^{h}\frac{(y_{ij}-np_{ij})^2}{np_{ij}}\)

follows an approximate chi-square distribution with \(kh−1\) degrees of freedom.

- Step 2

But the chi-square statistic, as defined in the first step, depends on some unknown parameters \(p_{ij}\). So, we'll estimate the \(p_{ij}\) assuming that the null hypothesis is true, that is, assuming independence:

\(p_{ij}=P(A_i \cap B_j)=P(A_i) \times P(B_j)=p_{i.}p_{.j} \)

Under the assumption of independence, it is therefore reasonable to estimate the \(p_{ij}\) with:

\(\hat{p}_{ij}=\hat{p}_{i.}\hat{p}_{.j}=\left(\frac{\sum_{j=1}^{k}y_{ij}}{n}\right) \left(\frac{\sum_{i=1}^{h}y_{ij}}{n}\right)=\frac{y_{i.}y_{.j}}{n^2}\)

- Step 3

Now, we have to determine how many parameters we estimated in the second step. Well, the fact that the row probabilities add to 1:

\(\sum_{i=1}^{h}p_{i.}=1 \)

implies that we've estimated \(h−1\) row parameters. And, the fact that the column probabilities add to 1:

\(\sum_{j=1}^{k}p_{.j}=1 \)

implies that we've estimated \(k−1\) column parameters. Therefore, we've estimated a total of \(h−1 + k − 1 = h + k − 2\) parameters.

- Step 4

Because we estimated \(h + k − 2\) parameters, we have to adjust the test statistic and degrees of freedom accordingly. Doing so, we get that:

\(Q=\sum_{j=1}^{k}\sum_{i=1}^{h}\frac{\left(y_{ij}-n\left(\frac{y_{i.}y_{.j}}{n^2}\right) \right)^2}{n\left(\frac{y_{i.}y_{.j}}{n^2}\right)} =\sum_{j=1}^{k}\sum_{i=1}^{h}\frac{\left(y_{ij}-\frac{y_{i.}y_{.j}}{n} \right)^2}{\left(\frac{y_{i.}y_{.j}}{n}\right)} \)

follows an approximate chi-square distribution with \((kh − 1)− ( h + k − 2)\) degrees of freedom, that is, upon simplification, \((h − 1)(k − 1)\) degrees of freedom.
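For readers who would like the simplification spelled out, one way to write it is:

\((kh-1)-(h+k-2)=kh-h-k+1=h(k-1)-(k-1)=(h-1)(k-1)\)

For instance, with \(h = 2\) and \(k = 4\), this gives \((8-1)-(2+4-2)=3\), which agrees with \((2-1)(4-1)=3\).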

By the way, I think I might have mumbled something up above about the equivalence of the chi-square statistic for homogeneity and the chi-square statistic for independence. In order to prove that the two statistics are indeed equivalent, we just have to show, for example, in the case when \(h = 2\), that:

\(\sum_{i=1}^{2}\sum_{j=1}^{k}\frac{\left(y_{ij}-n_i\left(\frac{y_{1j}+y_{2j}}{n_1+n_2}\right) \right)^2}{n_i\left(\frac{y_{1j}+y_{2j}}{n_1+n_2}\right)} =\sum_{i=1}^{2}\sum_{j=1}^{k}\frac{\left(y_{ij}-\frac{y_{i.}y_{.j}}{n} \right)^2}{\left(\frac{y_{i.}y_{.j}}{n}\right)} \)

Errrrrrr. That probably looks like a scarier proposition than it is, as showing that the above is true amounts to showing that:

\(n_i \left(\frac{y_{1j}+y_{2j}}{n_1+n_2}\right)=\frac{y_{i.}y_{.j}}{n} \)

Well, rewriting the left side a bit using dot notation, we get:

\(n_i \left(\frac{y_{.j}}{n}\right)=\frac{y_{i.}y_{.j}}{n} \)

and doing some algebraic simplification, we get:

\(n_i= y_{i.}\)

which certainly holds true, as \(n_i\) and \(y_{i.}\) mean the same thing, that is, the number of experimental units in the \(i^{th}\) row.
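The equivalence can also be checked numerically. The Python sketch below evaluates both forms of the statistic on the counts from Example 17-3; it is only a sanity check under that one data set, not a substitute for the algebra above, and the variable names are illustrative.

```python
y = [[2, 3, 31, 13], [15, 28, 23, 5]]   # counts from Example 17-3
h, k = len(y), len(y[0])

row = [sum(r) for r in y]               # y_i. (the same numbers as n_i)
col = [sum(c) for c in zip(*y)]         # y_.j
n = sum(row)

# Homogeneity form: expected count n_i * p_hat_j, with pooled p_hat_j = y_.j / n
q_homogeneity = sum(
    (y[i][j] - row[i] * (col[j] / n)) ** 2 / (row[i] * (col[j] / n))
    for i in range(h) for j in range(k)
)

# Independence form: expected count y_i. * y_.j / n
q_independence = sum(
    (y[i][j] - row[i] * col[j] / n) ** 2 / (row[i] * col[j] / n)
    for i in range(h) for j in range(k)
)

print(round(q_homogeneity, 3), round(q_independence, 3))
```

Both expressions give the same value, up to floating-point rounding.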

Example 17-4

Is age independent of the desire to ride a bicycle? A random sample of 395 people was surveyed. Each person was asked their interest in riding a bicycle (Variable A) and their age (Variable B). The data that resulted from the survey are summarized in the following table:

| Variable A (Bicycle Riding Interest), OBSERVED | Variable B (Age): (18-24) | (25-34) | (35-49) | (50-64) | Total |
|---|---|---|---|---|---|
| YES | 60 | 54 | 46 | 41 | 201 |
| NO | 40 | 44 | 53 | 57 | 194 |
| Total | 100 | 98 | 99 | 98 | 395 |

Is there evidence to conclude, at the 0.05 level, that the desire to ride a bicycle depends on age?

Answer

Here's the table of expected counts:

| Variable A (Bicycle Riding Interest), EXPECTED | Variable B (Age): 18-24 | 25-34 | 35-49 | 50-64 | Total |
|---|---|---|---|---|---|
| YES | 50.886 | 49.868 | 50.377 | 49.868 | 201 |
| NO | 49.114 | 48.132 | 48.623 | 48.132 | 194 |
| Total | 100 | 98 | 99 | 98 | 395 |

which results in a chi-square statistic of 8.006:

\(Q=\frac{(60-50.886)^2}{50.886}+ ... +\frac{(57-48.132)^2}{48.132}=8.006 \)

The chi-square test tells us to reject the null hypothesis, at the 0.05 level, if \(Q\) exceeds the upper 0.05 critical value of a chi-square distribution with 3 degrees of freedom, that is, if \(Q > 7.815\). Because \(Q = 8.006 > 7.815\), we reject the null hypothesis. There is sufficient evidence at the 0.05 level to conclude that the desire to ride a bicycle depends on age.
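Because \(Q\) only just exceeds the critical value here, it is worth looking at the p-value as well. The Python sketch below recomputes the expected counts and \(Q\), and then evaluates the upper-tail probability using a closed-form expression that holds for a chi-square distribution with exactly 3 degrees of freedom. The helper `chi2_sf_3df` is a hypothetical name introduced for this illustration; for other degrees of freedom a statistics library or table would be needed.

```python
import math

observed = [
    [60, 54, 46, 41],   # YES
    [40, 44, 53, 57],   # NO
]
row = [sum(r) for r in observed]          # y_i.
col = [sum(c) for c in zip(*observed)]    # y_.j
n = sum(row)

# Expected counts under independence: y_i. * y_.j / n
expected = [[r * c / n for c in col] for r in row]
q = sum(
    (observed[i][j] - expected[i][j]) ** 2 / expected[i][j]
    for i in range(len(row)) for j in range(len(col))
)


def chi2_sf_3df(x):
    """Upper-tail probability P(X > x) for a chi-square variable with 3 df.

    Closed form valid for 3 degrees of freedom only.
    """
    return math.erfc(math.sqrt(x / 2)) + math.sqrt(2 * x / math.pi) * math.exp(-x / 2)


p_value = chi2_sf_3df(q)
print(round(q, 3), round(p_value, 3))
```

The p-value comes out just below 0.05, consistent with \(Q\) sitting only slightly above 7.815.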

Using Minitab

If you...

- Enter the data (in the inside of the observed frequency table only) into the columns of the worksheet

- Select Stat >> Tables >> Chi-square test

then Minitab will display typical chi-square test output that looks something like this:

| | 18-24 | 25-34 | 35-49 | 50-64 | Total |
|---|---|---|---|---|---|
| 1 | 60 (50.89) | 54 (49.87) | 46 (50.38) | 41 (49.87) | 201 |
| 2 | 40 (49.11) | 44 (48.13) | 53 (48.62) | 57 (48.13) | 194 |
| Total | 100 | 98 | 99 | 98 | 395 |

(In each cell, the observed count is followed by the expected count in parentheses.)

Chi-Sq = 1.632 + 0.342 + 0.380 + 1.577 + 1.691 + 0.355 + 0.394 + 1.634 = 8.006

DF = 3, P-Value = 0.046

Lesson 18: Order Statistics

Overview

We typically don't pay particular attention to the order of a set of random variables \(X_1, X_2, \cdots, X_n\). But, what if we did? Suppose, for example, we needed to know the probability that the third largest value was less than 72. Or, suppose we needed to know the 80th percentile of a random sample of heart rates. In either case, we'd need to know something about how the order of the data behaved. That is, we'd need to know something about the probability density function of the order statistics \(Y_1, Y_2, \cdots, Y_n\). That's what we'll groove on in this lesson.

Objectives

- To learn the formal definition of order statistics.

- To derive the distribution function of the \(r^{th}\) order statistic.

- To derive the probability density function of the \(r^{th}\) order statistic.

- To derive a method for finding the \((100p)^{th}\) percentile of the sample.

18.1 - The Basics

Example 18-1

Let's motivate the definition of a set of order statistics by way of a simple example.

Suppose a random sample of five rats yields the following weights (in grams):

\(x_1=602 \qquad x_2=781\qquad x_3=709\qquad x_4=742\qquad x_5=633\)

What are the observed order statistics of this set of data?

Answer

Well, without even knowing the formal definition of an order statistic, we probably don't need a rocket scientist to tell us that, in order to find the order statistics, we should probably arrange the data in increasing numerical order. Doing so, the observed order statistics are:

\(y_1=602<y_2=633<y_3=709<y_4=742<y_5=781\)

The only thing that might have tripped us up a bit in such a trivial example is if two of the rats had shared the same weight, as observing ties is certainly a possibility. We'll wash our hands of the likelihood of that happening, though, by making an assumption that will hold throughout this lesson... and beyond. We will assume that the n independent observations come from a continuous distribution, thereby making the probability zero that any two observations are equal. Of course, ties are still possible in practice. Making the assumption, though, that there is a near zero chance of a tie happening allows us to develop the distribution theory of order statistics that holds at least approximately even in the presence of ties. That said, let's now formally provide a definition for a set of order statistics.

Definition. If \(X_1, X_2, \cdots, X_n\) are observations of a random sample of size \(n\) from a continuous distribution, we let the random variables:

\(Y_1<Y_2<\cdots<Y_n\)

denote the order statistics of the sample, with:

- \(Y_1\) being the smallest of the \(X_1, X_2, \cdots, X_n\) observations

- \(Y_2\) being the second smallest of the \(X_1, X_2, \cdots, X_n\) observations

- ....

- \(Y_{n-1}\) being the next-to-largest of the \(X_1, X_2, \cdots, X_n\) observations

- \(Y_n\) being the largest of the \(X_1, X_2, \cdots, X_n\) observations
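In code, the observed order statistics are just the sorted sample. A minimal Python sketch using the rat weights from Example 18-1 (the helper `order_statistic` is a hypothetical name, introduced only for illustration):

```python
# Weights (in grams) of the five rats in Example 18-1
x = [602, 781, 709, 742, 633]

# The observed order statistics y_1 < y_2 < ... < y_n are the sorted sample
y = sorted(x)


def order_statistic(sample, r):
    """Return the r-th smallest value (r = 1 is the minimum, r = n the maximum)."""
    return sorted(sample)[r - 1]


print(y)
print(order_statistic(x, 1), order_statistic(x, len(x)))
```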

Now, what we want to do is work our way up to find the probability density function of any of the \(n\) order statistics, the \(r^{th}\) order statistic \(Y_r\). That way, we'd know how the order statistics behave and therefore could use that knowledge to draw conclusions about something like the fastest automobile in a race or the heaviest mouse on a certain diet. In finding the probability density function, we'll use the distribution function technique to do so. It's probably been a mighty bit since we used the technique, so in case you need a reminder, our strategy will be to first find the distribution function \(G_r(y)\) of the \(r^{th}\) order statistic, and then take its derivative to find the probability density function \(g_r(y)\) of the \(r^{th}\) order statistic. We're getting a little bit ahead of ourselves though. That's what we'll do on the next page. To make our work there more understandable, let's first take a look at a concrete example here.

Example 18-2

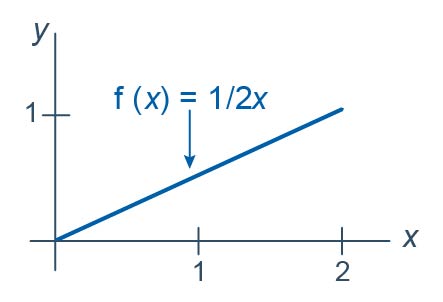

Let \(Y_1<Y_2<Y_3<Y_4<Y_5<Y_6\) be the order statistics associated with \(n=6\) independent observations each from the distribution with probability density function:

\(f(x)=\dfrac{1}{2}x\)

for \(0<x<2\). What is the probability that the next-to-largest order statistic, that is, \(Y_5\), is less than 1? That is, what is \(P(Y_5<1)\)?

Answer

The key to finding the desired probability is to recognize that the only way that the fifth order statistic, \(Y_5\), would be less than one is if at least 5 of the random variables \(X_1, X_2, X_3, X_4, X_5, \text{ and }X_6\) are less than one. For the sake of simplicity, let's suppose the first five observed values \(x_1, x_2, x_3, x_4, x_5\) are less than one, but the sixth \(x_6\) is not. In that case, the observed fifth-order statistic, \(y_5\), would be less than one:

The observed fifth order statistic, \(y_5\), would also be less than one if all six of the observed values \(x_1, x_2, x_3, x_4, x_5, x_6\) are less than one:

The observed fifth order statistic, \(y_5\), would not be less than one if the first four observed values \(x_1, x_2, x_3, x_4\) are less than one, but the fifth \(x_5\) and sixth \(x_6\) are not:

Again, the only way that the fifth order statistic, \(Y_5\), would be less than one is if 5 or 6... that is, at least 5... of the random variables \(X_1, X_2, X_3, X_4, X_5, \text{ and }X_6\) are less than one. For the sake of simplicity, we considered just the first five or six random variables, but in reality, any five or six random variables less than one would do. We just have to do some "choosing" to count the number of ways that we can get any five or six of the random variables to be less than one.

If you think about it, then, we have a binomial probability calculation here. If the event \(\{X_i<1\}\), \(i=1, 2, \cdots, 6\), is considered a "success," and we let \(Z\) = the number of successes in six mutually independent trials, then \(Z\) is a binomial random variable with \(n=6\) and \(p=0.25\):

\(P(X_i\le1)=\dfrac{1}{2}\int_{0}^{1}x dx=\dfrac{1}{2}\left[\dfrac{x^2}{2}\right]_{x=0}^{x=1}=\dfrac{1}{2}\left(\dfrac{1}{2}-0\right)=\dfrac{1}{4}\)

Finding the probability that the fifth order statistic, \(Y_5\), is less than one reduces to a binomial calculation then. That is:

\(P(Y_5<1)=P(Z=5)+P(Z=6)=\binom{6}{5}\left(\dfrac{1}{4} \right)^5\left(\dfrac{3}{4} \right)^1+\binom{6}{6}\left(\dfrac{1}{4} \right)^6\left(\dfrac{3}{4} \right)^0=0.0046\)

The fact that the calculated probability is so small should make sense in light of the given p.d.f. of \(X\). After all, each individual \(X_i\) has a greater chance of falling above, rather than below, one. For that reason, it would be unusual to observe as many as five or six \(X\)'s less than one.
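The binomial calculation can be reproduced exactly with a few lines of Python. This sketch uses exact fractions so that no rounding enters until the final conversion; the variable names are illustrative.

```python
from fractions import Fraction
from math import comb

n = 6
p = Fraction(1, 4)   # P(X_i <= 1) = 1/4, from the integral above

# P(Y_5 < 1) = P(Z = 5) + P(Z = 6), with Z binomial(n = 6, p = 1/4)
prob = sum(comb(n, z) * p**z * (1 - p) ** (n - z) for z in (5, 6))

print(prob, round(float(prob), 4))
```

The exact value is 19/4096, which rounds to the 0.0046 reported above.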

What is the cumulative distribution function \(G_5(y)\) of the fifth order statistic \(Y_5\)?

Answer

Recalling the definition of a cumulative distribution function, \(G_5(y)\) is defined to be the probability that the fifth order statistic \(Y_5\) is less than some value \(y\). That is:

\(G_5(y)=P(Y_5 < y)\)

Well, in our above work, we found the probability that the fifth order statistic \(Y_5\) is less than a specific value, namely 1. We just need to generalize our work there to allow for any value \(y\). Well, if the event \(\{X_i<y\}\), \(i=1, 2, \cdots, 6\), is considered a "success," and we let \(Z\) = the number of successes in six mutually independent trials, then \(Z\) is a binomial random variable with \(n=6\) and probability of success:

\(P(X_i\le y)=\dfrac{1}{2}\int_{0}^{y}x dx=\dfrac{1}{2}\left[\dfrac{x^2}{2}\right]_{x=0}^{x=y}=\dfrac{1}{2}\left(\dfrac{y^2}{2}-0\right)=\dfrac{y^2}{4}\)

Therefore, the cumulative distribution function \(G_5(y)\) of the fifth order statistic \(Y_5\) is:

\(G_5(y)=P(Y_5 < y)=P(Z=5)+P(Z=6)=\binom{6}{5}\left(\dfrac{y^2}{4}\right)^5\left(1-\dfrac{y^2}{4}\right)+\left(\dfrac{y^2}{4}\right)^6\)

for \(0<y<2\).
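The same binomial reasoning can be written as a function of \(y\). The Python sketch below compares the closed form for \(G_5(y)\) with a direct "at least \(r\) successes out of \(n\)" sum. The function names `F`, `order_stat_cdf`, and `G5` are illustrative, and the formulas are only meant for \(0<y<2\).

```python
from math import comb


def F(y):
    """Distribution function of each X_i: F(y) = y^2 / 4 for 0 < y < 2."""
    return y**2 / 4


def order_stat_cdf(y, r, n):
    """P(Y_r < y): probability that at least r of the n observations fall below y."""
    p = F(y)
    return sum(comb(n, z) * p**z * (1 - p) ** (n - z) for z in range(r, n + 1))


def G5(y):
    """Closed form derived above for r = 5 and n = 6."""
    p = y**2 / 4
    return 6 * p**5 * (1 - p) + p**6


print(G5(1.0), order_stat_cdf(1.0, 5, 6))
```

At \(y = 1\) both functions return the probability 19/4096 found earlier.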

What is the probability density function \(g_5(y)\) of the fifth order statistic \(Y_5\)?

Answer

All we need to do to find the probability density function \(g_{5}(y)\) is to take the derivative of the distribution function \(G_5(y)\) with respect to \(y\). Doing so, we get:

\(g_5(y)=G_{5}^{'}(y)=\binom{6}{5}\left(\dfrac{y^2}{4}\right)^5\left(\dfrac{-2y}{4}\right)+\binom{6}{5}\left(1-\dfrac{y^2}{4}\right)5\left(\dfrac{y^2}{4}\right)^4\left(\dfrac{2y}{4}\right)+6\left(\dfrac{y^2}{4}\right)^5\left(\dfrac{2y}{4}\right)\)

Upon recognizing that:

\(\binom{6}{5}=6\) and \(\binom{6}{5}\times5=\dfrac{6!}{5!1!}\times5=\dfrac{6!}{4!1!}\)

we see that the middle term simplifies somewhat, and the first term is just the negative of the third term, therefore they cancel each other out:

\(g_5(y)=\color{red}\cancel{\color{black}\binom{6}{5}\left(\dfrac{y^2}{4}\right)^5\left(\dfrac{-2y}{4}\right)}\color{black}+\dfrac{6!}{4!1!}\left(1-\dfrac{y^2}{4}\right)\left(\dfrac{y^2}{4}\right)^4\left(\dfrac{2y}{4}\right)+\color{red}\cancel{\color{black}6\left(\dfrac{y^2}{4}\right)^5\left(\dfrac{2y}{4}\right)}\color{black}\)

Therefore, the probability density function of the fifth order statistic \(Y_5\) is:

\(g_5(y)=\dfrac{6!}{4!1!}\left(1-\dfrac{y^2}{4}\right)\left(\dfrac{y^2}{4}\right)^4\left(\dfrac{1}{2}y\right)\)

for \(0<y<2\). When we go on to generalize our work on the next page, it will benefit us to note that because the density function and distribution function of each \(X\) are:

\(f(x)=\dfrac{1}{2}x\) and \(F(x)=\dfrac{x^2}{4}\)

respectively, when \(0<x<2\), we can alternatively write the probability density function of the fifth order statistic \(Y_5\) as:

\(g_5(y)=\dfrac{6!}{4!1!}\left[F(y)\right]^4\left[1-F(y)\right]f(y)\)

Done!
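If you'd like some reassurance that the differentiation went as planned, the following Python sketch compares \(g_5(y)\) with a numerical derivative of \(G_5(y)\) and checks that \(g_5\) integrates to one. The evaluation point \(y=1.3\), the step sizes, and the function names are arbitrary choices of ours.

```python
from math import factorial

def F(y):
    return y**2 / 4          # distribution function of each X on (0, 2)

def f(y):
    return y / 2             # density of each X on (0, 2)

def G5(y):
    p = F(y)
    return 6 * p**5 * (1 - p) + p**6

def g5(y):
    c = factorial(6) // (factorial(4) * factorial(1))  # 6!/(4!1!) = 30
    return c * F(y)**4 * (1 - F(y)) * f(y)

# Central-difference approximation to G5'(y) at an arbitrary interior point.
y, h = 1.3, 1e-5
numeric_slope = (G5(y + h) - G5(y - h)) / (2 * h)

# Midpoint-rule approximation to the integral of g5 over (0, 2).
steps = 20000
width = 2 / steps
area = sum(g5((i + 0.5) * width) for i in range(steps)) * width

print(abs(numeric_slope - g5(y)) < 1e-6)  # True
print(abs(area - 1) < 1e-6)               # True
```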

Whew! Now, let's push on to the more general case of finding the probability density function of the \(r^{th}\) order statistic.

18.2 - The Probability Density Functions

Our work on the previous page with finding the probability density function of a specific order statistic, namely the fifth one of a certain set of six random variables, should help us here when we work on finding the probability density function of any old order statistic, that is, the \(r^{th}\) one.

Let \(Y_1<Y_2<\cdots<Y_n\) be the order statistics of \(n\) independent observations from a continuous distribution with cumulative distribution function \(F(x)\) and probability density function:

\( f(x)=F'(x) \)

where \(0<F(x)<1\) over the support \(a<x<b\). Then, the probability density function of the \(r^{th}\) order statistic is:

\(g_r(y)=\dfrac{n!}{(r-1)!(n-r)!}\left[F(y)\right]^{r-1}\left[1-F(y)\right]^{n-r}f(y)\)

over the support \(a<y<b\).
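Before turning to the proof, it may help to see the formula as a function. The sketch below is one way to code it in Python; the name `order_stat_pdf` and its argument order are hypothetical, and the caller is assumed to supply a value of \(y\) inside the support. As a check, it reproduces the \(r=5\), \(n=6\) density found on the previous page.

```python
from math import factorial

def order_stat_pdf(y, r, n, F, f):
    """Density of the r-th order statistic of n i.i.d. draws with CDF F and density f.

    Assumes y lies inside the support a < y < b.
    """
    coef = factorial(n) // (factorial(r - 1) * factorial(n - r))
    return coef * F(y)**(r - 1) * (1 - F(y))**(n - r) * f(y)

# Running example from the previous page: f(x) = x/2 and F(x) = x^2/4 on (0, 2).
F = lambda x: x**2 / 4
f = lambda x: x / 2

# With n = 6 and r = 5 the coefficient is 6!/(4!1!) = 30, as found earlier.
y = 1.5
direct = 30 * F(y)**4 * (1 - F(y)) * f(y)
print(abs(order_stat_pdf(y, 5, 6, F, f) - direct) < 1e-12)  # True
```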

Proof

We'll again follow the strategy of first finding the cumulative distribution function \(G_r(y)\) of the \(r^{th}\) order statistic, and then differentiating it with respect to \(y\) to get the probability density function \(g_r(y)\). Now, if the event \(\{X_i\le y\},\;i=1, 2, \cdots, n\) is considered a "success," and we let \(Z\) = the number of such successes in \(n\) mutually independent trials, then \(Z\) is a binomial random variable with \(n\) trials and probability of success:

\(F(y)=P(X_i \le y)\)

Now, the \(r^{th}\) order statistic \(Y_r\) is less than or equal to \(y\) if and only if \(r\) or more of the \(n\) observations \(x_1, x_2, \cdots, x_n\) are less than or equal to \(y\), which implies:

\(G_r(y)=P(Y_r \le y)=P(Z=r)+P(Z=r+1)+ ... + P(Z=n)\)

which can be written using summation notation as:

\(G_r(y)=\sum_{k=r}^{n} P(Z=k)\)

Now, we can replace \(P(Z=k)\) with the probability mass function of a binomial random variable with parameters \(n\) and \(p=F(y)\). Doing so, we get:

\(G_r(y)=\sum_{k=r}^{n}\binom{n}{k}\left[F(y)\right]^{k}\left[1-F(y)\right]^{n-k}\)

Rewriting that slightly by pulling the \(n^{th}\) term out of the summation notation, we get:

\(G_r(y)=\sum_{k=r}^{n-1}\binom{n}{k}\left[F(y)\right]^{k}\left[1-F(y)\right]^{n-k}+\left[F(y)\right]^{n}\)

Now, it's just a matter of taking the derivative of \(G_r(y)\) with respect to \(y\). Using the product rule in conjunction with the chain rule on the terms in the summation, and the power rule in conjunction with the chain rule on the last term, we get:

\(g_r(y)=\sum_{k=r}^{n-1}{n\choose k}(k)[F(y)]^{k-1}f(y)[1-F(y)]^{n-k}\\ +\sum_{k=r}^{n-1}{n\choose k}[F(y)]^k(n-k)[1-F(y)]^{n-k-1}(-f(y))\\+n[F(y)]^{n-1}f(y) \) (**)

Now, it's just a matter of recognizing that:

\(\binom{n}{k}k=\dfrac{n!}{\cancel{k}\,(k-1)!\,(n-k)!}\times\cancel{k}=\dfrac{n!}{(k-1)!(n-k)!}\)

and

\(\binom{n}{k}(n-k)=\dfrac{n!}{k!\,\cancel{(n-k)}\,(n-k-1)!}\times\cancel{(n-k)}=\dfrac{n!}{k!(n-k-1)!}\)

Once we do that, we see that the p.d.f. of the \(r^{th}\) order statistic \(Y_r\) is just the first term in the summation in \(g_r(y)\). That is:

\(g_r(y)=\dfrac{n!}{(r-1)!(n-r)!}\left[F(y)\right]^{r-1}\left[1-F(y)\right]^{n-r}f(y)\)

for \(a<y<b\). As was to be proved! Simple enough! Well, okay, that's a little unfair to say it's simple, as it's not all that obvious, is it? For homework, you'll be asked to write out, for the case when \(n=6\) and \(r=3\), the terms in the starred equation (**). In doing so, you should see that for all but the first of the positive terms in the starred equation, there is a corresponding negative term, so that everything but the first term cancels out. After you get a chance to work through that exercise, then perhaps it would be fair to say simple enough!
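The cancellation can also be checked numerically. The Python sketch below adds up the right-hand side of the starred equation term by term, with the binomial coefficient \(\binom{n}{k}\) present in both sums, and compares the total with the first positive term alone. It is only a sanity check, not a substitute for the algebra; the function names, the choice of the example distribution \(F(x)=x^2/4\), and the evaluation point \(y=1.2\) are our assumptions.

```python
from math import comb

def starred_rhs(y, r, n, F, f):
    """Right-hand side of the starred equation, summed term by term."""
    p, d = F(y), f(y)
    positive = sum(comb(n, k) * k * p**(k - 1) * d * (1 - p)**(n - k)
                   for k in range(r, n))
    negative = sum(comb(n, k) * p**k * (n - k) * (1 - p)**(n - k - 1) * (-d)
                   for k in range(r, n))
    last = n * p**(n - 1) * d
    return positive + negative + last

def first_term(y, r, n, F, f):
    """The k = r positive term, which is the claimed density g_r(y)."""
    p, d = F(y), f(y)
    return comb(n, r) * r * p**(r - 1) * d * (1 - p)**(n - r)

F = lambda x: x**2 / 4   # example distribution on (0, 2)
f = lambda x: x / 2

n, y = 6, 1.2
for r in range(1, n + 1):
    gap = abs(starred_rhs(y, r, n, F, f) - first_term(y, r, n, F, f))
    print(r, gap < 1e-12)
```

Each line of output should report `True`, reflecting that the positive term for \(k+1\) offsets the negative term for \(k\), and the final term offsets the negative term for \(k=n-1\).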

Example 18-2 (continued)

Let \(Y_1<Y_2<Y_3<Y_4<Y_5<Y_6\) be the order statistics associated with \(n=6\) independent observations each from the distribution with probability density function:

\(f(x)=\dfrac{1}{2}x \)

for \(0<x<2\). What are the probability density functions of the first, fourth, and sixth order statistics?

Solution

When we worked with this example on the previous page, we showed that the cumulative distribution function of \(X\) is:

\(F(x)=\dfrac{x^2}{4} \)

for \(0<x<2\). Therefore, applying the above theorem with \(n=6\) and \(r=1\), the p.d.f. of \(Y_1\) is:

\(g_1(y)=\dfrac{6!}{0!(6-1)!}\left[\dfrac{y^2}{4}\right]^{1-1}\left[1-\dfrac{y^2}{4}\right]^{6-1}\left(\dfrac{1}{2}y\right)\)

for \(0<y<2\), which can be simplified to:

\(g_1(y)=3y\left(1-\dfrac{y^2}{4}\right)^{5}\)

Applying the theorem with \(n=6\) and \(r=4\), the p.d.f. of \(Y_4\) is:

\(g_4(y)=\dfrac{6!}{3!(6-4)!}\left[\dfrac{y^2}{4}\right]^{4-1}\left[1-\dfrac{y^2}{4}\right]^{6-4}\left(\dfrac{1}{2}y\right)\)

for \(0<y<2\), which can be simplified to:

\(g_4(y)=\dfrac{15}{32}y^7\left(1-\dfrac{y^2}{4}\right)^{2}\)

Applying the theorem with \(n=6\) and \(r=6\), the p.d.f. of \(Y_6\) is:

\(g_6(y)=\dfrac{6!}{5!(6-6)!}\left[\dfrac{y^2}{4}\right]^{6-1}\left[1-\dfrac{y^2}{4}\right]^{6-6}\left(\dfrac{1}{2}y\right)\)

for \(0<y<2\), which can be simplified to:

\(g_6(y)=\dfrac{3}{1024}y^{11}\)

Fortunately, when we graph the three functions on one plot, we see something that makes intuitive sense, namely that as we increase the number of the order statistic, the p.d.f. "moves to the right" on the support interval.
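One way to put a number on "moves to the right" is to compute the mean of each density. The Python sketch below does so with a simple midpoint rule; the helper `integrate` and the number of steps are our own choices, and any reasonable numerical integrator would do. It also confirms that each simplified density integrates to approximately one.

```python
def g1(y):
    return 3 * y * (1 - y**2 / 4)**5

def g4(y):
    return 15 / 32 * y**7 * (1 - y**2 / 4)**2

def g6(y):
    return 3 / 1024 * y**11

def integrate(func, a=0.0, b=2.0, steps=20000):
    """Midpoint-rule approximation to the integral of func over (a, b)."""
    width = (b - a) / steps
    return sum(func(a + (i + 0.5) * width) for i in range(steps)) * width

for name, g in (("g1", g1), ("g4", g4), ("g6", g6)):
    area = integrate(g)                       # should be close to 1
    mean = integrate(lambda y: y * g(y))      # E(Y_r), the center of the density
    print(name, round(area, 4), round(mean, 3))
```

The printed means should increase from \(Y_1\) to \(Y_4\) to \(Y_6\), in line with the intuition above.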

18.3 - Sample Percentiles

It can be shown, as the authors of our textbook illustrate, that the order statistics \(Y_1<Y_2<\cdots<Y_n\) partition the support of \(X\) into \(n+1\) parts and thereby create \(n+1\) areas under \(f(x)\) and above the \(x\)-axis, with each of the \(n+1\) areas equaling, on average, \( \dfrac{1}{n+1} \):

Now, if we recall that the (100p)th percentile \(\pi_p\) is such that the area under \(f(x)\) to the left of \(\pi_p\) is \(p\), then the above plot suggests that we should let \(Y_r\) serve as an estimator of \(\pi_p\), where \( p = \dfrac{r}{n+1}\):

It's for this reason that we use the following formal definition of the sample percentile.

- (100p)th percentile of the sample

- The (100p)th percentile of the sample is defined to be \(Y_r\), the \(r^{th}\) order statistic, where \(r=(n+1)p\). For cases in which \((n+1)p\) is not an integer, we use a weighted average of the two adjacent order statistics \(Y_r\) and \(Y_{r+1}\), where \(r\) is the integer part of \((n+1)p\) and the fractional part is the weight placed on \(Y_{r+1}\).

Let's try this definition out on an example.

Example 18-3

A report from the Texas Transportation Institute on Congestion Reduction Strategies highlighted the extra travel time (due to traffic congestion) for commute travel per traveler per year in hours for 13 different large urban areas in the United States:

| Urban Area | Extra Hours per Traveler per Year |
|---|---|
| Philadelphia | 40 |
| Miami | 48 |
| Phoenix | 49 |
| New York | 50 |
| Boston | 53 |
| Detroit | 54 |
| Chicago | 55 |
| Dallas-Fort Worth | 61 |
| Atlanta | 64 |
| Houston | 65 |
| Washington, DC | 66 |
| San Francisco | 75 |
| Los Angeles | 98 |

Find the first quartile, the 40th percentile, and the median of the sample of \(n=13\) extra travel times.

Answer

Because \(r=(13+1)(0.25)=3.5\), the first quartile, alternatively known as the 25th percentile, is:

\(\tilde{q}_1 =y_3+0.5(y_4-y_3) = 0.5y_3+0.5y_4=0.5(49)+0.5(50)=49.5\)

Because \(r=(13+1)(0.4)=5.6\), the 40th percentile is:

\(\tilde{\pi}_{0.40} = y_5 + 0.6(y_6-y_5) =0.4y_5 + 0.6y_6 =0.4(53)+0.6(54)=53.6\)

Because \(r=(13+1)(0.5)=7\), the median is:

\(\tilde{m} =y_7 = 55\)
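The three calculations above follow the same recipe, so they can be wrapped in a short Python function. This is a minimal sketch of the \(r=(n+1)p\) rule as defined in this lesson; the name `sample_percentile` is ours, and raising an error when \((n+1)p\) falls outside the range of available order statistics is our assumption, since the lesson does not address that case.

```python
import math

def sample_percentile(data, p):
    """(100p)th sample percentile using r = (n + 1)p with linear interpolation."""
    ys = sorted(data)                 # order statistics y_1 <= ... <= y_n
    n = len(ys)
    pos = (n + 1) * p
    r = math.floor(pos)               # integer part of (n + 1)p
    frac = pos - r                    # weight on the next order statistic
    if r < 1 or r > n or (r == n and frac > 0):
        # Assumption: outside this range the rule gives no answer, so we stop.
        raise ValueError("percentile not defined by this rule for this n and p")
    if frac == 0:
        return float(ys[r - 1])       # lists are 0-indexed, order statistics 1-indexed
    return ys[r - 1] + frac * (ys[r] - ys[r - 1])

hours = [40, 48, 49, 50, 53, 54, 55, 61, 64, 65, 66, 75, 98]
for p in (0.25, 0.40, 0.50):
    print(p, round(sample_percentile(hours, p), 1))
```

Run on the 13 travel times, the function should reproduce the first quartile, 40th percentile, and median found by hand. Be aware that many software packages use other interpolation rules by default, so their answers can differ slightly from this definition.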

Lesson 19: Distribution-Free Confidence Intervals for Percentiles

Overview

In the previous lesson, we learned how to calculate a sample percentile as a point estimate of a population (or distribution) percentile. Just as it is a good idea to calculate confidence intervals for other population parameters, such as means and variances, it would be a good idea to learn how to calculate a confidence interval for percentiles of a population. That's what we'll work on doing in this lesson. As the title of the lesson suggests, we won't make any assumptions about the distribution of the data, that is, other than it being continuous.

Objectives

- To learn how to calculate a confidence interval for a median using order statistics.

- To learn how to calculate a confidence interval for any population percentile using order statistics.

19.1 - For A Median

The Method

As is generally the case, let's motivate the method for calculating a confidence interval for a population median \(m\) by way of a concrete example. Suppose \(Y_1<Y_2<Y_3<Y_4<Y_5\) are the order statistics of a random sample of size \(n=5\) from a continuous distribution. Our work from the previous lesson tells us that \(Y_3\) serves as a good point estimator of the median \(m\). Let's see what we can come up with for a confidence interval given we have these order statistics at our disposal. Well, suppose we suggested that the interval bounded by the first and fifth order statistics, that is, \((Y_1, Y_5)\), would serve as a good interval. How confident can we be that the interval \((Y_1, Y_5)\) would contain the unknown population median \(m\)? To answer that question, we simply need to calculate the following probability:

\(P(Y_1<m<Y_5)\)

Calculating the probability reduces to a simple binomial calculation once we figure out all the ways in which the population median \(m\) is sandwiched between \(Y_1\) and \(Y_5\). Well, the population median \(m\) is sandwiched between \(Y_1\) and \(Y_5\), if the first order statistic is the only order statistic less than the median \(m\):

The population median \(m\) is sandwiched between \(Y_1\) and \(Y_5\), if the first two order statistics are the only order statistics less than the median \(m\):

The population median \(m\) is sandwiched between \(Y_1\) and \(Y_5\), if the first three order statistics are less than the median \(m\), and the fourth and fifth order statistics are greater than \(m\):

And, the population median \(m\) is sandwiched between \(Y_1\) and \(Y_5\), if the fifth order statistic is the only order statistic greater than the median \(m\):

This means that in order to calculate the probability \(P(Y_1<m<Y_5)\), we need to calculate the probability of each of the above events. Now, if we let \(W\) denote the number of \(X_i<m\), then \(W\) is a binomial random variable with \(n=5\) mutually independent trials and probability of success \(p=P(X_i<m)=0.5\). And, reviewing the events as depicted above, the desired probability is calculated as:

\(P(Y_1<m<Y_5)=P(W=1)+P(W=2)+P(W=3)+P(W=4)\)
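For readers who prefer software to tables, the sum can be evaluated with a few lines of Python. This is only a sketch; the helper name `binom_pmf` is ours. It also shows an equivalent shortcut, namely subtracting from one the probabilities of the two ways the interval can miss the median (\(W=0\) or \(W=5\)).

```python
from math import comb

n, p = 5, 0.5   # five observations, each below the median with probability 1/2

def binom_pmf(k):
    return comb(n, k) * p**k * (1 - p)**(n - k)

# The median lies between Y_1 and Y_5 when 1, 2, 3, or 4 observations fall below it.
coverage = sum(binom_pmf(k) for k in range(1, 5))

# Equivalent shortcut: exclude the two ways the interval can miss (W = 0 or W = 5).
shortcut = 1 - binom_pmf(0) - binom_pmf(5)

print(coverage, shortcut)
```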

The binomial p.m.f. (or, alternatively, the binomial table) makes the calculation straightforward: