9 Poisson Regression

Overview

Poisson regression is also a special case of the generalized linear model, where the random component is specified by the Poisson distribution. This usually works well when the response variable is a count of some occurrence, such as the number of calls to a customer service number in an hour or the number of cars that pass through an intersection in a day. Unlike the binomial distribution, which counts the number of successes in a given number of trials, a Poisson count is not bounded above.

When all explanatory variables are discrete, the Poisson regression model is equivalent to the log-linear model, which we will see in the next lesson. For the present discussion, however, we’ll focus on model-building and interpretation. We’ll see that many of these techniques are very similar to those in the logistic regression model.

Objectives

Upon completion of this lesson, you should be able to:

- Explain the assumptions of the Poisson regression model and use software to fit it to sample data,

- Distinguish between a Poisson count and a rate,

- Interpret an offset and how it differs from a predictor in the Poisson rate regression model, and

- Recognize overdispersion when modeling count data and determine appropriate measures to account for it.

Lesson 9 Code Files

Data Files: (crab.txt), (cancer.txt)

R Files

SAS Files

Useful Links

- Poisson function in SAS : http://support.sas.com/documentation/cdl/en/lrdict/64316/HTML/default/viewer.htm#a000245925.htm

- Poisson regression via GENMOD: https://support.sas.com/documentation/cdl/en/statug/63033/HTML/default/viewer.htm#statug_genmod_sect006.htm

- Fitting GLMs in R : http://www.statmethods.net/advstats/glm.html

9.1 Model Properties

Interpretations

In Poisson regression, the response variable \(Y\) is an occurrence count recorded for a particular measurement window. Usually, this window is a length of time, but it can also be a distance, area, etc. For example, \(Y\) could count the number of flaws in a manufactured tabletop of a certain area. If the observations recorded correspond to different measurement windows, a scale adjustment has to be made to put them on equal terms, and we model the rate or count per measurement unit \(t\).

For the random component, we assume that the response \(Y\) has a Poisson distribution. That is, \(Y_i\sim Poisson(\mu_i)\), for \(i=1, \ldots, N\) where the expected count of \(Y_i\) is \(E(Y_i)=\mu_i\). The link function is usually the (natural) log, but sometimes the identity function may be used. The systematic component consists of a linear combination of explanatory variables \((\alpha+\beta_1x_1+\cdots+ \beta_kx_k\)); this is identical to that for logistic regression. Thus, in the case of a single explanatory, the model is written

\[ \log(\mu)=\alpha+\beta x \]

This is equivalent to

\[ \mu=\exp(\alpha+\beta x)=\exp(\alpha)\exp(\beta x). \]

Interpretations of these parameters are similar to those for logistic regression. \(\exp(\alpha)\) is the effect on the mean of \(Y\) when \(x = 0\), and \(\exp(\beta)\) is the multiplicative effect on the mean of \(Y\) for each 1-unit increase in \(x\).

- If \(\beta = 0\), then \(\exp(\beta) = 1\), and the expected count, \(\mu = E(Y) = \exp(\beta)\), and \(Y\) and \(x\) are not related.

- If \(\beta > 0\), then \(\exp(\beta) > 1\), and the expected count \(\mu = E(Y)\) is \(\exp(\beta)\) times larger than when \(x = 0\).

- If \(\beta< 0\), then \(\exp(\beta) < 1\), and the expected count \(\mu = E(Y)\) is \(\exp(\beta)\) times smaller than when \(x = 0\).

GLM Model for Rates

Compared with the model for count data above, we can alternatively model the expected rate of observations per unit of length, time, etc. to adjust for data collected over differently-sized measurement windows. For example, if \(Y\) is the count of flaws over a length of \(t\) units, then the expected value of the rate of flaws per unit is \(E(Y/t)=\mu/t\). For a single explanatory variable, the model would be written as

\[ \log(\mu/t)=\log\mu-\log t=\alpha+\beta x \]

The term \(\log t\) is referred to as an offset. It is an adjustment term and a group of observations may have the same offset, or each individual may have a different value of \(t\). The term \(\log(t)\) is an observation, and it will change the value of the estimated counts:

\[ \mu=\exp(\alpha+\beta x+\log(t))=(t) \exp(\alpha)\exp(\beta_x) \]

This means that the mean count is proportional to \(t\).

Parameter Estimation and Inference

Similar to the case of logistic regression, the maximum likelihood estimators (MLEs) for \(\beta_0, \beta_1 \dots\), etc.) are obtained by finding the values that maximize the log-likelihood. In general, there are no closed-form solutions, so the ML estimates are obtained by using iterative algorithms such as Newton-Raphson (NR), Iteratively re-weighted least squares (IRWLS), etc.

The usual tools from the basic statistical inference of GLMs are valid:

- Confidence Intervals and Hypothesis tests for parameters

- Wald statistics and asymptotic standard error (ASE)

- Likelihood ratio tests

- Distribution of probability estimates

In the next, we will take a look at an example using the Poisson regression model for count data with SAS and R. In SAS we can use PROC GENMOD which is a general procedure for fitting any GLM. Many parts of the input and output will be similar to what we saw with PROC LOGISTIC. In R we can still use glm(). The response counts are recorded for the same measurement windows (horseshoe crabs), so no scale adjustment for modeling rates is necessary.

9.2 Modeling Count Data

Example 9.1 (Horseshoe Crabs and Satellites)  This problem refers to data from a study of nesting horseshoe crabs (J. Brockmann, Ethology 1996). Each female horseshoe crab in the study had a male crab attached to her in her nest. The study investigated factors that affect whether the female crab had any other males, called satellites, residing near her. Explanatory variables that are thought to affect this included the female crab’s color, spine condition, and carapace width, and weight. The response outcome for each female crab is the number of satellites. There are 173 females in this study.

This problem refers to data from a study of nesting horseshoe crabs (J. Brockmann, Ethology 1996). Each female horseshoe crab in the study had a male crab attached to her in her nest. The study investigated factors that affect whether the female crab had any other males, called satellites, residing near her. Explanatory variables that are thought to affect this included the female crab’s color, spine condition, and carapace width, and weight. The response outcome for each female crab is the number of satellites. There are 173 females in this study.

Let’s first see if the carapace width can explain the number of satellites attached. We will start by fitting a Poisson regression model with carapace width as the only predictor.

proc genmod data=crab;

model Sa=w / dist=poi link=log obstats;

run;Model Sa=w specifies the response (Sa) and predictor width (W). Also, note that specifications of Poisson distribution are dist=pois and link=log. The obstats option as before will give us a table of observed and predicted values and residuals. We can use any additional options in GENMOD, e.g., TYPE3, etc.

Here is the output that we should get from running just this part:

Model Information

What do we learn from the “Model Information” section? How is this different from when we fitted logistic regression models? This section gives information on the GLM that’s fitted. More specifically, we see that the response is distributed via Poisson, the link function is log, and the dependent variable is Sa. The number of observations in the data set used is 173.

Does the model fit well? What does the Value/DF tell us? Given the value of deviance statistic of 567.879 with 171 df, the p-value is zero and the Value/DF is much bigger than 1, so the model does not fit well. The lack of fit may be due to missing data, predictors, or overdispersion. It should also be noted that the deviance and Pearson tests for lack of fit rely on reasonably large expected Poisson counts, which are mostly below five, in this case, so the test results are not entirely reliable. Still, we’d like to see a better-fitting model if possible.

Is width a significant predictor? From the “Analysis of Parameter Estimates” table, with Chi-Square stats of 67.51 (1df), the p-value is 0.0001 and this is significant evidence to reject the null hypothesis that \(\beta_W=0\). We can conclude that the carapace width is a significant predictor of the number of satellites.

The estimated model is: \(\log (\mu_i) = -3.3048 + 0.164W_i\)

The standard error of the estimated slope is 0.020, which is small, and the slope is statistically significant.

model = glm(Sa ~ W, family=poisson(link=log), data=hcrabs)

summary(model)

model$fitted.values

rstandard(model)Just as with logistic regression, the glm function specifies the response (Sa) and predictor width (W) separated by the “~” character. Also, note the specification of the Poisson distribution and link function. The fitted (predicted) values are the estimated Poisson counts, and rstandard reports the standardized deviance residuals.

Here is the output that we should get from the summary command:

Call:

glm(formula = Sa ~ W, family = poisson(link = log), data = hcrabs)

Coefficients:

Estimate Std. Error z value Pr(>|z|)

(Intercept) -3.30476 0.54224 -6.095 1.1e-09 ***

W 0.16405 0.01997 8.216 < 2e-16 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Dispersion parameter for poisson family taken to be 1)

Null deviance: 632.79 on 172 degrees of freedom

Residual deviance: 567.88 on 171 degrees of freedom

AIC: 927.18

Number of Fisher Scoring iterations: 6Does the model fit well? Given the value of deviance statistic of 567.879 with 171 df, the p-value is zero and the Value/DF is much bigger than 1, so the model does not fit well. The lack of fit may be due to missing data, predictors, or overdispersion. It should also be noted that the deviance and Pearson tests for lack of fit rely on reasonably large expected Poisson counts, which are mostly below five, in this case, so the test results are not entirely reliable. Still, we’d like to see a better-fitting model if possible.

Is width a significant predictor? From the “Coefficients” table, with Chi-Square stat of \(8.216^2=67.50\) (1df), the p-value is 0.0001, and this is significant evidence to reject the null hypothesis that \(\beta_W=0\). We can conclude that the carapace width is a significant predictor of the number of satellites.

The estimated model is: \(\log (\mu_i) = -3.3048 + 0.164W_i\)

The standard error of the estimated slope is 0.020, which is small, and the slope is statistically significant.

Interpretation

Since the estimate of \(\beta > 0\), the wider the carapace is, the greater the number of male satellites (on average). Specifically, for each 1-cm increase in carapace width, the expected number of satellites is multiplied by \(\exp(0.1640) = 1.18\).

Looking at the standardized residuals, we may suspect some outliers (e.g., the 15th observation has a standardized deviance residual of almost 5!), but these seem less obvious in the scatterplot, given the overall variability. Also, with a sample size of 173, such extreme values are more likely to occur just by chance. Still, this is something we can address by adding additional predictors or with an adjustment for overdispersion. From the observations statistics, we can also see the predicted values (estimated mean counts) and the values of the linear predictor, which are the log of the expected counts. For example, for the first observation, the predicted value is \(\hat{\mu}_1=3.810\), and the linear predictor is \(\log(3.810)=1.3377\).

From the above output, we see that width is a significant predictor, but the model does not fit well. We can further assess the lack of fit by plotting residuals or influential points, but let us assume for now that we do not have any other covariates and try to adjust for overdispersion to see if we can improve the model fit.

Strategies to Improve the Fit

Adjusting for Overdispersion

In the above model, we detect a potential problem with overdispersion since the scale factor, e.g., Value/DF, is greater than 1.

What does overdispersion mean for Poisson Regression? What does it tell us about the relationship between the mean and the variance of the Poisson distribution for the number of satellites? Recall that one of the reasons for overdispersion is heterogeneity, where subjects within each predictor combination differ greatly (i.e., even crabs with similar width have a different number of satellites). If that’s the case, which assumption of the Poisson model is violated?

As we saw in logistic regression, if we want to test and adjust for overdispersion we can add a scale parameter by changing scale=none to scale=pearson; see the third part of the SAS program crab.sas labeled ’Adjust for overdispersion by scale=pearson. (As stated earlier we can also fit a negative binomial regression instead)

proc genmod data=crab;

model Sa=w / dist=poi link=log scale=pearson;

output out=data2 p=pred;

run;

proc print;

run;Below is the output when using scale=pearson. How does this compare to the output above from the earlier stage of the code? Do we have a better fit now? Consider the “Scaled Deviance” and “Scaled Pearson chi-square” statistics. The overall model seems to fit better when we account for possible overdispersion.

As we saw in logistic regression, if we want to test and adjust for overdispersion we can add a scale parameter with the family=quasipoisson option. The estimated scale parameter will be labeled as “Overdispersion parameter” in the output. (As stated earlier we can also fit a negative binomial regression instead)

model.disp = glm(Sa ~ W, family=quasipoisson(link=log), data=hcrabs)

summary.glm(model.disp)

summary.glm(model.disp)$dispersionBelow is the output when using the quasi-Poisson model. How does this compare to the output above from the earlier stage of the code? Do we have a better fit now?

Call:

glm(formula = Sa ~ W, family = quasipoisson(link = log), data = hcrabs)

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) -3.30476 0.96729 -3.417 0.000793 ***

W 0.16405 0.03562 4.606 7.99e-06 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Dispersion parameter for quasipoisson family taken to be 3.182205)

Null deviance: 632.79 on 172 degrees of freedom

Residual deviance: 567.88 on 171 degrees of freedom

AIC: NA

Number of Fisher Scoring iterations: 6With this model, the random component does not technically have a Poisson distribution any more (hence the term “quasi” Poisson) because that would require that the response has the same mean and variance. From the estimate given (Pearson \(X^2/171 = 3.1822\)), the variance of the number of satellites is roughly three times the size of the mean.

The new standard errors (in comparison to the model without the overdispersion parameter), are larger, (e.g., \(0.0356 = 1.7839( 0.02)\) which comes from the scaled SE (\(\sqrt{3.1822}=1.7839\)); the adjusted standard errors are multiplied by the square root of the estimated scale parameter. Thus, the Wald statistics will be smaller and less significant.

What could be another reason for poor fit besides overdispersion? Can we improve the fit by adding other variables?

Include color as a Qualitative Predictor

Since we did not use the $ sign in the input statement to specify that the variable “C” was categorical, we can now do it by using class c as seen below. The following change is reflected in the next section of the crab.sas program labeled ‘Add one more variable as a predictor, color’.

proc genmod data=crab;

class c;

model Sa=w c / dist=poi link=log scale=pearson type3;

run;Note the “Class level information” on color indicates that this variable has four levels, and thus are we are introducing three indicator variables into the model. From the “Analysis of Parameter Estimates” output below we see that the reference level is level 5. We continue to adjust for overdispersion with scale=pearson , although we could relax this if adding additional predictor(s) produced an insignificant lack of fit.

The estimated model is: \(\log{\hat{\mu_i}}= -3.0974 + 0.1493W_i + 0.4474C_{2i} + 0.2477C_{3i} + 0.0110C_{4i}\), using indicator variables for the first three colors.

There does not seem to be a difference in the number of satellites between any color class and the reference level 5 according to the chi-squared statistics for each row in the table above. Furthermore, by the Type 3 Analysis output below we see that color overall is not statistically significant after we consider the width.

To add the horseshoe crab color as a categorical predictor (in addition to width), we can use the following code. The change of baseline to the 5th color is arbitrary.

hcrabs$C = factor(hcrabs$C, levels=c('5','2','3','4'))

model = glm(Sa ~ W+C, family=quasipoisson(link=log), data=hcrabs)

summary(model)

anova(model, test='Chisq')Note in the output that there are three separate parameters estimated for color, corresponding to the three indicators included for colors 2, 3, and 4 (5 as the baseline). We continue to adjust for overdispersion withfamily=quasipoisson, although we could relax this if adding additional predictor(s) produced an insignificant lack of fit.

summary(model)

Call:

glm(formula = Sa ~ W + C, family = quasipoisson(link = log),

data = hcrabs)

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) -3.09740 1.00260 -3.089 0.00235 **

W 0.14934 0.03748 3.985 0.00010 ***

C2 0.44736 0.37604 1.190 0.23586

C3 0.24767 0.29339 0.844 0.39978

C4 0.01100 0.32442 0.034 0.97300

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Dispersion parameter for quasipoisson family taken to be 3.233628)

Null deviance: 632.79 on 172 degrees of freedom

Residual deviance: 559.34 on 168 degrees of freedom

AIC: NA

Number of Fisher Scoring iterations: 6The estimated model is: \(\log{\hat{\mu_i}}= -3.0974 + 0.1493W_i + 0.4474C_{2i}+ 0.2477C_{3i}+ 0.0110C_{4i}\), using indicator variables for the first three colors.

There does not seem to be a difference in the number of satellites between any color class and the reference level 5 according to the chi-squared statistics for each row in the table above. Furthermore, by the ANOVA output below we see that color overall is not statistically significant after we consider the width.

anova(model, test='Chisq')Analysis of Deviance Table

Model: quasipoisson, link: log

Response: Sa

Terms added sequentially (first to last)

Df Deviance Resid. Df Resid. Dev Pr(>Chi)

NULL 172 632.79

W 1 64.913 171 567.88 7.449e-06 ***

C 3 8.534 168 559.34 0.4507

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1Is this model preferred to the one without color?

Including Color as a Numerical Predictor

As it turns out, the color variable was actually recorded as ordinal with values 2 through 5 representing increasing darkness and may be quantified as such. So, we next consider treating color as a quantitative variable, which has the advantage of allowing a single slope parameter (instead of multiple indicator slopes) to represent the relationship with the number of satellites.

We will run another part of the crab.sas program that does not include color as a categorical by removing the class statement for C:

proc genmod data=crab;

model Sa=w c / dist=poi link=log scale=pearson;

run;Compare these partial parts of the output with the output above where we used color as a categorical predictor. We are doing this to keep in mind that different coding of the same variable will give us different fits and estimates.

To add color as a quantitative predictor, we first define it as a numeric variable. Although the original values were 2, 3, 4, and 5, R will by default use 1 through 4 when converting from factor levels to numeric values. So, we add 1 after the conversion.

data(hcrabs)

colnames(hcrabs) = c("C","S","W","Sa","Wt")

hcrabs$C = as.numeric(hcrabs$C)+1

model = glm(Sa ~ W+C, family=quasipoisson(link=log), data=hcrabs)

summary(model)

anova(model, test='Chisq')Compare these partial parts of the output with the output above where we used color as a categorical predictor. We are doing this to keep in mind that different coding of the same variable will give us different fits and estimates.

summary(model)

Call:

glm(formula = Sa ~ W + C, family = quasipoisson(link = log),

data = hcrabs)

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) -2.35058 1.15156 -2.041 0.0428 *

W 0.14957 0.03716 4.025 8.55e-05 ***

C -0.16940 0.11112 -1.524 0.1292

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Dispersion parameter for quasipoisson family taken to be 3.228764)

Null deviance: 632.79 on 172 degrees of freedom

Residual deviance: 560.20 on 170 degrees of freedom

AIC: NA

Number of Fisher Scoring iterations: 6anova(model, test='Chisq')Analysis of Deviance Table

Model: quasipoisson, link: log

Response: Sa

Terms added sequentially (first to last)

Df Deviance Resid. Df Resid. Dev Pr(>Chi)

NULL 172 632.79

W 1 64.913 171 567.88 7.332e-06 ***

C 1 7.677 170 560.20 0.1231

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1The estimated model is now

\[ \log{\hat{\mu_i}}= -2.3506 + 0.1496W_i - 0.1694C_i \]

And the interpretation of the single slope parameter for color is as follows: for each 1-unit increase in the color (darkness level), the expected number of satellites is multiplied by \(\exp(-.1694)=.8442\).

In terms of the fit, adding the numerical color predictor doesn’t seem to help; the overdispersion seems to be due to heterogeneity. Is there something else we can do with this data? We can either… 1. consider additional variables (if available), 2. collapse over levels of explanatory variables, or 3. transform the variables.

Let’s consider grouping the data by the widths and then fitting a Poisson regression model that models the rate of satellites per crab.

Collapsing over Explanatory Variable Width

In this approach, we create 8 width groups and use the average width for the crabs in that group as the single representative value. While width is still treated as quantitative, this approach simplifies the model and allows all crabs with widths in a given group to be combined. The disadvantage is that differences in widths within a group are ignored, which provides less information overall. However, another advantage of using the grouped widths is that the saturated model would have 8 parameters, and the goodness of fit tests, based on \(8-2\) degrees of freedom, are more reliable.

To account for the fact that width groups will include different numbers of crabs, we will model the mean rate \(\mu/t\) of satellites per crab, where \(t\) is the number of crabs for a particular width group. This variable is treated much like another predictor in the data set. The difference is that this value is part of the response being modeled and not assigned a slope parameter of its own. We will see more details on the Poisson rate regression model in the next section.

The data, after being grouped into 8 intervals, is shown in the table below. Two columns to note in particular are “Cases”, the number of crabs with carapace widths in that interval, and “Width”, which now represents the average width for the crabs in that interval.

Interval Cases Width AvgSa SDSa VarSa

=23.25 14 22.693 1.0000 1.6641 2.7692

23.25-24.25 14 23.843 1.4286 2.9798 8.8792

24.25-25.25 28 24.775 2.3929 2.5581 6.5439

25.25-26.25 39 25.838 2.6923 3.3729 11.3765

26.26-27.25 22 26.791 2.8636 2.6240 6.8854

27.25-28.25 24 27.738 3.8750 2.9681 8.8096

28.25-29.25 18 28.667 3.9444 4.1084 16.8790

>29.25 14 30.407 5.1429 2.8785 8.2858In this approach, each observation within a group is treated as if it has the same width.

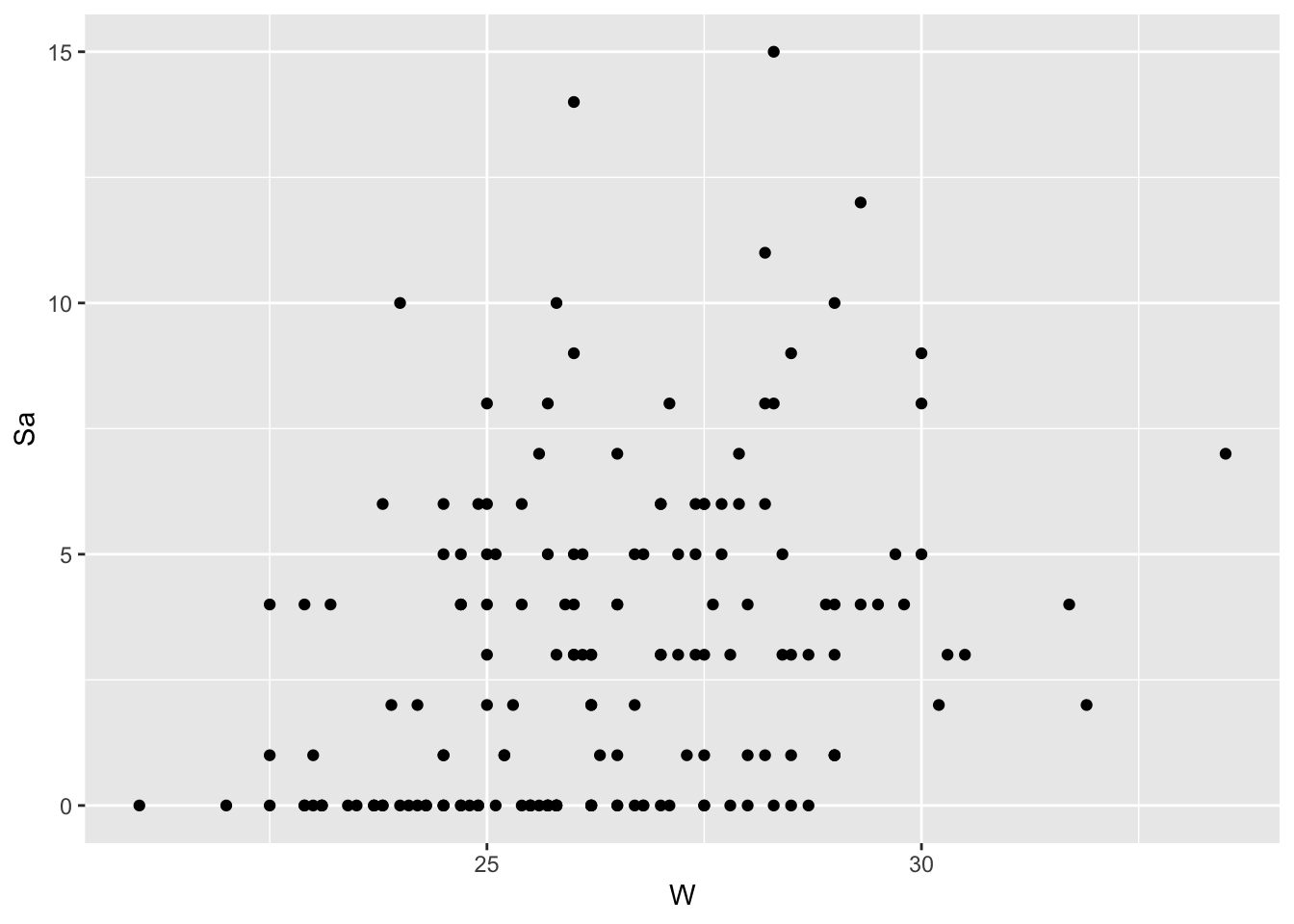

Plot of Number of Satellites by Width of Crab—All Data

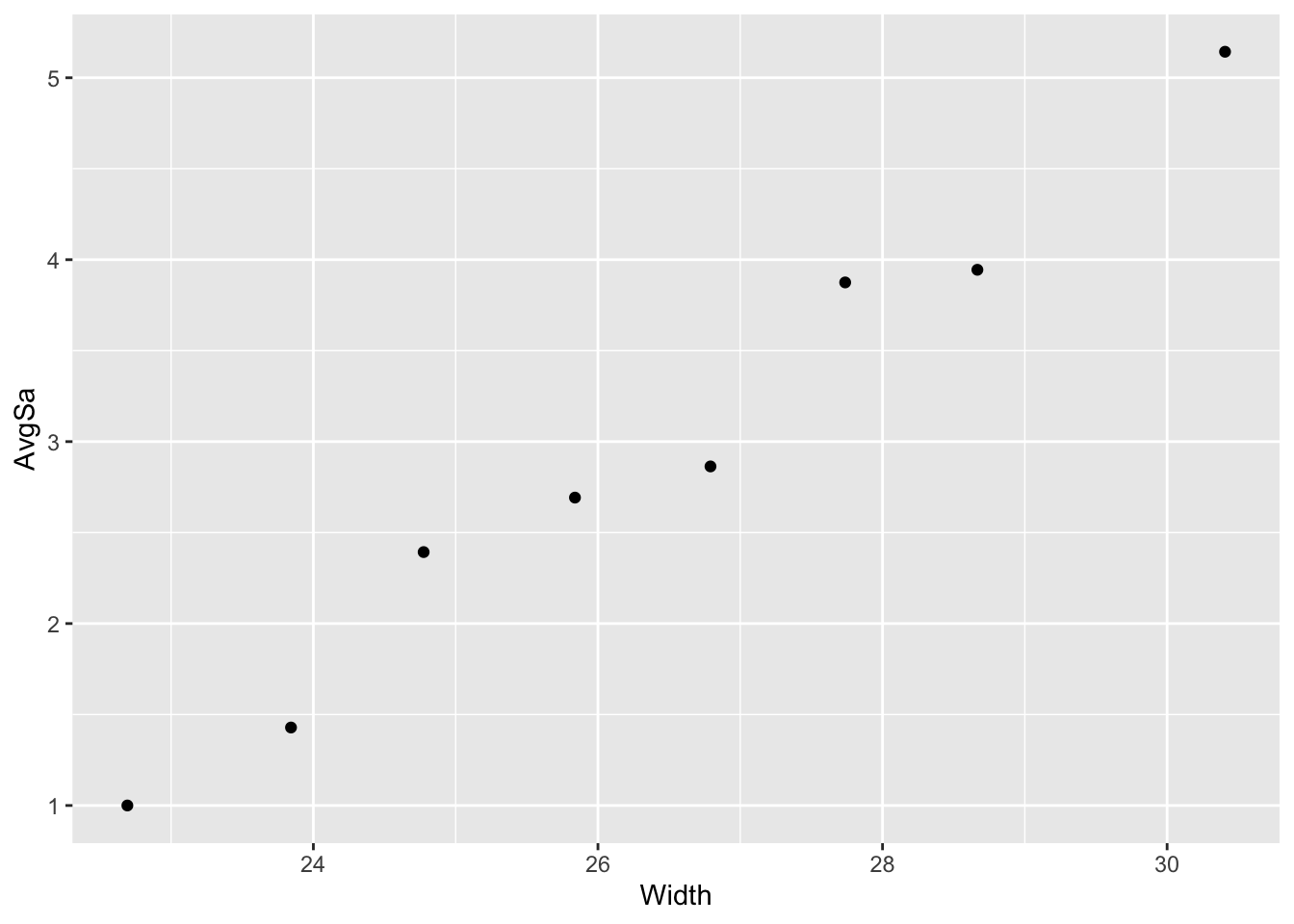

Plot of Average Number of Satellites by Width— Widths Grouped

In SAS, the Cases variable is input with the OFFSET option in the Model statement. We also create a variable LCASES=log(CASES) which takes the log of the number of cases within each grouping. This is our adjustment value \(t\) in the model that represents (abstractly) the measurement window, which in this case is the group of crabs with a similar width. So, \(t\) is effectively the number of crabs in the group, and we are fitting a model for the rate of satellites per crab, given carapace width.

data crabgrp;

input width cases SaTotal AvgSa;

lcases=log(cases);

cards;

22.69 14 14 1.00

23.84 14 20 1.428571

24.77 28 67 2.392857

25.84 39 105 2.692308

26.79 22 63 2.863636

27.74 24 93 3.875

28.67 18 71 3.944444

30.41 14 72 5.142857

;

proc genmod data=crabgrp order=data;

model SaTotal = width / dist=poisson link=log

offset=lcases obstats residuals;

output out=data3 p=pred;

run;Here is the output. Note “Offset variable” under the “Model Information”.

Based on the Pearson and deviance goodness of fit statistics, this model clearly fits better than the earlier ones before grouping width. For example, the Value/DF for the deviance statistic now is 1.0861.

The estimated model is: \(\log (\hat{\mu}_i/t) = -3.535 + 0.1727\mbox{width}_i\)

For each 1-cm increase in carapace width, the mean number of satellites per crab is multiplied by \(\exp(0.1727)=1.1885.\)

See the full table…

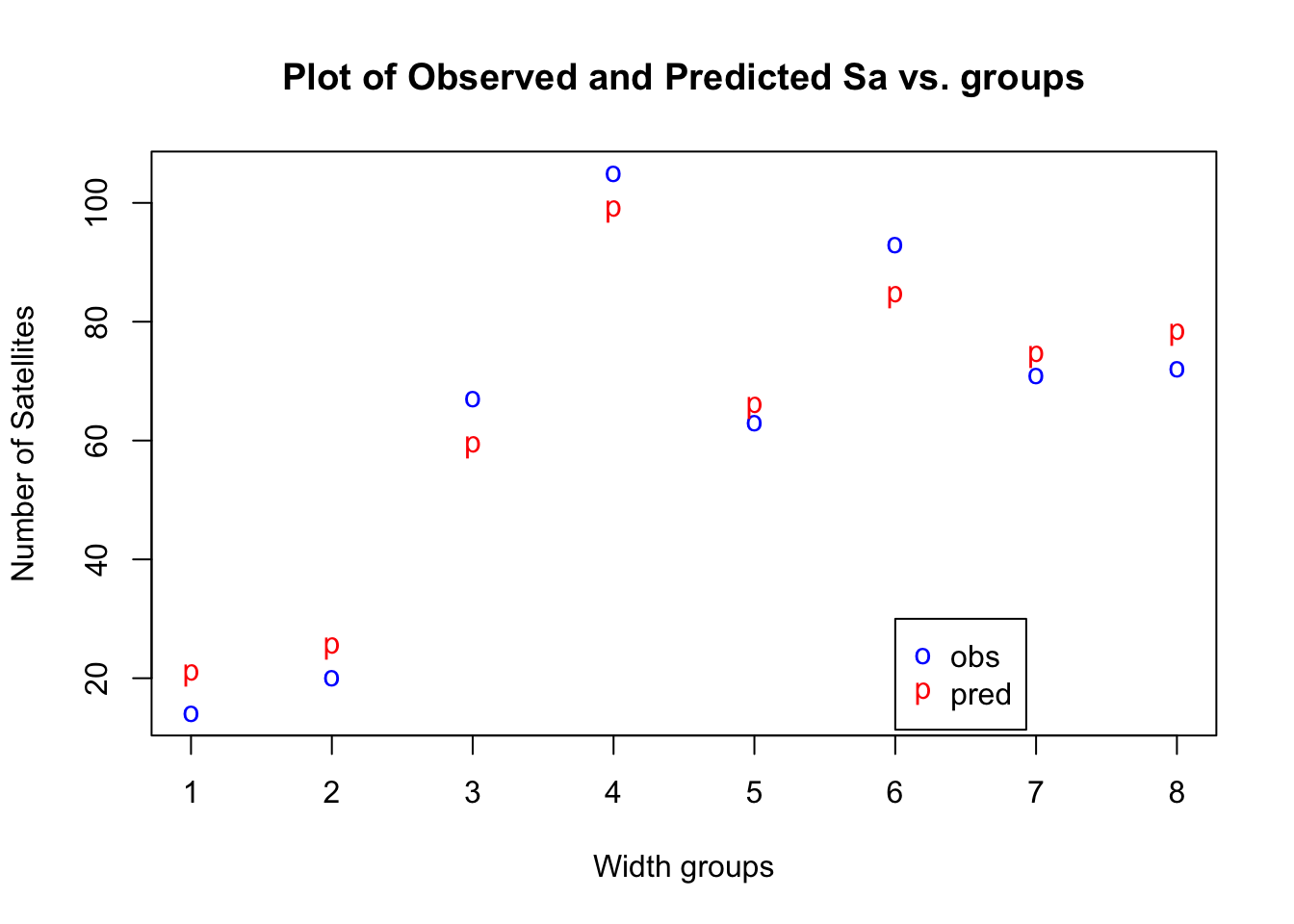

The residuals analysis indicates a good fit as well. From the table above we also see that the predicted values correspond a bit better to the observed counts in the SaTotal cells. Let’s compare the observed and fitted values in the plot below:

Scatter Plot of Sa Total, Observed vs Predicted

Plot of Number of Satellites by Width of Crab—All Data

Plot of Average Number of Satellites by Width— Widths Grouped

In R, the lcases variable is specified with the OFFSET option, which takes the log of the number of cases within each grouping. This is our adjustment value \(t\) in the model that represents (abstractly) the measurement window, which in this case is the group of crabs with similar width. So, \(t\) is effectively the number of crabs in the group, and we are fitting a model for the rate of satellites per crab, given carapace width.

# creating the 8 width groups

width.long = cut(hcrabs$W, breaks=c(0,seq(23.25, 29.25),Inf))

# summarizing per group

Cases = aggregate(hcrabs$W, by=list(W=width.long),length)$x

lcases = log(Cases)

Width = aggregate(hcrabs$W, by=list(W=width.long),mean)$x

AvgSa = aggregate(hcrabs$Sa, by=list(W=width.long),mean)$x

SdSa = aggregate(hcrabs$Sa, by=list(W=width.long),sd)$x

VarSa = aggregate(hcrabs$Sa, by=list(W=width.long),var)$x

SaTotal = aggregate(hcrabs$Sa, by=list(W=width.long),sum)$x

cbind(table(width.long), Width, AvgSa, SdSa, VarSa)

hcrabs.grp = data.frame(Width=Width, lcases=lcases, SaTotal=SaTotal)

model.grp = glm(SaTotal ~ Width, offset=lcases, family=poisson, data=hcrabs.grp)

summary(model.grp)Here is the output. Note the offset = lcases under the model expression.

summary(model.grp)

Call:

glm(formula = SaTotal ~ Width, family = poisson, data = hcrabs.grp,

offset = lcases)

Coefficients:

Estimate Std. Error z value Pr(>|z|)

(Intercept) -3.54018 0.57658 -6.140 8.26e-10 ***

Width 0.17290 0.02125 8.135 4.11e-16 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Dispersion parameter for poisson family taken to be 1)

Null deviance: 72.3772 on 7 degrees of freedom

Residual deviance: 6.5164 on 6 degrees of freedom

AIC: 56.961

Number of Fisher Scoring iterations: 4Based on the Pearson and deviance goodness of fit statistics, this model clearly fits better than the earlier ones before grouping width. For example, the Value/DF for the deviance statistic now is 1.0861. The estimated model is:

\[ \log (\hat{\mu}_i/t)= -3.54 + 0.1729\mbox{width}_i \]

For each 1-cm increase in carapace width, the mean number of satellites per crab is multiplied by \(\exp(0.1729)=1.1887\).

rstandard(model.grp) 1 2 3 4 5 6 7

-1.7312682 -1.1474695 1.1980218 0.7447990 -0.3414285 1.0400246 -0.4260710

8

-1.0213629 predict.glm(model.grp, type="response", newdata=hcrabs.grp) 1 2 3 4 5 6 7 8

20.54097 25.05952 58.88382 98.57261 65.55897 84.23620 74.18733 77.96057 The residuals analysis indicates a good fit as well, and the predicted values correspond a bit better to the observed counts in the SaTotal cells. Let’s compare the observed and fitted values in the plot below:

9.3 Modeling Rate Data

Example 9.2 (Lung Cancer Incidence) The table below summarizes the lung cancer incident counts (cases) per age group for four Danish cities from 1968 to 19711. Since it’s reasonable to assume that the expected count of lung cancer incidents is proportional to the population size, we would prefer to model the rate of incidents per capita.

city age pop cases

Fredericia 40-54 3059 11

Horsens 40-54 2879 13

Kolding 40-54 3142 4

Vejle 40-54 2520 5

Fredericia 55-59 800 11

Horsens 55-59 1083 6

Kolding 55-59 1050 8

Vejle 55-59 878 7

Fredericia 60-64 710 11

Horsens 60-64 923 15

Kolding 60-64 895 7

Vejle 60-64 839 10

Fredericia 65-69 581 10

Horsens 65-69 834 10

Kolding 65-69 702 11

Vejle 65-69 631 14

Fredericia 70-74 509 11

Horsens 70-74 634 12

Kolding 70-74 535 9

Vejle 70-74 539 8

Fredericia 75+ 605 10

Horsens 75+ 782 2

Kolding 75+ 659 12

Vejle 75+ 619 7With \(Y_i\) the count of lung cancer incidents and \(t_i\) the population size for the \(i^{th}\) row in the data, the Poisson rate regression model would be

\[ \log \dfrac{\mu_i}{t_i}=\log \mu_i-\log t_i=\beta_0+\beta_1x_{1i}+\beta_2x_{2i}+\cdots \]

where \(Y_i\) has a Poisson distribution with mean \(E(Y_i)=\mu_i\), and \(x_1\), \(x_2\), etc. represent the (systematic) predictor set. Notice that by modeling the rate with population as the measurement size, population is not treated as another predictor, even though it is recorded in the data along with the other predictors. The main distinction the model is that no \(\beta\) coefficient is estimated for population size (it is assumed to be 1 by definition). Note also that population size is on the log scale to match the incident count.

By using an OFFSET option in the MODEL statement in GENMOD in SAS we specify an offset variable. The offset variable serves to normalize the fitted cell means per some space, grouping, or time interval to model the rates. In this case, population is the offset variable.

data cancer;

input city $ age $ pop cases;

lpop = log(pop);

cards;

Fredericia 40-54 3059 11

Horsens 40-54 2879 13

Kolding 40-54 3142 4

Vejle 40-54 2520 5

Fredericia 55-59 800 11

Horsens 55-59 1083 6

Kolding 55-59 1050 8

Vejle 55-59 878 7

Fredericia 60-64 710 11

Horsens 60-64 923 15

Kolding 60-64 895 7

Vejle 60-64 839 10

Fredericia 65-69 581 10

Horsens 65-69 834 10

Kolding 65-69 702 11

Vejle 65-69 631 14

Fredericia 70-74 509 11

Horsens 70-74 634 12

Kolding 70-74 535 9

Vejle 70-74 539 8

Fredericia 75+ 605 10

Horsens 75+ 782 2

Kolding 75+ 659 12

Vejle 75+ 619 7

; run;

proc genmod data=cancer;

class city(ref='Frederic') age(ref='40-54');

model cases = city age / dist=poi link=log offset=lpop type3;

run;And, here is the output from the GENMOD procedure:

By adding offset in the MODEL statement in GLM in R, we can specify an offset variable. The offset variable serves to normalize the fitted cell means per some space, grouping, or time interval to model the rates. In this case, population is the offset variable.

library(ISwR)

library(dplyr)

data(eba1977)

eba1977 = eba1977 %>% mutate(lpop = log(pop))

model = glm(cases ~ city + age, offset=lpop, family=poisson, data=eba1977)

summary(model)

anova(model, test='Chisq')Here is the output we get:

summary(model)

Call:

glm(formula = cases ~ city + age, family = poisson, data = eba1977,

offset = lpop)

Coefficients:

Estimate Std. Error z value Pr(>|z|)

(Intercept) -5.6321 0.2003 -28.125 < 2e-16 ***

cityHorsens -0.3301 0.1815 -1.818 0.0690 .

cityKolding -0.3715 0.1878 -1.978 0.0479 *

cityVejle -0.2723 0.1879 -1.450 0.1472

age55-59 1.1010 0.2483 4.434 9.23e-06 ***

age60-64 1.5186 0.2316 6.556 5.53e-11 ***

age65-69 1.7677 0.2294 7.704 1.31e-14 ***

age70-74 1.8569 0.2353 7.891 3.00e-15 ***

age75+ 1.4197 0.2503 5.672 1.41e-08 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Dispersion parameter for poisson family taken to be 1)

Null deviance: 129.908 on 23 degrees of freedom

Residual deviance: 23.447 on 15 degrees of freedom

AIC: 137.84

Number of Fisher Scoring iterations: 5The fitted model is

\[ \log\dfrac{\hat{\mu}}{t} = -5.6321-0.3301C_1-0.3715C_2-0.2723C_3 + 1.1010A_1+\cdots+1.4197A_5 \]

where \(C_1\), \(C_2\), and \(C_3\) are the indicators for cities Horsens, Kolding, and Vejle (Fredericia as baseline), and \(A_1,\ldots,A_5\) are the indicators for the last five age groups (40-54 as baseline).

Thus, for people in (baseline) age group 40-54 and in the city of Fredericia, the estimated average rate of lung cancer is

\[ \dfrac{\hat{\mu}}{t}=e^{-5.6321}=0.003581 \]

per person. For a group of 100 people in this category, the estimated average count of incidents would be \(100(0.003581)=0.3581\).

Does the overall model fit? From the deviance statistic 23.447 relative to a chi-square distribution with 15 degrees of freedom (the saturated model with city by age interactions would have 24 parameters), the p-value would be 0.0715, which is borderline. But the model with all interactions would require 24 parameters, which isn’t desirable either. Is there perhaps something else we can try?

Example 9.3 (Considering Age as Quantitative) Since age was originally recorded in six groups, we needed five separate indicator variables to model it as a categorical predictor. We may also consider treating it as quantitative variable if we assign a numeric value, say the midpoint, to each group.

The following code creates a quantitative variable for age from the midpoint of each age group. It also creates an empirical rate variable for use in plotting. Note that this empirical rate is the sample ratio of observed counts to population size \(Y/t\), not to be confused with the population rate \(\mu/t\), which is estimated from the model.

data cancer;

set cancer;

if age='40-54' then age_mid=47;

if age='55-59' then age_mid=57;

if age='60-64' then age_mid=62;

if age='65-69' then age_mid=67;

if age='70-74' then age_mid=72;

if age='75+' then age_mid=75;

e_rate = cases/pop;

run;

proc sgplot data=cancer;

reg y=e_rate x=age_mid / group=city;

run;With age treated as quantitative, we no longer include it in the CLASS statement.

proc genmod data=cancer;

class city;

model cases = age_mid / dist=poi link=log offset=lpop type3;

run;The interpretation of the slope for age is now the increase in the rate of lung cancer (per capita) for each 1-year increase in age, provided city is held fixed.

library(dplyr)

eba1977 = eba1977 %>% mutate(

age.mid = rep(c(47,57,62,67,72,75), each=4),

e.rate = cases/pop)

ggplot(eba1977, aes(x=age.mid, y=e.rate, color=city)) +

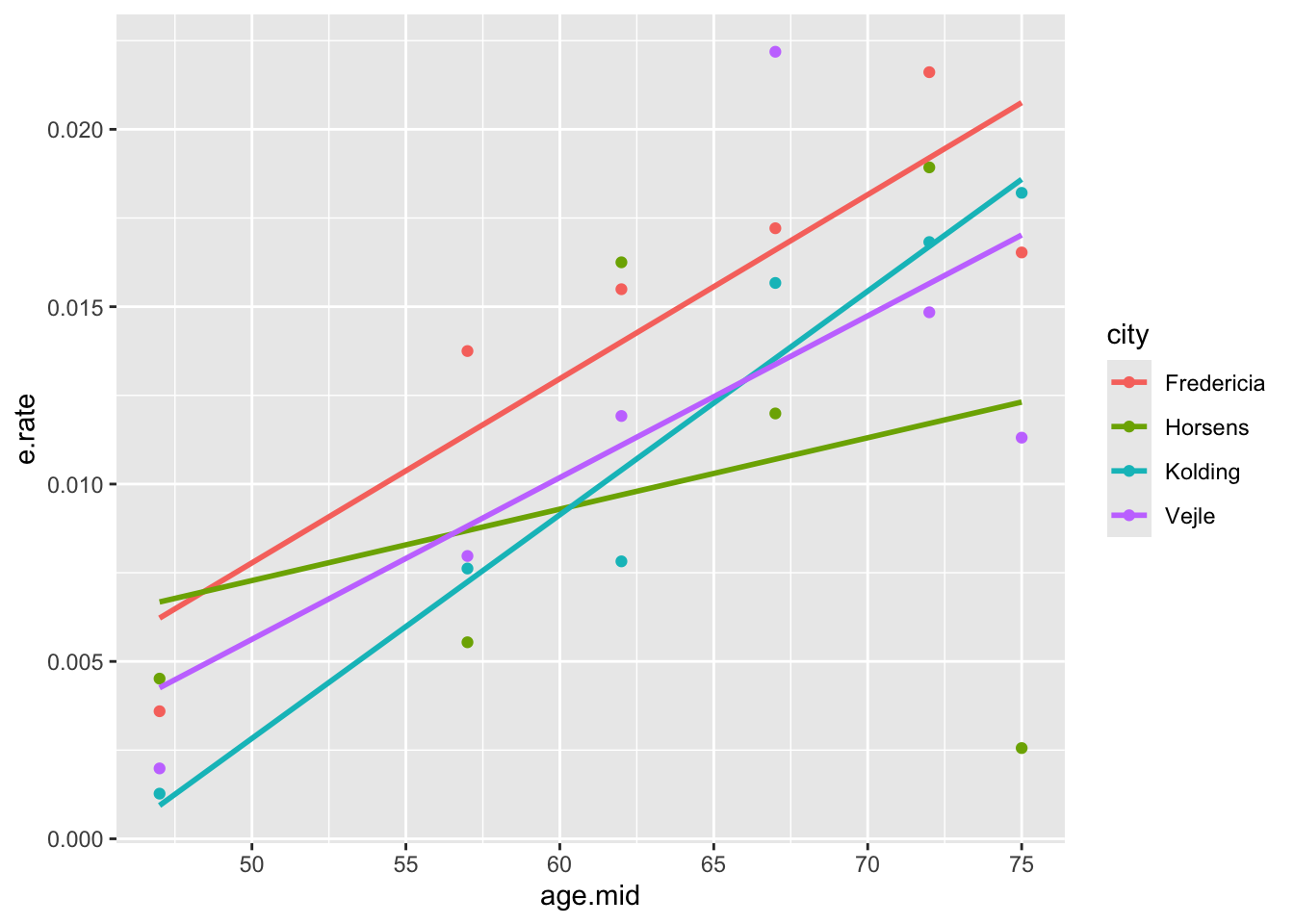

geom_point() + geom_smooth(method='lm', se=FALSE)The plot generated shows increasing trends between age and lung cancer rates for each city. There is also some evidence for a city effect as well as for city by age interaction, but the significance of these is doubtful, given the relatively small data set.

As we have seen before when comparing model fits with a predictor as categorical or quantitative, the benefit of treating age as quantitative is that only a single slope parameter is needed to model a linear relationship between age and the cancer rate. The tradeoff is that if this linear relationship is not accurate, the lack of fit overall may still increase.

model = glm(cases ~ city + age.mid, offset=lpop, family=poisson, data=eba1977)

summary(model)

anova(model, test='Chisq')The interpretation of the slope for age is now the increase in the log rate of lung cancer (per capita) for each 1-year increase in age, provided city is held fixed.

summary(model)

Call:

glm(formula = cases ~ city + age.mid, family = poisson, data = eba1977,

offset = lpop)

Coefficients:

Estimate Std. Error z value Pr(>|z|)

(Intercept) -7.982221 0.431070 -18.517 <2e-16 ***

cityHorsens -0.305279 0.181423 -1.683 0.0924 .

cityKolding -0.350278 0.187705 -1.866 0.0620 .

cityVejle -0.246016 0.187769 -1.310 0.1901

age.mid 0.056759 0.006527 8.696 <2e-16 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Dispersion parameter for poisson family taken to be 1)

Null deviance: 129.908 on 23 degrees of freedom

Residual deviance: 46.448 on 19 degrees of freedom

AIC: 152.84

Number of Fisher Scoring iterations: 59.4 Lesson Summary

In this lesson, we showed how the generalized linear model can be applied to count data, using the Poisson distribution with the log link. Compared with the logistic regression model, two differences we noted are the option to use the negative binomial distribution as an alternate random component when correcting for overdispersion and the use of an offset to adjust for observations collected over different windows of time, space, etc. Much of the properties otherwise are the same (parameter estimation, deviance tests for model comparisons, etc.).

One other common characteristic between logistic and Poisson regression that we change for the log-linear model coming up is the distinction between explanatory and response variables. The log-linear model makes no such distinction and instead treats all variables of interest together jointly. This allows greater flexibility in what types of associations can be fit and estimated, but one restriction in this model is that it applies only to categorical variables.

Source: E.B. Andersen (1977), Multiplicative Poisson models with unequal cell rates, Scandinavian Journal of Statistics, 4:153–158.↩︎