Lesson 12: Multicollinearity & Other Regression Pitfalls

Lesson 12: Multicollinearity & Other Regression PitfallsOverview

So far, in our study of multiple regression models, we have ignored something that we probably shouldn't have — and that's what is called multicollinearity. We're going to correct our blissful ignorance in this lesson.

Multicollinearity exists when two or more of the predictors in a regression model are moderately or highly correlated. Unfortunately, when it exists, it can wreak havoc on our analysis and thereby limit the research conclusions we can draw. As we will soon learn, when multicollinearity exists, any of the following pitfalls can be exacerbated:

- the estimated regression coefficient of any one variable depends on which other predictors are included in the model

- the precision of the estimated regression coefficients decreases as more predictors are added to the model

- the marginal contribution of any one predictor variable in reducing the error sum of squares depends on which other predictors are already in the model

- hypothesis tests for \(\beta_k = 0\) may yield different conclusions depending on which predictors are in the model

In this lesson, we'll take a look at an example or two that illustrates each of the above outcomes. Then, we'll spend some time learning how not only to detect multicollinearity but also how to reduce it once we've found it.

We'll also consider other regression pitfalls, including extrapolation, nonconstant variance, autocorrelation, overfitting, excluding important predictor variables, missing data, power, and sample size.

Objectives

- Distinguish between structural multicollinearity and data-based multicollinearity.

- Know what multicollinearity means.

- Understand the effects of multicollinearity on various aspects of regression analyses.

- Understand the effects of uncorrelated predictors on various aspects of regression analyses.

- Understand variance inflation factors, and how to use them to help detect multicollinearity.

- Know the two ways of reducing data-based multicollinearity.

- Understand how centering the predictors in a polynomial regression model helps to reduce structural multicollinearity.

- Know the main issues surrounding other regression pitfalls, including extrapolation, nonconstant variance, autocorrelation, overfitting, excluding important predictor variables, missing data, and power, and sample size.

Lesson 12 Code Files

Below is a zip file that contains all the data sets used in this lesson:

- allentest.txt

- allentestn23.txt

- bloodpress.txt

- cement.txt

- exerimmun.txt

- poverty.txt

- uncorrelated.txt

- uncorrpreds.txt

12.1 - What is Multicollinearity?

12.1 - What is Multicollinearity?As stated in the lesson overview, multicollinearity exists whenever two or more of the predictors in a regression model are moderately or highly correlated. Now, you might be wondering why can't a researcher just collect his data in such a way to ensure that the predictors aren't highly correlated. Then, multicollinearity wouldn't be a problem, and we wouldn't have to bother with this silly lesson.

Unfortunately, researchers often can't control the predictors. Obvious examples include a person's gender, race, grade point average, math SAT score, IQ, and starting salary. For each of these predictor examples, the researcher just observes the values as they occur for the people in her random sample.

Multicollinearity happens more often than not in such observational studies. And, unfortunately, regression analyses most often take place on data obtained from observational studies. If you aren't convinced, consider the example data sets for this course. Most of the data sets were obtained from observational studies, not experiments. It is for this reason that we need to fully understand the impact of multicollinearity on our regression analyses.

Types of multicollinearity

- Structural multicollinearity is a mathematical artifact caused by creating new predictors from other predictors — such as creating the predictor \(x^{2}\) from the predictor x.

- Data-based multicollinearity, on the other hand, is a result of a poorly designed experiment, reliance on purely observational data, or the inability to manipulate the system on which the data are collected.

In the case of structural multicollinearity, the multicollinearity is induced by what you have done. Data-based multicollinearity is the more troublesome of the two types of multicollinearity. Unfortunately, it is the type we encounter most often!

Example 12-1

Let's take a quick look at an example in which data-based multicollinearity exists. Some researchers observed — notice the choice of word! — the following Blood Pressure data on 20 individuals with high blood pressure:

- blood pressure (y = BP, in mm Hg)

- age (\(x_{1} = Age\), in years)

- weight (\(x_{2} = Weight\), in kg)

- body surface area (\(x_{3} = BSA\), in sq m)

- duration of hypertension (\(x_{4} = Dur\), in years)

- basal pulse (\(x_{5} = Pulse\), in beats per minute)

- stress index (\(x_{6} = Stress\))

The researchers were interested in determining if a relationship exists between blood pressure and age, weight, body surface area, duration, pulse rate, and/or stress level.

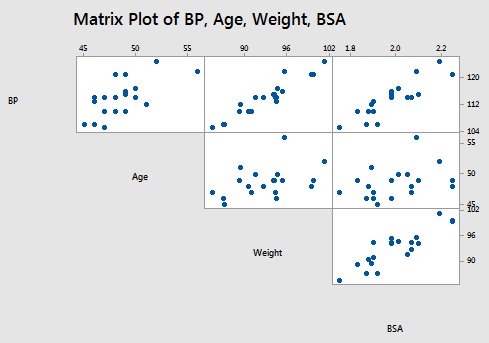

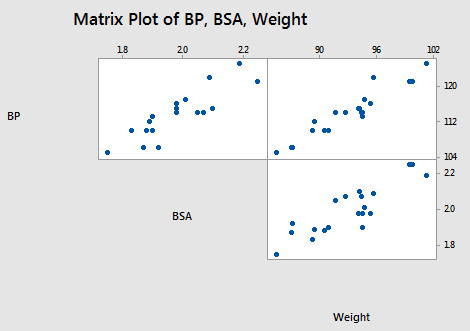

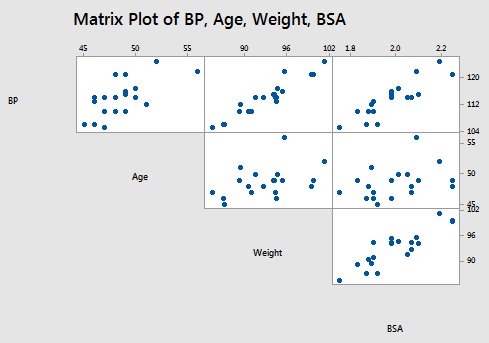

The matrix plot of BP, Age, Weight, and BSA:

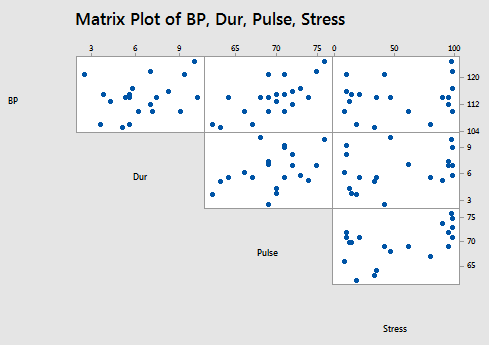

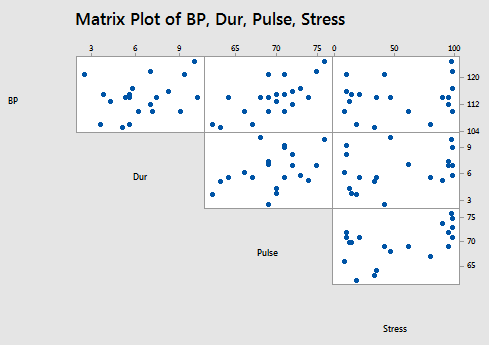

and the matrix plot of BP, Dur, Pulse, and Stress:

allow us to investigate the various marginal relationships between the response BP and the predictors. Blood pressure appears to be related fairly strongly to Weight and BSA, and hardly related at all to the Stress level.

The matrix plots also allow us to investigate whether or not relationships exist among the predictors. For example, Weight and BSA appear to be strongly related, while Stress and BSA appear to be hardly related at all.

The following correlation matrix:

Correlation: BP, Age, Weight, BSA, Dur, Pulse, Stress

| BP | Age | Weight | BSA | Dur | Pulse | |

|---|---|---|---|---|---|---|

| Age | 0.659 | |||||

| Weight | 0.950 | 0.407 | ||||

| BSA | 0.866 | 0.378 | 0.875 | |||

| Dur | 0.293 | 0.344 | 0.201 | 0.131 | ||

| Pulse | 0.721 | 0.619 | 0.659 | 0.465 | 0.402 | |

| Stress | 0.164 | 0.368 | 0.034 | 0.018 | 0.312 | 0.506 |

provides further evidence of the above claims. Blood pressure appears to be related fairly strongly to Weight (r = 0.950) and BSA (r = 0.866), and hardly related at all to Stress level (r = 0.164). And, Weight and BSA appear to be strongly related (r = 0.875), while Stress and BSA appear to be hardly related at all (r = 0.018). The high correlation among some of the predictors suggests that data-based multicollinearity exists.

Now, what we need to learn is the impact of multicollinearity on regression analysis. Let's go do it!

12.2 - Uncorrelated Predictors

12.2 - Uncorrelated PredictorsIn order to get a handle on this multicollinearity thing, let's first investigate the effects that uncorrelated predictors have on regression analyses. To do so, we'll investigate a "contrived" data set, in which the predictors are perfectly uncorrelated. Then, we'll investigate the second example of a "real" data set, in which the predictors are nearly uncorrelated. Our two investigations will allow us to summarize the effects that uncorrelated predictors have on regression analyses.

Then, on the next page, we'll investigate the effects that highly correlated predictors have on regression analyses. In doing so, we'll learn — and therefore be able to summarize — the various effects multicollinearity has on regression analyses.

What is the effect on regression analyses if the predictors are perfectly uncorrelated?

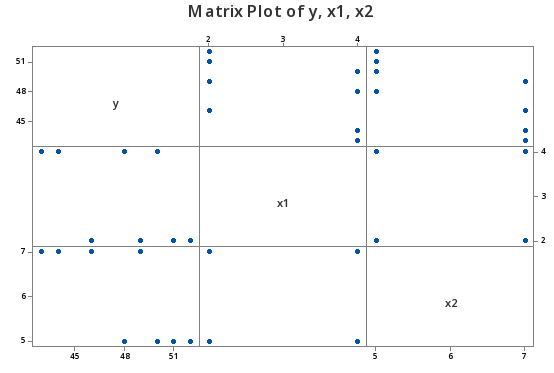

Consider the following matrix plot of the response y and two predictors \(x_{1}\) and \(x_{2}\), of a contrived data set (Uncorrelated Predictors data set), in which the predictors are perfectly uncorrelated:

As you can see there is no apparent relationship at all between the predictors \(x_{1}\) and \(x_{2}\). That is, the correlation between \(x_{1}\) and \(x_{2}\) is zero:

Pearson correlation of x1 and x2 = 0.000

suggesting the two predictors are perfectly uncorrelated.

Now, let's just proceed quickly through the output of a series of regression analyses collecting various pieces of information along the way. When we're done, we'll review what we learned by collating the various items in a summary table.

The regression of the response y on the predictor \(x_{1}\):

Regression Analysis: y versus x1

Analysis of Variance

| Source | DF | Seq SS | Seq MS | F-Value | P-Value |

|---|---|---|---|---|---|

| Regression | 1 | 21.13 | 21.125 | 2.36 | 0.176 |

| x1 | 1 | 21.13 | 21.125 | 2.36 | 0.176 |

| Error | 6 | 53.75 | 8.958 | ||

| Total | 7 | 74.88 |

Model Summary

| S | R-sq | R-sq(adj) | R-sq(pred) |

|---|---|---|---|

| 2.99305 | 28.21% | 16.25% | 0.00% |

Coefficients

| Term | Coef | SE Coef | T-Value | P-Value | VIF |

|---|---|---|---|---|---|

| Constant | 52.75 | 3.35 | 15.76 | 0.000 | |

| x1 | -1.62 | 1.06 | -1.54 | 0.176 | 1.00 |

Regression Equation

\(\widehat{y} = 52.75 - 1.62 x1\)

yields the estimated coefficient \(b_{1}\) = -1.62, the standard error se(\(b_{1}\)) = 1.06, and the regression sum of squares SSR(\(x_{1}\)) = 21.13.

The regression of the response y on the predictor \(x_{2}\):

Regression Analysis: y versus x2

Analysis of Variance

| Source | DF | Seq SS | Seq MS | F-Value | P-Value |

|---|---|---|---|---|---|

| Regression | 1 | 45.13 | 45.125 | 9.10 | 0.023 |

| x2 | 1 | 45.13 | 45.125 | 9.10 | 0.023 |

| Error | 6 | 29.75 | 4.958 | ||

| Total | 7 | 74.88 |

Model Summary

| S | R-sq | R-sq(adj) | R-sq(pred) |

|---|---|---|---|

| 2.22673 | 60.27% | 53.64% | 29.36% |

Coefficients

| Term | Coef | SE Coef | T-Value | P-Value | VIF |

|---|---|---|---|---|---|

| Constant | 62.13 | 4.79 | 12.97 | 0.000 | |

| x2 | -2.375 | 0.787 | -3.02 | 0.023 | 1.00 |

Regression Equation

\(\widehat{y} = 62.13 - 2.375 x2\)

yields the estimated coefficient \(b_{2}\) = -2.375, the standard error se(\(b_{2}\)) = 0.787, and the regression sum of squares SSR(\(x_{2}\)) = 45.13.

The regression of the response y on the predictors \(x_{1 }\) and \(x_{2}\) (in that order):

Regression Analysis: y versus x1, x2

Analysis of Variance

| Source | DF | Seq SS | Seq MS | F-Value | P-Value |

|---|---|---|---|---|---|

| Regression | 2 | 66.250 | 33.125 | 19.20 | 0.005 |

| x1 | 1 | 21.125 | 21.125 | 12.25 | 0.017 |

| x2 | 1 | 45.125 | 45.125 | 26.16 | 0.004 |

| Error | 5 | 8.625 | 1.725 | ||

| Lack-of-Fit | 1 | 1.125 | 1.125 | 0.60 | 0.482 |

| Pure Error | 4 | 7.500 | 1.875 | ||

| Total | 7 | 74.875 |

Model Summary

| S | R-sq | R-sq(adj) | R-sq(pred) |

|---|---|---|---|

| 1.31339 | 88.48% | 83.87% | 70.51% |

Coefficients

| Term | Coef | SE Coef | T-Value | P-Value | VIF |

|---|---|---|---|---|---|

| Constant | 67.00 | 3.15 | 21.27 | 0.000 | |

| x1 | -1.625 | 0.464 | -3.50 | 0.017 | 1.00 |

| x2 | -2.375 | 0.464 | -5.11 | 0.004 | 1.00 |

Regression Equation

\(\widehat{y} = 67.00 - 1.625 x1 - 2.375 x2\)

yields the estimated coefficients \(b_{1}\) = -1.625 and \(b_{2}\) = -2.375, the standard errors se(\(b_{1}\)) = 0.464 and se(\(b_{2}\)) = 0.464, and the sequential sum of squares SSR(\(x_{2}\)|\(x_{1}\)) = 45.125.

The regression of the response y on the predictors \(x_{2 }\) and \(x_{1}\) (in that order):

Regression Analysis: y versus x2, x1

Analysis of Variance

| Source | DF | Seq SS | Seq MS | F-Value | P-Value |

|---|---|---|---|---|---|

| Regression | 2 | 66.250 | 33.125 | 19.20 | 0.005 |

| x2 | 1 | 45.125 | 45.125 | 26.16 | 0.004 |

| x1 | 1 | 21.125 | 21.125 | 12.25 | 0.017 |

| Error | 5 | 8.625 | 1.725 | ||

| Lack-of-Fit | 1 | 1.125 | 1.125 | 0.60 | 0.482 |

| Pure Error | 4 | 7.500 | 1.875 | ||

| Total | 7 | 74.875 |

Model Summary

| S | R-sq | R-sq(adj) | R-sq(pred) |

|---|---|---|---|

| 1.31339 | 88.48% | 83.87% | 70.51% |

Coefficients

| Term | Coef | SE Coef | T-Value | P-Value | VIF |

|---|---|---|---|---|---|

| Constant | 67.00 | 3.15 | 21.27 | 0.000 | |

| x2 | -2.375 | 0.464 | -5.11 | 0.004 | 1.00 |

| x1 | -1.625 | 0.464 | -3.50 | 0.017 | 1.00 |

Regression Equation

\(\widehat{y} = 67.00 - 2.375 x2 - 1.625 x1\)

yields the estimated coefficients \(b_{1}\) = -1.625 and \(b_{2}\) = -2.375, the standard errors se(\(b_{1}\)) = 0.464 and se(\(b_{2}\)) = 0.464, and the sequential sum of squares SSR(\(x_{1}\)|\(x_{2}\)) = 21.125.

Okay — as promised — compiling the results in a summary table, we obtain:

|

Model |

\(b_{1}\) | se(\(b_{1}\)) | \(b_{2}\) | se(\(b_{2}\)) | Seq SS |

|---|---|---|---|---|---|

| \(x_{1}\) only | -1.62 | 1.06 | --- | --- | SSR(\(x_{1}\)) 21.13 |

| \(x_{2}\) only | --- | --- | -2.375 | 45.13 | SSR(\(x_{2}\)) 45.13 |

| \(x_{1}\), \(x_{2}\) (in order) |

-1.625 | 0.464 | -2.375 | 0.464 | SSR(\(x_{2}\)|\(x_{1}\)) 21.125 |

| \(x_{2}\), \(x_{1}\) (in order) |

-1.625 | 0.464 | -2.375 | 0.464 | SSR(\(x_{1}\)|\(x_{2}\)) 45.125 |

What do we observe?

- The estimated slope coefficients \(b_{1}\) and \(b_{2}\) are the same regardless of the model used.

- The standard errors se(\(b_{1}\)) and se(\(b_{2}\)) don't change much at all from model to model.

- The sum of squares SSR(\(x_{1}\)) is the same as the sequential sum of squares SSR(\(x_{1}\)|\(x_{2}\)). The sum of squares SSR(\(x_{2}\)) is the same as the sequential sum of squares SSR(\(x_{2}\)|\(x_{1}\)).

These all seem to be good things! Because the slope estimates stay the same, the effect on the response ascribed to a predictor doesn't depend on the other predictors in the model. Because SSR(\(x_{1}\)) = SSR(\(x_{1}\)|\(x_{2}\)), the marginal contribution that \(x_{1}\) has in reducing the variability in the response y doesn't depend on the predictor \(x_{2}\). Similarly, because SSR(\(x_{2}\)) = SSR(\(x_{2}\)|\(x_{1}\)), the marginal contribution that \(x_{2}\) has in reducing the variability in the response y doesn't depend on the predictor \(x_{1}\).

These are the things we can hope for in regression analysis — but, then reality sets in! Recall that we obtained the above results for a contrived data set, in which the predictors are perfectly uncorrelated. Do we get similar results for real data with only nearly uncorrelated predictors? Let's see!

What is the effect on regression analyses if the predictors are nearly uncorrelated?

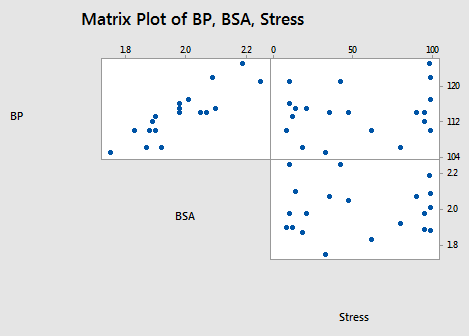

To investigate this question, let's go back and take a look at the blood pressure data set (Blood Pressure data set). In particular, let's focus on the relationships among the response y = BP and the predictors \(x_{3}\) = BSA and \(x_{6}\) = Stress:

As the above matrix plot and the following correlation matrix suggest:

Correlation: BP, Age, Weight, BSA, Dur, Pulse, Stress

| BP | Age | Weight | BSA | Dur | Pulse | |

|---|---|---|---|---|---|---|

| Age | 0.659 | |||||

| Weight | 0.950 | 0.407 | ||||

| BSA | 0.866 | 0.378 | 0.875 | |||

| Dur | 0.293 | 0.344 | 0.201 | 0.131 | ||

| Pulse | 0.721 | 0.619 | 0.659 | 0.465 | 0.402 | |

| Stress | 0.164 | 0.368 | 0.034 | 0.018 | 0.312 | 0.506 |

there appears to be a strong relationship between y = BP and the predictor \(x_{3}\) = BSA (r = 0.866), a weak relationship between y = BP and \(x_{6}\) = Stress (r = 0.164), and an almost non-existent relationship between \(x_{3}\) = BSA and \(x_{6}\) = Stress (r = 0.018). That is, the two predictors are nearly perfectly uncorrelated.

What effect do these nearly perfectly uncorrelated predictors have on regression analyses? Let's proceed similarly through the output of a series of regression analyses collecting various pieces of information along the way. When we're done, we'll review what we learned by collating the various items in a summary table.

The regression of the response y = BP on the predictor \(x_{6}\)= Stress:

Analysis of Variance

| Source | DF | Seq SS | Seq MS | F-Value | P-Value |

|---|---|---|---|---|---|

| Regression | 1 | 15.04 | 15.04 | 0.50 | 0.490 |

| Stress | 1 | 15.04 | 15.04 | 0.50 | 0.490 |

| Error | 18 | 544.96 | 30.28 | ||

| Lack-of-Fit | 14 | 457.79 | 32.70 | 1.50 | 0.374 |

| Pure Error | 4 | 87.17 | 21.79 | ||

| Total | 19 | 560.00 |

Coefficients

| Term | Coef | SE Coef | T-Value | P-Value | VIF |

|---|---|---|---|---|---|

| Constant | 112.72 | 2.19 | 51.39 | 0.000 | |

| Stress | 0.0240 | 0.0340 | 0.70 | 0.490 | 1.00 |

Regression Equation

\(\widehat{BP} = 112.72 + 0.0240 Stress\)

yields the estimated coefficient \(b_{6}\) = 0.0240, the standard error se(\(b_{6}\)) = 0.0340, and the regression sum of squares SSR(\(x_{6}\)) = 15.04.

The regression of the response y = BP on the predictor \(x_{3 }\)= BSA:

Analysis of Variance

| Source | DF | Seq SS | Seq MS | F-Value | P-Value |

|---|---|---|---|---|---|

| Regression | 1 | 419.858 | 419.858 | 53.93 | 0.000 |

| BSA | 1 | 419.858 | 419.858 | 53.93 | 0.000 |

| Error | 18 | 140.142 | 7.786 | ||

| Lack-of-Fit | 13 | 133.642 | 10.280 | 7.91 | 0.016 |

| Pure Error | 5 | 6.500 | 1.300 | ||

| Total | 19 | 560.000 |

Coefficients

| Term | Coef | SE Coef | T-Value | P-Value | VIF |

|---|---|---|---|---|---|

| Constant | 49.50 | 4.65 | 10.63 | 0.000 | |

| BSA | 34.44 | 4.69 | 7.34 | 0.000 | 1.00 |

Regression Equation

\(\widehat{BP} = 45.18 + 34.44 BSA\)

yields the estimated coefficient \(b_{3}\) = 34.44, the standard error se(\(b_{3}\)) = 4.69, and the regression sum of squares SSR(\(x_{3}\)) = 419.858.

The regression of the response y = BP on the predictors \(x_{6}\)= Stress and \(x_{3}\)= BSA (in that order):

Analysis of Variance

| Source | DF | Seq SS | Seq MS | F-Value | P-Value |

|---|---|---|---|---|---|

| Regression | 2 | 432.12 | 216.058 | 28.72 | 0.000 |

| Stress | 1 | 15.04 | 15.044 | 2.00 | 0.175 |

| BSA | 1 | 417.07 | 417.073 | 55.44 | 0.000 |

| Error | 17 | 127.88 | 7.523 | ||

| Total | 19 | 560.00 |

Coefficients

| Term | Coef | SE Coef | T-Value | P-Value | VIF |

|---|---|---|---|---|---|

| Constant | 44.24 | 9.26 | 4.78 | 0.000 | |

| Stress | 0.0217 | 0.0170 | 1.28 | 0.219 | 1.00 |

| BSA | 34.33 | 4.61 | 7.45 | 0.000 | 1.00 |

Regression Equation

\(\widehat{y} = 44.24 + 0.0217 Stress + 34.33 BSA\)

yields the estimated coefficients \(b_{6}\) = 0.0217 and \(b_{3}\) = 34.33, the standard errors se(\(b_{6}\)) = 0.0170 and se(\(b_{2}\)) = 4.61, and the sequential sum of squares SSR(\(x_{3}\)|\(x_{6}\)) = 417.07.

Finally, the regression of the response y = BP on the predictors \(x_{3 }\)= BSA and \(x_{6}\)= Stress (in that order):

Analysis of Variance

| Source | DF | Seq SS | Seq MS | F-Value | P-Value |

|---|---|---|---|---|---|

| Regression | 2 | 432.12 | 216.058 | 28.72 | 0.000 |

| BSA | 1 | 419.86 | 419.858 | 55.81 | 0.000 |

| Stress | 1 | 12.26 | 12.259 | 1.63 | 0.219 |

| Error | 6 | 104.000 | 17.333 | ||

| Total | 7 | 112.000 |

Coefficients

| Term | Coef | SE Coef | T-Value | P-Value | VIF |

|---|---|---|---|---|---|

| Constant | 44.24 | 9.26 | 4.78 | 0.000 | |

| BSA | 34.33 | 4.61 | 7.45 | 0.000 | 1.00 |

| Stress | 0.0217 | 0.0170 | 1.28 | 0.219 | 1.00 |

Regression Equation

\(\widehat{BP} = 44.24 + 34.33 BSA + 0.0217 Stress\)

yields the estimated coefficients \(b_{6}\) = 0.0217 and \(b_{3}\) = 34.33, the standard errors se(\(b_{6}\)) = 0.0170 and se(\(b_{2}\)) = 4.61, and the sequential sum of squares SSR(\(x_{6}\)|\(x_{3}\)) = 12.26.

Again — as promised — compiling the results in a summary table, we obtain:

| Model | \(b_{6}\) | se(\(b_{6}\)) | \(b_{3}\) | se(\(b_{3}\)) | Seq SS |

|---|---|---|---|---|---|

| \(x_{6}\) only | 0.0240 | 0.0340 | --- | --- | SSR(\(x_{6}\)) 15.04 |

| \(x_{3}\) only | --- | --- | 34.44 | 4.69 | SSR(\(x_{3}\)) 419.858 |

| \(x_{6}\), \(x_{3}\) (in order) |

0.0217 | 0.0170 | 34.33 | 4.61 | SSR(\(x_{3}\)|\(x_{6}\)) 417.07 |

| \(x_{3}\), \(x_{6}\) (in order) |

0.0217 | 0.0170 | 34.33 | 4.61 | SSR(\(x_{6}\)|\(x_{3}\)) 12.26 |

What do we observe? If the predictors are nearly perfectly uncorrelated:

- We don't get identical, but very similar slope estimates \(b_{3}\) and \(b_{6}\), regardless of the predictors in the model.

- The sum of squares SSR(\(x_{3}\)) is not the same, but very similar to the sequential sum of squares SSR(\(x_{3}\)|\(x_{6}\)).

- The sum of squares SSR(\(x_{6}\)) is not the same, but very similar to the sequential sum of squares SSR(\(x_{6}\)|\(x_{3}\)).

Again, these are all good things! In short, the effect on the response ascribed to a predictor is similar regardless of the other predictors in the model. And, the marginal contribution of a predictor doesn't appear to depend much on the other predictors in the model.

Try it!

Uncorrelated predictors

Effect of perfectly uncorrelated predictor variables.

This exercise reviews the benefits of having perfectly uncorrelated predictor variables. The results of this exercise demonstrate a strong argument for conducting "designed experiments" in which the researcher sets the levels of the predictor variables in advance, as opposed to conducting an "observational study" in which the researcher merely observes the levels of the predictor variables as they happen. Unfortunately, many regression analyses are conducted on observational data rather than experimental data, limiting the strength of the conclusions that can be drawn from the data. As this exercise demonstrates, you should conduct an experiment, whenever possible, not an observational study. Use the (contrived) data stored in the Uncorrelated Predictor data set to complete this lab exercise.

-

Using the Stat >> Basic Statistics >> Correlation... command in Minitab, calculate the correlation coefficient between \(X_{1}\) and \(X_{2}\). Are the two variables perfectly uncorrelated?Correlation = 0 so, yes, the two variables are perfectly uncorrelated

-

Fit the simple linear regression model with y as the response and \(x_{1}\) as the single predictor:

- What is the value of the estimated slope coefficient \(b_{1}\)?

- What is the regression sum of squares, SSR (\(X_{1}\)), when \(x_{1}\) is the only predictor in the model?

Estimated slope coefficient \(b_1 = -5.80\)

\(SSR(X_1) = 336.40\)

-

Now, fit the simple linear regression model with y as the response and \(x_{2}\) as the single predictor:

- What is the value of the estimated slope coefficient \(b_{2}\)?

- What is the regression sum of squares, SSR (\(X_{2}\)), when \(x_{2}\) is the only predictor in the model?

Estimated slope coefficient \(b_2 = 1.36\).

\(SSR(X_2) = 206.2\).

-

Now, fit the multiple linear regression model with y as the response and \(x_{1}\) as the first predictor and \(x_{2}\) as the second predictor:

- What is the value of the estimated slope coefficient \(b_{1}\)? Is the estimate \(b_{1}\) different than that obtained when \(x_{1}\) was the only predictor in the model?

- What is the value of the estimated slope coefficient \(b_{2}\)? Is the estimate \(b_{2}\) different than that obtained when \(x_{2}\) was the only predictor in the model?

- What is the sequential sum of squares, SSR (\(X_{2}\)|\(X_{1}\))? Does the reduction in the error sum of squares when x2}\) is added to the model depend on whether \(x_{1}\) is already in the model?

Estimated slope coefficient \(b_1 = -5.80\), the same as before.

Estimated slope coefficient \(b_2 = 1.36\), the same as before.

\(SSR(X_2|X_1) = 206.2 = SSR(X_2)\), so this doesn’t depend on whether \(X_1\) is already in the model.

-

Now, fit the multiple linear regression model with y as the response and \(x_{2}\) as the first predictor, and \(x_{1}\) as the second predictor:

- What is the sequential sum of squares, SSR (\(X_{1}\)|\(X_{2}\))? Does the reduction in the error sum of squares when \(x_{1}\) is added to the model depend on whether \(x_{2}\) is already in the model?

\(SSR(X_2|X_1) = 336.4 = SSR(X_1)\), so this doesn’t depend on whether \(X_2\) is already in the model.

-

When the predictor variables are perfectly uncorrelated, is it possible to quantify the effect a predictor has on the response without regard to the other predictors?

Yes -

In what way does this exercise demonstrate the benefits of conducting a designed experiment rather than an observational study?

It is possible to quantify the effect a predictor has on the response regardless of whether other (uncorrelated) predictors have been included

12.3 - Highly Correlated Predictors

12.3 - Highly Correlated PredictorsOkay, so we've learned about all the good things that can happen when predictors are perfectly or nearly perfectly uncorrelated. Now, let's discover the bad things that can happen when predictors are highly correlated.

What happens if the predictor variables are highly correlated?

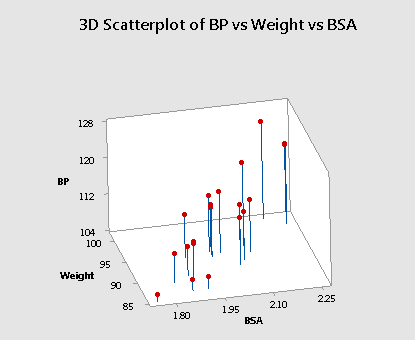

Let's return to the Blood Pressure data set. This time, let's focus, however, on the relationships among the response y = BP and the predictors \(x_2\) = Weight and \(x_3\) = BSA:

As the matrix plot and the following correlation matrix suggest:

Correlation: BP, Age, Weight, BSA, Dur, Pulse, Stress

| BP | Age | Weight | BSA | Dur | Pulse | |

|---|---|---|---|---|---|---|

| Age | 0.659 | |||||

| Weight | 0.950 | 0.407 | ||||

| BSA | 0.866 | 0.378 | 0.875 | |||

| Dur | 0.293 | 0.344 | 0.201 | 0.131 | ||

| Pulse | 0.721 | 0.619 | 0.659 | 0.465 | 0.402 | |

| Stress | 0.164 | 0.368 | 0.034 | 0.018 | 0.312 | 0.506 |

there appears to be not only a strong relationship between y = BP and \(x_2\) = Weight (r = 0.950) and a strong relationship between y = BP and the predictor \(x_3\) = BSA (r = 0.866), but also a strong relationship between the two predictors \(x_2\) = Weight and \(x_3\) = BSA (r = 0.875). Incidentally, it shouldn't be too surprising that a person's weight and body surface area are highly correlated.

What impact does the strong correlation between the two predictors have on the regression analysis and the subsequent conclusions we can draw? Let's proceed as before by reviewing the output of a series of regression analyses and collecting various pieces of information along the way. When we're done, we'll review what we learned by collating the various items in a summary table.

The regression of the response y = BP on the predictor \(x_2\)= Weight:

Analysis of Variance

| Source | DF | Seq SS | Seq MS | F-Value | P-Value |

|---|---|---|---|---|---|

| Regression | 1 | 505.472 | 505.472 | 166.86 | 0.000 |

| Weight | 1 | 505.472 | 505.472 | 166.86 | 0.000 |

| Error | 18 | 54.528 | 3.029 | ||

| Lack-of-Fit | 17 | 54.028 | 3.178 | 6.36 | 0.303 |

| Pure Error | 1 | 0.500 | 0.500 | ||

| Total | 19 | 560.00 |

Modal Summary

| S | R-sq | R-sq(adj) | R-sq(pred) |

|---|---|---|---|

| 1.74050 | 90.23% | 89.72% | 88.53% |

Coefficients

| Term | Coef | SE Coef | T-Value | P-Value | VIF |

|---|---|---|---|---|---|

| Constant | 2.21 | 8.66 | 0.25 | 0.802 | |

| Weight | 1.2009 | 0.0930 | 12.92 | 0.000 | 1.00 |

Regression Equation

\(\widehat{BP} = 2.21 + 1.2009 Weight\)

yields the estimated coefficient \(b_2\) = 1.2009, the standard error se(\(b_2\)) = 0.0930, and the regression sum of squares SSR(\(x_2\)) = 505.472.

The regression of the response y = BP on the predictor \(x_3\)= BSA:

Analysis of Variance

| Source | DF | Seq SS | Seq MS | F-Value | P-Value |

|---|---|---|---|---|---|

| Regression | 1 | 419.858 | 419.858 | 53.93 | 0.000 |

| BSA | 1 | 419.858 | 419.858 | 53.93 | 0.000 |

| Error | 18 | 140.142 | 7.786 | ||

| Lack-of-Fit | 13 | 133.642 | 10.280 | 7.91 | 0.016 |

| Pure Error | 5 | 6.500 | 1.300 | ||

| Total | 19 | 560.00 |

Modal Summary

| S | R-sq | R-sq(adj) | R-sq(pred) |

|---|---|---|---|

| 2.79028 | 74.97% | 73.58% | 69.55% |

Coefficients

| Term | Coef | SE Coef | T-Value | P-Value | VIF |

|---|---|---|---|---|---|

| Constant | 45.18 | 9.39 | 4.81 | 0.000 | |

| BSA | 34.44 | 4.69 | 7.34 | 0.000 | 1.00 |

Regression Equation

\(\widehat{BP} = 45.18 + 34.44 BSA\)

yields the estimated coefficient \(b_3\) = 34.44, the standard error se(\(b_3\)) = 4.69, and the regression sum of squares SSR(\(x_3\)) = 419.858.

The regression of the response y = BP on the predictors \(x_2\)= Weight and \(x_3 \)= BSA (in that order):

Analysis of Variance

| Source | DF | Seq SS | Seq MS | F-Value | P-Value |

|---|---|---|---|---|---|

| Regression | 2 | 508.286 | 254.143 | 83.54 | 0.000 |

| Weight | 1 | 505.472 | 505.472 | 166.16 | 0.000 |

| BSA | 1 | 2.814 | 2.814 | 0.93 | 0.350 |

| Error | 17 | 51.714 | 3.042 | ||

| Total | 19 | 560.00 |

Modal Summary

| S | R-sq | R-sq(adj) | R-sq(pred) |

|---|---|---|---|

| 1.74413 | 90.77% | 89.68% | 87.78% |

Coefficients

| Term | Coef | SE Coef | T-Value | P-Value | VIF |

|---|---|---|---|---|---|

| Constant | 5.65 | 9.39 | 0.60 | 0.555 | |

| Weight | 1.039 | 0.193 | 5.39 | 0.000 | 4.28 |

| BSA | 5.83 | 6.06 | 0.96 | 0.350 | 4.28 |

Regression Equation

\(\widehat{BP} = 5.65 + 1.039 Weight + 5.83 BSA\)

yields the estimated coefficients \(b_2\) = 1.039 and \(b_3\) = 5.83, the standard errors se(\(b_2\)) = 0.193 and se(\(b_3\)) = 6.06, and the sequential sum of squares SSR(\(x_3\)|\(x_2\)) = 2.814.

And finally, the regression of the response y = BP on the predictors \(x_3\)= BSA and \(x_2\)= Weight (in that order):

Analysis of Variance

| Source | DF | Seq SS | Seq MS | F-Value | P-Value |

|---|---|---|---|---|---|

| Regression | 2 | 508.29 | 254.143 | 83.54 | 0.000 |

| BSA | 1 | 419.86 | 419.858 | 138.02 | 0.000 |

| Weight | 1 | 88.43 | 88.428 | 29.07 | 0.000 |

| Error | 17 | 51.71 | 3.042 | ||

| Total | 19 | 560.00 |

Modal Summary

| S | R-sq | R-sq(adj) | R-sq(pred) |

|---|---|---|---|

| 1.74413 | 90.77% | 89.68% | 87.78% |

Coefficients

| Term | Coef | SE Coef | T-Value | P-Value | VIF |

|---|---|---|---|---|---|

| Constant | 5.65 | 9.39 | 0.60 | 0.555 | |

| BSA | 5.83 | 6.06 | 0.96 | 0.350 | 4.28 |

| Weight | 1.039 | 0.193 | 5.39 | 0.000 | 4.28 |

Regression Equation

\(\widehat{BP} = 5.65 + 5.83 BSA + 1.039 Weight\)

yields the estimated coefficients \(b_2\) = 1.039 and \(b_3\) = 5.83, the standard errors se(\(b_2\)) = 0.193 and se(\(b_3\)) = 6.06, and the sequential sum of squares SSR(\(x_2\)|\(x_3\)) = 88.43.

Compiling the results in a summary table, we obtain:

|

Model |

\(b_2\) | se(\(b_2\)) | \(b_3\) | se(\(b_3\)) | Seq SS |

|---|---|---|---|---|---|

| \(x_2\) only | 1.2009 | 0.0930 | --- | --- | SSR(\(x_2\)) 505.472 |

| \(x_3\) only | --- | --- | 34.44 | 4.69 | SSR(\(x_3\)) 419.858 |

| \(x_2\), \(x_3\) (in order) |

1.039 | 0.193 | 5.83 | 6.06 | SSR(\(x_3\)|\(x_2\)) 2.814 |

| \(x_3\), \(x_2\) (in order) |

1.039 | 0.193 | 5.83 | 6.06 | SSR(\(x_2\)|\(x_3\)) 88.43 |

Geez — things look a little different than before. It appears as if, when predictors are highly correlated, the answers you get depend on the predictors in the model. That's not good! Let's proceed through the table and in so doing carefully summarize the effects of multicollinearity on the regression analyses.

When predictor variables are correlated, the estimated regression coefficient of any one variable depends on which other predictor variables are included in the model.

Here's the relevant portion of the table:

|

Variables in model |

\(b_2\) | \(b_3\) |

|---|---|---|

| \(x_2\) | 1.20 | --- |

| \(x_3\) | --- | 34.4 |

| \(x_2\), \(x_3\) | 1.04 | 5.83 |

Note that, depending on which predictors we include in the model, we obtain wildly different estimates of the slope parameter for \(x_3\) = BSA!

- If \(x_3\) = BSA is the only predictor included in our model, we claim that for every additional one square meter increase in body surface area (BSA), blood pressure (BP) increases by 34.4 mm Hg.

- On the other hand, if \(x_2\) = Weight and \(x_3\) = BSA are both included in our model, we claim that for every additional one square meter increase in body surface area (BSA), holding weight constant, blood pressure (BP) increases by only 5.83 mm Hg.

This is a huge difference! Our hope would be, of course, that two regression analyses wouldn't lead us to such seemingly different scientific conclusions. The high correlation between the two predictors is what causes the large discrepancy. When interpreting \(b_3\) = 34.4 in the model that excludes \(x_2\) = Weight, keep in mind that when we increase \(x_3\) = BSA then \(x_2\) = Weight also increases and both factors are associated with increased blood pressure. However, when interpreting \(b_3\) = 5.83 in the model that includes \(x_2\) = Weight, we keep \(x_2\) = Weight fixed, so the resulting increase in blood pressure is much smaller.

The fantastic thing is that even predictors that are not included in the model, but are highly correlated with the predictors in our model, can have an impact! For example, consider a pharmaceutical company's regression of territory sales on territory population and per capita income. One would, of course, expect that as the population of the territory increases, so would the sales in the territory. But, contrary to this expectation, the pharmaceutical company's regression analysis deemed the estimated coefficient of territory population to be negative. That is, as the population of the territory increases, the territory sales were predicted to decrease. After further investigation, the pharmaceutical company determined that the larger the territory, the larger to the competitor's market penetration. That is, the competitor kept the sales down in territories with large populations.

In summary, the competitor's market penetration was not included in the original model. Yet, it was later deemed to be strongly positively correlated with territory population. Even though the competitor's market penetration was not included in the original model, its strong correlation with one of the predictors in the model, greatly affected the conclusions arising from the regression analysis.

The moral of the story is that if you get estimated coefficients that just don't make sense, there is probably a very good explanation. Rather than stopping your research and running off to report your unusual results, think long and hard about what might have caused the results. That is, think about the system you are studying and all of the extraneous variables that could influence the system.

When predictor variables are correlated, the precision of the estimated regression coefficients decreases as more predictor variables are added to the model.

Here's the relevant portion of the table:

|

Variables in model |

se(\(b_2\)) | se(\(b_3\)) |

|---|---|---|

| \(x_2\) | 0.093 | --- |

| \(x_3\) | --- | 4.69 |

| \(x_2\), \(x_3\) | 0.193 | 6.06 |

The standard error for the estimated slope \(b_2\) obtained from the model including both \(x_2\) = Weight and \(x_3\) = BSA is about double the standard error for the estimated slope \(b_2\) obtained from the model including only \(x_2\) = Weight. And, the standard error for the estimated slope \(b_3\) obtained from the model including both \(x_2\) = Weight and \(x_3\) = BSA is about 30% larger than the standard error for the estimated slope \(b_3\) obtained from the model including only \(x_3\) = BSA.

What is the major implication of these increased standard errors? Recall that the standard errors are used in the calculation of the confidence intervals for the slope parameters. That is, increased standard errors of the estimated slopes lead to wider confidence intervals and hence less precise estimates of the slope parameters.

Three plots to help clarify the second effect. Recall that the first Uncorrelated Predictors data set that we investigated in this lesson contained perfectly uncorrelated predictor variables (r = 0). Upon regressing the response y on the uncorrelated predictors \(x_1\) and \(x_2\), Minitab (or any other statistical software for that matter) will find the "best fitting" plane through the data points:

Click the Best Fitting Plane button to see the best-fitting plane for this particular set of responses. Now, here's where you have to turn on your imagination. The primary characteristic of the data — because the predictors are perfectly uncorrelated — is that the predictor values are spread out and anchored in each of the four corners, providing a solid base over which to draw the response plane. Now, even if the responses (y) varied somewhat from sample to sample, the plane couldn't change all that much because of the solid base. That is, the estimated coefficients, \(b_1\) and \(b_2\) couldn't change that much, and hence the standard errors of the estimated coefficients, se(\(b_1\)) and se(\(b_2\)) will necessarily be small.

Now, let's take a look at the second example (bloodpress.txt) that we investigated in this lesson, in which the predictors \(x_3\) = BSA and \(x_6\) = Stress were nearly perfectly uncorrelated (r = 0.018). Upon regressing the response y = BP on the nearly uncorrelated predictors \(x_3\) = BSA and \(x_6 \) = Stress, we will again find the "best fitting" plane through the data points. Move the slider at the bottom of the graph to see the plane of best fit.

Again, the primary characteristic of the data — because the predictors are nearly perfectly uncorrelated — is that the predictor values are spread out and just about anchored in each of the four corners, providing a solid base over which to draw the response plane. Again, even if the responses (y) varied somewhat from sample to sample, the plane couldn't change all that much because of the solid base. That is, the estimated coefficients, \(b_3\) and \(b_6\) couldn't change all that much. The standard errors of the estimated coefficients, se(\(b_3\)) and se(\(b_6\)), again will necessarily be small.

Now, let's see what happens when the predictors are highly correlated. Let's return to our most recent example (bloodpress.txt), in which the predictors \(x_2\) = Weight and \(x_3\) = BSA are very highly correlated (r = 0.875). Upon regressing the response y = BP on the predictors \(x_2\) = Weight and \(x_3\) = BSA, Minitab will again find the "best fitting" plane through the data points.

Do you see the difficulty in finding the best-fitting plane in this situation? The primary characteristic of the data — because the predictors are so highly correlated — is that the predictor values tend to fall in a straight line. That is, there are no anchors in two of the four corners. Therefore, the base over which the response plane is drawn is not very solid.

Let's put it this way — would you rather sit on a chair with four legs or one with just two legs? If the responses (y) varied somewhat from sample to sample, the position of the plane could change significantly. That is, the estimated coefficients, \(b_2\) and \(b_3\) could change substantially. The standard errors of the estimated coefficients, se(\(b_2\)) and se(\(b_3\)), will then be necessarily larger. Below is an animated view (no sound) of the problem that highly correlated predictors can cause with finding the best fitting plane.

When predictor variables are correlated, the marginal contribution of any one predictor variable in reducing the error sum of squares varies depending on which other variables are already in the model.

For example, regressing the response y = BP on the predictor \(x_2\) = Weight, we obtain SSR(\(x_2\)) = 505.472. But, regressing the response y = BP on the two predictors \(x_3\) = BSA and \(x_2\) = Weight (in that order), we obtain SSR(\(x_2\)|\(x_3\)) = 88.43. The first model suggests that weight reduces the error sum of squares substantially (by 505.472), but the second model suggests that weight doesn't reduce the error sum of squares all that much (by 88.43) once a person's body surface area is taken into account.

This should make intuitive sense. In essence, weight appears to explain some of the variations in blood pressure. However, because weight and body surface area are highly correlated, most of the variation in blood pressure explained by weight could just have easily been explained by body surface area. Therefore, once you take into account a person's body surface area, there's not much variation left in the blood pressure for weight to explain.

Incidentally, we see a similar phenomenon when we enter the predictors into the model in reverse order. That is, regressing the response y = BP on the predictor \(x_3\) = BSA, we obtain SSR(\(x_3\)) = 419.858. But, regressing the response y = BP on the two predictors \(x_2\) = Weight and \(x_3\) = BSA (in that order), we obtain SSR(\(x_3\)|\(x_2\)) = 2.814. The first model suggests that body surface area reduces the error sum of squares substantially (by 419.858), and the second model suggests that body surface area doesn't reduce the error sum of squares all that much (by only 2.814) once a person's weight is taken into account.

When predictor variables are correlated, hypothesis tests for \(\beta_k = 0\) may yield different conclusions depending on which predictor variables are in the model. (This effect is a direct consequence of the three previous effects.)

To illustrate this effect, let's once again quickly proceed through the output of a series of regression analyses, focusing primarily on the outcome of the t-tests for testing \(H_0 \colon \beta_{BSA} = 0 \) and \(H_0 \colon \beta_{Weight} = 0 \).

The regression of the response y = BP on the predictor \(x_3\)= BSA:

Coefficients

| Term | Coef | SE Coef | T-Value | P-Value | VIF |

|---|---|---|---|---|---|

| Constant | 45.18 | 9.39 | 4.81 | 0.000 | |

| BSA | 34.44 | 4.69 | 7.34 | 0.000 | 1.00 |

Regression Equation

\(\widehat{BP} = 45.18 + 34.44 BSA\)

indicates that the P-value associated with the t-test for testing\(H_0 \colon \beta_{BSA} = 0 \) is 0.000... < 0.01. There is sufficient evidence at the 0.05 level to conclude that blood pressure is significantly related to body surface area.

The regression of the response y = BP on the predictor \(x_2\) = Weight:

Coefficients

| Term | Coef | SE Coef | T-Value | P-Value | VIF |

|---|---|---|---|---|---|

| Constant | 2.21 | 8.66 | 0.25 | 0.802 | |

| Weight | 1.2009 | 0.0930 | 12.92 | 0.000 | 1.00 |

Regression Equation

\(\widehat{BP} = 2.21 + 1.2009 Weight\)

indicates that the P-value associated with the t-test for testing\(H_0 \colon \beta_{Weight} \) = 0 is 0.000... < 0.01. There is sufficient evidence at the 0.05 level to conclude that blood pressure is significantly related to weight.

And, the regression of the response y = BP on the predictors \(x_2\) = Weight and \(x_3\) = BSA:

Coefficients

| Term | Coef | SE Coef | T-Value | P-Value | VIF |

|---|---|---|---|---|---|

| Constant | 5.65 | 9.39 | 0.60 | 0.555 | |

| Weight | 1.039 | 0.193 | 5.39 | 0.000 | 4.28 |

| BSA | 5.83 | 6.06 | 0.96 | 0.350 | 4.28 |

Regression Equation

\(\widehat{BP} = 112.72 + 0.0240 Stress\)

indicates that the P-value associated with the t-test for testing\(H_0 \colon \beta_{Weight} \) = 0 is 0.000... < 0.01. There is sufficient evidence at the 0.05 level to conclude that, after taking into account body surface area, blood pressure is significantly related to weight.

The regression also indicates that the P-value associated with the t-test for testing\(H_0 \colon \beta_{BSA} = 0 \) is 0.350. There is insufficient evidence at the 0.05 level to conclude that blood pressure is significantly related to body surface area after taking into account weight. This might sound contradictory to what we claimed earlier, namely that blood pressure is indeed significantly related to body surface area. Again, what is going on here, once you take into account a person's weight, body surface area doesn't explain much of the remaining variability in blood pressure readings.

High multicollinearity among predictor variables does not prevent good, precise predictions of the response within the scope of the model.

Well, okay, it's not an effect, and it's not bad news either! It is good news! If the primary purpose of your regression analysis is to estimate a mean response \(\mu_Y\) or to predict a new response y, you don't have to worry much about multicollinearity.

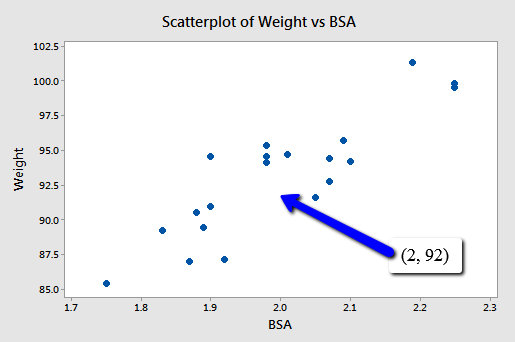

For example, suppose you are interested in predicting the blood pressure (y = BP) of an individual whose weight is 92 kg and whose body surface area is 2 square meters:

Because point (2, 92) falls within the scope of the model, you'll still get good, reliable predictions of the response y, regardless of the correlation that exists among the two predictors BSA and Weight. Geometrically, what is happening here is that the best-fitting plane through the responses may tilt from side to side from sample to sample (because of the correlation), but the center of the plane (in the scope of the model) won't change all that much.

The following output illustrates how the predictions don't change all that much from model to model:

| Weight | Fit | SE Fit | 95.0% CI | 95.0% PI |

|---|---|---|---|---|

| 92 | 112.7 | 0.402 | (111.85, 113.54) | (108.94, 116.44) |

| BSA | Fit | SE Fit | 95.0% CI | 95.0% PI |

|---|---|---|---|---|

| 2 | 114.1 | 0.624 | (112.76, 115.38) | (108.06, 120.08) |

| BSA | Weight | Fit | SE Fit | 95.0% CI | 95.0% PI |

|---|---|---|---|---|---|

| 2 | 92 | 112.8 | 0.448 | (111.93, 113.83) | (109.08, 116.68) |

The first output yields a predicted blood pressure of 112.7 mm Hg for a person whose weight is 92 kg based on the regression of blood pressure on weight. The second output yields a predicted blood pressure of 114.1 mm Hg for a person whose body surface area is 2 square meters based on the regression of blood pressure on body surface area. And the last output yields a predicted blood pressure of 112.8 mm Hg for a person whose body surface area is 2 square meters and whose weight is 92 kg based on the regression of blood pressure on body surface area and weight. Reviewing the confidence intervals and prediction intervals, you can see that they too yield similar results regardless of the model.

The bottom line

In short, what are the significant effects of multicollinearity on our use of a regression model to answer our research questions? In the presence of multicollinearity:

- It is okay to use an estimated regression model to predict y or estimate \(\mu_Y\) as long as you do so within the model's scope.

- We can no longer make much sense of the usual interpretation of a slope coefficient as the change in the mean response for each additional unit increases in the predictor \(x_k\) when all the other predictors are held constant.

The first point is, of course, addressed above. The second point is a direct consequence of the correlation among the predictors. It wouldn't make sense to talk about holding the values of correlated predictors constant since changing one predictor necessarily would change the values of the others.

Try it!

Correlated predictors

Effects of correlated predictor variables. This exercise reviews the impacts of multicollinearity on various aspects of regression analyses. The Allen Cognitive Level (ACL) test is designed to quantify one's cognitive abilities. David and Riley (1990) investigated the relationship of the ACL test to the level of psychopathology in a set of 69 patients from a general hospital psychiatry unit. The Allen Test data set contains the response y = ACL and three potential predictors:

- \(x_1\) = Vocab, scores on the vocabulary component of the Shipley Institute of Living Scale

- \(x_2\) = Abstract, scores on the abstraction component of the Shipley Institute of Living Scale

- \(x_3\) = SDMT, scores on the Symbol-Digit Modalities Test

-

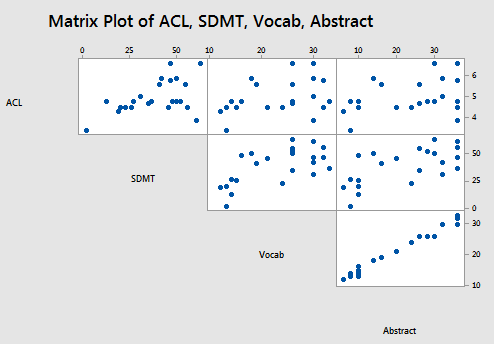

Determine the pairwise correlations among the predictor variables to get an idea of the extent to which the predictor variables are (pairwise) correlated. (See Minitab Help: Creating a correlation matrix). Also, create a matrix plot of the data to get a visual portrayal of the relationship between the response and predictor variables. (See Minitab Help: Creating a simple matrix of scatter plots)

There’s a moderate positive correlation between each pair of predictor variables, 0.698 for \(X_1\) and \(X_2\), 0.556 for \(X_1\) and \(X_3\), and 0.577 for \(X_2\) and \(X_3\)

-

Fit the simple linear regression model with y = ACL as the response and \(x_1\) = Vocab as the predictor. After fitting your model, request that Minitab predict the response y = ACL when \(x_1\) = 25. (See Minitab Help: Performing a multiple regression analysis — with options).

- What is the value of the estimated slope coefficient \(b_1\)?

- What is the value of the standard error of \(b_1\)?

- What is the regression sum of squares, SSR (\(x_1\)), when \(x_1\) is the only predictor in the model?

- What is the predicted response of y = ACL when \(x_1\) = 25?

Estimated slope coefficient \(b_1 = 0.0298\)

Standard error \(se(b_1) = 0.0141\).

\(SSR(X_1) = 2.691\).

Predicted response when \(x_1=25\) is 4.97025.

-

Now, fit the simple linear regression model with y = ACL as the response and \(x_3\) = SDMT as the predictor. After fitting your model, request that Minitab predict the response y = ACL when \(x_3\) = 40. (See Minitab Help: Performing a multiple regression analysis — with options).

- What is the value of the estimated slope coefficient \(b_3\)?

- What is the value of the standard error of \(b_3\)?

- What is the regression sum of squares, SSR (\(x_3\)), when \(x_3\) is the only predictor in the model?

- What is the predicted response of y = ACL when \(x_3\) = 40?

Estimated slope coefficient \(b_3 = 0.02807\).

Standard error \(se(b_3) = 0.00562\).

\(SSR(X_3) = 11.68\).

Predicted response when \(x_3=40\) is 4.87634.

-

Fit the multiple linear regression model with y = ACL as the response and \(x_3\) = SDMT as the first predictor and \(x_1\) = Vocab as the second predictor. After fitting your model, request that Minitab predict the response y = ACL when \(x_1\) = 25 and \(x_3\) = 40. (See Minitab Help: Performing a multiple regression analysis — with options).

- Now, what is the value of the estimated slope coefficient \(b_1\)? and \(b_3\)?

- What is the value of the standard error of \(b_1\)? and \(b_3\)?

- What is the sequential sum of squares, SSR (\(X_1|X_3\)?

- What is the predicted response of y = ACL when \(x_1\) = 25 and \(x_3\) = 40?

Estimated slope coefficient \(b_1 = -0.0068\) (but not remotely significant).

Estimated slope coefficient \(b_3 = 0.02979\).

Standard error \(se(b_1) = 0.0150\).

Standard error \(se(b_3) = 0.00680\).

\(SSR(X_1|X_3) = 0.0979\).

Predicted response when \(x_1=25\) and \(x_3=40\) is 4.86608.

-

Summarize the effects of multicollinearity on various aspects of the regression analyses.

The regression output concerning \(X_1=Vocab\) changes considerably depending on whether \(X_3=SDMT\) is included or not. However, since \(X_1\) is not remotely significant when \(X_3\) is included, it is probably best to exclude \(X_1\) from the model. The regression output concerning \(X_3\) changes little depending on whether \(X_1\) is included or not. Multicollinearity doesn’t have a particularly adverse effect in this example.

12.4 - Detecting Multicollinearity Using Variance Inflation Factors

12.4 - Detecting Multicollinearity Using Variance Inflation FactorsOkay, now that we know the effects that multicollinearity can have on our regression analyses and subsequent conclusions, how do we tell when it exists? That is, how can we tell if multicollinearity is present in our data?

Some of the common methods used for detecting multicollinearity include:

- The analysis exhibits the signs of multicollinearity — such as estimates of the coefficients varying from model to model.

- The t-tests for each of the individual slopes are non-significant (P > 0.05), but the overall F-test for testing all of the slopes is simultaneously 0 is significant (P < 0.05).

- The correlations among pairs of predictor variables are large.

Looking at correlations only among pairs of predictors, however, is limiting. It is possible that the pairwise correlations are small, and yet a linear dependence exists among three or even more variables, for example, \(X_3 = 2X_1 + 5X_2 + \text{error}\), say. That's why many regression analysts often rely on what is called variance inflation factors (VIF) to help detect multicollinearity.

What is a Variation Inflation Factor?

As the name suggests, a variance inflation factor (VIF) quantifies how much the variance is inflated. But what variance? Recall that we learned previously that the standard errors — and hence the variances — of the estimated coefficients are inflated when multicollinearity exists. So, the variance inflation factor for the estimated coefficient \(b_k\) — denoted \(VIF_k\) — is just the factor by which the variance is inflated.

Let's be a little more concrete. For the model in which \(x_k\) is the only predictor:

\(y_i=\beta_0+\beta_kx_{ik}+\epsilon_i\)

it can be shown that the variance of the estimated coefficient \(b_k\) is:

\(Var(b_k)_{min}=\dfrac{\sigma^2}{\sum_{i=1}^{n}(x_{ik}-\bar{x}_k)^2}\)

Note that we add the subscript "min" in order to denote that it is the smallest the variance can be. Don't worry about how this variance is derived — we just need to keep track of this baseline variance, so we can see how much the variance of \(b_k\) is inflated when we add correlated predictors to our regression model.

Let's consider such a model with correlated predictors:

\(y_i=\beta_0+\beta_1x_{i1}+ \cdots + \beta_kx_{ik}+\cdots +\beta_{p-1}x_{i, p-1} +\epsilon_i\)

Now, again, if some of the predictors are correlated with the predictor \(x_k\), then the variance of \(b_k\) is inflated. It can be shown that the variance of \(b_k\) is:

\(Var(b_k)=\dfrac{\sigma^2}{\sum_{i=1}^{n}(x_{ik}-\bar{x}_k)^2}\times \dfrac{1}{1-R_{k}^{2}}\)

where \(R_{k}^{2}\) is the \(R^{2} \text{-value}\) obtained by regressing the \(k^{th}\) predictor on the remaining predictors. Of course, the greater the linear dependence among the predictor \(x_k\) and the other predictors, the larger the \(R_{k}^{2}\) value. And, as the above formula suggests, the larger the \(R_{k}^{2}\) value, the larger the variance of \(b_k\).

How much larger? To answer this question, all we need to do is take the ratio of the two variances. By doing so, we obtain:

\(\dfrac{Var(b_k)}{Var(b_k)_{min}}=\dfrac{\left( \frac{\sigma^2}{\sum(x_{ik}-\bar{x}_k)^2}\times \dfrac{1}{1-R_{k}^{2}} \right)}{\left( \frac{\sigma^2}{\sum(x_{ik}-\bar{x}_k)^2} \right)}=\dfrac{1}{1-R_{k}^{2}}\)

The above quantity is deemed the variance inflation factor for the \(k^{th}\) predictor. That is:

\(VIF_k=\dfrac{1}{1-R_{k}^{2}}\)

where \(R_{k}^{2}\) is the \(R^{2} \text{-value}\) obtained by regressing the \(k^{th}\) predictor on the remaining predictors. Note that a variance inflation factor exists for each of the (p-1) predictors in a multiple regression model.

How do we interpret the variance inflation factors for a regression model? Again, it measures how much the variance of the estimated regression coefficient \(b_k\) is "inflated" by the existence of correlation among the predictor variables in the model. A VIF of 1 means that there is no correlation between the \(k^{th}\) predictor and the remaining predictor variables, and hence the variance of \(b_k\) is not inflated at all. The general rule of thumb is that VIFs exceeding 4 further warrant investigation, while VIFs exceeding 10 are signs of serious multicollinearity requiring correction.

Example 12-2

Let's return to the Blood Pressure data set in which researchers observed the following data on 20 individuals with high blood pressure:

- blood pressure (y = BP, in mm Hg)

- age (\(x_{1} \) = Age, in years)

- weight (\(x_2\) = Weight, in kg)

- body surface area (\(x_{3} \) = BSA, in sq m)

- duration of hypertension (\(x_{4} \) = Dur, in years)

- basal pulse (\(x_{5} \) = Pulse, in beats per minute)

- stress index (\(x_{6} \) = Stress)

As you may recall, the matrix plot of BP, Age, Weight, and BSA:

the matrix plot of BP, Dur, Pulse, and Stress:

and the correlation matrix:

Correlation: BP, Age, Weight, BSA, Dur, Pulse, Stress

| BP | Age | Weight | BSA | Due | Pulse | |

|---|---|---|---|---|---|---|

| Age | 0.659 | |||||

| Weight | 0.950 | 0.407 | ||||

| BSA | 0.866 | 0.378 | 0.875 | |||

| Dur | 0.293 | 0.344 | 0.201 | 0.131 | ||

| Pulse | 0.721 | 0.619 | 0.659 | 0.465 | 0.402 | |

| Stress | 0.164 | 0.368 | 0.034 | 0.018 | 0.312 | 0.506 |

suggest that some of the predictors are at least moderately marginally correlated. For example, body surface area (BSA) and weight are strongly correlated (r = 0.875), and weight and pulse are fairly strongly correlated (r = 0.659). On the other hand, none of the pairwise correlations among age, weight, duration, and stress are particularly strong (r < 0.40 in each case).

Regressing y = BP on all six of the predictors, we obtain:

Analysis of Variance

| Source | DF | Seq SS | Seq MS | F-Value | P-Value |

|---|---|---|---|---|---|

| Regression | 6 | 557.844 | 92.974 | 56.64 | 0.000 |

| Age | 1 | 243.266 | 243.266 | 1466.91 | 0.000 |

| Weight | 1 | 311.910 | 311.910 | 1880.84 | 0.000 |

| BSA | 1 | 1.768 | 1.768 | 10.66 | 0.006 |

| Dur | 1 | 0.335 | 0.335 | 2.02 | 0.179 |

| Pulse | 1 | 0.123 | 0.123 | 0.74 | 0.405 |

| Stress | 1 | 0.442 | 0.442 | 2.67 | 0.126 |

| Error | 13 | 2.156 | 0.166 | ||

| Total | 19 | 560.00 |

Modal Summary

| S | R-sq | R-sq(adj) | R-sq(pred) |

|---|---|---|---|

| 0.407229 | 99.62% | 99.44% | 99.08% |

Coefficients

| Term | Coef | SE Coef | T-Value | P-Value | VIF |

|---|---|---|---|---|---|

| Constant | -12.87 | 2.56 | -5.03 | 0.000 | |

| Age | 0.7033 | 0.0496 | 14.18 | 0.000 | 1.76 |

| Weight | 0.9699 | 0.0631 | 15.37 | 0.000 | 8.42 |

| BSA | 3.78 | 1.58 | 2.39 | 0.033 | 5.33 |

| Dur | 0.0684 | 0.0484 | 1.41 | 0.182 | 1.24 |

| Pulse | -0.0845 | 0.0516 | -1.64 | 0.126 | 4.41 |

| Stress | 0.00557 | 0.00341 | 1.63 | 0.126 | 1.83 |

Minitab reports the variance inflation factors by default. As you can see, three of the variance inflation factors — 8.42, 5.33, and 4.41 — are fairly large. The VIF for the predictor Weight, for example, tells us that the variance of the estimated coefficient of Weight is inflated by a factor of 8.42 because Weight is highly correlated with at least one of the other predictors in the model.

For the sake of understanding, let's verify the calculation of the VIF for the predictor Weight. Regressing the predictor \(x_2\) = Weight on the remaining five predictors:

Analysis of Variance

| Source | DF | Seq SS | Seq MS | F-Value | P-Value |

|---|---|---|---|---|---|

| Regression | 5 | 308.839 | 61.768 | 20.77 | 0.000 |

| Age | 1 | 15.04 | 15.04 | 0.50 | 0.490 |

| BSA | 1 | 212.734 | 212.734 | 71.53 | 0.000 |

| Dur | 1 | 1.442 | 1.442 | 0.48 | 0.498 |

| Pulse | 1 | 27.311 | 27.311 | 9.18 | 0.009 |

| Stress | 1 | 41.639 | 2.974 | ||

| Error | 14 | 41.639 | 2.974 | ||

| Total | 19 | 350.478 |

Modal Summary

| S | R-sq | R-sq(adj) | R-sq(pred) |

|---|---|---|---|

| 1.72459 | 88.12% | 83.88% | 74.77% |

Coefficients

| Term | Coef | SE Coef | T-Value | P-Value | VIF |

|---|---|---|---|---|---|

| Constant | 19.67 | 9.46 | 2.08 | 0.057 | |

| Age | -0.145 | 0.206 | -0.70 | 0.495 | 1.70 |

| BSA | 21.42 | 3.46 | 6.18 | 0.000 | 1.43 |

| Dur | 0.009 | 0.205 | 0.04 | 0.967 | 1.24 |

| Pulse | 0.558 | 0.160 | 3.49 | 0.004 | 2.36 |

| Stress | -0.0230 | 0.0131 | -1.76 | 0.101 | 1.50 |

Minitab reports that \(R_{Weight}^{2}\) is 88.1% or, in decimal form, 0.881. Therefore, the variance inflation factor for the estimated coefficient Weight is by definition:

\(VIF_{Weight}=\dfrac{Var(b_{Weight})}{Var(b_{Weight})_{min}}=\dfrac{1}{1-R_{Weight}^{2}}=\dfrac{1}{1-0.881}=8.4\)

Again, this variance inflation factor tells us that the variance of the weight coefficient is inflated by a factor of 8.4 because Weight is highly correlated with at least one of the other predictors in the model.

So, what to do? One solution to dealing with multicollinearity is to remove some of the violating predictors from the model. If we review the pairwise correlations again:

Correlation: BP, Age, Weight, BSA, Dur, Pulse, Stress

| BP | Age | Weight | BSA | Dur | Pulse | |

|---|---|---|---|---|---|---|

| Age | 0.659 | |||||

| Weight | 0.950 | 0.407 | ||||

| BSA | 0.866 | 0.378 | 0.875 | |||

| Dur | 0.293 | 0.344 | 0.201 | 0.131 | ||

| Pulse | 0.721 | 0.619 | 0.659 | 0.465 | 0.402 | |

| Stress | 0.164 | 0.368 | 0.034 | 0.018 | 0.312 | 0.506 |

we see that the predictors Weight and BSA are highly correlated (r = 0.875). We can choose to remove either predictor from the model. The decision of which one to remove is often a scientific or practical one. For example, if the researchers here are interested in using their final model to predict the blood pressure of future individuals, their choice should be clear. Which of the two measurements — body surface area or weight — do you think would be easier to obtain?! If weight is an easier measurement to obtain than body surface area, then the researchers would be well-advised to remove BSA from the model and leave Weight in the model.

Reviewing again the above pairwise correlations, we see that the predictor Pulse also appears to exhibit fairly strong marginal correlations with several of the predictors, including Age (r = 0.619), Weight (r = 0.659), and Stress (r = 0.506). Therefore, the researchers could also consider removing the predictor Pulse from the model.

Let's see how the researchers would do. Regressing the response y = BP on the four remaining predictors age, weight, duration, and stress, we obtain:

Analysis of Variance

| Source | DF | Seq SS | Seq MS | F-Value | P-Value |

|---|---|---|---|---|---|

| Regression | 4 | 555.455 | 138.864 | 458.28 | 0.000 |

| Age | 1 | 243.266 | 243.266 | 802.84 | 0.000 |

| Weight | 1 | 311.910 | 311.910 | 1029.38 | 0.000 |

| Dur | 1 | 0.178 | 0.178 | 0.59 | 0.455 |

| Stress | 1 | 0.100 | 0.100 | 0.33 | 0.573 |

| Error | 15 | 4.545 | 0.303 | ||

| Total | 19 | 560.000 |

Modal Summary

| S | R-sq | R-sq(adj) | R-sq(pred) |

|---|---|---|---|

| 0.550462 | 99.19% | 98.97% | 98.59% |

Coefficients

| Term | Coef | SE Coef | T-Value | P-Value | VIF |

|---|---|---|---|---|---|

| Constant | -15.87 | 3.20 | -4.97 | 0.000 | |

| Age | 0.6837 | 0.0612 | 11.17 | 0.000 | 1.47 |

| Weight | 1.0341 | 0.0327 | 31.65 | 0.000 | 1.23 |

| Dur | 0.0399 | 0.0645 | 0.62 | 0.545 | 1.20 |

| Stress | 0.00218 | 0.00379 | 0.58 | 0.573 | 1.24 |

Aha — the remaining variance inflation factors are quite satisfactory! That is, it appears as if hardly any variance in inflation remains. Incidentally, in terms of the adjusted \(R^{2} \text{-value}\), we did not seem to lose much by dropping the two predictors BSA and Pulse from our model. The adjusted \(R^{2} \text{-value}\) decreased to only 98.97% from the original adjusted \(R^{2} \text{-value}\) of 99.44%.

Try it!

Variance inflation factors

Detecting multicollinearity using \(VIF_k\).

We’ll use the Cement data set to explore variance inflation factors. The response y measures the heat evolved in calories during the hardening of cement on a per gram basis. The four predictors are the percentages of four ingredients: tricalcium aluminate (\(x_{1} \)), tricalcium silicate (\(x_2\)), tetracalcium alumino ferrite (\(x_{3} \)), and dicalcium silicate (\(x_{4} \)). It’s not hard to imagine that such predictors would be correlated in some way.

-

Use the Stat >> Basic Statistics >> Correlation ... command in Minitab to get an idea of the extent to which the predictor variables are (pairwise) correlated. Also, use the Graph >> Matrix Plot ... command in Minitab to get a visual portrayal of the (pairwise) relationships among the response and predictor variables.

There’s a strong negative correlation between \(X_2\) and \(X_4\) (-0.973) and between \(X_1\) and \(X_3\) (-0.824). The remaining pairwise correlations are all quite low.

-

Regress the fourth predictor, \(x_4\), on the remaining three predictors, \(x_{1} \), \(x_2\), and \(x_{3} \). That is, fit the linear regression model treating \(x_4\) as the response and \(x_{1} \), \(x_2\), and \(x_{3} \) as the predictors. What is the \({R^2}_4\) value? (Note that Minitab rounds the \(R^{2} \) value it reports to three decimal places. For the purposes of the next question, you’ll want a more accurate \(R^{2} \) value. Calculate the \(R^{2} \) value SSR using its definition, \(\dfrac{SSR}{SSTO}\). Use your calculated value, carried out to 5 decimal places, in answering the next question.)

\(R^2 = SSR/SSTO = 3350.10/3362.00 = 0.99646\)

-

Using your calculated \(R^{2} \) value carried out to 5 decimal places, determine by what factor the variance of \(b_4\) is inflated. That is, what is \(VIF_4\)?

\(VIF_4 = 1/(1-0.99646) - 282.5\) -

Minitab will actually calculate the variance inflation factors for you. Fit the multiple linear regression model with y as the response and \(x_{1} \),\(x_2\),\(x_{3} \) and \(x_{4} \) as the predictors. The \(VIF_k\) will be reported as a column of the estimated coefficients table. Is the \(VIF_4\) that you calculated consistent with what Minitab reports?

\(VIF_4 = 282.51\)

-

Note that all of the \(VIF_k\) are larger than 10, suggesting that a high degree of multicollinearity is present. (It should seem logical that multicollinearity is present here, given that the predictors are measuring the percentage of ingredients in the cement.) Do you notice anything odd about the results of the t-tests for testing the individual \(H_0 \colon \beta_i = 0\) and the result of the overall F-test for testing \(H_0 \colon \beta_1 = \beta_2 = \beta_3 = \beta_4 = 0\)? Why does this happen?

The individual t-tests indicate that none of the predictors are significant given the presence of all the others, but the overall F-test indicates that at least one of the predictors is useful. This is a result of the high degree of multicollinearity between all the predictors.

-

We learned that one way of reducing data-based multicollinearity is to remove some of the violating predictors from the model. Fit the linear regression model with y as the response and \(X_1\) and \(X_2\) as the only predictors. Are the variance inflation factors for this model acceptable?

The VIFs for the model with just \(X_1\) and \(X_2\) are just 1.06 and so are acceptable.

12.5 - Reducing Data-based Multicollinearity

12.5 - Reducing Data-based MulticollinearityRecall that data-based multicollinearity is multicollinearity that results from a poorly designed experiment, reliance on purely observational data, or the inability to manipulate the system on which the data are collected. We now know all the bad things that can happen in the presence of multicollinearity. And, we've learned how to detect multicollinearity. Now, let's learn how to reduce multicollinearity once we've discovered that it exists.

As the example in the previous section illustrated, one way of reducing data-based multicollinearity is to remove one or more of the violating predictors from the regression model. Another way is to collect additional data under different experimental or observational conditions. We'll investigate this alternative method in this section.

Before we do, let's quickly remind ourselves why we should care about reducing multicollinearity. It all comes down to drawing conclusions about the population slope parameters. If the variances of the estimated coefficients are inflated by multicollinearity, then our confidence intervals for the slope parameters are wider and therefore less useful. Eliminating or even reducing the multicollinearity, therefore, yields narrower, more useful confidence intervals for the slopes.

Example 12-3

Researchers running the Allen Cognitive Level (ACL) Study were interested in the relationship of ACL test scores to the level of psychopathology. They, therefore, collected the following data on a set of 69 patients in a hospital psychiatry unit:

- Response \(y\) = ACL test score

- Predictor \(x_1\) = vocabulary (Vocab) score on the Shipley Institute of Living Scale

- Predictor \(x_2\) = abstraction (Abstract) score on the Shipley Institute of Living Scale

- Predictor \(x_3\) = score on the Symbol-Digit Modalities Test (SDMT)

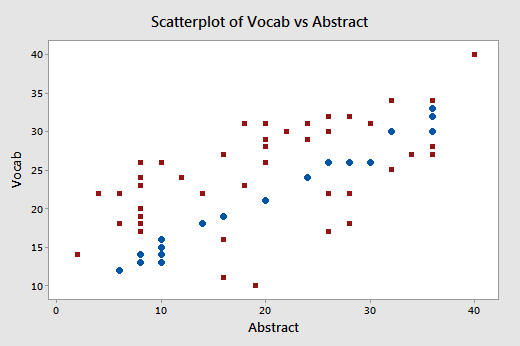

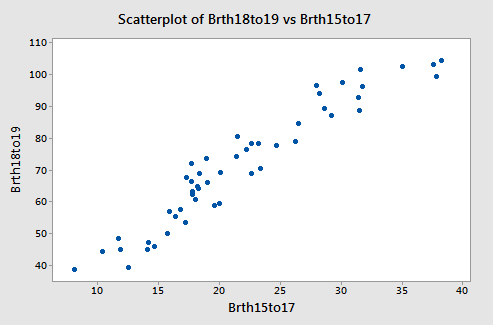

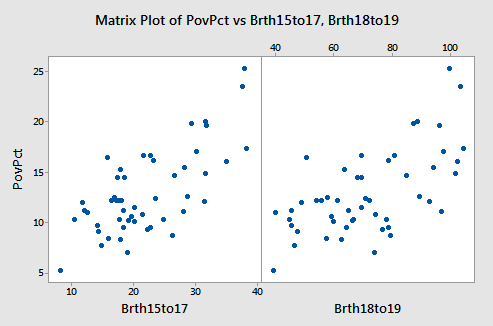

For the sake of this example, I sampled 23 patients from the original data set in such a way as to ensure that a very high correlation exists between the two predictors Vocab and Abstract. A matrix plot of the resulting Allen Test n=23 data set:

suggests that, indeed, a strong correlation exists between Vocab and Abstract. The correlations among the remaining pairs of predictors do not appear to be particularly strong.

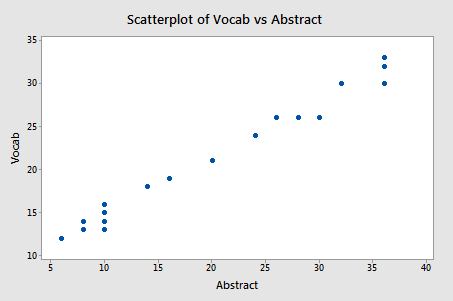

Focusing only on the relationship between the two predictors Vocab and Abstract:

Pearson correlation of Vocab and Abstraction = 0.990

we do indeed see that a very strong relationship (r = 0.99) exists between the two predictors.

Let's see what havoc this high correlation wreaks on our regression analysis! Regressing the response y = ACL on the predictors SDMT, Vocab, and Abstract, we obtain:

Analysis of Variance

| Source | DF | Seq SS | Seq MS | F-Value | P-Value |

|---|---|---|---|---|---|

| Regression | 3 | 3.6854 | 1.22847 | 2.28 | 0.112 |

| SDMT | 1 | 3.6346 | 3.63465 | 6.74 | 0.018 |

| Vocab | 1 | 0.0406 | 0.04064 | 0.08 | 0.787 |

| Abstract | 1 | 0.0101 | 0.01014 | 0.02 | 0.892 |

| Error | 19 | 10.2476 | 0.53935 | ||

| Total | 22 | 13.9330 |

Modal Summary

| S | R-sq | R-sq(adj) | R-sq(pred) |

|---|---|---|---|

| 0.734404 | 26.45% | 14.84% | 0.00% |

Coefficients

| Term | Coef | SE Coef | T-Value | P-Value | VIF |

|---|---|---|---|---|---|

| Constant | 3.75 | 1.34 | 2.79 | 0.012 | |

| SDMT | 0.0233 | 0.0127 | 1.83 | 0.083 | 1.73 |

| Vocab | 0.028 | 0.152 | 0.19 | 0.855 | 49.29 |

| Abstract | -0.014 | 0.101 | -0.14 | 0.892 | 50.60 |

Yikes — the variance inflation factors for Vocab and Abstract are very large — 49.3 and 50.6, respectively!

What should we do about this? We could opt to remove one of the two predictors from the model. Alternatively, if we have a good scientific reason for needing both of the predictors to remain in the model, we could go out and collect more data. Let's try this second approach here.

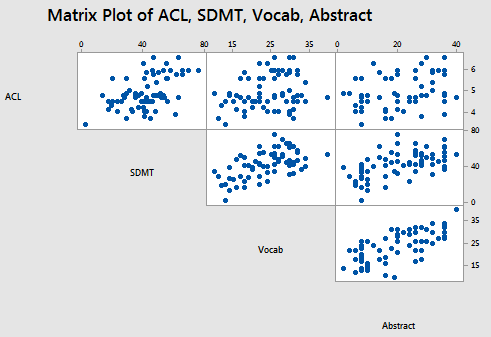

For the sake of this example, let's imagine that we went out and collected more data, and in so doing, obtained the actual data collected on all 69 patients enrolled in the Allen Cognitive Level (ACL) Study. A matrix plot of the resulting Allen Test data set:

Pearson correlation of Vocab and Abstraction = 0.698

suggests that a correlation still exists between Vocab and Abstract — it is just a weaker correlation now.

Again, focusing only on the relationship between the two predictors Vocab and Abstract:

we do indeed see that the relationship between Abstract and Vocab is now much weaker (r = 0.698) than before. The round data points in blue represent the 23 data points in the original data set, while the square red data points represent the 46 newly collected data points. As you can see from the plot, collecting the additional data has expanded the "base" over which the "best fitting plane" will sit. The existence of this larger base allows less room for the plane to tilt from sample to sample, and thereby reduces the variance of the estimated slope coefficients.

Let's see if the addition of the new data helps to reduce the multicollinearity here. Regressing the response y = ACL on the predictors SDMT, Vocab, and Abstract:

Analysis of Variance

| Source | DF | Seq SS | Seq MS | F-Value | P-Value |

|---|---|---|---|---|---|

| Regression | 3 | 12.3009 | 4.1003 | 8.67 | 0.000 |

| SDMT | 1 | 11.6799 | 11.6799 | 24.69 | 0.000 |

| Vocab | 1 | 0.0979 | 0.0979 | 0.21 | 0.651 |

| Abstract | 1 | 0.5230 | 0.5230 | 1.11 | 0.297 |

| Error | 65 | 30.7487 | 0.4731 | ||

| Total | 68 | 43.0496 |

Modal Summary

| S | R-sq | R-sq(adj) | R-sq(pred) |

|---|---|---|---|

| 0.687791 | 28.57% | 25.28% | 19.93% |

Coefficients

| Term | Coef | SE Coef | T-Value | P-Value | VIF |

|---|---|---|---|---|---|

| Constant | 3.946 | 0.338 | 11.67 | 0.000 | |

| SDMT | 0.02740 | 0.00717 | 3.82 | 0.000 | 1.61 |

| Vocab | -0.0174 | 0.0181 | -0.96 | 0.339 | 2.09 |

| Abstract | 0.0122 | 0.0116 | 1.05 | 0.297 | 2.17 |

we find that the variance inflation factors are reduced significantly and satisfactorily. The researchers could now feel comfortable proceeding with drawing conclusions about the effects of the vocabulary and abstraction scores on the level of psychopathology.

Note!

One thing to keep in mind... In order to reduce the multicollinearity that exists, it is not sufficient to go out and just collect any ol' data. The data have to be collected in such a way as to ensure that the correlations among the violating predictors are actually reduced. That is, collecting more of the same kind of data won't help to reduce the multicollinearity. The data have to be collected to ensure that the "base" is sufficiently enlarged. Doing so, of course, changes the characteristics of the studied population, and therefore should be reported accordingly.

12.6 - Reducing Structural Multicollinearity

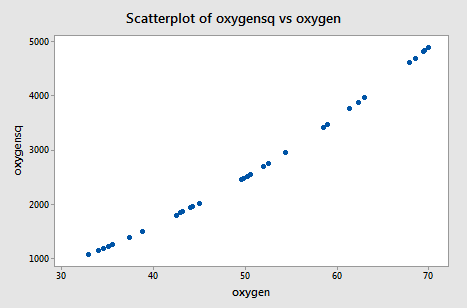

12.6 - Reducing Structural MulticollinearityRecall that structural multicollinearity is multicollinearity that is a mathematical artifact caused by creating new predictors from other predictors, such as, creating the predictor \(x^{2}\) from the predictor x. Because of this, at the same time that we learn here about reducing structural multicollinearity, we learn more about polynomial regression models.

Example 12-4

What is the impact of exercise on the human immune system? To answer this very global and general research question, one has to first quantify what "exercise" means and what "immunity" means. Of course, there are several ways of doing so. For example, we might quantify one's level of exercise by measuring his or her "maximal oxygen uptake." And, we might quantify the quality of one's immune system by measuring the amount of "immunoglobin in his or her blood." In doing so, the general research question is translated into a much more specific research question: "How is the amount of immunoglobin in the blood (y) related to maximal oxygen uptake (x)?"

Because some researchers were interested in answering the above research question, they collected the following Exercise and Immunity data set on a sample of 30 individuals:

- \(y_i\) = amount of immunoglobin in the blood (mg) of individual i

- \(x_i\) = maximal oxygen uptake (ml/kg) of individual i

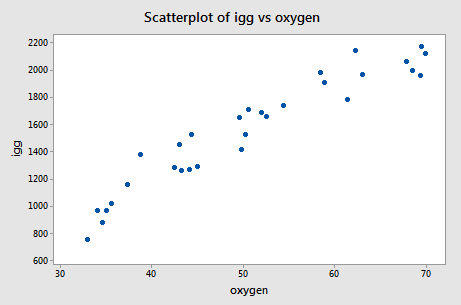

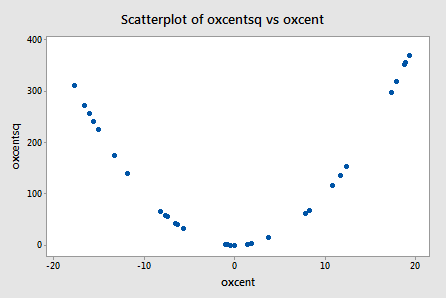

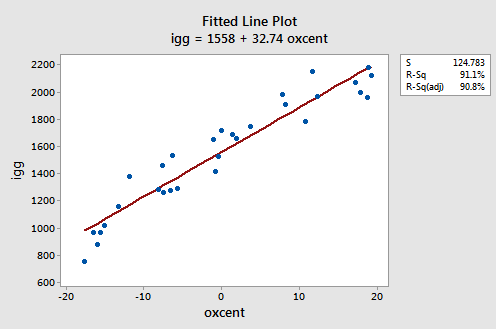

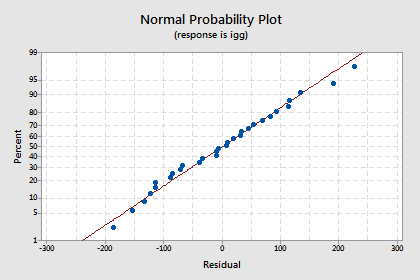

The scatter plot of the resulting data:

suggests that there might be some curvature to the trend in the data. To allow for the apparent curvature — rather than formulating a linear regression function — the researchers formulated the following quadratic (polynomial) regression function:

\(y_i=\beta_0+\beta_1x_i+\beta_{11}x_{i}^{2}+\epsilon_i\)

where:

- \(y_i\) = amount of immunoglobin in the blood (mg) of individual i

- \(x_i\) = maximal oxygen uptake (ml/kg) of individual i

As usual, the error terms \(\epsilon_i\) are assumed to be independent, normally distributed, and have equal variance \(\sigma^{2}\).

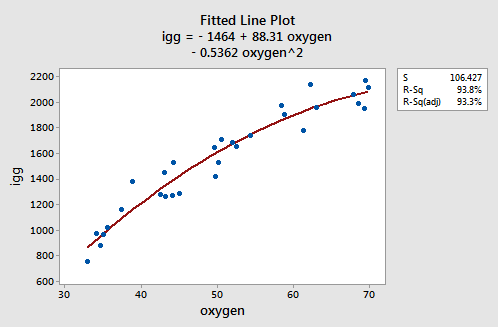

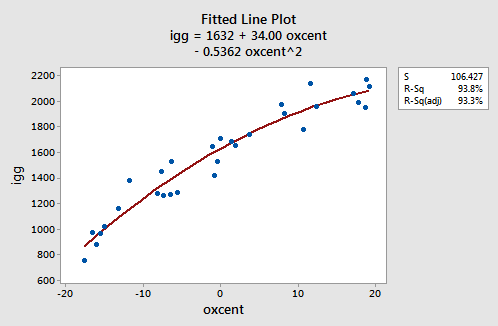

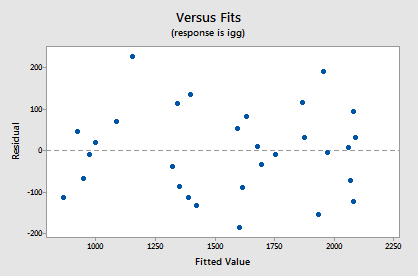

As the following plot of the estimated quadratic function suggests:

the formulated regression function appears to describe the trend in the data well. The adjusted \(R^{2} \text{-value}\) is 93.3%.

But, now what do the estimated coefficients tell us? The interpretation of the regression coefficients is mostly geometric in nature. That is, the coefficients tell us a little bit about what the picture looks like:

- If 0 is a possible x value, then \(b_0\) is the predicted response when x = 0. Otherwise, the interpretation of \(b_0\) is meaningless.

- The estimated coefficient \(b_1\) is the estimated slope of the tangent line at x = 0.

- The estimated coefficient \(b_2\) indicates the up/down direction of the curve. That is:

- if \(b_2\) < 0, then the curve is concave down

- if \(b_2\) > 0, then the curve is concave up