Lesson 10: Model Building

Lesson 10: Model BuildingOverview

For all of the regression analyses that we performed so far in this course, it has been obvious which of the major predictors we should include in our regression model. Unfortunately, this is typically not the case. More often than not, a researcher has a large set of candidate predictor variables from which he tries to identify the most appropriate predictors to include in his regression model.

Of course, the larger the number of candidate predictor variables, the larger the number of possible regression models. For example, if a researcher has (only) 10 candidate predictor variables, he has \(2^{10} = 1024\) possible regression models from which to choose. Clearly, some assistance would be needed in evaluating all of the possible regression models. That's where the two variable selection methods — stepwise regression and best subsets regression — come in handy.

In this lesson, we'll learn about the above two variable selection methods. Our goal throughout will be to choose a small subset of predictors from the larger set of candidate predictors so that the resulting regression model is simple yet useful. That is, as always, our resulting regression model should:

- provide a good summary of the trend in the response, and/or

- provide good predictions of the response, and/or

- provide good estimates of the slope coefficients.

Objectives

- Understand the impact of the four different models concerning their "correctness" — correctly specified, underspecified, overspecified, and correct but with extraneous predictors.

- To ensure that you understand the general idea behind stepwise regression, be able to conduct stepwise regression "by hand."

- Know the limitations of stepwise regression.

- Know the general idea behind best subsets regression.

- Know how to choose an optimal model based on the \(R^{2}\) value, the adjusted \(R^{2}\) value, MSE and the \(C_p\) criterion.

- Know the limitations of best subsets regression.

- Know the seven steps of an excellent model-building strategy.

Lesson 10 Code Files

Below is a zip file that contains all the data sets used in this lesson:

- bloodpress.txt

- cement.txt

- iqsize.txt

- martian.txt

- peru.txt

- Physical.txt

10.1 - What if the Regression Equation Contains "Wrong" Predictors?

10.1 - What if the Regression Equation Contains "Wrong" Predictors?Before we can go off and learn about the two variable selection methods, we first need to understand the consequences of a regression equation containing the "wrong" or "inappropriate" variables. Let's do that now!

There are four possible outcomes when formulating a regression model for a set of data:

- The regression model is "correctly specified."

- The regression model is "underspecified."

- The regression model contains one or more "extraneous variables."

- The regression model is "overspecified."

Let's consider the consequence of each of these outcomes on the regression model. Before we do, we need to take a brief aside to learn what it means for an estimate to have the good characteristic of being unbiased.

Unbiased estimates

An estimate is unbiased if the average of the values of the statistics determined from all possible random samples equals the parameter you're trying to estimate. That is, if you take a random sample from a population and calculate the mean of the sample, then take another random sample and calculate its mean, and take another random sample and calculate its mean, and so on — the average of the means from all of the samples that you have taken should equal the true population mean. If that happens, the sample mean is considered an unbiased estimate of the population mean \(\mu\).

An estimated regression coefficient \(b_i\) is an unbiased estimate of the population slope \(\beta_i\) if the mean of all of the possible estimates \(b_i\) equals \(\beta_i\). And, the predicted response \(\hat{y}_i\) is an unbiased estimate of \(\mu_Y\) if the mean of all of the possible predicted responses \(\hat{y}_i\) equals \(\mu_Y\).

So far, this has probably sounded pretty technical. Here's an easy way to think about it. If you hop on a scale every morning, you can't expect that the scale will be perfectly accurate every day —some days it might run a little high, and some days a little low. That you can probably live with. You certainly don't want the scale, however, to consistently report that you weigh five pounds more than you actually do — your scale would be biased upward. Nor do you want it to consistently report that you weigh five pounds less than you actually do — or..., scratch that, maybe you do — in this case, your scale would be biased downward. What you do want is for the scale to be correct on average — in this case, your scale would be unbiased. And, that's what we want!

The four possible outcomes

Outcome 1

A regression model is correctly specified if the regression equation contains all of the relevant predictors, including any necessary transformations and interaction terms. That is, there are no missing, redundant, or extraneous predictors in the model. Of course, this is the best possible outcome and the one we hope to achieve!

The good thing is that a correctly specified regression model yields unbiased regression coefficients and unbiased predictions of the response. And, the mean squared error (MSE) — which appears in some form in every hypothesis test we conduct or confidence interval we calculate — is an unbiased estimate of the error variance \(\sigma^{2}\).

Outcome 2

A regression model is underspecified if the regression equation is missing one or more important predictor variables. This situation is perhaps the worst-case scenario because an underspecified model yields biased regression coefficients and biased predictions of the response. That is, in using the model, we would consistently underestimate or overestimate the population slopes and the population means. To make already bad matters even worse, the mean square error MSE tends to overestimate \(\sigma^{2}\), thereby yielding wider confidence intervals than it should.

Let's take a look at an example of a model that is likely underspecified. It involves an analysis of the height and weight of Martians. The Martian dataset — which was obviously contrived just for the sake of this example — contains the weights (in g), heights (in cm), and amount of daily water consumption (0, 10, or 20 cups per day) of 12 Martians.

If we regress \(y = \text{ weight}\) on the predictors \(x_1 = \text{ height}\) and \(x_2 = \text{ water}\), we obtain the following estimated regression equation:

Regression Equation

\(\widehat{weight} = -1.220 + 0.28344 height + 0.11121 water\)

and the following estimate of the error variance \(\sigma^{2}\):

MSE = 0.017

If we regress \(y = \text{ weight}\) on only the one predictor \(x_1 = \text{ height}\), we obtain the following estimated regression equation:

Regression Equation

\(\widehat{weight} = -4.14 + 0.3889 height\)

and the following estimate of the error variance \(\sigma^{2}\):

MSE = 0.653

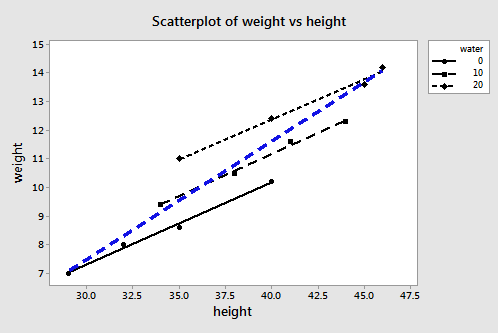

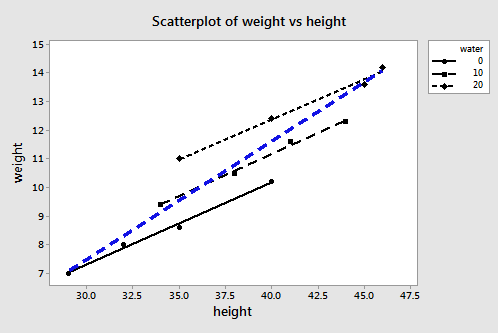

Plotting the two estimated regression equations, we obtain:

The three black lines represent the estimated regression equation when the amount of water consumption is taken into account — the first line for 0 cups per day, the second line for 10 cups per day, and the third line for 20 cups per day. The blue dashed line represents the estimated regression equation when we leave the amount of water consumed out of the regression model.

The second model — in which water is left out of the model — is likely an underspecified model. Now, what is the effect of leaving water consumption out of the regression model?

- The slope of the line (0.3889) obtained when height is the only predictor variable is much steeper than the slopes of the three parallel lines (0.28344) obtained by including the effect of water consumption, as well as height, on martian weight. That is, the slope likely overestimates the actual slope.

- The intercept of the line (-4.14) obtained when height is the only predictor variable is smaller than the intercepts of the three parallel lines (-1.220, -1.220 + 0.11121(10) = -0.108, and -1.220 + 0.11121(20) = 1.004) obtained by including the effect of water consumption, as well as height, on martian weight. That is, the intercept likely underestimates the actual intercepts.

- The estimate of the error variance \(\sigma^{2}\) (MSE = 0.653) obtained when height is the only predictor variable is about 38 times larger than the estimate obtained (MSE = 0.017) by including the effect of water consumption, as well as height, on martian weight. That is, MSE likely overestimates the actual error variance \(\sigma^{2}\).

This contrived example is nice in that it allows us to visualize how an underspecified model can yield biased estimates of important regression parameters. Unfortunately, in reality, we don't know the correct model. After all, if we did we wouldn't need to conduct the regression analysis! Because we don't know the correct form of the regression model, we have no way of knowing the exact nature of the biases.

Outcome 3

Another possible outcome is that the regression model contains one or more extraneous variables. That is, the regression equation contains extraneous variables that are neither related to the response nor to any of the other predictors. It is as if we went overboard and included extra predictors in the model that we didn't need!

The good news is that such a model does yield unbiased regression coefficients, unbiased predictions of the response, and an unbiased SSE. The bad news is that — because we have more parameters in our model — MSE has fewer degrees of freedom associated with it. When this happens, our confidence intervals tend to be wider and our hypothesis tests tend to have lower power. It's not the worst thing that can happen, but it's not too great either. By including extraneous variables, we've also made our model more complicated and hard to understand than necessary.

Outcome 4

If the regression model is overspecified, then the regression equation contains one or more redundant predictor variables. That is, part of the model is correct, but we have gone overboard by adding predictors that are redundant. Redundant predictors lead to problems such as inflated standard errors for the regression coefficients. (Such problems are also associated with multicollinearity, which we'll cover in Lesson 12).

Regression models that are overspecified yield unbiased regression coefficients, unbiased predictions of the response, and an unbiased SSE. Such a regression model can be used, with caution, for prediction of the response, but should not be used to describe the effect of a predictor on the response. Also, as with including extraneous variables, we've also made our model more complicated and hard to understand than necessary.

A goal and a strategy

Okay, so now we know the consequences of having the "wrong" variables in our regression model. The challenge, of course, is that we can never really be sure which variables are "wrong" and which variables are "right." All we can do is use the statistical methods at our fingertips and our knowledge of the situation to help build our regression model.

Here's my recommended approach to building a good and useful model:

- Know your goal, and know your research question. Knowing how you plan to use your regression model can assist greatly in the model-building stage. Do you have a few particular predictors of interest? If so, you should make sure your final model includes them. Are you just interested in predicting the response? If so, then multicollinearity should worry you less. Are you interested in the effects that specific predictors have on the response? If so, multicollinearity should be a serious concern. Are you just interested in a summary description? What is it that you are trying to accomplish?

- Identify all of the possible candidate predictors. This may sound easier than it actually is to accomplish. Don't worry about interactions or the appropriate functional form — such as \(x^{2}\) and log x — just yet. Just make sure you identify all the possible important predictors. If you don't consider them, there is no chance for them to appear in your final model.

- Use variable selection procedures to find the middle ground between an underspecified model and a model with extraneous or redundant variables. Two possible variable selection procedures are stepwise regression and best subsets regression. We'll learn about both methods here in this lesson.

- Fine-tune the model to get a correctly specified model. If necessary, change the functional form of the predictors and/or add interactions. Check the behavior of the residuals. If the residuals suggest problems with the model, try a different functional form of the predictors or remove some of the interaction terms. Iterate back and forth between formulating different regression models and checking the behavior of the residuals until you are satisfied with the model.

10.2 - Stepwise Regression

10.2 - Stepwise RegressionIn this section, we learn about the stepwise regression procedure. While we will soon learn the finer details, the general idea behind the stepwise regression procedure is that we build our regression model from a set of candidate predictor variables by entering and removing predictors — in a stepwise manner — into our model until there is no justifiable reason to enter or remove any more.

Our hope is, of course, that we end up with a reasonable and useful regression model. There is one sure way of ending up with a model that is certain to be underspecified — and that's if the set of candidate predictor variables doesn't include all of the variables that actually predict the response. This leads us to a fundamental rule of the stepwise regression procedure — the list of candidate predictor variables must include all of the variables that actually predict the response. Otherwise, we are sure to end up with a regression model that is underspecified and therefore misleading.

Example 10-1: Cement Data

Let's learn how the stepwise regression procedure works by considering a data set that concerns the hardening of cement. Sounds interesting, eh? In particular, the researchers were interested in learning how the composition of the cement affected the heat that evolved during the hardening of the cement. Therefore, they measured and recorded the following data (Cement dataset) on 13 batches of cement:

- Response \(y \colon \) heat evolved in calories during the hardening of cement on a per gram basis

- Predictor \(x_1 \colon \) % of tricalcium aluminate

- Predictor \(x_2 \colon \) % of tricalcium silicate

- Predictor \(x_3 \colon \) % of tetracalcium alumino ferrite

- Predictor \(x_4 \colon \) % of dicalcium silicate

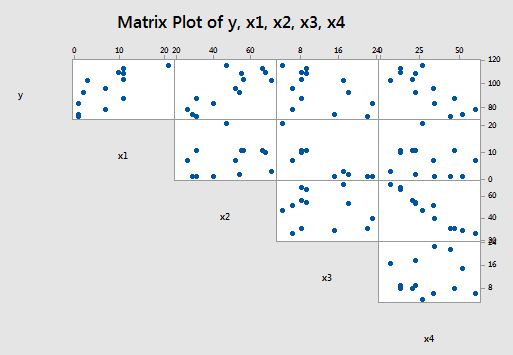

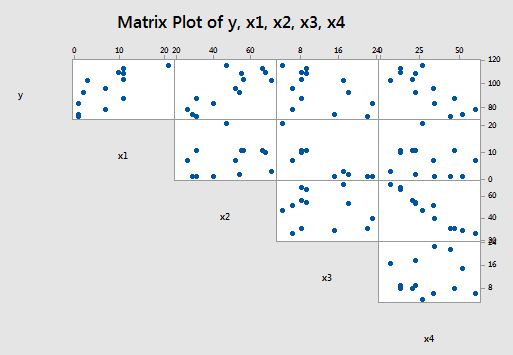

Now, if you study the scatter plot matrix of the data:

you can get a hunch of which predictors are good candidates for being the first to enter the stepwise model. It looks as if the strongest relationship exists between either \(y\) and \(x_{2} \) or between \(y\) and \(x_{4} \) — and therefore, perhaps either \(x_{2} \) or \(x_{4} \) should enter the stepwise model first. Did you notice what else is going on in this data set though? A strong correlation also exists between the predictors \(x_{2} \) and \(x_{4} \) ! How does this correlation among the predictor variables play out in the stepwise procedure? Let's see what happens when we use the stepwise regression method to find a model that is appropriate for these data.

The procedure

Again, before we learn the finer details, let me again provide a broad overview of the steps involved. First, we start with no predictors in our "stepwise model." Then, at each step along the way we either enter or remove a predictor based on the partial F-tests — that is, the t-tests for the slope parameters — that are obtained. We stop when no more predictors can be justifiably entered or removed from our stepwise model, thereby leading us to a "final model."

Now, let's make this process a bit more concrete. Here goes:

Starting the procedure

The first thing we need to do is set a significance level for deciding when to enter a predictor into the stepwise model. We'll call this the Alpha-to-Enter significance level and will denote it as \(\alpha_{E} \). Of course, we also need to set a significance level for deciding when to remove a predictor from the stepwise model. We'll call this the Alpha-to-Remove significance level and will denote it as \(\alpha_{R} \). That is, first:

- Specify an Alpha-to-Enter significance level. This will typically be greater than the usual 0.05 level so that it is not too difficult to enter predictors into the model. Many software packages — Minitab included — set this significance level by default to \(\alpha_E = 0.15\).

- Specify an Alpha-to-Remove significance level. This will typically be greater than the usual 0.05 level so that it is not too easy to remove predictors from the model. Again, many software packages — Minitab included — set this significance level by default to \(\alpha_{R} = 0.15\).

Step #1:

Once we've specified the starting significance levels, then we:

- Fit each of the one-predictor models — that is, regress \(y\) on \(x_{1} \), regress \(y\) on \(x_{2} \), ..., and regress \(y\) on \(x_{p-1} \).

- Of those predictors whose t-test P-value is less than \(\alpha_E = 0.15\), the first predictor put in the stepwise model is the predictor that has the smallest t-test P-value.

- If no predictor has a t-test P-value less than \(\alpha_E = 0.15\), stop.

Step #2:

Then:

- Suppose \(x_{1} \) had the smallest t-test P-value below \(\alpha_{E} = 0.15\) and therefore was deemed the "best" single predictor arising from the first step.

- Now, fit each of the two-predictor models that include \(x_{1} \) as a predictor — that is, regress \(y\) on \(x_{1} \) and \(x_{2} \) , regress \(y\) on \(x_{1} \) and \(x_{3} \) , ..., and regress \(y\) on \(x_{1} \) and \(x_{p-1} \) .

- Of those predictors whose t-test P-value is less than \(\alpha_E = 0.15\), the second predictor put in the stepwise model is the predictor that has the smallest t-test P-value.

- If no predictor has a t-test P-value less than \(\alpha_E = 0.15\), stop. The model with the one predictor obtained from the first step is your final model.

- But, suppose instead that \(x_{2} \) was deemed the "best" second predictor and it is therefore entered into the stepwise model.

- Now, since \(x_{1} \) was the first predictor in the model, step back and see if entering \(x_{2} \) into the stepwise model somehow affected the significance of the \(x_{1} \) predictor. That is, check the t-test P-value for testing \(\beta_{1}= 0\). If the t-test P-value for \(\beta_{1}= 0\) has become not significant — that is, the P-value is greater than \(\alpha_{R} \) = 0.15 — remove \(x_{1} \) from the stepwise model.

Step #3

Then:

- Suppose both \(x_{1} \) and \(x_{2} \) made it into the two-predictor stepwise model and remained there.

- Now, fit each of the three-predictor models that include \(x_{1} \) and \(x_{2} \) as predictors — that is, regress \(y\) on \(x_{1} \) , \(x_{2} \) , and \(x_{3} \) , regress \(y\) on \(x_{1} \) , \(x_{2} \) , and \(x_{4} \) , ..., and regress \(y\) on \(x_{1} \) , \(x_{2} \) , and \(x_{p-1} \) .

- Of those predictors whose t-test P-value is less than \(\alpha_E = 0.15\), the third predictor put in the stepwise model is the predictor that has the smallest t-test P-value.

- If no predictor has a t-test P-value less than \(\alpha_E = 0.15\), stop. The model containing the two predictors obtained from the second step is your final model.

- But, suppose instead that \(x_{3} \) was deemed the "best" third predictor and it is therefore entered into the stepwise model.

- Now, since \(x_{1} \) and \(x_{2} \) were the first predictors in the model, step back and see if entering \(x_{3} \) into the stepwise model somehow affected the significance of the \(x_{1 } \) and \(x_{2} \) predictors. That is, check the t-test P-values for testing \(\beta_{1} = 0\) and \(\beta_{2} = 0\). If the t-test P-value for either \(\beta_{1} = 0\) or \(\beta_{2} = 0\) has become not significant — that is, the P-value is greater than \(\alpha_{R} = 0.15\) — remove the predictor from the stepwise model.

Stopping the procedure

Continue the steps as described above until adding an additional predictor does not yield a t-test P-value below \(\alpha_E = 0.15\).

Whew! Let's return to our cement data example so we can try out the stepwise procedure as described above.

Back to the example...

To start our stepwise regression procedure, let's set our Alpha-to-Enter significance level at \(\alpha_{E} \) = 0.15, and let's set our Alpha-to-Remove significance level at \(\alpha_{R} = 0.15\). Now, regressing \(y\) on \(x_{1} \) , regressing \(y\) on \(x_{2} \) , regressing \(y\) on \(x_{3} \) , and regressing \(y\) on \(x_{4} \) , we obtain:

Predictor | Coef | SE Coef | T | P |

|---|---|---|---|---|

Constant | 81.479 | 4.927 | 16.54 | 0.000 |

x1 | 1.8687 | 0.5264 | 3.55 | 0.005 |

Predictor | Coef | SE Coef | T | P |

|---|---|---|---|---|

Constant | 57.424 | 8.491 | 6.76 | 0.000 |

x2 | 0.7891 | 0.1684 | 4.69 | 0.001 |

Predictor | Coef | SE Coef | T | P |

|---|---|---|---|---|

Constant | 110.203 | 7.948 | 13.87 | 0.000 |

x3 | -1.2558 | 0.5984 | -2.10 | 0.060 |

Predictor | Coef | SE Coef | T | P |

|---|---|---|---|---|

Constant | 117.568 | 5.262 | 22.34 | 0.000 |

x4 | -0.7382 | 0.1546 | -4.77 | 0.001 |

Each of the predictors is a candidate to be entered into the stepwise model because each t-test P-value is less than \(\alpha_E = 0.15\). The predictors \(x_{2} \) and \(x_{4} \) tie for having the smallest t-test P-value — it is 0.001 in each case. But note the tie is an artifact of Minitab rounding to three decimal places. The t-statistic for \(x_{4} \) is larger in absolute value than the t-statistic for \(x_{2} \) — 4.77 versus 4.69 — and therefore the P-value for \(x_{4} \) must be smaller. As a result of the first step, we enter \(x_{4} \) into our stepwise model.

Now, following step #2, we fit each of the two-predictor models that include \(x_{4} \) as a predictor — that is, we regress \(y\) on \(x_{4} \) and \(x_{1} \), regress \(y\) on \(x_{4} \) and \(x_{2} \), and regress \(y\) on \(x_{4} \) and \(x_{3} \), obtaining:

Predictor | Coef | SE Coef | T | P |

|---|---|---|---|---|

Constant | 103.097 | 2.124 | 48.54 | 0.000 |

x4 | -0.61395 | 0.04864 | -12.62 | 0.000 |

x1 | 1.4400 | 0.1384 | 10.40 | 0.000 |

Predictor | Coef | SE Coef | T | P |

|---|---|---|---|---|

Constant | 94.16 | 56.63 | 1.66 | 0.127 |

x4 | -0.4569 | 0.6960 | -0.66 | 0.526 |

x2 | 0.3109 | 0.7486 | 0.42 | 0.687 |

Predictor | Coef | SE Coef | T | P |

|---|---|---|---|---|

Constant | 131.282 | 3.275 | 40.09 | 0.000 |

x4 | -0.72460 | 0.07233 | -10.02 | 0.000 |

x3 | -1.1999 | 0.1890 | -6.35 | 0.000 |

The predictor \(x_{2} \) is not eligible for entry into the stepwise model because its t-test P-value (0.687) is greater than \(\alpha_E = 0.15\). The predictors \(x_{1} \) and \(x_{3} \) are candidates because each t-test P-value is less than \(\alpha_{E} \) = 0.15. The predictors \(x_{1} \) and \(x_{3} \) tie for having the smallest t-test P-value — it is < 0.001 in each case. But, again the tie is an artifact of Minitab rounding to three decimal places. The t-statistic for \(x_{1} \) is larger in absolute value than the t-statistic for \(x_{3} \) — 10.40 versus 6.3 5— and therefore the P-value for \(x_{1} \) must be smaller. As a result of the second step, we enter \(x_{1} \) into our stepwise model.

Now, since \(x_{4} \) was the first predictor in the model, we must step back and see if entering \(x_{1} \) into the stepwise model affected the significance of the \(x_{4} \) predictor. It did not — the t-test P-value for testing \(\beta_{1} = 0\) is less than 0.001, and thus smaller than \(\alpha_{R} \) = 0.15. Therefore, we proceed to the third step with both \(x_{1} \) and \(x_{4} \) as predictors in our stepwise model.

Now, following step #3, we fit each of the three-predictor models that include x1 and \(x_{4} \) as predictors — that is, we regress \(y\) on \(x_{4} \), \(x_{1} \), and \(x_{2} \); and we regress \(y\) on \(x_{4} \), \(x_{1} \), and \(x_{3} \), obtaining:

Predictor | Coef | SE Coef | T | P |

|---|---|---|---|---|

Constant | 71.65 | 14.14 | 5.07 | 0.001 |

x4 | -0.2365 | 0.1733 | -1.37 | 0.205 |

x1 | 1.4519 | 0.1170 | 12.41 | 0.000 |

x2 | 0.4161 | 0.1856 | 2.24 | 0.052 |

Predictor | Coef | SE Coef | T | P |

|---|---|---|---|---|

Constant | 111.684 | 4.562 | 24.48 | 0.000 |

x4 | -0.64280 | 0.04454 | -14.43 | 0.000 |

x1 | 1.0519 | 0.2237 | 4.70 | 0.001 |

x3 | -0.4100 | 0.1992 | -2.06 | 0.070 |

Both of the remaining predictors — \(x_{2} \) and \(x_{3} \) — are candidates to be entered into the stepwise model because each t-test P-value is less than \(\alpha_E = 0.15\). The predictor \(x_{2} \) has the smallest t-test P-value (0.052). Therefore, as a result of the third step, we enter \(x_{2} \) into our stepwise model.

Now, since \(x_{1} \) and \(x_{4} \) were the first predictors in the model, we must step back and see if entering \(x_{2} \) into the stepwise model affected the significance of the \(x_{1} \) and \(x_{4} \) predictors. Indeed, it did — the t-test P-value for testing \(\beta_{4} \) = 0 is 0.205, which is greater than \(α_{R} = 0.15\). Therefore, we remove the predictor \(x_{4} \) from the stepwise model, leaving us with the predictors \(x_{1} \) and \(x_{2} \) in our stepwise model:

Predictor | Coef | SE Coef | T | P |

|---|---|---|---|---|

Constant | 52.577 | 2.286 | 23.00 | 0.000 |

x1 | 1.4683 | 0.1213 | 12.10 | 0.000 |

x2 | 0.66225 | 0.04585 | 14.44 | 0.000 |

Now, we proceed to fit each of the three-predictor models that include \(x_{1} \) and \(x_{2} \) as predictors — that is, we regress \(y\) on \(x_{1} \), \(x_{2} \), and \(x_{3} \); and we regress \(y\) on \(x_{1} \), \(x_{2} \), and \(x_{4} \), obtaining:

Predictor | Coef | SE Coef | T | P |

|---|---|---|---|---|

Constant | 71.65 | 14.14 | 5.07 | 0.001 |

x1 | 1.4519 | 0.1170 | 12.41 | 0.000 |

x2 | 0.4161 | 0.1856 | 2.24 | 0.052 |

x4 | -0.2365 | 0.1733 | -1.37 | 0.205 |

Predictor | Coef | SE Coef | T | P |

|---|---|---|---|---|

Constant | 48.194 | 3.913 | 12.32 | 0.000 |

x1 | 1.6959 | 0.2046 | 8.29 | 0.000 |

x2 | 0.65691 | 0.04423 | 14.85 | 0.000 |

x3 | 0.2500 | 0.1847 | 1.35 | 0.209 |

Neither of the remaining predictors — \(x_{3} \) and \(x_{4} \) — are eligible for entry into our stepwise model, because each t-test P-value — 0.209 and 0.205, respectively — is greater than \(\alpha_{E} \) = 0.15. That is, we stop our stepwise regression procedure. Our final regression model, based on the stepwise procedure contains only the predictors \(x_1 \text{ and } x_2 \colon \)

Predictor | Coef | SE Coef | T | P |

|---|---|---|---|---|

Constant | 52.577 | 2.286 | 23.00 | 0.000 |

x1 | 1.4683 | 0.1213 | 12.10 | 0.000 |

x2 | 0.66225 | 0.04585 | 14.44 | 0.000 |

Whew! That took a lot of work! The good news is that most statistical software — including Minitab — provides a stepwise regression procedure that does all of the dirty work for us. For example in Minitab, select Stat > Regression > Regression > Fit Regression Model, click the Stepwise button in the resulting Regression Dialog, select Stepwise for Method, and select Include details for each step under Display the table of model selection details. Here's what the Minitab stepwise regression output looks like for our cement data example:

Stepwise Selection of Terms

Candidate terms: x1, x2, x3, x4

Terms | -----Step 1----- | -----Step 2----- | -----Step 3----- | -----Step 4----- | ||||

|---|---|---|---|---|---|---|---|---|

Coef | P | Coef | P | Coef | P | Coef | P | |

Constant | 117.57 | 103.10 | 71.6 | 52.58 | ||||

x4 | -0.738 | 0.001 | -0.6140 | 0.000 | -0.237 | 0.205 | ||

x1 | 1.440 | 0.000 | 1.452 | 0.000 | 1.468 | 0.000 | ||

x2 | 0.416 | 0.052 | 0.6623 | 0.000 | ||||

S | 8.96390 | 2.73427 | 2.30874 | 2.40634 | ||||

R-sq | 67.45% | 97.25% | 98.23% | 97.44% | ||||

R-sq(adj) | 64.50% | 96.70% | 97.64% | 97.44% | ||||

R-sq(pred) | 56.03% | 95.54% | 96.86% | 96.54% | ||||

Mallows' Cp | 138.73 | 5.50 | 3.02 | 2.68 | ||||

\(\alpha\) to enter =0.15, \(\alpha\) to remove 0.15

Minitab tells us that :

- a stepwise regression procedure was conducted on the response \(y\) and four predictors \(x_{1} \) , \(x_{2} \) , \(x_{3} \) , and \(x_{4} \)

- the Alpha-to-Enter significance level was set at \(\alpha_E = 0.15\) and the Alpha-to-Remove significance level was set at \(\alpha_{R} = 0.15\)

The remaining portion of the output contains the results of the various steps of Minitab's stepwise regression procedure. One thing to keep in mind is that Minitab numbers the steps a little differently than described above. Minitab considers a step any addition or removal of a predictor from the stepwise model, whereas our steps — step #3, for example — consider the addition of one predictor and the removal of another as one step.

The results of each of Minitab's steps are reported in a column labeled by the step number. It took Minitab 4 steps before the procedure was stopped. Here's what the output tells us:

- Just as our work above showed, as a result of Minitab's first step, the predictor \(x_{4} \) is entered into the stepwise model. Minitab tells us that the estimated intercept ("Constant") \(b_{0} \) = 117.57 and the estimated slope \(b_{4} = -0.738\). The P-value for testing \(\beta_{4} \) = 0 is 0.001. The estimate S, which equals the square root of MSE, is 8.96. The \(R^{2} \text{-value}\) is 67.45% and the adjusted \(R^{2} \text{-value}\) is 64.50%. Mallows' Cp-statistic, which we learn about in the next section, is 138.73. The output also includes a predicted \(R^{2} \text{-value}\), which we'll come back to in Section 10.5.

- As a result of Minitab's second step, the predictor \(x_{1} \) is entered into the stepwise model already containing the predictor \(x_{4} \). Minitab tells us that the estimated intercept \(b_{0} = 103.10\), the estimated slope \(b_{4} = -0.614\), and the estimated slope \(b_{1} = 1.44\). The P-value for testing \(\beta_{4} = 0\) is < 0.001. The P-value for testing \(\beta_{1} = 0\) is < 0.001. The estimate S is 2.73. The \(R^{2} \text{-value}\) is 97.25% and the adjusted \(R^{2} \text{-value}\) is 96.70%. Mallows' Cp-statistic is 5.5.

- As a result of Minitab's third step, the predictor \(x_{2} \) is entered into the stepwise model already containing the predictors \(x_{1} \) and \(x_{4} \). Minitab tells us that the estimated intercept \(b_{0} = 71.6\), the estimated slope \(b_{4} \) = -0.237, the estimated slope \(b_{1} = 1.452\), and the estimated slope \(b_{2} = 0.416\). The P-value for testing \(\beta_{4} = 0\) is 0.205. The P-value for testing \(\beta_{1} = 0\) is < 0.001. The P-value for testing \(\beta_{2} = 0\) is 0.052. The estimate S is 2.31. The \(R^{2} \text{-value}\) is 98.23% and the adjusted \(R^{2} \text{-value}\) is 97.64%. Mallows' Cp-statistic is 3.02.

- As a result of Minitab's fourth and final step, the predictor \(x_{4} \) is removed from the stepwise model containing the predictors \(x_{1} \), \(x_{2} \), and \(x_{4} \), leaving us with the final model containing only the predictors \(x_{1} \) and \(x_{2} \). Minitab tells us that the estimated intercept \(b_{0} = 52.58\), the estimated slope \(b_{1} = 1.468\), and the estimated slope \(b_{2} = 0.6623\). The P-value for testing \(\beta_{1} = 0\) is < 0.001. The P-value for testing \(\beta_{2} \) = 0 is < 0.001. The estimate S is 2.41. The \(R^{2} \text{-value}\) is 97.87% and the adjusted \(R^{2} \text{-value}\) is 97.44%. Mallows' Cp-statistic is 2.68.

Does the stepwise regression procedure lead us to the "best" model? No, not at all! Nothing occurs in the stepwise regression procedure to guarantee that we have found the optimal model. Case in point! Suppose we defined the best model to be the model with the largest adjusted \(R^{2} \text{-value}\). Then, here, we would prefer the model containing the three predictors \(x_{1} \), \(x_{2} \), and \(x_{4} \), because its adjusted \(R^{2} \text{-value}\) is 97.64%, which is higher than the adjusted \(R^{2} \text{-value}\) of 97.44% for the final stepwise model containing just the two predictors \(x_{1} \) and \(x_{2} \).

Again, nothing occurs in the stepwise regression procedure to guarantee that we have found the optimal model. This, and other cautions of the stepwise regression procedure, are delineated in the next section.

Cautions!

Here are some things to keep in mind concerning the stepwise regression procedure:

- The final model is not guaranteed to be optimal in any specified sense.

- The procedure yields a single final model, although there are often several equally good models.

- Stepwise regression does not take into account a researcher's knowledge about the predictors. It may be necessary to force the procedure to include important predictors.

- One should not over-interpret the order in which predictors are entered into the model.

- One should not jump to the conclusion that all the important predictor variables for predicting \(y\) have been identified, or that all the unimportant predictor variables have been eliminated. It is, of course, possible that we may have committed a Type I or Type II error along the way.

- Many t-tests for testing \(\beta_{k} = 0\) are conducted in a stepwise regression procedure. The probability is therefore high that we included some unimportant predictors or excluded some important predictors.

It's for all of these reasons that one should be careful not to overuse or overstate the results of any stepwise regression procedure.

Example 10-2: Blood Pressure

Some researchers observed the following data (Blood pressure dataset) on 20 individuals with high blood pressure:

- blood pressure (\(y = BP \), in mm Hg)

- age (\(x_{1} = \text{Age} \), in years)

- weight (\(x_{2} = \text{Weight} \), in kg)

- body surface area (\(x_{3} = \text{BSA} \), in sq m)

- duration of hypertension ( \(x_{4} = \text{Dur} \), in years)

- basal pulse (\(x_{5} = \text{Pulse} \), in beats per minute)

- stress index (\(x_{6} = \text{Stress} \) )

The researchers were interested in determining if a relationship exists between blood pressure and age, weight, body surface area, duration, pulse rate, and/or stress level.

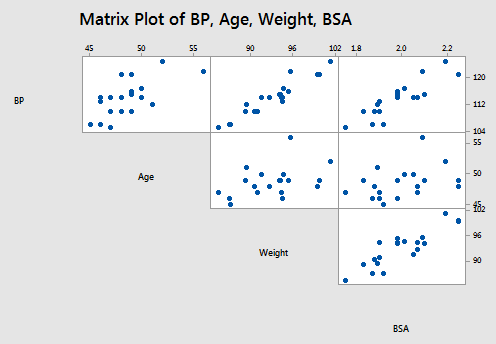

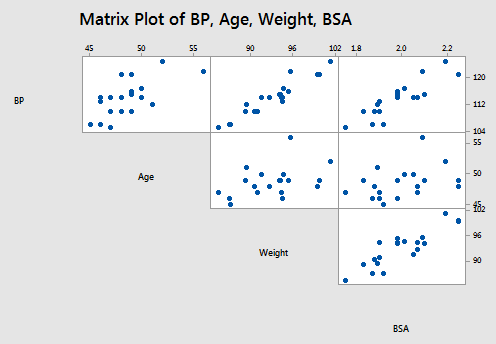

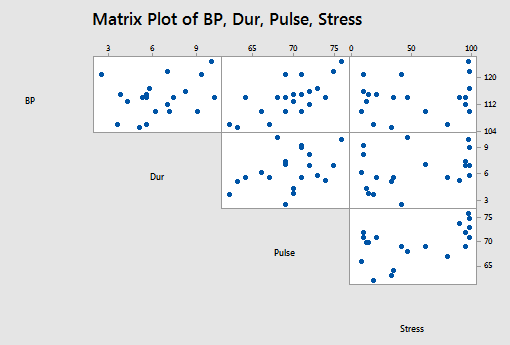

The matrix plot of BP, Age, Weight, and BSA looks like this:

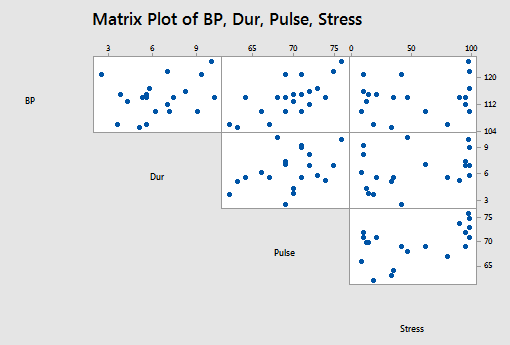

and the matrix plot of BP, Dur, Pulse, and Stress looks like this:

Using Minitab to perform the stepwise regression procedure, we obtain:

Stepwise Selection of Terms

Candidate terms: Age, Weight, BSA, Pulse, Stress

Terms | -----Step 1----- | -----Step 2----- | -----Step 3----- | |||

|---|---|---|---|---|---|---|

Coef | P | Coef | P | Coef | P | |

Constant | 2.21 | -16.58 | -13.67 | |||

Weight | 1.2009 | 0.000 | 1.0330 | 0.000 | 0.9058 | 0.000 |

Age | 0.7083 | 0.000 | 0.7016 | 0.008 | ||

BSA | 4.63 | 0.008 | ||||

S | 1.74050 | 0.532692 | 0.437046 | |||

R-sq | 90.26% | 99.14% | 99.45% | |||

R-sq(adj) | 89.72% | 99.04% | 99.35% | |||

R-sq(pred) | 88.53% | 98.89% | 99.22% | |||

Mallows' Cp | 312.81 | 15.09 | 6.43 | |||

\(\alpha\) to enter =0.15, \(\alpha\) to remove 0.15

When \( \alpha_{E} = \alpha_{R} = 0.15\), the final stepwise regression model contains the predictors of Weight, Age, and BSA.

The following video will walk through this example in Minitab.

Try it!

Stepwise regression

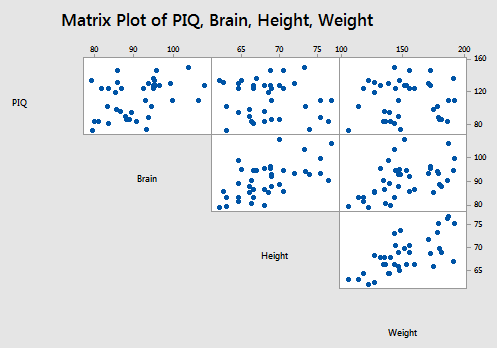

Brain size and body size. Imagine that you do not have automated stepwise regression software at your disposal, and conduct the stepwise regression procedure on the IQ size data set. Setting Alpha-to-Remove and Alpha-to-Enter at 0.15, verify the final model obtained above by Minitab.

That is:

- First, fit each of the three possible simple linear regression models. That is, regress PIQ on Brain, regress PIQ on Height, and regress PIQ on Weight. (See Minitab Help: Performing a basic regression analysis). The first predictor that should be entered into the stepwise model is the predictor with the smallest P-value (or equivalently the largest t-statistic in absolute value) for testing \(\beta_{k} = 0\), providing the P-value is smaller than 0.15. What is the first predictor that should be entered into the stepwise model?

a. Fit individual models.

PIQ vs Brain, PIQ vs Height, and PIG vs Weight.

b. Include the predictor with the smallest p-value < \(\alpha_E = 0.15\) and largest |T| value.

Include Brain as the first predictor since its p-value = 0.019 is the smallest.

Term

Coef

SE Coef

T-Value

P-Value

Constant

4.7

43.7

0.11

0.916

Brain

1.177

0.481

2.45

0.019

Term

Coef

SE Coef

T-Value

P-Value

Constant

147.4

64.3

2.29

0.028

Height

-0.527

0.939

-0.56

0.578

Term

Coef

SE Coef

T-Value

P-Value

Constant

111.0

24.5

4.53

0.000

Weight

0.002

0.160

0.02

0.988

- Now, fit each of the possible two-predictor multiple linear regression models which include the first predictor identified above and each of the remaining two predictors. (See Minitab Help: Performing a basic regression analysis). Which predictor should be entered into the model next?

a. Fit two predictor models by adding each remaining predictor one at a time.

Fit PIQ vs Brain, Height, and PIQ vs Brain, Weight.

b. Add to the model the 2nd predictor with smallest p-value < \(\alpha_E = 0.15\) and largest |T| value.

Add Height since its p-value = 0.009 is the smallest.

c. Omit any previously added predictors if their p-value exceeded \(\alpha_R = 0.15\)

The previously added predictor Brain is retained since its p-value is still below \(\alpha_R\).

FINAL RESULT of step 2: The model includes the two predictors Brain and Height.

Term

Coef

SE Coef

T-Value

P-Value

Constant

111.3

55.9

1.99

0.054

Brain

2.061

0.547

3.77

0.001

Height

-2.730

0.993

-2.75

0.009

Term

Coef

SE Coef

T-Value

P-Value

Constant

4.8

43.0

0.11

0.913

Brain

1.592

0.551

2.89

0.007

Weight

-0.250

0.170

-1.47

0.151

Term

Coef

SE Coef

T-Value

P-Value

Constant

111.0

24.5

4.53

0.000

Weight

0.002

0.160

0.02

0.988

- Continue the stepwise regression procedure until you can not justify entering or removing any more predictors. What is the final model identified by your stepwise regression procedure?

Fit three predictor models by adding each remaining predictor one at a time.

Run PIQ vs Brain, Height, Weight - weight is the only 3rd predictor.

Add to the model the 3rd predictor with the smallest p-value < \( \alpha_E\) and largest |T| value.

Do not add weight since its p-value \(p = 0.998 > \alpha_E = 0.15\)

Term

Coef

SE Coef

T-Value

P-Value

Constant

111.4

63.0

1.77

0.086

Brain

2.060

0.563

3.66

0.001

Height

-2.73

1.23

-2.22

0.033

Weight

0.001

0.197

0.00

0.998

Omit any previously added predictors if their p-value exceeded \(\alpha_R\).

The previously added predictors Brain and Height are retained since their p-values are both still below \(\alpha_R\).

Term

Coef

SE Coef

T-Value

P-Value

Constant

111.3

55.9

1.99

0.054

Brain

2.061

0.547

3.77

0.001

Height

-2.730

0.993

-2.75

0.009

The final model contains the two predictors, Brain and Height.

10.3 - Best Subsets Regression, Adjusted R-Sq, Mallows Cp

10.3 - Best Subsets Regression, Adjusted R-Sq, Mallows CpIn this section, we learn about the best subsets regression procedure (also known as the all possible subsets regression procedure). While we will soon learn the finer details, the general idea behind best subsets regression is that we select the subset of predictors that do the best at meeting some well-defined objective criterion, such as having the largest \(R^{2} \text{-value}\) or the smallest MSE.

Again, our hope is that we end up with a reasonable and useful regression model. There is one sure way of ending up with a model that is certain to be underspecified — and that's if the set of candidate predictor variables doesn't include all of the variables that actually predict the response. Therefore, just as is the case for the stepwise regression procedure, a fundamental rule of the best subsets regression procedure is that the list of candidate predictor variables must include all of the variables that actually predict the response. Otherwise, we are sure to end up with a regression model that is underspecified and therefore misleading.

The procedure

A regression analysis utilizing the best subsets regression procedure involves the following steps:

Step #1

First, identify all of the possible regression models derived from all of the possible combinations of the candidate predictors. Unfortunately, this can be a huge number of possible models.

For the sake of example, suppose we have three (3) candidate predictors — \(x_{1}\), \(x_{2}\), and \(x_{3}\)— for our final regression model. Then, there are eight (8) possible regression models we can consider:

- the one (1) model with no predictors

- the three (3) models with only one predictor each — the model with \(x_{1}\) alone; the model with \(x_{2}\) alone; and the model with \(x_{3}\) alone

- the three (3) models with two predictors each — the model with \(x_{1}\) and \(x_{2}\); the model with \(x_{1}\) and \(x_{3}\); and the model with \(x_{2}\) and \(x_{3}\)

- and the one (1) model with all three predictors — that is, the model with \(x_{1}\), \(x_{2}\) and \(x_{3}\)

That's 1 + 3 + 3 + 1 = 8 possible models to consider. It can be shown that when there are four candidate predictors — \(x_{1}\), \(x_{2}\), \(x_{3}\) and \(x_{4}\) — there are 16 possible regression models to consider. In general, if there are p-1 possible candidate predictors, then there are \(2^{p-1}\) possible regression models containing the predictors. For example, 10 predictors yield \(2^{10} = 1024\) possible regression models.

That's a heck of a lot of models to consider! The good news is that statistical software, such as Minitab, does all of the dirty work for us.

Step #2

From the possible models identified in the first step, determine the one-predictor models that do the "best" at meeting some well-defined criteria, the two-predictor models that do the "best," the three-predictor models that do the "best," and so on. For example, suppose we have three candidate predictors — \(x_{1}\), \(x_{2}\), and \(x_{3}\) — for our final regression model. Of the three possible models with one predictor, identify the one or two that do "best." Of the three possible two-predictor models, identify the one or two that do "best." By doing this, it cuts down considerably the number of possible regression models to consider!

But, have you noticed that we have not yet even defined what we mean by "best"? What do you think "best" means? Of course, you'll probably define it differently than me or than your neighbor. Therein lies the rub — we might not be able to agree on what's best! In thinking about what "best" means, you might have thought of any of the following:

- the model with the largest \(R^{2}\)

- the model with the largest adjusted \(R^{2}\)

- the model with the smallest MSE (or S = square root of MSE)

There are other criteria you probably didn't think of, but we could consider, too, for example, Mallows' \(C_{p}\)-statistic, the PRESS statistic, and Predicted \(R^{2}\) (which is calculated from the PRESS statistic). We'll learn about Mallows' \(C_{p}\)-statistic in this section and about the PRESS statistic and Predicted \(R^{2}\) in Section 10.5.

To make matters even worse — the different criteria quantify different aspects of the regression model, and therefore often yield different choices for the best set of predictors. That's okay — as long as we don't misuse best subsets regression by claiming that it yields the best model. Rather, we should use best subsets regression as a screening tool — that is, as a way to reduce a large number of possible regression models to just a handful of models that we can evaluate further before arriving at one final model.

Step #3

Further, evaluate and refine the handful of models identified in the last step. This might entail performing residual analyses, transforming the predictors and/or responses, adding interaction terms, and so on. Do this until you are satisfied that you have found a model that meets the model conditions, does a good job of summarizing the trend in the data, and most importantly allows you to answer your research question.

Example 10-3: Cement Data

For the remainder of this section, we discuss how the criteria identified above can help us reduce a large number of possible regression models to just a handful of models suitable for further evaluation.

For the sake of an example, we will use the cement data to illustrate the use of the criteria. Therefore, let's quickly review — the researchers measured and recorded the following data (Cement data) on 13 batches of cement:

- Response y: heat evolved in calories during the hardening of cement on a per-gram basis

- Predictor \(x_{1}\): % of tricalcium aluminate

- Predictor \(x_{2}\): % of tricalcium silicate

- Predictor \(x_{3}\): % of tetra calcium alumino ferrite

- Predictor \(x_{4}\): % of dicalcium silicate

And, the matrix plot of the data looks like this:

The \(R^{2} \text{-values}\)

As you may recall, the \(R^{2} \text{-value}\), which is defined as:

\(R^2=\dfrac{SSR}{SSTO}=1-\dfrac{SSE}{SSTO}\)

can only increase as more variables are added. Therefore, it makes no sense to define the "best" model as the model with the largest \(R^{2} \text{-value}\). After all, if we did, the model with the largest number of predictors would always win.

All is not lost, however. We can instead use the \(R^{2} \text{-values}\) to find the point where adding more predictors is not worthwhile, because it yields a very small increase in the \(R^{2}\)-value. In other words, we look at the size of the increase in \(R^{2}\), not just its magnitude alone. Because this is such a "wishy-washy" criterion, it is used most often in combination with the other criteria.

Let's see how this criterion works in the cement data example. In doing so, you get your first glimpse of Minitab's best subsets regression output. For Minitab, select Stat > Regression > Regression > Best Subsets to do a best subsets regression.

Best Subsets Regressions: y versus x1, x2, x3, x4

Response is y

Vars | R-Sq | R-Sq | R-Sq | Mallows | S | x | x | x | x |

|---|---|---|---|---|---|---|---|---|---|

1 | 2 | 3 | 4 | ||||||

1 | 67.5 | 64.5 | 56.0 | 138.7 | 8.9639 | X | |||

1 | 66.6 | 63.6 | 55.7 | 142.5 | 9.0771 | X | |||

2 | 97.9 | 97.4 | 96.5 | 2.7 | 2.4063 | X | X | ||

2 | 97.2 | 96.7 | 95.5 | 5.5 | 2.7343 | X | X | ||

3 | 98.2 | 97.6 | 96.9 | 3.0 | 2.3087 | X | X | X | |

3 | 98.2 | 97.6 | 96.7 | 3.0 | 2.3232 | X | X | X | |

4 | 98.2 | 97.4 | 95.9 | 5.0 | 2.4460 | X | X | X | X |

Each row in the table represents information about one of the possible regression models. The first column — labeled Vars — tells us how many predictors are in the model. The last four columns — labeled downward x1, x2, x3, and x4 — tell us which predictors are in the model. If an "X" is present in the column, then that predictor is in the model. Otherwise, it is not. For example, the first row in the table contains information about the model in which \(x_{4}\) is the only predictor, whereas the fourth row contains information about the model in which \(x_{1}\) and \(x_{4}\) are the two predictors in the model. The other five columns — labeled R-sq, R-sq(adj), R-sq(pred), Cp, and S — pertain to the criteria that we use in deciding which models are "best."

As you can see, by default Minitab reports only the two best models for each number of predictors based on the size of the \(R^{2} \text{-value}\) that is, Minitab reports the two one-predictor models with the largest \(R^{2} \text{-values}\), followed by the two two-predictor models with the largest \(R^{2} \text{-values}\), and so on.

So, using the \(R^{2} \text{-value}\) criterion, which model (or models) should we consider for further evaluation? Hmmm — going from the "best" one-predictor model to the "best" two-predictor model, the \(R^{2} \text{-value}\) jumps from 67.5 to 97.9. That is a jump worth making! That is, with such a substantial increase in the \(R^{2} \text{-value}\), one could probably not justify — with a straight face at least — using the one-predictor model over the two-predictor model. Now, should we instead consider the "best" three-predictor model? Probably not! The increase in the \(R^{2} \text{-value}\) is very small — from 97.9 to 98.2 — and therefore, we probably can't justify using the larger three-predictor model over the simpler, smaller two-predictor model. Get it? Based on the \(R^{2} \text{-value}\) criterion, the "best" model is the model with the two predictors \(x_{1}\) and \(x_{2}\).

The adjusted \(R^{2} \text{-value}\) and MSE

The adjusted \(R^{2} \text{-value}\), which is defined as:

\begin{align} R_{a}^{2}&=1-\left(\dfrac{n-1}{n-p}\right)\left(\dfrac{SSE}{SSTO}\right)\\&=1-\left(\dfrac{n-1}{SSTO}\right)MSE\\&=\dfrac{\dfrac{SSTO}{n-1}-\frac{SSE}{n-p}}{\dfrac{SSTO}{n-1}}\end{align}

makes us pay a penalty for adding more predictors to the model. Therefore, we can just use the adjusted \(R^{2} \text{-value}\) outright. That is, according to the adjusted \(R^{2} \text{-value}\) criterion, the best regression model is the one with the largest adjusted \(R^{2}\)-value.

Now, you might have noticed that the adjusted \(R^{2} \text{-value}\) is a function of the mean square error (MSE). And, you may — or may not — recall that MSE is defined as:

\(MSE=\dfrac{SSE}{n-p}=\dfrac{\sum(y_i-\hat{y}_i)^2}{n-p}\)

That is, MSE quantifies how far away our predicted responses are from our observed responses. Naturally, we want this distance to be small. Therefore, according to the MSE criterion, the best regression model is the one with the smallest MSE.

But, aha — the two criteria are equivalent! If you look at the formula again for the adjusted \(R^{2} \text{-value}\):

\(R_{a}^{2}=1-\left(\dfrac{n-1}{SSTO}\right)MSE\)

you can see that the adjusted \(R^{2} \text{-value}\) increases only if MSE decreases. That is, the adjusted \(R^{2} \text{-value}\) and MSE criteria always yield the same "best" models.

Back to the cement data example! One thing to note is that S is the square root of MSE. Therefore, finding the model with the smallest MSE is equivalent to finding the model with the smallest S:

Best Subsets Regressions: y versus x1 ,x2, x3, x4

Response is y

Vars | R-Sq | R-Sq | R-Sq | Mallows | S | x | x | x | x |

|---|---|---|---|---|---|---|---|---|---|

1 | 2 | 3 | 4 | ||||||

1 | 67.5 | 64.5 | 56.0 | 138.7 | 8.9639 | X | |||

1 | 66.6 | 63.6 | 55.7 | 142.5 | 9.0771 | X | |||

2 | 97.9 | 97.4 | 96.5 | 2.7 | 2.4063 | X | X | ||

2 | 97.2 | 96.7 | 95.5 | 5.5 | 2.7343 | X | X | ||

3 | 98.2 | 97.6 | 96.9 | 3.0 | 2.3087 | X | X | X | |

3 | 98.2 | 97.6 | 96.7 | 3.0 | 2.3232 | X | X | X | |

4 | 98.2 | 97.4 | 95.9 | 5.0 | 2.4460 | X | X | X | X |

The model with the largest adjusted \(R^{2} \text{-value}\) (97.6) and the smallest S (2.3087) is the model with the three predictors \(x_{1}\), \(x_{2}\), and \(x_{4}\).

See?! Different criteria can indeed lead us to different "best" models. Based on the \(R^{2} \text{-value}\) criterion, the "best" model is the model with the two predictors \(x_{1}\) and \(x_{2}\). But, based on the adjusted \(R^{2} \text{-value}\) and the smallest MSE criteria, the "best" model is the model with the three predictors \(x_{1}\), \(x_{2}\), and \(x_{4}\).

Mallows' \(C_{p}\)-statistic

Recall that an underspecified model is a model in which important predictors are missing. And, an underspecified model yields biased regression coefficients and biased predictions of the response. Well, in short, Mallows' \(C_{p}\)-statistic estimates the size of the bias that is introduced into the predicted responses by having an underspecified model.

Now, we could just jump right in and be told how to use Mallows' \(C_{p}\)-statistic as a way of choosing a "best" model. But, then it wouldn't make any sense to you — and therefore it wouldn't stick to your craw. So, we'll start by justifying the use of the Mallows' \(C_{p}\)-statistic. The problem is it's kind of complicated. So, be patient, don't be frightened by the scary-looking formulas, and before you know it, we'll get to the moral of the story. Oh, and by the way, in case you're wondering — it's called Mallows' \(C_{p}\)-statistic, because a guy named Mallows thought of it!

Here goes! At issue in any regression model are two things, namely:

- The bias in the predicted responses.

- The variation in the predicted responses.

Bias in predicted responses

Recall that, in fitting a regression model to data, we attempt to estimate the average — or expected value — of the observed responses \(E \left( y_i \right) \) at any given predictor value x. That is, \(E \left( y_i \right) \) is the population regression function. Because the average of the observed responses depends on the value of x, we might also denote this population average or population regression function as \(\mu_{Y|x}\).

Now, if there is no bias in the predicted responses, then the average of the observed responses \(E \left( y_i \right) \) and the average of the predicted responses E(\(\hat{y}_i\)) both equal the thing we are trying to estimate, namely the average of the responses in the population \(\mu_{Y|x}\). On the other hand, if there is bias in the predicted responses, then \(E \left( y_i \right) \) = \(\mu_{Y|x}\) and E(\(\hat{y}_i\)) do not equal each other. The difference between \(E \left( y_i \right) \) and E(\(\hat{y}_i\)) is the bias \(B_i\) in the predicted response. That is, the bias:

\(B_i=E(\hat{y}_i) - E(y_i)\)

We can picture this bias as follows:

The quantity \(E \left( y_i \right) \) is the value of the population regression line at a given x. Recall that we assume that the error terms \(\epsilon_i\) are normally distributed. That's why there is a normal curve — in red — drawn around the population regression line \(E \left( y_i \right) \). You can think of the quantity E(\(\hat{y}_i\)) as being the predicted regression line — well, technically, it's the average of all of the predicted regression lines you could obtain based on your formulated regression model. Again, since we always assume the error terms \(\epsilon_i\) are normally distributed, we've drawn a normal curve — in blue— around the average predicted regression line E(\(\hat{y}_i\)). The difference between the population regression line \(E \left( y_i \right) \) and the average predicted regression line E(\(\hat{y}_i\)) is the bias \(B_i=E(\hat{y}_i)-E(y_i)\) .

Earlier in this lesson, we saw an example in which bias was likely introduced into the predicted responses because of an underspecified model. The data concerned the heights and weights of Martians. The Martian data set — don't laugh — contains the weights (in g), heights (in cm), and amount of daily water consumption (0, 10, or 20 cups per day) of 12 Martians.

If we regress y = weight on the predictors \(x_{1}\) = height and \(x_{2}\) = water, we obtain the following estimated regression equation:

Regression Equation

\(\widehat{weight} = -1.220 + -.28344 height + 0.11121 water\)

But, if we regress y = weight on only the one predictor \(x_{1}\) = height, we obtain the following estimated regression equation:

Regression Equation

\(\widehat{weight} = -4.14 + 0.2889 height\)

A plot of the data containing the two estimated regression equations looks like this:

The three black lines represent the estimated regression equation when the amount of water consumption is taken into account — the first line for 0 cups per day, the second line for 10 cups per day, and the third line for 20 cups per day. The dashed blue line represents the estimated regression equation when we leave the amount of water consumed out of the regression model.

As you can see, if we use the blue line to predict the weight of a randomly selected martian, we would consistently overestimate the weight of Martians who drink 0 cups of water a day, and we would consistently underestimate the weight of Martians who drink 20 cups of water a day. That is, our predicted responses would be biased.

Variation in predicted responses

When a bias exists in the predicted responses, the variance in the predicted responses for a data point i is due to two things:

- the ever-present random sampling variation, that is \((\sigma_{\hat{y}_i}^{2})\)

- the variance associated with the bias, that is \((B_{i}^{2})\)

Now, if our regression model is biased, it doesn't make sense to consider the bias at just one data point i. We need to consider the bias that exists for all n data points. Looking at the plot of the two estimated regression equations for the martian data, we see that the predictions for the underspecified model are more biased for certain data points than for others. And, we can't just consider the variation in the predicted responses at one data point i. We need to consider the total variation in the predicted responses.

To quantify the total variation in the predicted responses, we just sum the two variance components — \((\sigma_{\hat{y}_i}^{2})\) and \((B_{i}^{2})\) — over all n data points to obtain a (standardized) measure of the total variation in the predicted responses \(\Gamma_p\) (that's the greek letter "gamma"):

\(\Gamma_p=\dfrac{1}{\sigma^2} \left\{ \sum_{i=1}^{n}\sigma_{\hat{y}_i}^{2}+\sum_{i=1}^{n}\left( E(\hat{y}_i)-E(y_i) \right) ^2 \right\}\)

I warned you about not being overwhelmed by scary-looking formulas! The first term in the brackets quantifies the random sampling variation summed over all n data points, while the second term in the brackets quantifies the amount of bias (squared) summed over all n data points. Because the size of the bias depends on the measurement units used, we divide by \(\sigma^{2}\) to get a standardized unitless measure.

Now, we don't have the tools to prove it, but it can be shown that if there is no bias in the predicted responses — that is, if the bias = 0 — then \(\Gamma_p\) achieves its smallest possible value, namely p, the number of parameters:

\(\Gamma_p=\dfrac{1}{\sigma^2} \left\{ \sum_{i=1}^{n}\sigma_{\hat{y}_i}^{2}+0 \right\} =p\)

Now, because it quantifies the amount of bias and variance in the predicted responses, \(\Gamma_p\) seems to be a good measure of an underspecified model:

\(\Gamma_p=\dfrac{1}{\sigma^2} \left\{ \sum_{i=1}^{n}\sigma_{\hat{y}_i}^{2}+\sum_{i=1}^{n} \left( E(\hat{y}_i)-E(y_i) \right) ^2 \right\}\)

The best model is simply the model with the smallest value of \(\Gamma_p\). We even know that the theoretical minimum of \(\Gamma_p\) is the number of parameters p.

Well, it's not quite that simple — we still have a problem. Did you notice all of those greek parameters — \(\sigma^{2}\), \((\sigma_{\hat{y}_i}^{2})\), and \(\gamma_p\)? As you know, greek parameters are generally used to denote unknown population quantities. That is, we don't or can't know the value of \(\Gamma_p\) — we must estimate it. That's where Mallows' \(C_{p}\)-statistic comes into play!

\(C_{p}\) as an estimate of \(\Gamma_p\)

If we know the population variance \(\sigma^{2}\), we can estimate \(\Gamma_{p}\):

\(C_p=p+\dfrac{(MSE_p-\sigma^2)(n-p)}{\sigma^2}\)

where \(MSE_{p}\) is the mean squared error from fitting the model containing the subset of p-1 predictors (which with the intercept contains p parameters).

But we don't know \(\sigma^{2}\). So, we estimate it using \(MSE_{all}\), the mean squared error obtained from fitting the model containing all of the candidate predictors. That is:

\(C_p=p+\dfrac{(MSE_p-MSE_{all})(n-p)}{MSE_{all}}=\dfrac{SSE_p}{MSE_{all}}-(n-2p)\)

A couple of things to note though. Estimating \(\sigma^{2}\) using \(MSE_{all}\):

- assumes that there are no biases in the full model with all of the predictors, an assumption that may or may not be valid, but can't be tested without additional information (at the very least you have to have all of the important predictors involved)

- guarantees that \(C_{p}\) = p for the full model because in that case \(MSE_{p}\) - \(MSE_{all}\) = 0.

Using the \(C_{p}\) criterion to identify "best" models

Finally — we're getting to the moral of the story! Just a few final facts about Mallows' \(C_{p}\)-statistic will get us on our way. Recalling that p denotes the number of parameters in the model:

- Subset models with small \(C_{p}\) values have a small total (standardized) variance of prediction.

- When the \(C_{p}\) value is ...

- ... near p, the bias is small (next to none)

- ... much greater than p, the bias is substantial

- ... below p, it is due to sampling error; interpret as no bias

- For the largest model containing all of the candidate predictors, \(C_{p}\) = p (always). Therefore, you shouldn't use \(C_{p}\) to evaluate the fullest model.

That all said, here's a reasonable strategy for using \(C_{p}\) to identify "best" models:

- Identify subsets of predictors for which the \(C_{p}\) value is near p (if possible).

- The full model always yields \(C_{p}\)= p, so don't select the full model based on \(C_{p}\).

- If all models, except the full model, yield a large \(C_{p}\) not near p, it suggests some important predictor(s) are missing from the analysis. In this case, we are well-advised to identify the predictors that are missing!

- If several models have \(C_{p}\) near p, choose the model with the smallest \(C_{p}\) value, thereby insuring that the combination of the bias and the variance is at a minimum.

- When more than one model has a small value of \(C_{p}\) value near p, in general, choose the simpler model or the model that meets your research needs.

Example 10-3: Cement Data Continued

Ahhh—an example! Let's see what model the \(C_{p}\) criterion leads us to for the cement data:

Best Subsets Regressions: y versus x1, x2, x3, x4

Response is y

Vars | R-Sq | R-Sq | R-Sq | Mallows | S | x | x | x | x |

|---|---|---|---|---|---|---|---|---|---|

1 | 2 | 3 | 4 | ||||||

1 | 67.5 | 64.5 | 56.0 | 138.7 | 8.9639 | X | |||

1 | 66.6 | 63.6 | 55.7 | 142.5 | 9.0771 | X | |||

2 | 97.9 | 97.4 | 96.5 | 2.7 | 2.4063 | X | X | ||

2 | 97.2 | 96.7 | 95.5 | 5.5 | 2.7343 | X | X | ||

3 | 98.2 | 97.6 | 96.9 | 3.0 | 2.3087 | X | X | X | |

3 | 98.2 | 97.6 | 96.7 | 3.0 | 2.3232 | X | X | X | |

4 | 98.2 | 97.4 | 95.9 | 5.0 | 2.4460 | X | X | X | X |

The first thing you might want to do here is "pencil in" a column to the left of the Vars column. Recall that this column tells us the number of predictors (p-1) that are in the model. But, we need to compare \(C_{p}\) to the number of parameters (p). There is always one more parameter—the intercept parameter—than predictors. So, you might want to add—at least mentally—a column containing the number of parameters—here, 2, 2, 3, 3, 4, 4, and 5.

Here, the model in the third row (containing predictors \(x_{1}\) and \(x_{2}\)), the model in the fifth row (containing predictors \(x_{1}\), \(x_{2}\) and \(x_{4}\)), and the model in the sixth row (containing predictors \(x_{1}\), \(x_{2}\) and \(x_{3}\)) are all unbiased models, because their \(C_{p}\) values equal (or are below) the number of parameters p. For example:

- the model containing the predictors \(x_{1}\) and \(x_{2}\) contains 3 parameters and its \(C_{p}\) value is 2.7. When \(C_{p}\) is less than p, it suggests the model is unbiased;

- the model containing the predictors \(x_{1}\), \(x_{2}\) and \(x_{4}\) contains 4 parameters and its \(C_{p}\) value is 3.0. When \(C_{p}\) is less than p, it suggests the model is unbiased;

- the model containing the predictors \(x_{1}\), \(x_{2}\) and \(x_{3}\) contains 4 parameters and its \(C_{p}\) value is 3.0. When \(C_{p}\) is less than p, it suggests the model is unbiased.

So, in this case, based on the \(C_{p}\) criterion, the researcher has three legitimate models from which to choose with respect to bias. Of these three, the model containing the predictors \(x_{1}\) and \(x_{2}\) has the smallest \(C_{p}\) value, but the \(C_{p}\) values for the other two models are similar and so there is little to separate these models based on this criterion.

Incidentally, you might also want to conclude that the last model — the model containing all four predictors — is a legitimate contender because \(C_{p}\) = 5.0 equals p = 5. Don't forget that the model with all of the predictors is assumed to be unbiased. Therefore, you should not use the \(C_{p}\) criterion as a way of evaluating the fullest model with all of the predictors.

Incidentally, how did Minitab determine that the \(C_{p}\) value for the third model is 2.7, while for the fourth model the \(C_{p}\) value is 5.5? We can verify these calculated \(C_{p}\) values!

The following output was obtained by first regressing y on the predictors \(x_{1}\), \(x_{2}\), \(x_{3}\) and \(x_{4}\) and then by regressing y on the predictors \(x_{1}\) and \(x_{2}\):

Regression Equation

\(\widehat{y} = 62.4 + 1.55 x1 + 0.51 x2 + 0.102 x3 - 0.144 x4\)

Source | DF | SS | MS | F | P |

|---|---|---|---|---|---|

Regression | 4 | 2667.90 | 666.97 | 111.48 | 0.000 |

Residual Error | 8 | 47.86 | 5.98 | ||

Total | 12 | 2715.76 |

Regression Equation

\(\widehat{y} = 52.6 + 1.47 x1 + 0.662 x2\)

Source | DF | SS | MS | F | P |

|---|---|---|---|---|---|

Regression | 2 | 2657.9 | 1328.9 | 229.50 | 0.000 |

Residual Error | 10 | 57.9 | 5.8 | ||

Total | 12 | 2715.8 |

tells us that, here, \(MSE_{all}\) = 5.98 and \(MSE_{p}\) = 5.8. Therefore, just as Minitab claims:

\(C_p=p+\dfrac{(MSE_p-MSE_{all})(n-p)}{MSE_{all}}=3+\dfrac{(5.8-5.98)(13-3)}{5.98}=2.7\)

the \(C_{p}\)-statistic equals 2.7 for the model containing the predictors \(x_{1}\) and \(x_{2}\).

And, the following output is obtained by first regressing y on the predictors \(x_{1}\), \(x_{2}\), \(x_{3}\) and \(x_{4}\) and then by regressing y on the predictors \(x_{1}\) and \(x_{4}\):

Regression Equation

\(\widehat{y} = 62.4 + 1.55 x1 + 0.51 x2 + 0.102 x3 - 0.144 x4\)

Source | DF | SS | MS | F | P |

|---|---|---|---|---|---|

Regression | 4 | 2667.90 | 666.97 | 111.48 | 0.000 |

Residual Error | 8 | 47.86 | 5.98 | ||

Total | 12 | 2715.76 |

Regression Equation

\(\widehat{y} = 103 + 1.44 x1 - 0.614 x4\)

Source | DF | SS | MS | F | P |

|---|---|---|---|---|---|

Regression | 2 | 2641.0 | 1320.5 | 176.63 | 0.000 |

Residual Error | 10 | 74.8 | 7.5 | ||

Total | 12 | 2715.8 |

tells us that, here, \(MSE_{all}\) = 5.98 and \(MSE_{p}\) = 7.5. Therefore, just as Minitab claims:

\(C_p=p+\dfrac{(MSE_p-MSE_{all})(n-p)}{MSE_{all}}=3+\dfrac{(7.5-5.98)(13-3)}{5.98}=5.5\)

the \(C_{p}\)-statistic equals 5.5 for the model containing the predictors \(x_{1}\) and \(x_{4}\).

Try it!

Best subsets regression

When there are four candidate predictors — \(x_1\), \(x_2\), \(x_3\) and \(x_4\) — there are 16 possible regression models to consider. Identify the 16 possible models.

The possible predictor sets and the corresponding models are given below:

Predictor Set | model |

|---|---|

None of \(x_1, x_2, x_3, x_4\) | \(E(Y)=\beta_0\) |

\(x_1\) | \(E(Y)=\beta_0+\beta_1 x_1\) |

\(x_2\) | \(E(Y)=\beta_0+\beta_2 x_2\) |

\(x_3\) | \(E(Y)=\beta_0+\beta_3 x_3\) |

\(x_4\) | \(E(Y)=\beta_0+\beta_4 x_4\) |

\(x_1, x_2\) | \(E(Y)=\beta_0+\beta_1 x_1+\beta_2 x_2\) |

\(x_1, x_3\) | \(E(Y)=\beta_0+\beta_1 x_1+\beta_3 x_3\) |

\(x_1, x_4\) | \(E(Y)=\beta_0+\beta_1 x_1+\beta_4 x_4\) |

\(x_2, x_3\) | \(E(Y)=\beta_0+\beta_2 x_2+\beta_3 x_3\) |

\(x_2, x_4\) | \(E(Y)=\beta_0+\beta_2 x_2+\beta_4 x_4\) |

\(x_3, x_4\) | \(E(Y)=\beta_0+\beta_3 x_3+\beta_4 x_4\) |

\(x_1, x_2,x_3\) | \(E(Y)=\beta_0+\beta_1 x_1+\beta_2 x_2+\beta_3 x_3\) |

\(x_1, x_2,x_4\) | \(E(Y)=\beta_0+\beta_1 x_1+\beta_2 x_2+\beta_4 x_4\) |

\(x_1, x_3,x_4\) | \(E(Y)=\beta_0+\beta_1 x_1+\beta_3 x_3+\beta_4 x_4\) |

\(x_2, x_3,x_4\) | \(E(Y)=\beta_0+\beta_2 x_2+\beta_3 x_3+\beta_4 x_4\) |

\(x_1, x_2,x_3,x_4\) | \(E(Y)=\beta_0+\beta_1 x_1+\beta_2 x_2+\beta_3 x_3+\beta_4 x_4\) |

10.4 - Some Examples

10.4 - Some ExamplesExampe 10-4: Cement Data

Let's take a look at a few more examples to see how the best subsets and stepwise regression procedures assist us in identifying a final regression model.

Let's return one more time to the cement data example (Cement data set). Recall that the stepwise regression procedure:

Stepwise Selection of Terms

Candidate terms: x1, x2, x3, x4

| Terms | -----Step 1----- | -----Step 2----- | -----Step 3----- | -----Step 4----- | ||||

|---|---|---|---|---|---|---|---|---|

| Coef | P | Coef | P | Coef | P | Coef | P | |

| Constant | 117.57 | 103.10 | 71.6 | 52.58 | ||||

| x4 | -0.738 | 0.001 | -0.6140 | 0.000 | -0.237 | 0.205 | ||

| x1 | 1.440 | 0.000 | 1.452 | 0.000 | 1.468 | 0.000 | ||

| x2 | 0.416 | 0.052 | 0.6623 | 0.000 | ||||

| S | 8.96390 | 2.73427 | 2.30874 | 2.40634 | ||||

| R-sq | 67.45% | 97.25% | 98.23% | 97.44% | ||||

| R-sq(adj) | 64.50% | 96.70% | 97.64% | 97.44% | ||||

| R-sq(pred) | 56.03% | 95.54% | 96.86% | 96.54% | ||||

| Mallows' Cp | 138.73 | 5.50 | 3.02 | 2.68 | ||||

\(\alpha\) to enter =0.15, \(\alpha\) to remove 0.15

yielded the final stepwise model with y as the response and \(x_1\) and \(x_2\) as predictors.

The best subsets regression procedure:

Best Subsets Regressions: y versus x1, x2, x3, x4

Response is y

| Vars | R-Sq | R-Sq (adj) |

R-Sq (pred) |

Mallows Cp |

S | x | x | x | x |

|---|---|---|---|---|---|---|---|---|---|

| 1 | 2 | 3 | 4 | ||||||

| 1 | 67.5 | 64.5 | 56.0 | 138.7 | 8.9639 | X | |||

| 1 | 66.6 | 63.6 | 55.7 | 142.5 | 9.0771 | X | |||

| 2 | 97.9 | 97.4 | 96.5 | 2.7 | 2.4063 | X | X | ||

| 2 | 97.2 | 96.7 | 95.5 | 5.5 | 2.7343 | X | X | ||

| 3 | 98.2 | 97.6 | 96.9 | 3.0 | 2.3087 | X | X | X | |

| 3 | 98.2 | 97.6 | 96.7 | 3.0 | 2.3121 | X | X | X | |

| 4 | 98.2 | 97.4 | 95.9 | 5.0 | 2.4460 | X | X | X | X |

yields various models depending on the different criteria:

- Based on the \(R^{2} \text{-value}\) criterion, the "best" model is the model with the two predictors \(x_1\) and \(x_2\).

- Based on the adjusted \(R^{2} \text{-value}\) and MSE criteria, the "best" model is the model with the three predictors \(x_1\), \(x_2\), and \(x_4\).

- Based on the \(C_p\) criterion, there are three possible "best" models — the model containing \(x_1\) and \(x_2\); the model containing \(x_1\), \(x_2\) and \(x_3\); and the model containing \(x_1\), \(x_2\) and \(x_4\).

So, which model should we "go with"? That's where the final step — the refining step — comes into play. In the refining step, we evaluate each of the models identified by the best subsets and stepwise procedures to see if there is a reason to select one of the models over the other. This step may also involve adding interaction or quadratic terms, as well as transforming the response and/or predictors. And, certainly, when selecting a final model, don't forget why you are performing the research, to begin with — the reason may choose the model obviously.

Well, let's evaluate the three remaining candidate models. We don't have to go very far with the model containing the predictors \(x_1\), \(x_2\), and \(x_4\) :

Analysis of Variance: y versus x1, x2, x4

| Source | DF | Adj SS | Adj MS | F-Value | P-Value |

|---|---|---|---|---|---|

| Regression | 3 | 2667.79 | 889.263 | 166.83 | 0.000 |

| x1 | 1 | 820.91 | 820.907 | 154.01 | 0.000 |

| x2 | 1 | 26.79 | 26.789 | 5.03 | 0.052 |

| x4 | 1 | 9.93 | 9.932 | 1.86 | 0.205 |

| Error | 9 | 47.97 | 5.330 | ||

| Total | 12 | 2715.76 |

Model Summary

| S | R-sq | R-sq(adj) | R-sq(pred) |

|---|---|---|---|

| 2.30874 | 98.23% | 97.64% | 96.86% |

Coefficients

| Term | Coef | SE Coef | T-Value | P-Value | VIF |

|---|---|---|---|---|---|

| Constant | 71.6 | 14.1 | 5.07 | 0.001 | |

| x1 | 1.452 | 0.117 | 12.41 | 0.000 | 1.07 |

| x2 | 0.416 | 0.186 | 2.24 | 0.052 | 18.78 |

| x4 | -0.237 | 0.173 | -1.37 | 0.205 | 18.94 |

Regression Equaation

y = 71.6 + 1.452 x1 + 0.416 x2 - 0.237 x4

We'll learn more about multicollinearity in Lesson 12, but for now, all we need to know is that the variance inflation factors of 18.78 and 18.94 for \(x_2\) and \(x_4\) indicate that the model exhibits substantial multicollinearity. You may recall that the predictors \(x_2\) and \(x_4\) are strongly negatively correlated — indeed, r = -0.973.

While not perfect, the variance inflation factors for the model containing the predictors \(x_1\), \(x_2\), and \(x_3\):

Analysis of Variance: y versus x1, x2, x3

| Source | DF | Adj SS | Adj MS | F-Value | P-Value |

|---|---|---|---|---|---|

| Regression | 3 | 2667.65 | 889.22 | 166.34 | 0.000 |

| x1 | 1 | 367.33 | 367.33 | 68.72 | 0.000 |

| x2 | 1 | 1178.96 | 1178.96 | 220.55 | 0.000 |

| x3 | 1 | 9.79 | 9.79 | 1.83 | 0.209 |

| Error | 9 | 48.11 | 5.35 | ||

| Total | 12 | 2715.76 |

Model Summary

| S | R-sq | R-sq(adj) | R-sq(pred) |

|---|---|---|---|

| 2.31206 | 98.23% | 97.64% | 96.69% |

Coefficients

| Term | Coef | SE Coef | T-Value | P-Value | VIF |

|---|---|---|---|---|---|

| Constant | 48.19 | 3.91 | 12.32 | 0.000 | |

| x1 |

1.696 |

0.205 | 8.29 | 0.000 | 2.25 |

| x2 | 0.6569 | 0.0442 | 14.85 | 0.000 | 1.06 |

| x3 | 0.250 | 0.185 | 1.35 | 0.209 | 3.14 |

Regression Equation

y = 48.19 + 1.696 x1 + 0.6569 x2 + 0.250 x3

are much better (smaller) than the previous variance inflation factors. But, unless there is a good scientific reason to go with this larger model, it probably makes more sense to go with the smaller, simpler model containing just the two predictors \(x_1\) and \(x_2\):

Analysis of Variance: y versus x1, x2

| Source | DF | Adj SS | Adj MS | F-Value | P-Value |

|---|---|---|---|---|---|

| Regression | 2 | 2657.86 | 1328.93 | 229.50 | 0.000 |

| x1 | 1 | 848.43 | 848.43 | 146.52 | 0.000 |

| x2 | 1 | 1207.78 | 1207.78 | 208.58 | 0.000 |

| Error | 10 | 57.90 | 5.79 | ||

| Total | 12 | 2715.76 |

Model Summary

| S | R-sq | R-sq(adj) | R-sq(pred) |

|---|---|---|---|

| 2.40634 | 97.87% | 97.44% | 96.54% |

Coefficients

| Term | Coef | SE Coef | T-Value | P-Value | VIF |

|---|---|---|---|---|---|

| Constant | 52.58 | 2.29 | 23.00 | 0.000 | |

| x1 | 1.468 | 0.121 | 12.10 | 0.000 | 1.06 |

| x2 | 0.6623 | 0.0459 | 14.44 | 0.000 | 1.06 |

Regression Equation

y = 52.58 + 1.468 x1 + 0.6623 x2

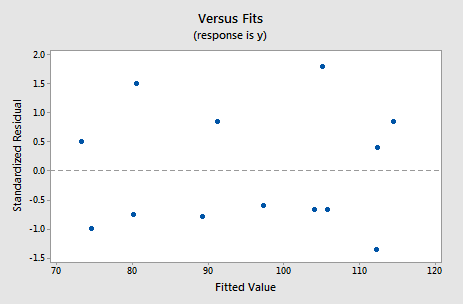

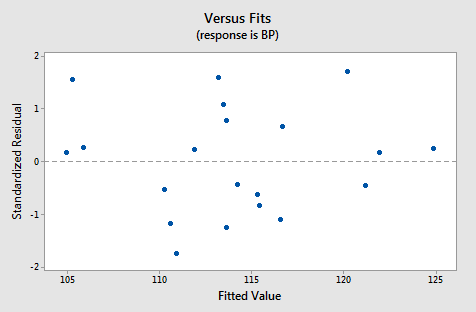

For this model, the variance inflation factors are quite satisfactory (both 1.06), the adjusted \(R^{2} \text{-value}\) (97.44%) is large, and the residual analysis yields no concerns. That is, the residuals versus fits plot:

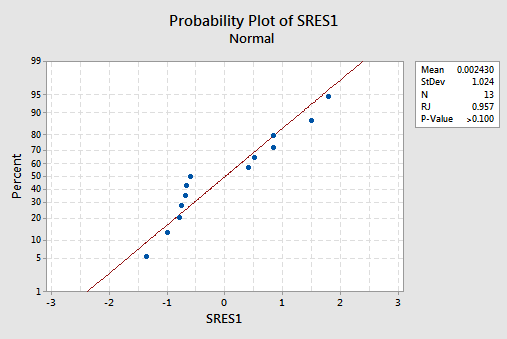

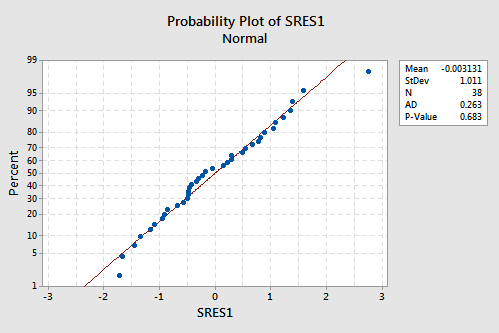

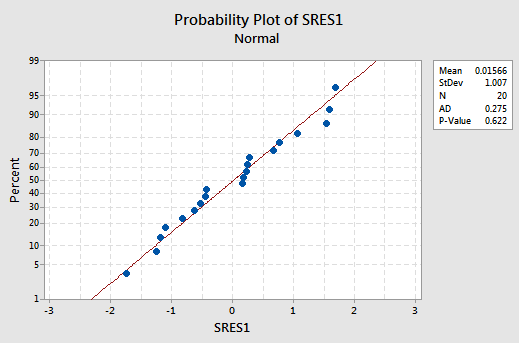

suggests that the relationship is indeed linear and that the variances of the error terms are constant. Furthermore, the normal probability plot:

suggests that the error terms are normally distributed. The regression model with y as the response and \(x_1\) and \(x_2\) as the predictors has been evaluated fully and appears to be ready to answer the researcher's questions.

Example 10-5: IQ Size

Let's return to the brain size and body size study, in which the researchers were interested in determining whether or not a person's brain size and body size are predictive of his or her intelligence. The researchers (Willerman, et al, 1991) collected the following IQ Size data on a sample of n = 38 college students:

- Response (y): Performance IQ scores (PIQ) from the revised Wechsler Adult Intelligence Scale. This variable served as the investigator's measure of the individual's intelligence.

- Potential predictor (\(x_1\)): Brain size based on the count obtained from MRI scans (given as count/10,000).

- Potential predictor (\(x_2\)): Height in inches.

- Potential predictor (\(x_3\)): Weight in pounds.