The basic problem that we’re considering is the construction of a lagged regression in which we predict a y-variable at the present time using lags of an x-variable (including lag 0) and lags of the y-variable. In Week 8, we introduced the CCF (cross-correlation function) as an aid to the identification of the model. One difficulty is that the CCF is affected by the time series structure of the x-variable and any “in common” trends the x and y series may have over time.

One strategy for dealing with this difficulty is called “pre-whitening.” The steps are:

1. Determine a time series model for the x-variable and store the residuals from this model.

2. Filter the y-variable series using the x-variable model (using the estimated coefficients from Step 1). In this step we find differences between observed y-values and “estimated” y-values based on the x-variable model.

3. Examine the CCF between the residuals from Step 1 and the filtered y-values from Step 2. This CCF can be used to identify the possible terms for a lagged regression.

This strategy stems from the fact that when the input series (say, \(w_{t}\)) is “white noise” the patterns of the CCF between \(w_{t}\) and \(z_{t}\), a linear combination of lags of the \(w_{t}\), are easily identifiable (and easily derived). Step 1 above creates a “white noise” series as the input. Conceptually, Step 2 above arises from a starting point that y-series = linear combination of x-series. If we “transform” the x-series to white noise (residuals from its ARIMA model) then we should apply the transformation to both sides of the equation to preserve an equality of sorts.
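The white-noise case can be checked with a small simulation. The following is a minimal sketch, not part of the original example: the seed, the sample size, and the coefficients 2 and 1 at lags 2 and 5 are arbitrary assumptions chosen for illustration. Note that in R, `ccf(x, y)` at lag h estimates the correlation between x at time t+h and y at time t, so lags of the input that lead the output appear at negative h.

```r
set.seed(1)                          # arbitrary seed, for reproducibility only
n <- 500
w <- rnorm(n)                        # white-noise input series
# z_t = 2*w_{t-2} + 1*w_{t-5}; with sides = 1 the filter uses current and past values only
z <- stats::filter(w, filter = c(0, 0, 2, 0, 0, 1), sides = 1)
keep <- !is.na(z)                    # the first 5 filtered values are NA
cc <- ccf(w[keep], as.numeric(z[keep]), lag.max = 10, plot = FALSE)
ccf_at <- function(k) cc$acf[cc$lag == k]   # sample CCF at lag k
round(c(lag_m2 = ccf_at(-2), lag_m5 = ccf_at(-5), lag_0 = ccf_at(0)), 2)
```

The sample CCF should be clearly non-zero only at the two lags that enter z, which is the property pre-whitening tries to recover for a non-white input.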

It’s not absolutely crucial that we find the model for x exactly. We just want to get close to the “white noise” input situation. For simplicity, some analysts only consider AR models (with differencing possible) for x because it’s much easier to filter the y-variable with AR coefficients than with MA coefficients.

Pre-whitening is used only to help us identify which lags of x may predict y. After identifying a possible model from the CCF, we work with the original variables to estimate the lagged regression.

Alternative strategies to pre-whitening include:

- Looking at the CCF for the original variables – this sometimes works

- De-trending the series using either first differences or linear regressions with time as a predictor
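As a rough illustration of the de-trending alternatives, the sketch below builds two series that share a linear trend but are otherwise unrelated, then compares the lag-0 correlation before and after de-trending. The series, the slope 0.05, and the seed are assumptions made up for this sketch; the lag-0 correlation is used only as a compact stand-in for the full CCF.

```r
set.seed(2)                          # arbitrary seed, for reproducibility only
n <- 200
tt <- 1:n
x <- 0.05 * tt + rnorm(n)            # linear trend plus noise
y <- 0.05 * tt + rnorm(n)            # unrelated to x apart from the common trend

# Option 1: first differences
dx <- diff(x)
dy <- diff(y)

# Option 2: residuals from linear regressions with time as the predictor
rx <- resid(lm(x ~ tt))
ry <- resid(lm(y ~ tt))

round(c(raw = cor(x, y), differenced = cor(dx, dy), detrended = cor(rx, ry)), 2)
```

The raw correlation is large only because of the common trend; after either form of de-trending it should be close to 0.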

Example 9-1

The data for this example were simulated. The x-series was simulated as an ARIMA(1,1,0) process with “true” \(\phi_1 = 0.7\). The error variance was 1. The sample size was n = 200. The y-series was determined as \(y_{t} = 15 + 0.8x_{t-3} + 1.5x_{t-4}\). To be more realistic, we should add random error into y, but we wanted to ensure a clear result so we skipped that step. With the sample data we should be able to identify that lags 3 and 4 of x are predictors of y.

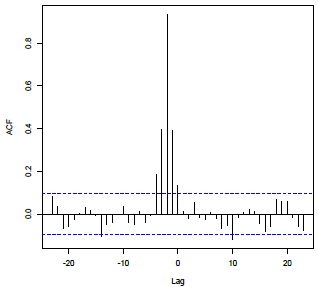

Following is the CCF of the simulated x and y series. We aren’t able to learn anything from it.

- Step 1

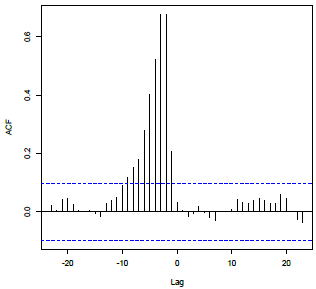

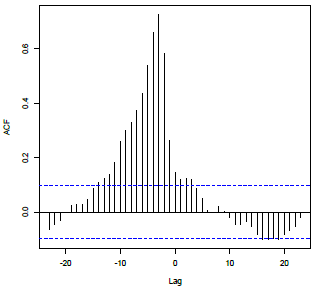

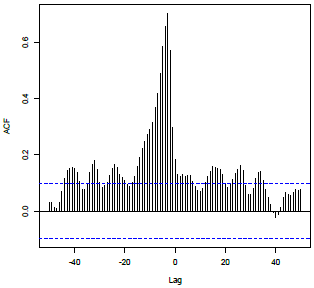

We determine a (possibly approximate) ARIMA model for the x-series. The usual steps would be used. In this case, the ACF of the x-series did not taper to 0 suggesting a non-stationary series. Thus a first difference should be tried. The ACF and PACF of the first differences showed the prototypical pattern of an AR(1), so an ARIMA(1,1,0) should be tried.

For the simulated data, the estimated phi-coefficient for the ARIMA(1,1,0) was \(\widehat{\phi}_1 = 0.7445\).

The estimated model can be written as \( \left(1 - 0.7445B \right) \left(1 - B \right)\left(x_t - \mu \right) = w_t\).

In this form, we’re applying the AR(1) polynomial and a first difference to the x-variable.

- Step 2

Filter the y series using the model for x.

We don’t need to worry about the mean because correlations aren’t affected by the level of the mean. Thus, we’ll calculate the filtered y as

\( \left(1 - 0.7445B \right) \left(1 - B \right) y_{t} = \left(1 - 1.7445B + 0.7445B^{2} \right)y_{t}\)

In the equation(s) just given, the AR(1) polynomial for x and the first differencing are applied to the y-series. An R command that carrys out this operation is:

newpwy = filter(y, filter = c(1,-1.7445,.7445), sides =1) - Step 3

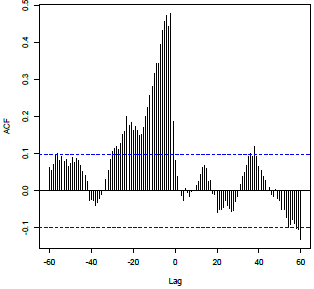

For the simulated data, the following plot is the CCF for the pre-whitened x and the filtered y. The pre-whitened x = residuals from ARIMA(1,1,0) for x. We see clear spikes at lags 3 and 4. Thus \(x_{t-3}\) and \(x_{t-4}\) should be tried as predictors of \(y_{t}\) .
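Once lags 3 and 4 have been identified, the lagged regression is estimated with the original (not pre-whitened) variables. The following is a minimal sketch under the assumptions of this example (no lags of y, no random error in y); the seed and the names `dat`, `x3`, `x4`, and `fit` are illustrative choices, not part of the original example.

```r
set.seed(3)                          # arbitrary seed, for reproducibility only
x <- arima.sim(list(order = c(1, 1, 0), ar = 0.7), n = 200)

# Align x with its lags 3 and 4; rows without complete lags are dropped
z <- ts.intersect(x, stats::lag(x, -3), stats::lag(x, -4))
y <- 15 + 0.8 * z[, 2] + 1.5 * z[, 3]

# Ordinary regression of y on the identified lags of x
dat <- data.frame(y = as.numeric(y),
                  x3 = as.numeric(z[, 2]),
                  x4 = as.numeric(z[, 3]))
fit <- lm(y ~ x3 + x4, data = dat)
round(coef(fit), 4)
```

Because y was simulated without random error, the fit here is exact; with real data the residuals from this regression would also need to be checked for autocorrelation.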

R Code for Example 9-1

Here’s the R Code for the example. The code includes all steps, including the simulation of the series, and the estimation of the lagged regression after identification of the model has been done. The filter command would have to be modified in a new simulation because the AR coefficient would be different for a new sample.

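library(astsa) #Loads the astsa package, which provides sarima

x = arima.sim(list(order = c(1,1,0), ar = 0.7), n = 200) #Generates random data from ARIMA(1,1,0). This will generate a new data set for each call.

z = ts.intersect(x, lag(x,-3), lag(x,-4)) #Creates a matrix z with columns, xt, xt-3, and xt-4

y = 15+0.8*z[,2]+1.5*z[,3] #Creates y from lags 3 and 4 of randomly generated x

ccf(z[,1],y,na.action = na.omit) #CCF between x and y

acf(x) #ACF of x; a slow decay suggests a non-stationary series

diff1x=diff(x,1) #First differences of x

acf(diff1x, na.action = na.omit) #ACF of the first differences

pacf(diff1x, na.action = na.omit) #PACF of the first differences

ar1model = sarima(x,1,1,0) #Fits an ARIMA(1,1,0) model to x

ar1model #Prints the fit, including the estimated AR coefficient

pwx=resid(ar1model$fit) #Pre-whitened x = residuals from the ARIMA(1,1,0) model

newpwy = filter(y, filter = c(1,-1.7445,.7445), sides =1) #Filters y with (1 - 0.7445B)(1 - B); update the coefficients for a new sample

ccf (pwx,newpwy,na.action=na.omit) #CCF between pre-whitened x and filtered y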

Note!

na.action = na.omit ignores the missing values in the series passed to the function, such as those at the start of the filtered y. Print newpwy to see the missing values listed as NA.

If you use arima rather than sarima, you must use: pwx = ar1model$residuals to access the residuals.
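Because the estimated AR coefficient changes with every new simulation, the filter coefficients can be built from the fitted model instead of being typed in. The following is a minimal sketch of that idea using `arima` from base R rather than `sarima`; the seed and the names `fit`, `phi`, and `pw_coefs` are assumptions made for this sketch.

```r
set.seed(4)                          # arbitrary seed, for reproducibility only
x <- arima.sim(list(order = c(1, 1, 0), ar = 0.7), n = 200)
z <- ts.intersect(x, stats::lag(x, -3), stats::lag(x, -4))
y <- 15 + 0.8 * z[, 2] + 1.5 * z[, 3]

fit <- arima(x, order = c(1, 1, 0))  # ARIMA(1,1,0) fit with stats::arima
phi <- unname(coef(fit)["ar1"])      # estimated AR(1) coefficient

# (1 - phi*B)(1 - B) = 1 - (1 + phi)*B + phi*B^2
pw_coefs <- c(1, -(1 + phi), phi)

pwx <- residuals(fit)                # pre-whitened x
newpwy <- stats::filter(y, filter = pw_coefs, sides = 1)   # filtered y

cc <- ccf(pwx, newpwy, na.action = na.omit, lag.max = 10, plot = FALSE)
ccf_at <- function(k) cc$acf[cc$lag == k]   # sample CCF at lag k
round(c(lag_m3 = ccf_at(-3), lag_m4 = ccf_at(-4)), 2)
```

In R's convention for `ccf(pwx, newpwy)`, the spikes for \(x_{t-3}\) and \(x_{t-4}\) appear at lags -3 and -4.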

CCF Patterns

In general, we’re trying to identify lags of x and y that might predict y. The following table describes some typical situations with a peak at lag d. Notice that a smooth tapering pattern in the CCF indicates that we should use \(y_{t-1}\) and perhaps even \(y_{t-2}\) along with lags of x.

| Pattern in CCF | Regression Terms in Model |
|---|---|
| Non-zero values at lag d and perhaps other lags beyond d, with no particular pattern; correlations near 0 at other lags. | X: Lag d, \(x_{t-d}\), and perhaps additional lags of x. Y: No lags of y. |
| Exponential decay of correlations beginning at lag d; correlations near 0 at lags before lag d. | X: Lag d, \(x_{t-d}\). Y: Lag 1, \(y_{t-1}\). |
| Non-zero correlations with no particular pattern beginning at lag d, but then exponential decay begins at last used lag of x; correlations near 0 before lag d. | X: Lag d, \(x_{t-d}\), and perhaps additional lags of x. Y: Lag 1, \(y_{t-1}\). |
| Sinusoidal pattern beginning at lag d, with decay toward 0; correlations near 0 before lag d. | X: Lag d, \(x_{t-d}\). Y: Lags 1 and 2, \(y_{t-1}\), \(y_{t-2}\). |
| Non-zero correlations with no particular pattern beginning at lag d, but then sinusoidal pattern beginning at last used lag of x, with decay toward 0; correlations near 0 before lag d. | X: Lag d, \(x_{t-d}\), and perhaps additional lags of x. Y: Lags 1 and 2, \(y_{t-1}\), \(y_{t-2}\). |
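The exponential-decay pattern (x at lag d together with \(y_{t-1}\)) can be reproduced with a short simulation. This is a minimal sketch with made-up values: white-noise x, d = 3, a coefficient of 0.6 on \(y_{t-1}\), and an arbitrary seed are all assumptions for illustration. As before, in R's `ccf(x, y)` the lags at which x leads y are the negative ones.

```r
set.seed(5)                          # arbitrary seed, for reproducibility only
n <- 2000
x <- rnorm(n)                        # white-noise input, so no pre-whitening is needed
x_lag3 <- c(rep(0, 3), x[1:(n - 3)]) # x_{t-3}, padded with zeros at the start

# y_t = 0.6 * y_{t-1} + x_{t-3}, computed with a recursive filter
y <- stats::filter(x_lag3, filter = 0.6, method = "recursive")

cc <- ccf(x, as.numeric(y), lag.max = 8, plot = FALSE)
ccf_at <- function(k) cc$acf[cc$lag == k]   # sample CCF at lag k
round(sapply(0:-6, ccf_at), 2)       # lags 0, -1, ..., -6
```

The correlations should be near 0 for lags 0 to -2, peak at lag -3, and then decay roughly geometrically, which is the pattern in the second row of the table.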

- Examine the CCF for the original data

- If it is unclear, determine a time series model for x, and find the residuals

- Pre-whiten

- Examine the CCF for the pre-whitened x and the filtered y

Transfer Functions

The term “transfer function” applies to models in which we predict y from past lags of both y and x (including possibly lag 0), and we also model x and the errors. For prediction of the future past the end of the series, we might first use the model for x to forecast x. Then we would use the forecasted x when we forecast future y.
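The two-stage forecasting idea can be sketched as follows. This is a simplified illustration under the assumptions of Example 9-1 (y depends only on lags 3 and 4 of x, with no error model for y), not a full transfer function fit; the seed, the horizon `h`, and the names `reg`, `xfit`, `x_ext`, and `future` are hypothetical choices for this sketch.

```r
set.seed(6)                          # arbitrary seed, for reproducibility only
x <- arima.sim(list(order = c(1, 1, 0), ar = 0.7), n = 200)
z <- ts.intersect(x, stats::lag(x, -3), stats::lag(x, -4))
dat <- data.frame(y = as.numeric(15 + 0.8 * z[, 2] + 1.5 * z[, 3]),
                  x3 = as.numeric(z[, 2]),
                  x4 = as.numeric(z[, 3]))
reg <- lm(y ~ x3 + x4, data = dat)   # lagged regression for y

h <- 6                               # forecast horizon
xfit <- arima(x, order = c(1, 1, 0)) # model for x
xfc <- predict(xfit, n.ahead = h)$pred          # forecasts of x past the end of the series
x_ext <- c(as.numeric(x), as.numeric(xfc))      # observed x followed by forecasted x

# For each future time N + j, pick x_{t-3} and x_{t-4} from observed or forecasted x
N <- length(x)
future <- data.frame(x3 = x_ext[(N + 1:h) - 3],
                     x4 = x_ext[(N + 1:h) - 4])
yfc <- predict(reg, newdata = future)
round(yfc, 2)
```

With predictors at lags 3 and 4, the first three forecasts of y use only observed x; forecasted x values enter from the fourth step onward.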